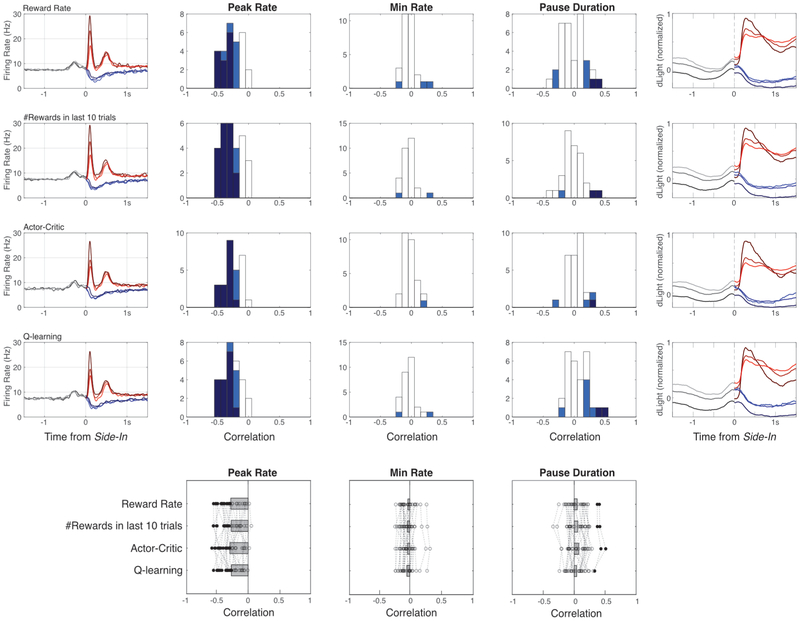

Extended Data Figure 8. Different methods for calculating reward expectation produce similar results.

Left column: average firing rate of dopamine cells around Side-In, broken down by terciles of reward expectation. Reward expectation is based on recent reward rate (top; same as Fig. 5a), the number of rewards in the previous 10 trials, the state value (V) of an actor-critic model, or the state value (Qleft + Qright) of a Q-learning model. Both models were trial-based rather than evolving continuously in time. The actor-critic model estimated the overall probability of receiving a reward on each trial, V, using the update rule $V' = V + \alpha \cdot \mathrm{RPE}$, where $\mathrm{RPE} = r - V$ and $r$ is the actual reward (1 or 0). The Q-learning model kept separate estimates of the probability of receiving a reward for left and right choices (Qleft, Qright) and updated only the Q of the chosen action, using $Q' = Q + \alpha \cdot \mathrm{RPE}$, where $\mathrm{RPE} = r - Q$. For each session, the learning rate α was set to the value giving the best fit to latencies, using V (actor-critic) or Qleft + Qright (Q-learning) respectively. Remaining columns: correlations between reward expectation and dopamine cell firing after Side-In, measured as peak firing rate (top; within 250 ms after rewarded Side-In), minimum firing rate (middle; within 2 s after unrewarded Side-In), or pause duration (bottom; maximum inter-spike interval within 2 s after unrewarded Side-In). In all histograms, light blue indicates cells with significant correlations (p < 0.01) before correction for multiple comparisons, and dark blue indicates cells that remained significant after correction. Positive RPE coding is strong and consistent; negative RPE coding is less so.
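The two trial-based models can be sketched as below. This is a minimal illustration of the caption's update rules, not the authors' code. It assumes that the expectation used for each trial is the value held *before* that trial's outcome, and that values start at 0.5 (the initial value `v0`/`q0` is a hypothetical choice the caption does not state). The per-session fit of α to latencies is not shown, because the fitting criterion is not described here. The tercile split is a simple rank-based assumption.

```python
from typing import List, Sequence


def actor_critic_values(rewards: Sequence[int], alpha: float, v0: float = 0.5) -> List[float]:
    """Return V on each trial, recorded before that trial's update.

    Update rule: V' = V + alpha * RPE, with RPE = reward - V (reward is 1 or 0).
    v0 is a hypothetical starting value (assumption).
    """
    v = v0
    values = []
    for r in rewards:
        values.append(v)      # expectation going into this trial
        rpe = r - v           # reward prediction error
        v = v + alpha * rpe
    return values


def q_learning_values(choices: Sequence[str], rewards: Sequence[int],
                      alpha: float, q0: float = 0.5) -> List[float]:
    """Return Qleft + Qright on each trial, recorded before that trial's update.

    Only the chosen action's Q is updated: Q' = Q + alpha * (reward - Q).
    choices are "left"/"right"; q0 is a hypothetical starting value (assumption).
    """
    q = {"left": q0, "right": q0}
    totals = []
    for c, r in zip(choices, rewards):
        totals.append(q["left"] + q["right"])
        rpe = r - q[c]        # RPE relative to the chosen action only
        q[c] = q[c] + alpha * rpe
    return totals


def terciles(expectation: Sequence[float]) -> List[int]:
    """Label each trial 0 (low), 1 (middle) or 2 (high) by rank of reward expectation."""
    order = sorted(range(len(expectation)), key=lambda i: expectation[i])
    n = len(order)
    labels = [0] * n
    for rank, i in enumerate(order):
        labels[i] = rank * 3 // n  # ranks split into three roughly equal groups
    return labels
```

For example, with rewards `[1, 0, 1]` and α = 0.5, `actor_critic_values` gives `[0.5, 0.75, 0.375]`: V rises after the reward and falls after the omission.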
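The pause-duration measure (maximum inter-spike interval within 2 s after unrewarded Side-In) and a per-cell correlation with reward expectation can be sketched as follows. Several details here are assumptions rather than the authors' method. Only intervals between consecutive spikes that both fall inside the window are counted, and a window with fewer than two spikes yields NaN. The correlation is a plain Pearson r. The caption does not name the correlation statistic or how p-values were computed, so no significance test is shown.

```python
import math
from typing import Sequence


def pause_duration(spike_times: Sequence[float], event_time: float,
                   window: float = 2.0) -> float:
    """Longest inter-spike interval (s) among spikes within `window` s after the event.

    Assumption: only spike-to-spike intervals inside the window count;
    returns NaN if fewer than two spikes fall in the window.
    """
    in_window = sorted(t for t in spike_times if event_time <= t <= event_time + window)
    if len(in_window) < 2:
        return math.nan
    return max(b - a for a, b in zip(in_window, in_window[1:]))


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between per-trial expectation x and a firing measure y."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

For example, spikes at 0.25, 0.5, 1.5, 1.75 and 2.5 s after an event at 0 s give a pause duration of 1.0 s; the spike at 2.5 s lies outside the 2 s window.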