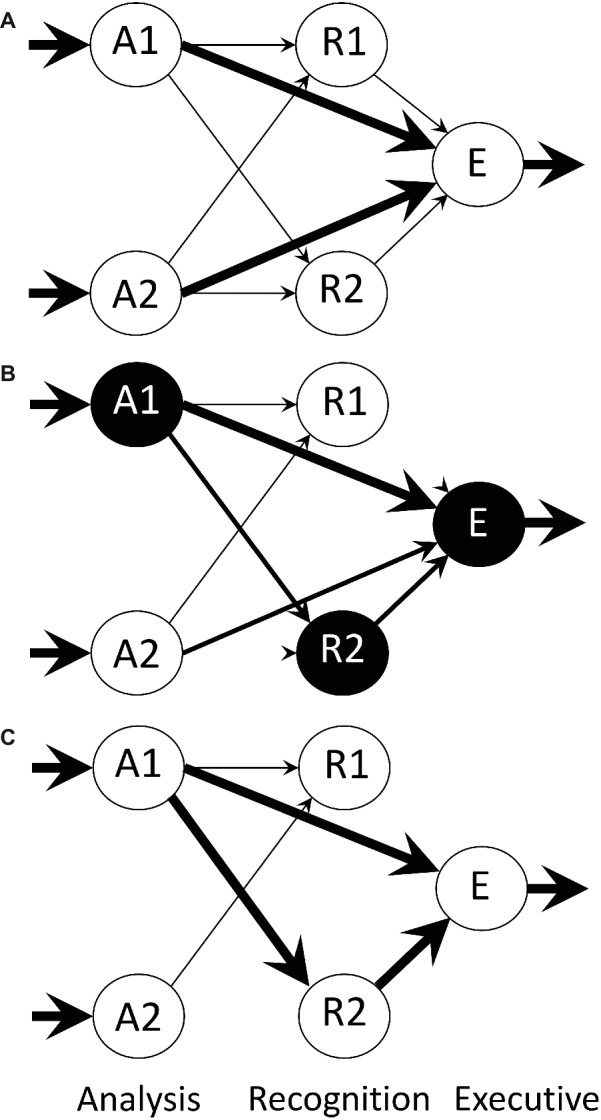

Figure 3.

Architecture of the neural net model of Bateson and Horn (1994), from an illustration by Bateson (2015). The model comprises three layers: A (analysis), R (recognition) and E (executive). The A and R layers contain a number of modules, only two of which are shown here. Each A module relays processed sensory input bearing information about the training object, such as its size, shape or color. The arrows indicate the flow of information, and the thickness of each arrow represents the connection strength of the Hebbian synapses at its head. Within each of the A and R layers, there is reciprocal inhibition between modules via non-modifiable connections (not shown). There are also direct Hebbian links from the analysis layer to the executive layer, bypassing the R layer, which permit a predisposition to be expressed and allow simple conditioning outside the R layer. Modules are spontaneously and variably active, and mutual inhibition within a layer permits the representation of an imprinting stimulus to be encoded by strengthening the more active pathways. The activity of these pathways weakens alternative, inactive pathways. (A) Initial state of the network before training. (B) Network during training with an imprinting stimulus that activates analysis module A1. Recognition module R2 happens to be highly active when input from A1 first arrives and consequently ‘captures’ that input while suppressing other recognition modules. (C) Network after training is complete.
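
The capture process in panels (A) to (C) can be illustrated with a toy simulation. This is only a minimal sketch under stated assumptions, not the equations of Bateson and Horn (1994): reciprocal inhibition within the R layer is approximated by a winner-take-all rule, spontaneous activity by small uniform noise, and the Hebbian rule by a simple bounded update. The direct A-to-E links are omitted. The function name `train_imprinting` and the parameters `rate` and `noise` are hypothetical.

```python
import random

def train_imprinting(n_a=2, n_r=2, steps=50, rate=0.1, noise=0.05, seed=0):
    """Toy A -> R -> E network; returns (w_ar, w_re, captured R index)."""
    rng = random.Random(seed)
    # Panel (A): all modifiable weights start equal.
    w_ar = [[0.5] * n_a for _ in range(n_r)]  # w_ar[r][a]: A -> R weights
    w_re = [0.5] * n_r                        # R -> E weights
    # The imprinting stimulus activates analysis module A1 only (index 0).
    a = [1.0] + [0.0] * (n_a - 1)
    captured = None
    for _ in range(steps):
        # R activity = weighted input from A plus spontaneous, variable activity.
        r = [sum(w * x for w, x in zip(w_ar[j], a)) + noise * rng.random()
             for j in range(n_r)]
        # Assumed stand-in for reciprocal inhibition: the most active module wins.
        winner = max(range(n_r), key=r.__getitem__)
        if captured is None:
            captured = winner  # panel (B): this module 'captures' the input
        for j in range(n_r):
            for i in range(n_a):
                if a[i] > 0:  # only pathways from active A modules change
                    if j == winner:
                        w_ar[j][i] += rate * (1.0 - w_ar[j][i])  # strengthen
                    else:
                        w_ar[j][i] -= rate * w_ar[j][i]          # weaken
        # The winning R module also strengthens its link to the executive layer.
        w_re[winner] += rate * (1.0 - w_re[winner])
    return w_ar, w_re, captured  # panel (C): state after training

if __name__ == "__main__":
    w_ar, w_re, captured = train_imprinting()
    print("capturing R module index:", captured)
    print("A -> R weights:", w_ar)
    print("R -> E weights:", w_re)
```

In this sketch, whichever R module happens to be more active on the first presentation wins, and its advantage is then self-reinforcing: its connection from A1 grows toward 1 while the competing connection decays toward 0. Connections from the inactive module A2 are left unchanged.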