Abstract

Objectives: In the United States, nonadherence to seasonal influenza vaccination guidelines for children and adolescents is common and results in unnecessary morbidity and mortality. We conducted a quality improvement project to improve vaccination rates and test the effects of 2 interventions on adherence to vaccination guidelines.

Methods: We conducted a cluster randomized control trial with 11 primary care practices (PRACTICE) that provided care for 11 293 individual children and adolescents in a children’s health care system from September 2015 through April 2016. Practice sites (with their clinicians) were randomly assigned to 4 arms (no intervention [Control], computerized clinical decision support system [CCDSS], web-based training [WBT], or CCDSS and WBT [BOTH]).

Results: During the study, 55.8% of children and adolescents received influenza vaccination, which improved modestly during the study period compared with the prior influenza season (P = .009). Actual adherence to recommendations, including dosing, timeliness, and avoidance of missed opportunities, was 46.4% of patients cared for by the PRACTICE. The WBT was most effective in promoting adherence with vaccination recommendations (estimated average odds ratio = 1.26 for the intervention period compared with the preintervention period, P < .05). Over the influenza season, there was a significantly increasing trend in odds ratio in the WBT arm (P < .05). Encouraging process improvements and providing longitudinal feedback on monthly rate of vaccination sparked some practice changes but had limited impact on outcomes.

Conclusions: Web-based training at the start of influenza season with monthly reports of adherence can improve correct dose and timing of influenza vaccination with modest impact on overall vaccination rate.

Keywords: influenza vaccination, practice variation, computerized clinical decision support, web-based training, quasi-experimental design

Introduction

The Centers for Disease Control and Prevention and the American Academy of Pediatrics recommend annual seasonal influenza immunization with specific guidance on selection of vaccine by age, single- or 2-dose regimens, extent of underlying egg allergy (applicable during the 2015-2016 influenza season), and other particulars of the dosing algorithm. Although most influenza vaccination recommendations remain consistent each year, with the development of new products and evidence, some are revised.1-3 Optimally, vaccination occurs prior to the onset of influenza disease in a community and continues as long as the influenza viruses are circulating. Vaccination significantly reduces morbidity and mortality,4,5 yet undervaccination is common. Overall in the United States, less than one-half of all people older than 6 months are vaccinated; however, 59.3% (±0.8%) of individuals between 6 months and 17 years old were vaccinated during the 2015-2016 season.6 Influenza vaccination coverage also varies by state; for example, during the 2015-2016 season for youth, it was 47.4% in Florida and 68.7% in Delaware.7

After a gap of one-half year between influenza seasons, health care providers need to reestablish vaccination routines and incorporate new standards. However, with lack of exposure, clinician knowledge is known to decline over time,8 even though clinicians tend to overestimate their own proficiency.9 Several strategies are deployed to promote clinicians’ clinical knowledge. Both human-led didactic educational sessions and computer-based trainings deliver fixed learning content.10 Furthermore, computerized clinical decision support systems hold the promise to help practitioners operationalize treatment guidelines11 with key features described in the Clinical Decision Support Five Rights model.12 Delivery of educational content during “teachable moments” when the learning is most immediately relevant to the clinician has the potential to promote retention of medical knowledge. Measuring and defining the effectiveness of these educational interventions is difficult, although knowledge and self-efficacy appear to be improved.13,14 We proposed that interventions and regular feedback during the influenza season would improve rates of influenza vaccination. Furthermore, we compared the effects of 2 interventions (web-based training and a computerized clinical decision support system) on influenza vaccination and adherence to dosing algorithm recommendations.

Methods

This study was reviewed and approved by the Nemours Institutional Review Board and the participating clinicians gave their written informed consent.

Study Design

A cluster randomized control trial design was used in the evaluation of this quality improvement study implemented September 1, 2015, through April 30, 2016, in the primary care practices of a multistate children’s health system. After pediatric primary care clinicians (physicians and advanced practice nurses) enrolled in the study, their primary care practice (PRACTICE) sites were randomized to 1 of 4 arms: (1) no intervention (Control), (2) computerized clinical decision support system (CCDSS), (3) web-based training (WBT), or (4) computerized clinical decision support system and web-based training (BOTH).

A prompt in the electronic health record alerted the primary care clinician to provide an influenza vaccination when a patient 6 months or older who had no record of seasonal influenza vaccination was seen during an office visit at a PRACTICE in the CCDSS arm. Presence of prior influenza vaccination in the season, documentation of vaccination at a non–health system location, or prior documentation of refusal to allow administration of the vaccine would prevent the triggering of the prompt. The CCDSS included a best-practice alert consistent with the Five Rights Model: (1) “what” (information): reasoning for seasonal influenza vaccination requirement; (2) “who” (recipient): patient; (3) “how” (intervention type): based on age and history, a dosage schedule was suggested; (4) “where” (information delivery channel): links facilitated order entry, documentation of parent refusal, or documentation that vaccine was administered elsewhere; and (5) “when” (in the workflow): the CCDSS was launched when study clinicians opened a patient’s office encounter documentation if eligible for influenza vaccine. At subsequent office visits, an electronic prompt alerted the clinician to patients requiring a second dose. A drug-allergy interaction alert would also fire for patients with an egg allergy.
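The trigger conditions for the first-dose prompt can be summarized as a simple eligibility check. The following is a minimal sketch under stated assumptions, not the health system's actual rule logic: the function name `should_prompt` and all parameter names are hypothetical, and age is assumed to be available in whole months. The second-dose prompt and the egg-allergy alert are not modeled.

```python
def should_prompt(
    age_months,
    clinician_in_ccdss_arm,
    vaccinated_this_season=False,   # dose already recorded this season
    documented_elsewhere=False,     # documented dose at a non-health-system site
    refusal_documented=False,       # prior documented refusal
):
    """Return True when the first-dose prompt would fire at an office visit.

    Hypothetical illustration of the rules described in the text.
    """
    # Only study clinicians in the CCDSS (or BOTH) arm were exposed to the prompt.
    if not clinician_in_ccdss_arm:
        return False
    # Patients younger than 6 months are not eligible.
    if age_months < 6:
        return False
    # Any one of these records suppresses the prompt.
    suppressed = vaccinated_this_season or documented_elsewhere or refusal_documented
    return not suppressed
```

For example, under these assumptions a 24-month-old with no vaccination record seen by a CCDSS-arm clinician would trigger the prompt, whereas the same child with a documented refusal would not.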

Study clinicians receiving WBT were provided links via electronic communications to a 10-minute primer on influenza vaccination recommendations and completed a brief exercise. All clinicians in the WBT and BOTH arms completed the 10-minute primer by October 20, 2015.

Each clinician received feedback on influenza vaccination via a monthly report (aggregated by site). This report featured the proportion of eligible children who had proper documentation of influenza vaccination (in office or elsewhere) with a roster of specific eligible patients seen that prior month and their influenza vaccine disposition.
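The monthly feedback measure described above is a proportion aggregated by site and month. A minimal sketch of that aggregation follows; the input layout (one `(site, month, documented)` tuple per eligible patient seen) and the function name are assumptions made for illustration, not the study's actual reporting code.

```python
from collections import defaultdict


def monthly_documented_proportion(records):
    """Proportion of eligible patients with documented vaccination per (site, month).

    `records` is an iterable of (site, month, documented) tuples, one per
    eligible patient seen that month (a hypothetical layout).
    """
    counts = defaultdict(lambda: [0, 0])  # (site, month) -> [documented, eligible]
    for site, month, documented in records:
        counts[(site, month)][1] += 1
        if documented:
            counts[(site, month)][0] += 1
    return {key: done / total for key, (done, total) in counts.items()}
```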

In addition, a retrospective dataset of measures among patients seen by clinicians between September 2014 and April 2015 was secured for use in predictive modeling and as a baseline for comparison with the interventions’ effects.

Participants

Inclusion criteria required active part- or full-time employment to provide outpatient clinical services on September 1, 2015. Clinicians providing clinical services in more than one practice site or not providing patient care in our health care system the previous year were excluded from participation in this study. Although investigation of influenza vaccination practices required determination of vaccination status in relation to some patient information, the subjects of this study were the PRACTICE and their participating clinicians.

Recruitment of PRACTICE sites included presentations at a webinar meeting of site lead clinicians, dissemination through physician research liaisons in the Delaware and Florida practices, and direct email communication to the 38 clinicians from 16 sites. Thirteen clinicians from 11 PRACTICE sites enrolled in the study via an online portal, and their sites were assigned to an intervention arm based on a block randomization scheme generated using the web site “Randomization.com” (http://www.randomization.com). Those in the WBT and BOTH arms were provided a link to the WBT. The CCDSS was activated for those in the CCDSS and BOTH arms. In PRACTICE sites with both enrolled and unenrolled clinicians, only study clinicians were exposed to interventions, and the PRACTICE run charts related only to patients seen by participating clinicians in the practice.
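To illustrate what a block randomization scheme does, the sketch below assigns sites to the 4 arms using permuted blocks. It is not the scheme produced by Randomization.com: the block size (one slot per arm), the site labels, and the function name `block_randomize` are all assumptions made for illustration.

```python
import random

ARMS = ["Control", "CCDSS", "WBT", "BOTH"]


def block_randomize(sites, arms=ARMS, seed=None):
    """Assign each site to an arm using permuted blocks of len(arms).

    Within each block every arm appears exactly once, which keeps arm
    sizes balanced even when enrollment stops partway through a block.
    """
    rng = random.Random(seed)
    assignment = {}
    block = []
    for site in sites:
        if not block:
            # Start a new block: one slot per arm, in random order.
            block = list(arms)
            rng.shuffle(block)
        assignment[site] = block.pop()
    return assignment


# Hypothetical labels for 11 enrolled sites.
example = block_randomize(list("ABCDEFGHIJK"), seed=2015)
```

With 11 sites and blocks of 4, this approach yields 2 or 3 sites per arm regardless of the random seed.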

Measures

Primary care practice characteristics of location and volume of patients seen were determined. An enrollment survey collected clinician demographics, professional degree, and length of time since completion of professional education as well as other factors, such as beliefs about influenza vaccination. Patient information including age, sex, and presence of a high-risk chronic medical condition was electronically abstracted from the electronic health record.

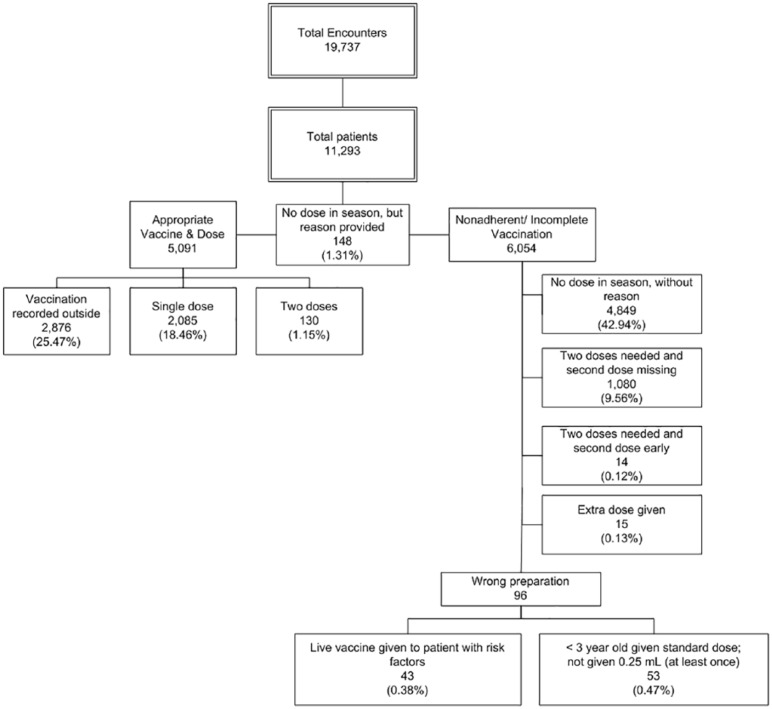

Determination of vaccine administration (or documentation of delivery elsewhere) was electronically abstracted from the electronic health records. A composite measure based on adherence with dosing algorithm, timeliness, and avoidance of missed opportunities was determined (Figure 1).

Figure 1.

Influenza vaccination, adherence with dosing, and missed opportunities among primary care clinicians in the primary care practices.

Statistical Analysis

Practice and clinician characteristics were summarized using frequencies and percentages. Data on adherence to administration and dosing of influenza vaccination among PRACTICE sites were aggregated by month. Using these aggregated proportions, odds ratios (OR) of influenza vaccination adherence by month and intervention arm were calculated for the intervention period, using the monthly rates in the corresponding preintervention period (September 2014 through April 2015) as the referent. Logarithmic (log)-transformed OR were approximately normally distributed, thereby allowing a comparison of the mean of the log OR among intervention arms over months. Because the OR can be as low as 0 and log (0) is undefined, we used log (OR + 1) for comparisons; this shifted transformation is common practice and was approximately normally distributed. A mixed-effects model was used to compare the mean log (OR + 1) between intervention arms. An AR(1) correlation structure was used to account for the within-clinician correlation in visit activities over time. Model assumptions were checked before analyses. All tests were 2-tailed with a level of significance (P) set at .05. The statistical software SAS, version 9.3 (SAS Institute, Cary, NC, USA) was used for the analysis.
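The monthly OR and its shifted log transform can be sketched as follows. This is an illustration only (the analysis itself was run in SAS): the function names are hypothetical, and the natural logarithm is assumed, which is consistent with the values reported in Table 2.

```python
import math


def odds(p):
    """Convert a proportion (0 <= p < 1) to odds."""
    return p / (1.0 - p)


def odds_ratio(p_intervention, p_referent):
    """OR of adherence in an intervention-period month versus the
    corresponding preintervention month (the referent)."""
    return odds(p_intervention) / odds(p_referent)


def log_or_plus_one(or_value):
    """Shifted log transform; defined even when the OR is 0."""
    return math.log(or_value + 1.0)
```

An OR of 1 (no change from the referent month) maps to log (2), about 0.693, and an OR of 0 maps to 0 rather than being undefined. Proportions of exactly 1 would still need separate handling because the odds are then undefined.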

Results

Participants’ Characteristics

Six (55%) of the PRACTICE sites were in Delaware. Baseline characteristics of clinicians in the PRACTICE sites were representative of the health care system (Table 1), and each clinician believed themselves knowledgeable about influenza vaccination and believed they routinely provided the vaccine.

Table 1.

Baseline Characteristics Among Clinicians in the Primary Care Practices.

| Characteristic | PRACTICE<sup>a</sup> (13 Clinicians) |
|---|---|
| MD/DO degree, n (%) | 12 (92) |
| Female sex, n (%) | 10 (77) |
| Race, n (%) | |
| Asian | 4 (31) |
| Black | 2 (15) |
| White | 7 (54) |
| Hispanic ethnicity, n (%) | 2 (15) |
| Age (years), mean (SD) | 48.0 (8.6) |
| Years since degree, mean (SD) | 19.5 (7.3) |
| Hours per week spent in direct patient care, n (%) | |
| <24 | 4 (31) |
| 25-40 | 4 (31) |
| >41 | 5 (38) |
| Electronic health record helpful in patient management (Agree/Strongly Agree), n (%) | 10 (77) |
| Beliefs around influenza vaccination (Agree/Strongly Agree), n (%) | |
| Knowledgeable about requirements | 13 (100) |
| Routinely check influenza immunization status | 13 (100) |
| Routinely provide influenza vaccine | 13 (100) |
| Intervention arm assignments, n (%) | |
| Control<sup>b</sup> | 4 (31) |
| CCDSS | 4 (31) |
| WBT | 2 (15) |
| BOTH<sup>c</sup> | 3 (23) |

Abbreviations: CCDSS, computerized clinical decision support system; WBT, web-based training.

<sup>a</sup>PRACTICE indicates primary care practices.

<sup>b</sup>Control indicates no intervention.

<sup>c</sup>BOTH indicates both CCDSS and WBT interventions.

Influenza Vaccine Administration

During the study period of September 2015 to April 2016, 11 293 individual patients were seen in 19 737 encounters in the PRACTICE offices by the enrolled clinicians. Of these, 55.8% received an influenza vaccination, with rates of 47.6% in Florida and 60.2% in Delaware. Adherence with administration and dosing of influenza vaccination, documentation of previous proper vaccination during the season, or proper documentation of exclusion (eg, patient moderately or severely ill at encounter or risk factor prohibits vaccination) occurred in only 46.4% of patients seen in the PRACTICE (Figure 1). During the prestudy period (September 2014 through April 2015), adherent influenza vaccination occurred among 45.2% of patients seen in the PRACTICE with the same clinicians. Overall, there was an improvement in vaccination among patients seen in the PRACTICE during the study period compared with the prestudy period (Relative Risk = 1.074; P = .009). Relative to the previous year, 1 additional patient was vaccinated for every 56.6 patients.

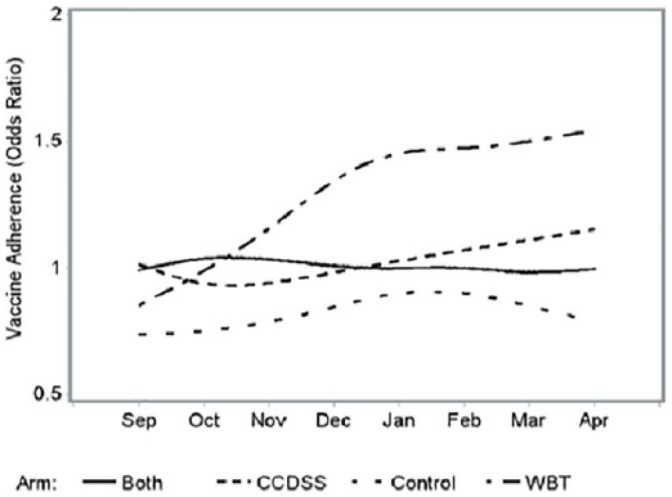

Figure 2 presents the OR for visits with appropriate vaccine administration (including proper dosing) during the intervention period relative to the referent cohort (prestudy period), stratified by month and by intervention arm for the PRACTICE. There was a statistically significant difference (P = .01) in mean log (OR + 1) among intervention arms (Table 2). Table 2 presents the mean (standard error [SE]) log (OR + 1) as well as its transformation to OR by intervention arm for the PRACTICE. Table 3 presents the pairwise comparisons of mean log (OR + 1) between interventions. Patient encounters in the WBT arm were significantly more likely to show adherence to vaccination recommendations during the intervention period than in the preintervention period (estimated mean [geometric] OR = 1.26, P < .05). The mean OR were 1.04, 1.00, and 0.81 in the CCDSS, BOTH, and Control arms, respectively. In pairwise comparisons, mean log (OR + 1) in the WBT arm was significantly different from that in the Control arm (P = .03) and, although the differences were not significant, trended higher than in the CCDSS (P = .22) and BOTH (P = .15) arms. Comparing the intervention arms over time, there was a significantly increasing trend in mean log (OR + 1) in the WBT arm (P < .05) (Figure 2), and, although not significant, there was a trend toward an increase in mean log (OR + 1) over time in the CCDSS arm (P = .27). Clinician baseline characteristics did not substantively affect the models.

Figure 2.

Odds ratios of influenza vaccination adherence by intervention arms.

Table 2.

Estimates of Mean (SE) of Log (Odds Ratio + 1) and Odds Ratio by Intervention Arm.

| Arm | Log (Odds Ratio + 1), Mean | Log (Odds Ratio + 1), SE | Odds Ratio, Geometric Mean | Odds Ratio, SE |
|---|---|---|---|---|
| BOTH<sup>a</sup> | 0.693 | 0.049 | 1.00 | 0.05 |
| CCDSS | 0.714 | 0.049 | 1.04 | 0.05 |
| Control<sup>b</sup> | 0.595 | 0.049 | 0.81 | 0.05 |
| WBT | 0.816 | 0.049 | 1.26 | 0.05 |

Abbreviations: SE, standard error; CCDSS, computerized clinical decision support system; WBT, web-based training.

<sup>a</sup>BOTH indicates both CCDSS and WBT interventions.

<sup>b</sup>Control indicates no intervention.
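The geometric mean OR values in Table 2 can be recovered from the mean log (OR + 1) values by reversing the transformation, that is, exponentiating and subtracting 1. The sketch below assumes the natural logarithm, an assumption that reproduces the reported OR column; the variable and function names are illustrative. The SE column on the OR scale is not reproduced here because the text does not state how it was derived.

```python
import math

# Mean log (OR + 1) by arm, copied from Table 2.
MEAN_LOG_OR_PLUS_ONE = {
    "BOTH": 0.693,
    "CCDSS": 0.714,
    "Control": 0.595,
    "WBT": 0.816,
}


def back_transform(mean_log_or_plus_one):
    """Reverse the log (OR + 1) transform: exp(x) - 1."""
    return math.exp(mean_log_or_plus_one) - 1.0


geometric_or = {
    arm: round(back_transform(value), 2)
    for arm, value in MEAN_LOG_OR_PLUS_ONE.items()
}
# geometric_or == {"BOTH": 1.0, "CCDSS": 1.04, "Control": 0.81, "WBT": 1.26}
```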

Table 3.

Mean Difference in Log (Odds Ratio +1) Between Pairs of Intervention Arms.

| Intervention Arm 1 | Intervention Arm 2 | Mean Difference | SE | P | 95% Confidence Interval for Difference, Lower Bound | 95% Confidence Interval for Difference, Upper Bound |
|---|---|---|---|---|---|---|
| BOTH<sup>a</sup> | CCDSS | −0.021 | 0.069 | 0.776 | −0.214 | 0.172 |
| BOTH<sup>a</sup> | Control<sup>b</sup> | 0.098 | 0.069 | 0.231 | −0.095 | 0.291 |
| BOTH<sup>a</sup> | WBT | −0.123 | 0.069 | 0.151 | −0.316 | 0.070 |
| CCDSS | Control<sup>b</sup> | 0.119 | 0.069 | 0.162 | −0.074 | 0.312 |
| CCDSS | WBT | −0.102 | 0.069 | 0.216 | −0.295 | 0.091 |
| Control<sup>b</sup> | WBT | −0.221\* | 0.069 | 0.034 | −0.414 | −0.028 |

Abbreviations: SE, standard error; CCDSS, computerized clinical decision support system; WBT, web-based training.

\*P < .05.

<sup>a</sup>BOTH indicates both CCDSS and WBT interventions.

<sup>b</sup>Control indicates no intervention.
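The mean differences in Table 3 follow directly from the arm means in Table 2, as the sketch below shows. The SE of each difference is approximated here as the square root of the sum of the two squared arm SEs, which assumes independent arms with equal SE; that assumption is ours, not a description of the mixed-effects model, although it reproduces the reported 0.069. Names are illustrative.

```python
import math
from itertools import combinations

# Mean log (OR + 1) by arm and the common arm-level SE, from Table 2.
MEAN_LOG = {"BOTH": 0.693, "CCDSS": 0.714, "Control": 0.595, "WBT": 0.816}
SE_ARM = 0.049


def pairwise_differences(means):
    """Difference (first arm minus second arm) for every pair of arms."""
    return {
        (a, b): round(means[a] - means[b], 3)
        for a, b in combinations(means, 2)
    }


differences = pairwise_differences(MEAN_LOG)

# Assumed: independent arms with equal SE, so SE_diff = sqrt(2) * SE_arm.
se_difference = math.sqrt(2) * SE_ARM  # about 0.069
```

The reported confidence interval half-width (0.193) divided by this SE implies a critical value near 2.8. The degrees of freedom behind that value are not stated in the text, so the intervals themselves are not recomputed here.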

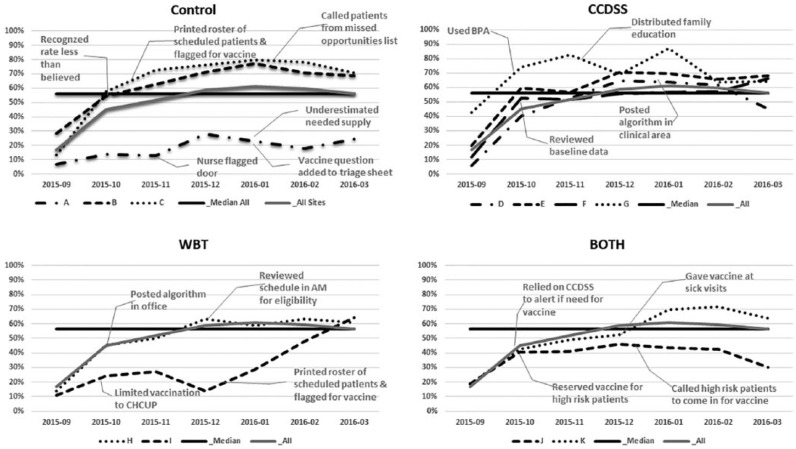

Practice Changes

Each clinician received a monthly run chart (Figure 3) showing the rate of vaccination by month for the previous months from October 2015 to March 2016, along with a list of missed opportunities. Each clinician completed an online progress report at 2-month intervals. Clinicians in the CCDSS arm reported that the CCDSS primed mindfulness around vaccination (particularly for those patients requiring a second dose). However, some complained of best-practice alert fatigue, an unintended effect. A strategy tested in the WBT arm was to print a copy of the provided vaccination algorithm and post it in a visible place. Other strategies included printing a daily patient roster that highlighted patients who would need influenza vaccination and engaging staff to identify eligible patients. Furthermore, clinicians proposed future process improvements such as adding a message to families to “inquire about influenza vaccination” to the automated telephone appointment reminder. Among those in the Control or WBT arms, clinicians advised developing various electronic prompts including a best-practice alert.

Figure 3.

Run charts with composite median line and monthly average of all PRACTICE sites separated by arm.

Clinicians shared incredulity that adherence with recommendations was not higher and a personal belief that PRACTICE vaccination rates were increasing more than the monthly reports demonstrated. Clinicians occasionally reported that free-text documentation of external vaccination had not been transferred to the electronic immunization record. Other reported challenges included concerns about vaccine supply, among other operational issues.

Discussion

When all doses of influenza vaccine received (including administration errors) during September 2015 to April 2016 were counted, the influenza vaccination coverage (55.8%) was similar to national reports6 for the same season, and we observed expected variation by state7 (47.6% in Florida and 60.2% in Delaware in our cohort). However, when the appropriate dosing algorithm was applied, the actual adherence was substantially lower (46.4%). Primary care clinicians’ practice was inconsistent with their self-reported knowledge of influenza vaccination requirements and routine provision of the vaccine. The finding that the system allowed delivery of an influenza vaccine early, an extra dose, and even a wrong preparation for the patient (Figure 1) reveals an opportunity for further quality improvement interventions.

Over the course of the influenza season, the WBT arm emerged as the most effective intervention for primary care clinicians. Although the timing of improvement in vaccination adherence corresponds with completion of the online training for the WBT arm, it is unclear why the BOTH arm would not demonstrate a similar improvement. In the WBT arm, patients were vaccinated in 5 of 9 encounters compared with 4 of 9 in the previous influenza season; in the Control arm, patients were vaccinated in 4 of 10 encounters compared with 5 of 10 in the previous influenza season. Although statistically significant, this difference between the WBT and Control arms was small. The CCDSS appeared to have no effect. The limited effect of the CCDSS on promoting adherence may be related to its degree of intrusion and timing. Improved practitioner performance has been reported in many but not all similar uses of CCDSS.15 The CCDSS provided informational electronic prompts including a best-practice alert consistent with the Five Rights Model12 but did not impose a “hard stop” to continuing documentation or patient flow. In contrast, previous studies have found that computerized physician order entry alerts that are hard stops can be effective in changing diagnostic testing and prescription practices but induce treatment delays and so need to be used judiciously.16-18 Another challenge for the CCDSS best-practice alert encouraging influenza vaccination was competition with between 1 and 3 other similar alerts delivered at the same time. One clinician remarked that he was likely to ignore the alert when it was clustered with multiple prompts. Asynchronous charting, with the record opened prior to the patient encounter or at the end of the day, would mute the effect of the CCDSS. Although opening the record prior to the patient encounter would activate the CCDSS, the clinician might delay placing the order for vaccination until the patient actually arrived. On arrival, the best-practice alert would not refire if the patient record was reopened. Furthermore, documenting an office encounter post hoc would activate the CCDSS after the patient had already left.

We recognized the potential variability among PRACTICE sites and the inherently greater opportunity for vaccination earlier in the influenza season, with rates likely changing later as the pool of unvaccinated children declined. We therefore used the prior influenza season’s corresponding month as a referent to produce Figure 2. As noted in Figure 3, PRACTICE sites started the 2015-2016 influenza season at a lower rate of vaccination. The estimated mean (geometric) OR was used to describe each arm’s adherence to vaccination recommendations during the intervention period compared with that in the preintervention period. The mean OR of 0.81 for adherence to vaccination recommendations in the Control arm was surprising and likely related to the underperformance of PRACTICE site A in relation to the preintervention season. The clinician in PRACTICE site A did comment in his second progress report about underestimating the needed supply of vaccine. PRACTICE sites that limited vaccination to child health checkups or reserved the vaccine for higher-risk patients had lower vaccination rates. With PRACTICE site I prescreening medical records regardless of reason for encounter, vaccination rates improved and likely contributed to the finding of greater adherence in the WBT arm in relation to the preintervention season despite an overall lower rate of adherence. Paradoxically, PRACTICE sites in the CCDSS and BOTH arms reported reliance on the CCDSS to prompt vaccination, but in practice the support appears to have had limited effect. Without reliance on CCDSS, the Control and WBT arms innovated by prescreening patients who would need vaccination.

Because of the modest impact of interventions aimed at physician practice, improvements in influenza vaccination rates may require system changes to workflow, such as physician order entry alerts that are hard stops and prescreening of patients, or moving vaccination out of physician offices into schools or other venues where children and teens assemble.

Limitations

We faced some challenges in studying the effect of interventions to improve influenza vaccination rates among busy practices and their practicing clinicians. Limited recruitment resulted in a small number of practices in each arm of the cluster randomized control trial. The lack of more substantial treatment effects and the finding that clinician baseline characteristics did not substantively affect the models are possibly related to this small sample size. A single PRACTICE site with limited engagement or inconsistent practices would have an outsized impact on a study arm, as seen in sites A and J (Figure 3). A future study across a large network of primary care practices or involving several networks could address this weakness. Furthermore, despite completion of online quality improvement training, monthly charts sent to each clinician with key measures to reflect on, and collection of a bimonthly progress report, clinician engagement in improving practice processes was less than anticipated. Our quality improvement study employed the model for improvement,19 which encouraged clinicians to test change packages (WBT, CCDSS) and develop their own process improvements as they reflected on regular outcome measures in a run chart. These tests of change may have diluted the effect of the study arms. In particular, the monthly feedback on influenza vaccination may have improved the performance of the Control group. Intermittent shortages in the supply of vaccines and the habit of some clinicians to “save some vaccine” for higher-risk patients likely affected the PRACTICE’s ability to vaccinate. Clinicians cited parents’ nonadherence with follow-up appointments for a second vaccine dose, which contributed to the 17.8% of nonadherent/incomplete vaccinations found (without affecting any arm disproportionately). The degree to which caregivers’ beliefs, logistical considerations (travel for another office visit), and/or clinician knowledge influenced the failure to administer a second dose when appropriate is unknown. Furthermore, the limited documentation (1.3% of total patients) of a reason why vaccination did not occur (including caregiver refusal) suggests caregiver refusal may be underreported. More fundamentally, the common use of a single WBT to remind clinicians about vaccination and CCDSS without hard stops may be weak interventions individually and when paired. Future studies could explore the effect of repeated trainings when the vaccination rate fails to meet a threshold and of CCDSS with hard stops.

Conclusions

We predicted there would be a gradual increase in vaccination adherence during the influenza season, which would be responsive to various interventions. However, in practice, our findings are more nuanced. Among PRACTICE sites, WBT for their clinicians at the start of the influenza season and monthly reports of adherence may be sufficient to improve vaccination rates modestly. Computerized clinical decision support systems may be ignored in this setting because of best-practice alert fatigue or workflows that bypass the reminders. Although our rate of any influenza vaccination was similar to that of national reports, when applying an algorithm including the appropriate dose and timing, the actual adherence to recommendations was substantially lower. Identifying caregiver motivations and barriers to having their children receive the annual influenza vaccine will be crucial to improving vaccination rates. Providing the appropriate vaccine with correct dose and timing to each child appears problematic in our present system. The electronic health record can be used effectively to test guideline adherence strategies and is an efficient repository of clinical decision making as we explore strategies to improve influenza vaccination.

Acknowledgments

We thank our institution and its health care providers for their support of quality improvement and health services research across its network. We thank Dr. Timothy Wysocki for his guidance and leadership, Dr. James Crutchfield and Eileen Antico for their expertise in modeling and data management, Raymond Villanueva in developing the computerized clinical decision support system, and Alex Taylor for her role as Senior Research Coordinator.

Author Biographies

Lloyd N. Werk, MD, MPH serves as the chief of the divisions of General Academic Pediatrics and Hospital Medicine at Nemours Children’s Hospital and Professor of Pediatrics at the University of Central Florida College of Medicine. He works with the Nemours Department of Quality and Safety in developing systems and measures to help clinicians reach institutional goals in quality and health outcomes while managing the Nemours portfolio of Maintenance of Certification projects.

Maria Carmen Diaz, MD is a clinical professor of Pediatrics and Emergency Medicine at Sidney Kimmel Medical College at Thomas Jefferson University. She is the Medical Director of Simulation for the Nemours Institute for Clinical Excellence and is an Attending Physician in the Division of Emergency Medicine at Nemours/Alfred I. duPont Hospital for Children in Wilmington, Delaware.

Adriana Cadilla, MD is the medical director of the Antimicrobial Stewardship Program, as well as the Medical Director of the HIV program at Nemours Children’s Hospital. She is faculty in the Division of Infectious Disease at Nemours Children’s Hospital and an Assistant Professor of Pediatrics at the University of Central Florida College of Medicine.

James P. Franciosi, MD serves as chief of the Division of Gastroenterology, Hepatology and Nutrition for Nemours Children’s Hospital and Professor of Pediatrics at the University of Central Florida College of Medicine. He is a clinical researcher focused on clinical outcomes, esophageal conditions, and an investigator in the Center for Health Care Delivery Science at Nemours Children’s Health System.

Md Jobayer Hossain, PhD is a Senior Research Scientist and Manager of the Biostatistics Core at Nemours Children’s Health System, and Adjunct Associate Professor at the University of Delaware. He has over 18 years of experience in design and analysis of clinical, epidemiological and public health studies. Besides modeling data, his research focuses on pattern recognition of complex associations.

Footnotes

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by a Department of Defense–Telemedicine and Advanced Technology Research Center (DoD TATRC) research contract (W81XWH-13-1-0316) titled “Maintenance of health care providers’ clinical proficiency: transdisciplinary analysis, modeling and retention” with Dr. Wysocki as the Principal Investigator.

ORCID iD: Lloyd N. Werk  https://orcid.org/0000-0001-9892-898X


References

1. Committee on Infectious Diseases; American Academy of Pediatrics. Recommendations for prevention and control of influenza in children, 2014-2015. Pediatrics. 2014;134:e1503-e1519. doi: 10.1542/peds.2014-2413

2. Grohskopf LA, Sokolow LZ, Olsen SJ, Bresee JS, Broder KR, Karron RA. Prevention and control of influenza with vaccines: recommendations of the Advisory Committee on Immunization Practices, United States, 2015-16 influenza season. MMWR Morb Mortal Wkly Rep. 2015;64:818-825.

3. Committee on Infectious Diseases. Recommendations for prevention and control of influenza in children, 2017-2018. Pediatrics. 2017;140:e20172550. doi: 10.1542/peds.2017-2550

4. Kostova D, Reed C, Finelli L, et al. Influenza illness and hospitalizations averted by influenza vaccination in the United States, 2005-2011. PLoS One. 2013;8:e66312. doi: 10.1371/journal.pone.0066312

5. Wong KK, Cheng P, Foppa I, Jain S, Fry AM, Finelli L. Estimated paediatric mortality associated with influenza virus infections, United States, 2003-2010. Epidemiol Infect. 2015;143:640-647. doi: 10.1017/S0950268814001198

6. Santibanez TA, Kahn KE, Zhai Y, et al. Flu vaccination coverage, United States, 2015-16 influenza season. https://www.cdc.gov/flu/fluvaxview/coverage-1516estimates.htm. Accessed April 13, 2017.

7. Centers for Disease Control and Prevention. 2015-16 Influenza season vaccination coverage report. https://www.cdc.gov/flu/fluvaxview/reportshtml/reporti1516/reporti/index.html. Accessed April 13, 2017.

8. Halm EA, Lee C, Chassin MR. Is volume related to outcome in health care? A systematic review and methodologic critique of the literature. Ann Intern Med. 2002;137:511-520.

9. Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: a systematic review. JAMA. 2006;296:1094-1102.

10. Werk L, Diaz MC, Franciosi JP, Wysocki T, Ingraham L, Crutchfield J. Structured development of interventions to improve physician knowledge retention. In: Proceedings of the Interservice/Industry Training, Simulation, and Education Conference (I/ITSEC) [CD-ROM]. Arlington, VA: National Training Systems Association; 2015.

11. HealthIT.gov. Clinical decision support. http://www.healthit.gov/policy-researchers-implementers/clinical-decision-support-cds. Updated January 15, 2013. Accessed April 28, 2014.

12. Osheroff J. Improving Medication Use and Outcomes with Clinical Decision Support: A Step-by-Step Guide. Chicago, IL: Healthcare Information and Management Systems Society (HIMSS); 2009.

13. Reed D, Price EG, Windish DM, et al. Challenges in systematic reviews of educational intervention studies. Ann Intern Med. 2005;142(12 pt 2):1080-1089.

14. Stark CM, Graham-Kiefer ML, Devine CM, Dollahite JS, Olson CM. Online course increases nutrition professionals’ knowledge, skills, and self-efficacy in using an ecological approach to prevent childhood obesity. J Nutr Educ Behav. 2011;43:316-322. doi: 10.1016/j.jneb.2011.01.010

15. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293:1223-1238.

16. Goldzweig CL, Orshansky G, Paige NM, et al. Electronic health record-based interventions for improving appropriate diagnostic imaging: a systematic review and meta-analysis. Ann Intern Med. 2015;162:557-565. doi: 10.7326/M14-2600

17. Strom BL, Schinnar R, Aberra F, et al. Unintended effects of a computerized physician order entry nearly hard-stop alert to prevent a drug interaction: a randomized controlled trial. Arch Intern Med. 2010;170:1578-1583. doi: 10.1001/archinternmed.2010.324

18. Helmons PJ, Coates CR, Kosterink JG, Daniels CE. Decision support at the point of prescribing to increase formulary adherence. Am J Health Syst Pharm. 2015;72:408-413. doi: 10.2146/ajhp140388

19. Langley GJ, Moen RD, Nolan KM, Nolan TW, Norman CL, Provost LP. The Improvement Guide: A Practical Approach to Enhancing Organizational Performance. 2nd ed. San Francisco, CA: Jossey-Bass; 2009.