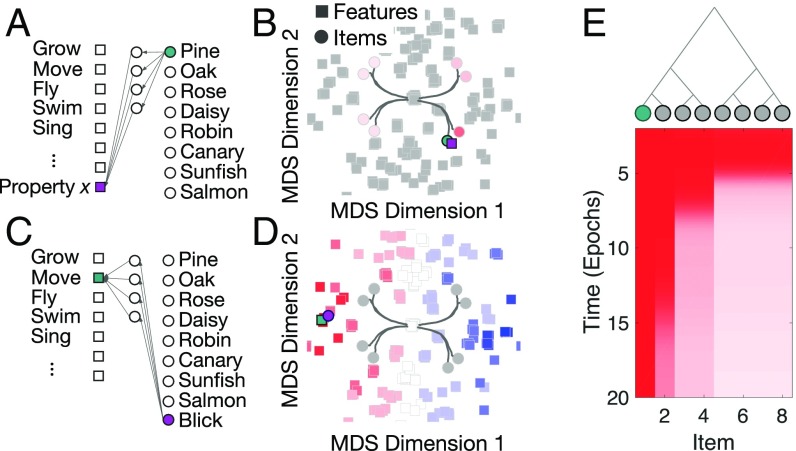

Fig. 10.

The neural geometry of inductive generalization. (A) A novel feature (property x) is observed for a familiar item (e.g., “a pine has property x”). (B) Learning assigns the novel feature a neural representation in the hidden layer of the network, placing it in semantic similarity space near the item that possesses it. The network then inductively projects the novel feature to other familiar items (e.g., “Does a rose have property x?”) only if their hidden representations are close to it in neural space. (C) A novel item (a blick) possesses a familiar feature (e.g., “a blick can move”). (D) Learning assigns the novel item a neural representation in the hidden layer, placing it in semantic similarity space near the feature it possesses. Other features are inductively projected to the novel item (e.g., “Does a blick have wings?”) only if their hidden representations are close to it in neural space. (E) Inductive projection of a novel property (“a pine has property x”) over the course of learning. As learning progresses, the neural representations of items become progressively differentiated, so the novel feature is projected to a progressively more restricted set of items. Here, the pine can be thought of as the left-most item node in the tree.
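The geometry in panels B and E can be illustrated with a minimal numerical sketch. It is not the paper's simulation: the four-item tree, the item names other than pine and rose, the mode strengths at each stage, and the one-shot rule for the novel feature's weights are all assumptions chosen for illustration. The novel feature's weight vector is set parallel to the pine's hidden representation, so its projection onto any other item is proportional to the overlap between that item's hidden representation and the pine's.

```python
import numpy as np

# Hypothetical familiar items; pine is the left-most leaf of a toy tree.
items = ["pine", "rose", "canary", "salmon"]

# Assumed orthonormal hierarchical modes (one row per item, one column per mode):
# column 0: all items, column 1: plants vs. animals,
# column 2: pine vs. rose, column 3: canary vs. salmon.
r = 2 ** -0.5
modes = np.array([
    [0.5,  0.5,   r, 0.0],   # pine
    [0.5,  0.5,  -r, 0.0],   # rose
    [0.5, -0.5, 0.0,   r],   # canary
    [0.5, -0.5, 0.0,  -r],   # salmon
])


def hidden_representations(strengths):
    """Hidden vector of each item (rows), with each mode scaled by
    the square root of its assumed learned strength."""
    return modes * np.sqrt(np.asarray(strengths, dtype=float))


def project_novel_feature(hidden, source):
    """Attach a novel feature to one item, then read it out for every item.

    The weights are set parallel to the source item's hidden vector and
    normalized so that the source item itself receives a value of 1.
    """
    h = hidden[source]
    w = h / (h @ h)
    return hidden @ w


# Assumed snapshots of learning: broad modes are acquired before fine ones.
stages = {
    "early":  [1, 0, 0, 0],
    "middle": [1, 1, 0, 0],
    "late":   [1, 1, 1, 1],
}

pine = items.index("pine")
projections = {
    name: project_novel_feature(hidden_representations(a), pine)
    for name, a in stages.items()
}

for name, p in projections.items():
    print(name, dict(zip(items, p.round(2).tolist())))
```

In this toy setting the novel property is projected to every item early in learning, only to the plants once the plant/animal distinction has been learned, and only to the pine once all items are differentiated. The same computation with the roles of items and features exchanged would correspond to panels C and D.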