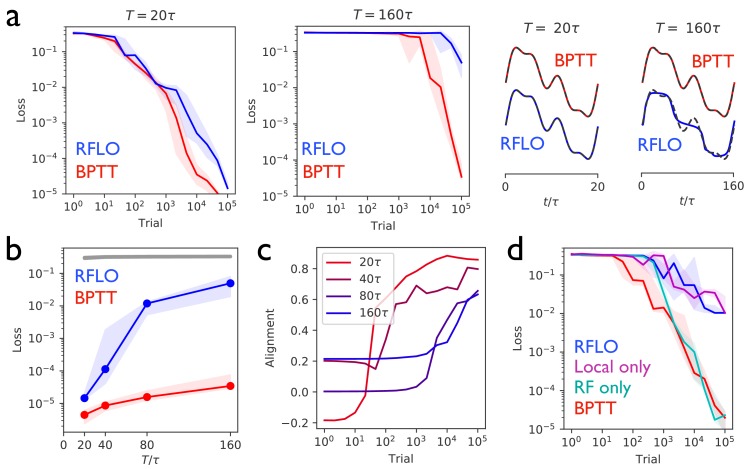

Figure 2. Periodic output task.

(a) Left panels: The mean squared output error during training for an RNN with recurrent units and no external input, trained to produce a one-dimensional periodic output with one of two period durations (left and right), each specified in units of the RNN time constant. The learning rules used for training were backpropagation through time (BPTT) and random feedback local online (RFLO) learning. Solid lines show the median loss over nine realizations, and shaded regions show the 25th and 75th percentiles. Right panels: The RNN output at the end of training for each learning rule (dashed lines are target outputs, offset for clarity). (b) The loss at the end of training for target outputs with different periods. The colored lines correspond to the two learning rules from (a), while the gray line is the loss computed for an untrained RNN. (c) The normalized alignment between the vector of readout weights and the vector of feedback weights during training with RFLO learning. (d) The loss during training for BPTT and RFLO, as well as for versions of RFLO in which locality is enforced without random feedback (magenta) or random feedback is used without enforcing locality (cyan).
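The alignment measure in panel (c) can be illustrated with a minimal sketch. It assumes that "normalized alignment" means the cosine of the angle between the readout weights and the feedback weights, each flattened into a vector whose entries correspond one to one (which may require transposing one of the matrices). The caption does not state the exact normalization, so the function name `normalized_alignment` and this definition are assumptions rather than the authors' code.

```python
import math


def normalized_alignment(w_out, b_feedback):
    """Cosine similarity between two flattened weight vectors.

    Assumed definition: dot(w, b) / (|w| * |b|), giving a value in [-1, 1].
    Both arguments are flat sequences of floats with matching entry order.
    """
    if len(w_out) != len(b_feedback):
        raise ValueError("weight vectors must have the same length")
    dot = sum(w * b for w, b in zip(w_out, b_feedback))
    norm_w = math.sqrt(sum(w * w for w in w_out))
    norm_b = math.sqrt(sum(b * b for b in b_feedback))
    if norm_w == 0.0 or norm_b == 0.0:
        # Alignment is undefined for a zero vector; return 0 by convention here.
        return 0.0
    return dot / (norm_w * norm_b)
```

Under this definition, independent random vectors give values near 0 and a value of 1 means the two vectors point in the same direction, so tracking the quantity over training steps shows whether the readout weights come to align with the fixed feedback weights.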

Figure 2—figure supplement 1. An RNN with sign-constrained synapses comporting with Dale’s law attains performance similar to an unconstrained RNN.

Figure 2—figure supplement 2. An RNN trained to perform the task from Figure 2 with RFLO learning on recurrent and readout weights outperforms an RNN in which only readout weights or only recurrent weights are trained.