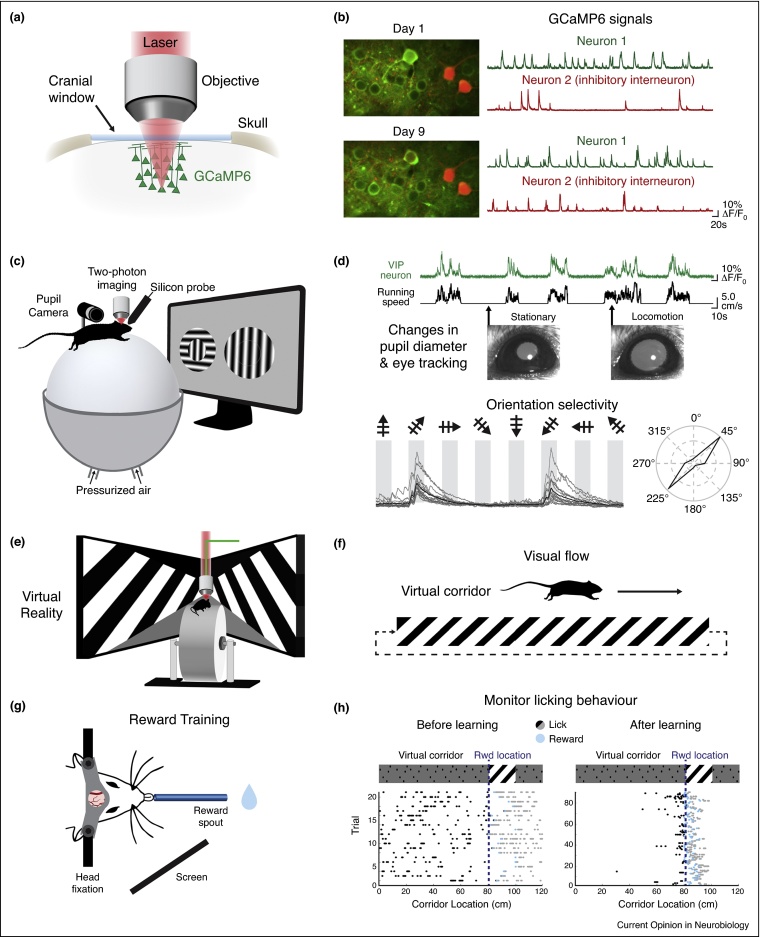

Figure 1.

Experimental procedures for recording neuronal activity and correlated behavioural parameters in head-fixed, awake, behaving rodents. (a) Schematic of a cranial window preparation above primary visual cortex (V1) that allows chronic two-photon imaging of neurons labelled with a genetically encoded calcium indicator (GCaMP6). (b) GCaMP6-labelled neurons (green) can be imaged over multiple days and even weeks (example field of view from imaging Days 1 and 9). Relative changes in fluorescence (ΔF/F0) over time are used as a proxy read-out of neuronal activity. Signals from genetically defined subpopulations of cells can be isolated via a second fluorescent marker (e.g. tdTomato expression in somatostatin-expressing inhibitory interneurons, shown here in red). (c) Head-fixed rodents can move freely on an air-supported styrofoam ball that acts as a spherical treadmill while calcium imaging and/or electrophysiological recordings are performed. Optical computer mice are used to measure running speed. Pupil diameter and eye position can be recorded with cameras. In this configuration, animals can navigate an open virtual reality environment or view a visual stimulus and report left/right behavioural choices with motor movements. (d) Neuronal activity can be correlated with running speed and with changes in pupil diameter, which is used as a measure of arousal. The GCaMP6 signal from a VIP-expressing interneuron in V1 is shown in green and the animal's speed in black. As traditionally done in anaesthetised animals, neuronal activity in V1 can also be correlated with visual stimulation in the form of passively viewed stimuli, such as drifting gratings displayed on a screen. Bottom panel shows single trials (grey) and the average response (black) of a GCaMP6-labelled neuron to gratings of different orientations. Polar plot shows the amplitude of calcium transients in response to each orientation, normalised to the maximum response.
(e) Head-fixed rodents can be placed in a virtual environment: animals run as if on a linear treadmill and, with surrounding screens, can navigate virtual corridors with defined wall patterns. Note that the spherical treadmill can be replaced by a cylindrical wheel, with an optical encoder attached to the central axle recording speed. (f) Using a virtual reality environment, the visual flow experienced by the animal can be measured and manipulated. An animal navigating along a virtual corridor generates visual flow coupled to its own movement; the experimenter can manipulate this coupling to create a mismatch between the visual flow and the animal's movement. (g) Experience-dependent neuronal changes in V1 can be studied using head-fixed animals learning visually guided tasks. Water-deprived animals learn to lick in response to key experimental cues and during goal-directed behaviours in order to receive water rewards through a spout. (h) Licking behaviour is monitored by a lick sensor on the spout during task performance. Example of a task in which the animal must lick at a reward location marked by a visual cue (oriented grating) along a virtual track. Each dot represents a lick: pre-reward licks (black dots), rewarded licks at the correct visual cue (blue dots) and post-reward licks (grey dots). With learning, licking behaviour becomes tightly coupled to the location of the rewarded visual cue along the track.
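
The two quantities named in panels (b) and (d), the relative fluorescence change ΔF/F0 and the orientation tuning normalised to the maximum response, can be illustrated with a minimal sketch. The caption does not say how F0 is estimated, so the baseline used here (the median of an initial pre-stimulus window) is an assumption, as are the function names `delta_f_over_f0` and `normalised_tuning` and all of the example values.

```python
from statistics import median


def delta_f_over_f0(trace, baseline_frames):
    """Return (F - F0) / F0 for every sample of a raw fluorescence trace.

    ASSUMPTION: F0 is the median of the first `baseline_frames` samples.
    The caption does not specify a baseline method; other choices are common.
    """
    f0 = median(trace[:baseline_frames])
    if f0 <= 0:
        raise ValueError("baseline fluorescence F0 must be positive")
    return [(f - f0) / f0 for f in trace]


def normalised_tuning(amplitudes_by_orientation):
    """Average the response amplitudes per orientation, then divide by the
    largest mean so that the preferred orientation has a value of 1."""
    mean_amp = {
        ori: sum(values) / len(values)
        for ori, values in amplitudes_by_orientation.items()
    }
    peak = max(mean_amp.values())
    if peak <= 0:
        raise ValueError("no positive response to normalise against")
    return {ori: amp / peak for ori, amp in mean_amp.items()}


# Hypothetical raw fluorescence: three baseline frames, then a transient.
dff = delta_f_over_f0([100.0, 100.0, 100.0, 150.0, 200.0], baseline_frames=3)

# Hypothetical per-trial peak amplitudes, keyed by grating orientation (deg).
tuning = normalised_tuning({0: [0.5, 0.5], 90: [2.0, 2.0], 180: [1.0, 1.0]})
```

The `tuning` dictionary holds, for each orientation, the radius that would be drawn in a polar plot like the one in panel (d).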