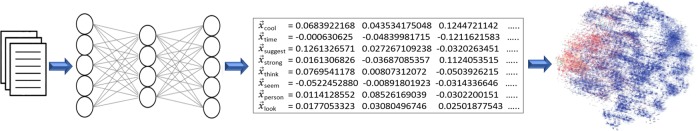

Fig. 1.

Word embeddings created with Word2vec (Skip-gram), a machine learning technique that processes a large body of text through a two-layer neural network. The weights in the first layer of the network constitute the resulting word vectors, which specify positions in a high-dimensional space (a word embedding). A 2-dimensional projection of this space for the 99% most frequent words in English (N = 42,234) is shown above (blue = nouns; red = verbs; orange = adjectives; aqua = prepositions).
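
The idea in the caption can be illustrated with a minimal sketch in Python. It is not the code used to produce the figure: the toy corpus, the function names `train_skipgram` and `project_2d`, the hyperparameters, and the use of a full softmax are all assumptions made for illustration. The projection method behind the figure is not stated, so PCA is used here only as a simple stand-in.

```python
import numpy as np

def train_skipgram(tokens, dim=8, window=2, epochs=50, lr=0.05, seed=0):
    """Train a toy full-softmax skip-gram model; return (vocab, first-layer weights)."""
    vocab = sorted(set(tokens))
    index = {w: i for i, w in enumerate(vocab)}
    ids = [index[w] for w in tokens]
    rng = np.random.default_rng(seed)
    n_words = len(vocab)
    w_in = rng.normal(scale=0.1, size=(n_words, dim))   # first layer: one row per word
    w_out = rng.normal(scale=0.1, size=(dim, n_words))  # second layer: scores context words
    for _ in range(epochs):
        for pos, center in enumerate(ids):
            lo, hi = max(0, pos - window), min(len(ids), pos + window + 1)
            for ctx_pos in range(lo, hi):
                if ctx_pos == pos:
                    continue
                h = w_in[center].copy()          # hidden activation is the center word's row
                scores = h @ w_out
                probs = np.exp(scores - scores.max())
                probs /= probs.sum()
                probs[ids[ctx_pos]] -= 1.0       # gradient of cross-entropy w.r.t. scores
                grad_h = w_out @ probs           # computed before w_out is updated
                w_out -= lr * np.outer(h, probs)
                w_in[center] -= lr * grad_h
    return vocab, w_in

def project_2d(vectors):
    """Project vectors onto their first two principal components (PCA via SVD)."""
    centered = vectors - vectors.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T

# Hypothetical toy corpus; a real model is trained on a very large body of text.
corpus = "the cat sat on the mat the dog sat on the rug".split()
vocab, embeddings = train_skipgram(corpus)
coords = project_2d(embeddings)  # one (x, y) point per word, ready for a scatter plot
```

Each row of `embeddings` is the first-layer weight vector for one word, and `coords` gives the 2-dimensional positions that a plot like the one above would display, colored by part of speech.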