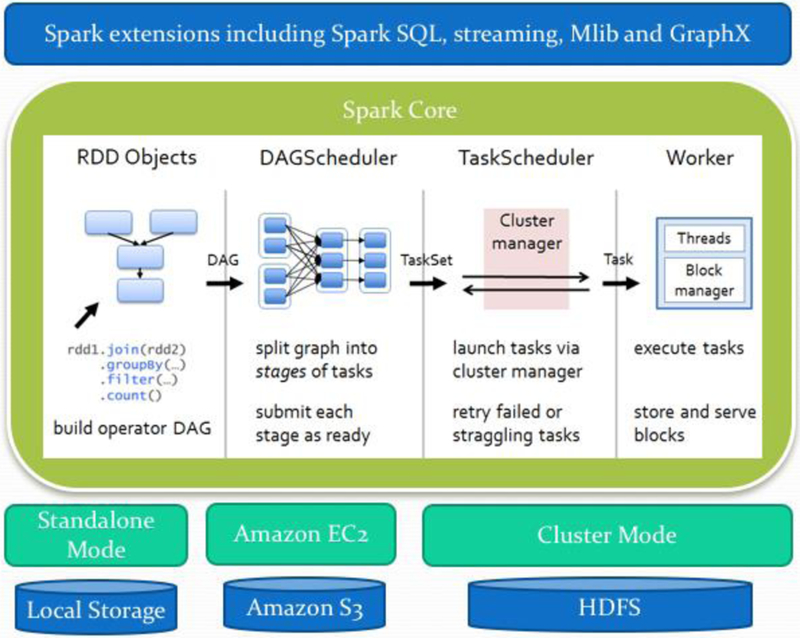

Fig. 2. Illustration of the Spark stack and its components. Spark offers a functional programming API for manipulating Resilient Distributed Datasets (RDDs). An RDD represents a collection of items that is distributed across many compute nodes and can be manipulated in parallel. Spark Core is the computational engine responsible for scheduling, distributing, and monitoring applications, each of which consists of many computational tasks running across one or more worker machines, on a single machine or a cluster.
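To make the RDD idea in the caption concrete, the following is a minimal single-process sketch in plain Python. It is not Spark and does not use the Spark API: the class `ToyRDD`, its `parallelize` constructor, and the variable names are hypothetical stand-ins chosen for illustration. The sketch only shows how a collection can be split into partitions (which in Spark would live on different compute nodes) and manipulated through functional operations such as `map`, `filter`, and `reduce`.

```python
from functools import reduce


class ToyRDD:
    """Hypothetical stand-in for an RDD: a collection split into partitions.

    Assumption: everything runs in one process. In Spark, each partition
    could be held and processed by a different worker.
    """

    def __init__(self, partitions):
        self.partitions = partitions

    @classmethod
    def parallelize(cls, items, num_partitions):
        items = list(items)
        # Round-robin split of the items into num_partitions lists.
        parts = [items[i::num_partitions] for i in range(num_partitions)]
        return cls(parts)

    def map(self, f):
        # Apply f to every item, partition by partition.
        return ToyRDD([[f(x) for x in part] for part in self.partitions])

    def filter(self, pred):
        # Keep only the items for which pred is true, partition by partition.
        return ToyRDD([[x for x in part if pred(x)] for part in self.partitions])

    def reduce(self, op):
        # Reduce each non-empty partition, then combine the partial results.
        partials = [reduce(op, part) for part in self.partitions if part]
        return reduce(op, partials)


numbers = ToyRDD.parallelize(range(1, 11), num_partitions=3)
sum_even_squares = (
    numbers.map(lambda x: x * x)
    .filter(lambda x: x % 2 == 0)
    .reduce(lambda a, b: a + b)
)
print(sum_even_squares)  # 4 + 16 + 36 + 64 + 100 = 220
```

Because `map` and `filter` touch each partition independently, they are the kind of operation that could run in parallel on separate nodes; only `reduce` needs to combine results across partitions. Scheduling, distribution, and monitoring, which the caption attributes to Spark Core, are deliberately left out of this sketch.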