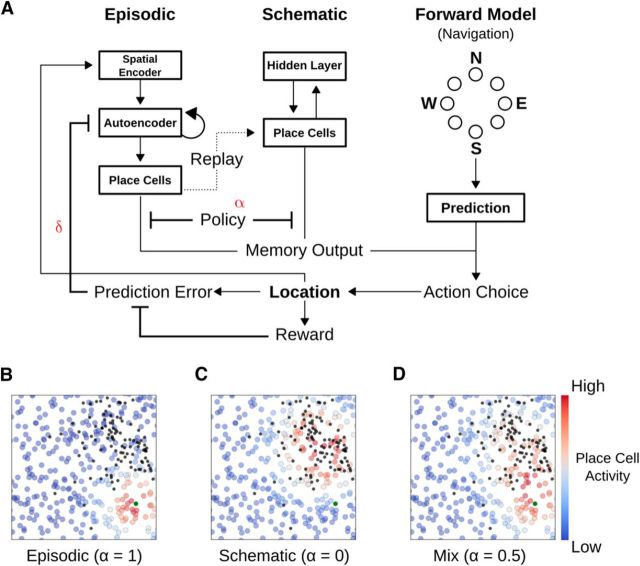

Figure 2.

A neural network model of a foraging agent with two memory systems. A, Illustration of the neural network model. To perform the task, the agent was equipped with episodic and schematic memory stores as well as a navigation network. The episodic network consisted of a spatial encoder, an autoencoder, and place cells, whereas the schematic network was a two-layer restricted Boltzmann machine (RBM). Recall outputs from these memory systems were fed into the navigation network, which chose actions that made the agent's next position most likely to match the reward location encoded by the memory systems. Prediction errors modulated how strongly the agent's current position was encoded, and encoding occurred online and continuously. B–D, Examples of recalled place cell activities for the different types of memory. A policy unit, α, determined the relative influence of the episodic and schematic systems on the overall memory output to the place cells. Each colored circle represents a place cell, with the color showing its activity level. B, Episodic memory output. High levels of α promoted episodic output, which produced place cell activities congruent with the most recently found reward (large green circle). C, Schematic memory output. Low levels of α promoted schematic output, which produced place cell representations congruent with the statistics of all previously learned reward locations. Black dots represent previously found rewards. D, Mixed memory output. An intermediate value (α = 0.5) produced place cell activity that blended the outputs of the two memory systems.
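The caption does not state how α combines the two memory outputs. The following sketch assumes a linear (convex) blend, α · episodic + (1 − α) · schematic, so that α = 1 gives the purely episodic map of panel B, α = 0 the schematic map of panel C, and α = 0.5 the mix of panel D. The Gaussian place-cell tuning, the grid of place cells, and the use of a plain average over past rewards as a stand-in for the RBM's learned statistics are all illustrative assumptions, and every function name and parameter here is hypothetical rather than taken from the model.

```python
import math


def place_cell_activity(centers, location, width=1.0):
    """Hypothetical Gaussian tuning of each place cell to one location."""
    lx, ly = location
    return [
        math.exp(-((cx - lx) ** 2 + (cy - ly) ** 2) / (2 * width ** 2))
        for cx, cy in centers
    ]


def episodic_output(centers, rewards, width=1.0):
    # Episodic stand-in: activity centred on the most recently found reward.
    return place_cell_activity(centers, rewards[-1], width)


def schematic_output(centers, rewards, width=1.0):
    # Schematic stand-in (assumption): the average activity map over all
    # previously found rewards, a crude proxy for what an RBM would learn.
    maps = [place_cell_activity(centers, r, width) for r in rewards]
    return [sum(vals) / len(maps) for vals in zip(*maps)]


def mixed_output(centers, rewards, alpha, width=1.0):
    # Assumed linear blend: alpha weights episodic, (1 - alpha) weights schematic.
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    ep = episodic_output(centers, rewards, width)
    sc = schematic_output(centers, rewards, width)
    return [alpha * e + (1.0 - alpha) * s for e, s in zip(ep, sc)]


# 5 x 5 grid of place cells; the last reward in the list is the most recent one.
centers = [(x, y) for x in range(5) for y in range(5)]
rewards = [(0, 0), (4, 4), (4, 0), (1, 3)]

for alpha in (1.0, 0.5, 0.0):
    act = mixed_output(centers, rewards, alpha)
    peak = centers[act.index(max(act))]
    print(f"alpha={alpha}: most active place cell at {peak}")
```

Because the blend is assumed to be linear, the episodic and schematic maps are simply its two endpoints; a nonlinear gating of α would change only `mixed_output`.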