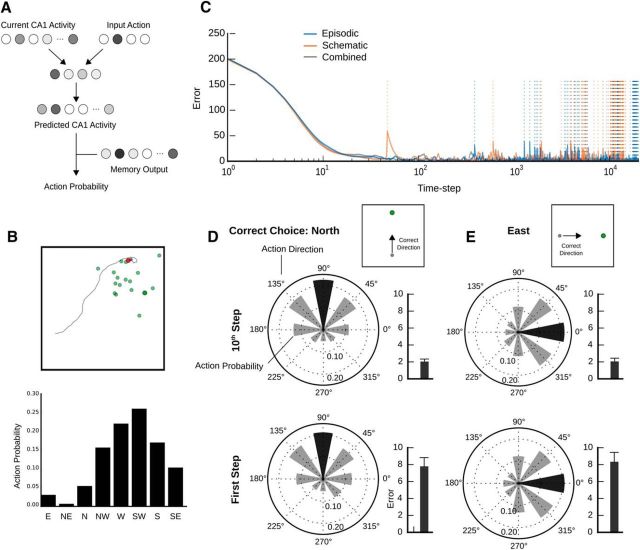

Figure 3.

Navigation by the foraging agent. A, Illustration of the forward model. The agent's forward model takes in the current place cell activity together with a candidate action and outputs the place cell state predicted to result if that action were taken. B, An example path showing the agent navigating to a goal (red circle). The predicted state from the forward model is compared with the memory output to determine the probability of that action being taken, so that the agent follows a path (black line) directly to its goal. This goal may or may not coincide with the actual current reward location (large green dot), but it always depends on previous reward locations (small green dots). Bottom, Probability of the next action to be taken by the agent. C, Forward model error during learning. As the agent wanders through space searching for rewards, the forward model's prediction error decreases rapidly but spikes sharply whenever the agent is reinitialized in a new location (dotted lines). D, E, Tests of the navigation system. Despite the increase in learning error observed when the agent is initialized in new positions, it still weights the probability of actions appropriately. This holds even in the first step after a learning error spike (bottom).
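The action-selection step in panels A and B can be illustrated with a minimal Python sketch. It rests on several assumptions that the caption does not specify. There are 100 Gaussian place cells on a 10 x 10 grid. An idealized forward model (decode position from activity, shift it by the action, re-encode) stands in for the learned network. Cosine similarity is the comparison with the memory output, and a softmax with inverse temperature `beta` converts similarities into action probabilities. All names (`place_activity`, `forward_model`, `action_probabilities`), parameter values, and the similarity measure are hypothetical and are not the authors' implementation.

```python
import math

# Hypothetical layout: 100 place cells with centers on a 10 x 10 grid over the unit square.
CENTERS = [(i / 9, j / 9) for i in range(10) for j in range(10)]
SIGMA = 0.2  # assumed place-field width

# Hypothetical action set: compass steps of length 0.05.
ACTIONS = {"N": (0.0, 0.05), "S": (0.0, -0.05), "E": (0.05, 0.0), "W": (-0.05, 0.0)}


def place_activity(pos):
    """Gaussian place-cell population vector for a 2-D position."""
    x, y = pos
    return [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * SIGMA ** 2))
            for cx, cy in CENTERS]


def decode(activity):
    """Center-of-mass estimate of position from place-cell activity."""
    total = sum(activity)
    x = sum(a * cx for a, (cx, _) in zip(activity, CENTERS)) / total
    y = sum(a * cy for a, (_, cy) in zip(activity, CENTERS)) / total
    return x, y


def forward_model(activity, action):
    """Idealized stand-in for a learned forward model: maps current place-cell
    activity and a candidate action to the predicted next activity."""
    x, y = decode(activity)
    dx, dy = ACTIONS[action]
    return place_activity((x + dx, y + dy))


def cosine(u, v):
    """Cosine similarity between two activity vectors (assumed comparison)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def action_probabilities(activity, memory_output, beta=20.0):
    """Softmax over the similarity of each predicted state to the memory output."""
    scores = {a: cosine(forward_model(activity, a), memory_output) for a in ACTIONS}
    best = max(scores.values())  # subtract the max for numerical stability
    weights = {a: math.exp(beta * (s - best)) for a, s in scores.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


# Usage: agent at (0.3, 0.5); the memory output is assumed to be the place-cell
# pattern of a remembered goal at (0.8, 0.5), i.e. due east of the agent.
current = place_activity((0.3, 0.5))
goal_memory = place_activity((0.8, 0.5))
probs = action_probabilities(current, goal_memory)
print(max(probs, key=probs.get))  # prints E
```

Because the predicted state after stepping east overlaps most with the remembered goal pattern, that action receives the highest probability. Raising `beta` makes the choice more deterministic, and lowering it makes the agent more exploratory.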