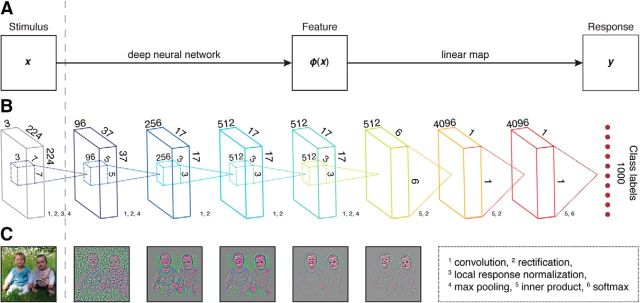

Figure 1. DNN-based encoding framework. A, Schematic of the encoding model that transforms a visual stimulus to a voxel response in two stages. First, a deep (convolutional) neural network transforms the visual stimulus (x) to multiple layers of feature representations. Then, a linear mapping transforms a layer of feature representations to a voxel response (y). B, Schematic of the deep neural network where each layer of artificial neurons uses one or more of the following (non)linear transformations: convolution, rectification, local response normalization, max pooling, inner product, and softmax. C, Reconstruction of an example image from the activities in the first five layers.
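
The two-stage model in panel A can be illustrated with a minimal sketch. It is not the authors' implementation. A single random filter bank followed by rectification stands in for one DNN layer, and the linear mapping is fitted with ridge regression, an assumed choice since the caption specifies only that the mapping is linear. All names (`layer_features`, `fit_linear_mapping`, `predict_voxel`), the array sizes, the regularization strength `lam`, and the simulated data are hypothetical.

```python
import numpy as np


def layer_features(x, w):
    """Stand-in for one DNN layer: linear filtering followed by rectification."""
    return np.maximum(x @ w, 0.0)


def fit_linear_mapping(phi, y, lam=1.0):
    """Ridge estimate of the linear mapping from layer features to a voxel response.

    Solves (phi^T phi + lam * I) beta = phi^T y. Ridge is an assumption here;
    the caption only states that the mapping is linear.
    """
    n_features = phi.shape[1]
    gram = phi.T @ phi + lam * np.eye(n_features)
    return np.linalg.solve(gram, phi.T @ y)


def predict_voxel(x, w, beta):
    """Stage 1 (stimulus -> features) followed by stage 2 (features -> voxel response)."""
    return layer_features(x, w) @ beta


# Simulated data: flattened "stimuli" and one voxel driven by the layer features.
rng = np.random.default_rng(0)
n_train, n_test, n_pixels, n_features = 200, 50, 64, 16
w = rng.normal(size=(n_pixels, n_features))   # fixed (pretrained) layer weights
beta_true = rng.normal(size=n_features)       # ground-truth mapping for the simulation

x_train = rng.normal(size=(n_train, n_pixels))
x_test = rng.normal(size=(n_test, n_pixels))
y_train = layer_features(x_train, w) @ beta_true + 0.1 * rng.normal(size=n_train)
y_test = layer_features(x_test, w) @ beta_true + 0.1 * rng.normal(size=n_test)

# Fit the mapping on training stimuli, then predict held-out voxel responses.
beta_hat = fit_linear_mapping(layer_features(x_train, w), y_train)
y_pred = predict_voxel(x_test, w, beta_hat)

# Pearson correlation between predicted and simulated held-out responses.
r = np.corrcoef(y_pred, y_test)[0, 1]
```

In this sketch only `beta_hat` is estimated from the response data; the feature transformation is held fixed, mirroring the separation between the two stages in panel A.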