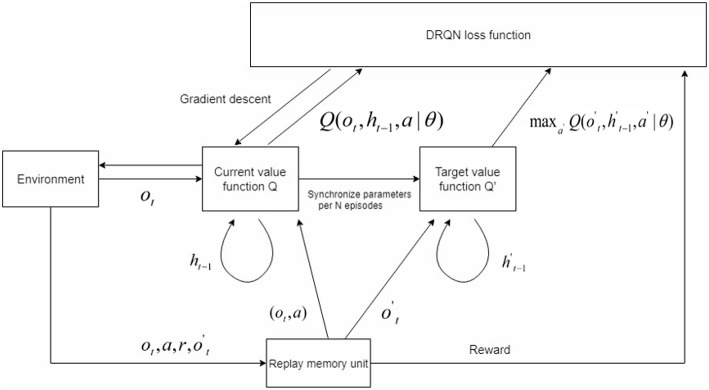

Figure 1.

The modified DRQN model. The value function was split into two parts: the current value function Q and the target value function Q′. The parameters of Q were copied to Q′ every N episodes. The state contained two elements: $o_t$, obtained from the current environment, and $h_{t-1}$, which carried information from earlier time steps. The agent performed action a according to a specific policy, and the sequence ($o_t$, a, r, $o_t'$) was stored in a prioritized experience replay memory unit. During training, sequences were sampled at random from the replay memory unit. For each sampled sequence, we trained the network by gradient descent so that the current value function Q approached the target value function Q′. The loss function is given in Equation (4).
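The training loop described in the caption can be illustrated with a minimal sketch. It is not the authors' implementation: it uses a toy tabular Q in place of the recurrent network (so $h_{t-1}$ is omitted), assumes the standard one-step target r + γ max Q′ rather than the paper's Equation (4), and assumes proportional prioritization by absolute TD error. All names (`PrioritizedReplay`, `td_target`, `train_step`, `run`, `sync_every`) are hypothetical.

```python
import random


class PrioritizedReplay:
    """Toy proportional prioritized replay memory (assumed scheme)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = []       # stored (o_t, a, r, o_next) sequences
        self.priorities = []  # one positive priority per stored item

    def add(self, transition, priority=1.0):
        if len(self.items) >= self.capacity:
            # Drop the oldest entry when the memory is full.
            self.items.pop(0)
            self.priorities.pop(0)
        self.items.append(transition)
        self.priorities.append(priority)

    def sample(self, rng):
        # Draw one index with probability proportional to its priority.
        idx = rng.choices(range(len(self.items)), weights=self.priorities, k=1)[0]
        return idx, self.items[idx]

    def update(self, idx, td_error, eps=1e-3):
        # Larger TD error -> sampled more often; eps keeps priorities positive.
        self.priorities[idx] = abs(td_error) + eps


def td_target(q_target, r, o_next, actions, gamma):
    # Assumed one-step target: r + gamma * max_a' Q'(o', a').
    return r + gamma * max(q_target.get((o_next, a), 0.0) for a in actions)


def train_step(q, q_target, memory, actions, rng, gamma=0.9, lr=0.5):
    idx, (o, a, r, o_next) = memory.sample(rng)
    td_error = td_target(q_target, r, o_next, actions, gamma) - q.get((o, a), 0.0)
    # Gradient descent step on 0.5 * td_error**2 with respect to Q(o, a).
    q[(o, a)] = q.get((o, a), 0.0) + lr * td_error
    memory.update(idx, td_error)
    return td_error


def run(episodes=6, sync_every=3, seed=0):
    rng = random.Random(seed)
    actions = [0, 1]
    q, q_target = {}, {}
    memory = PrioritizedReplay(capacity=100)
    memory.add(("s0", 1, 1.0, "s1"))
    memory.add(("s1", 0, 0.0, "s0"))
    syncs = 0
    for episode in range(1, episodes + 1):
        train_step(q, q_target, memory, actions, rng)
        if episode % sync_every == 0:
            # Copy the parameters of Q into Q' every N episodes.
            q_target = dict(q)
            syncs += 1
    return q, q_target, syncs
```

Keeping Q′ fixed between synchronizations means the regression target does not shift on every update, which is the usual motivation for a separate target function.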