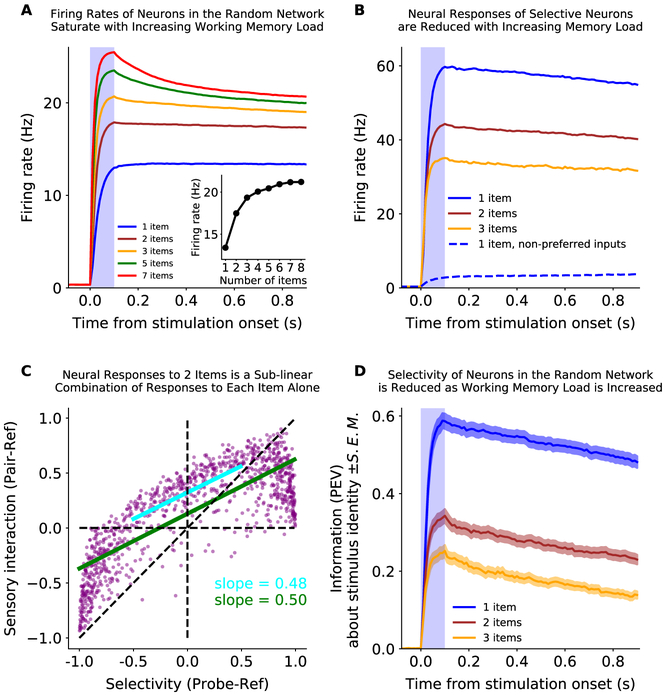

Figure 4: The effect of working memory load on neural responses.

(A) The average firing rate of neurons in the random network increases with memory load and saturates at the network's capacity limit. Inset: Mean firing rate during the second half of the delay period as a function of initial load (‘number of items’). (B) The firing rate of selective neurons in the random network is reduced when inputs are added to other sub-networks. Selective neurons (N=71, 6.9% of the random network) were defined as neurons that respond more strongly to a preferred input than to non-preferred inputs into other sensory sub-networks (preferred: blue, solid; non-preferred: blue, dashed; see Methods for details). The response to a preferred stimulus (blue) is reduced when it is presented together with one or two items in other sub-networks (brown and yellow, respectively). (C) Regularization of neural responses resembling divisive normalization is observed across the entire random network. The response of neurons in the random network to two inputs, each into a different sub-network, is shown as a function of the response to one input alone. The x-axis is the ‘selectivity’ of each neuron, measured as its response to the ‘probe’ input into sub-network 2 relative to its response to the ‘reference’ (‘ref’) input into sub-network 1. The y-axis is the ‘sensory interaction’ of each neuron, measured as its response to the ‘pair’ of ‘probe’ and ‘ref’ inputs relative to its response to ‘ref’ alone. Linear fits to the full distribution (green) and to its central portion (blue) show a positive y-intercept (0.13 and 0.32, respectively) and a slope of 0.5, indicating that the response to the pair of inputs is an even mixture of the responses to each input alone. (D) Information about the identity of a memory decreases with memory load. Information was measured as the percentage of variance in the firing rate of neurons in the random network explained by input identity (see Methods for details). Shaded regions indicate S.E.M.
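Why a slope of 0.5 in panel C signals an even mixture can be checked with a small synthetic example. This is a sketch under an assumption not stated in the caption: both indices are taken to be simple differences (selectivity = probe − ref, sensory interaction = pair − ref), and the function name `interaction_indices` is hypothetical. If the pair response is exactly the average of the two single-input responses, then pair − ref = (probe − ref)/2, so a linear fit recovers slope 0.5 and intercept 0. The firing rates below are randomly generated, not model data.

```python
import numpy as np


def interaction_indices(r_ref, r_probe, r_pair):
    """Hypothetical difference-based indices (an assumption, not the paper's Methods).

    selectivity         = response to probe alone - response to ref alone
    sensory interaction = response to the pair    - response to ref alone
    """
    selectivity = r_probe - r_ref
    interaction = r_pair - r_ref
    return selectivity, interaction


rng = np.random.default_rng(0)
n_neurons = 500
# Synthetic single-input firing rates (Hz), one value per neuron.
r_ref = rng.uniform(5.0, 40.0, n_neurons)
r_probe = rng.uniform(5.0, 40.0, n_neurons)
# Pure averaging model: the pair response is the mean of the two single-input responses.
r_pair = 0.5 * (r_ref + r_probe)

sel, inter = interaction_indices(r_ref, r_probe, r_pair)
# np.polyfit with deg=1 returns the coefficients highest power first: [slope, intercept].
slope, intercept = np.polyfit(sel, inter, deg=1)
print(f"slope={slope:.3f}, intercept={intercept:.3f}")
```

Under these assumed indices, adding a constant offset to `r_pair` raises the fitted intercept by the same amount while leaving the slope at 0.5, which is one way to read a positive intercept alongside a 0.5 slope.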
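Panel D measures information as the percentage of firing-rate variance explained by input identity. The exact estimator is given in the Methods and is not reproduced here. As an assumption, the sketch below uses the plain eta-squared form: between-identity sum of squares divided by total sum of squares, times 100. The function name `percent_explained_variance` is hypothetical, and the inputs are toy values rather than model data.

```python
import numpy as np


def percent_explained_variance(rates, labels):
    """Percent of firing-rate variance explained by input identity (eta-squared x 100).

    Assumed estimator for illustration; the paper's Methods may use a variant.
    rates:  1-D array of firing rates, one per trial.
    labels: 1-D array of input identities, one per trial.
    """
    rates = np.asarray(rates, dtype=float)
    labels = np.asarray(labels)
    grand_mean = rates.mean()
    ss_total = np.sum((rates - grand_mean) ** 2)
    if ss_total == 0.0:
        # No variance at all, so identity cannot explain any of it.
        return 0.0
    ss_between = 0.0
    for label in np.unique(labels):
        group = rates[labels == label]
        # Each identity contributes its size times the squared deviation of its mean.
        ss_between += group.size * (group.mean() - grand_mean) ** 2
    return 100.0 * ss_between / ss_total


# Toy trials: two input identities, two trials each.
pev_full = percent_explained_variance([1, 1, 3, 3], ["a", "a", "b", "b"])
pev_none = percent_explained_variance([1, 3, 1, 3], ["a", "a", "b", "b"])
pev_part = percent_explained_variance([1, 2, 3, 4], ["a", "a", "b", "b"])
print(pev_full, pev_none, pev_part)
```

In the toy cases, identity explains all of the variance when trials split cleanly by input, none when the identity means are equal, and 80% in the mixed case. A weaker identity signal under higher load would appear as a lower percentage.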