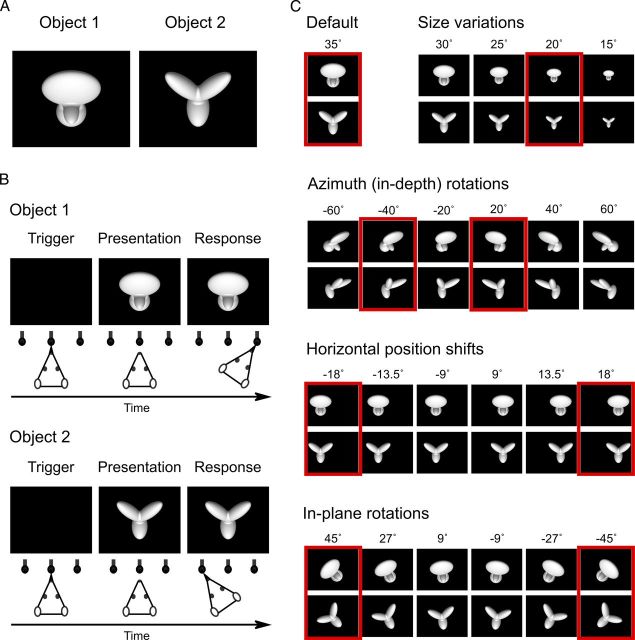

Figure 1.

Visual stimuli and behavioral task. A, Default views (0° in-depth and in-plane rotation) of the two objects that rats were trained to discriminate during Phase I of the study (each object's default size was 35° of visual angle). B, Schematic of the object discrimination task. Rats were trained in an operant box that was equipped with an LCD monitor for stimulus presentation and an array of three sensors. The animals learned to trigger stimulus presentation by licking the central sensor and to associate each object identity with a specific reward port/sensor (right port for Object 1 and left port for Object 2). C, Some of the transformed views of the two objects that rats were required to recognize during Phase II of the study. Transformations included the following: (1) size changes; (2) azimuth in-depth rotations; (3) horizontal position shifts; and (4) in-plane rotations. Azimuth-rotated and horizontally shifted objects were also scaled down to a size of 30° of visual angle; in-plane rotated objects were scaled down to a size of 32.5° of visual angle and shifted downward by 3.5°. Each variation axis was sampled more densely than shown in the figure: sizes were sampled in 2.5° steps; azimuth rotations in 5° steps; position shifts in 4.5° steps; and in-plane rotations in 9° steps. This yielded a total of 78 object views. The red frames highlight the subsets of object views that were tested in bubbles trials (Fig. 2).