Abstract

Although the human brain may have adapted evolutionarily to face-to-face communication, other modes of communication, e.g., telephone and e-mail, increasingly dominate modern daily life. This study examined the neural differences between face-to-face communication and other types of communication by simultaneously measuring the brain activity of two partners using a hyperscanning approach. The results showed a significant increase in neural synchronization in the left inferior frontal cortex during a face-to-face dialog between partners, but not during a back-to-back dialog, a face-to-face monologue, or a back-to-back monologue. Moreover, the neural synchronization between partners during the face-to-face dialog resulted primarily from direct interactions between the partners, including multimodal sensory information integration and turn-taking behavior. Communicating behavior during the face-to-face dialog could be predicted accurately from the level of neural synchronization. These results suggest that face-to-face communication, particularly dialog, has special neural features that other types of communication lack, and that neural synchronization between partners may underlie successful face-to-face communication.

Introduction

Theories of human evolution converge on the view that our brain is adapted to cope with problems that occurred intermittently in our evolutionary past (Kock, 2002). Evidence further indicates that during evolution, our ancestors communicated face-to-face, with behavioral synchrony conveyed through facial expressions, gestures, and oral speech (Boaz and Almquist, 1997). Plausibly, many of the brain's evolutionary adaptations for communication improve the efficiency of face-to-face communication. Today, however, other communication modes, such as telephone and e-mail, increasingly dominate the daily lives of many people (RoAne, 2008). Modern technologies have increased the speed and volume of communication, whereas opportunities for face-to-face communication have decreased markedly (Bordia, 1997; Flaherty et al., 1998). This raises the question of which neural features, if any, are unique to face-to-face communication relative to other types of communication.

Two major features distinguish face-to-face communication from other types of communication. First, face-to-face communication involves the integration of multimodal sensory information: the partner's nonverbal cues, such as orofacial movements, facial expressions, and gestures, can be used to actively modify one's own actions and speech during communication (Belin et al., 2004; Corina and Knapp, 2006). Moreover, infants show an early specialization of the cortical network involved in perceiving facial communication cues (Grossmann et al., 2008). Disrupting this integration, for example by pairing a spoken syllable with lip movements for a different syllable, can alter what listeners perceive (McGurk and MacDonald, 1976).

Second, face-to-face communication involves more continuous turn-taking between partners (Wilson and Wilson, 2005), a behavior that has been shown to play a pivotal role in social interaction (Dumas et al., 2010). Turn-taking may also reflect how involved a person is in the communication. Research on nonverbal communication has shown that the synchronization of brain activity between a gesturer and a guesser depends on the participants' level of involvement in the communication (Schippers et al., 2010).

Despite decades of laboratory research on single brains, the neural differences between face-to-face communication and other types of communication remain unclear, because single-brain measurements in strictly controlled laboratory settings can hardly capture the neural features of communication, which inherently involves two brains (Hari and Kujala, 2009; Hasson et al., 2012). Recently, Stephens et al. (2010) showed that brain activity was synchronized between a speaker and a listener when a recording of the speaker's voice was played to the listener. Furthermore, Cui et al. (2012) established that functional near-infrared spectroscopy (fNIRS) can measure brain activity simultaneously in two people engaged in nonverbal tasks, an approach known as fNIRS-based hyperscanning. The current study therefore used fNIRS-based hyperscanning to examine the neural features of face-to-face verbal communication in a naturalistic context.

Materials and Methods

Participants

Twenty adults (10 pairs; mean age, 23 ± 2 years) participated in this study. There were four male–male pairs and six female–female pairs, and the partners in every pair knew each other before the experiment. Self-rated acquaintance level did not differ significantly between partners (t(18) = −0.429, p = 0.673). Written informed consent was obtained from all participants. The study protocol was approved by the ethics committee of the State Key Laboratory of Cognitive Neuroscience and Learning, Beijing Normal University.

Tasks and procedures

For each pair, an initial 3 min resting-state session served as a baseline. During this session, the participants were asked to close their eyes, relax, and remain as motionless as possible (Lu et al., 2010).

Four task sessions immediately followed the resting-state session: (1) face-to-face dialog (f2f_d), (2) face-to-face monologue (f2f_m), (3) back-to-back dialog (b2b_d), and (4) back-to-back monologue (b2b_m). The comparison between f2f_d and b2b_d was expected to reveal neural features specific to multimodal sensory information integration, and the comparison between f2f_d and f2f_m was expected to reveal neural features specific to continuous turn-taking during communication. b2b_m served as a control. The order of the four task sessions was counterbalanced across pairs. Each task session began and ended with a 30 s resting-state period to allow the instrument to reach a steady state. All procedures were video recorded.
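The text states only that session order was counterbalanced across pairs. With four conditions and ten pairs, one common scheme, assumed here purely for illustration and not confirmed by the source, is a balanced (Williams) Latin square: every condition appears once in each serial position, and each condition immediately follows every other condition exactly once per cycle of four pairs. A minimal Python sketch; `williams_square` and `session_orders` are hypothetical helper names:

```python
from typing import List

CONDITIONS = ["f2f_d", "f2f_m", "b2b_d", "b2b_m"]


def williams_square(n: int) -> List[List[int]]:
    """Balanced Latin square for an even number of conditions n.

    The first row follows the pattern 0, 1, n-1, 2, n-2, ...;
    each later row adds 1 (mod n) to every entry of the first row.
    """
    if n % 2:
        raise ValueError("this construction needs an even number of conditions")
    first, lo, hi = [0], 1, n - 1
    take_lo = True
    while len(first) < n:
        if take_lo:
            first.append(lo)
            lo += 1
        else:
            first.append(hi)
            hi -= 1
        take_lo = not take_lo
    return [[(c + shift) % n for c in first] for shift in range(n)]


def session_orders(n_pairs: int) -> List[List[str]]:
    """Assign square rows to pairs cyclically (hypothetical assignment rule)."""
    square = williams_square(len(CONDITIONS))
    return [[CONDITIONS[c] for c in square[p % len(square)]] for p in range(n_pairs)]


orders = session_orders(10)  # one session order per pair
```

Because 10 is not a multiple of 4, the first two rows are used three times and the last two twice, so the balance across the ten pairs would only be approximate under this scheme.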

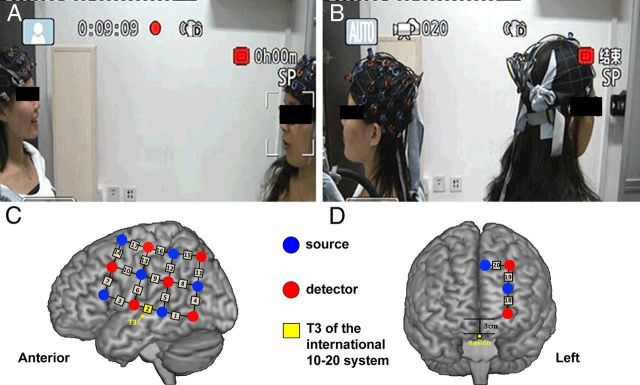

The partners sat face-to-face during f2f_d and f2f_m (Fig. 1A); during b2b_d and b2b_m, they sat back-to-back and could not see each other (Fig. 1B). Two current, widely discussed news topics were used for f2f_d and b2b_d, and the participants were asked to talk with each other about the assigned topic for 10 min. The order of the two topics was counterbalanced across pairs. Familiarity with the topics was rated on a five-point scale (1, lowest; 5, highest). No significant differences in familiarity were found between f2f_d and b2b_d (t(19) = −0.818, p = 0.434) or between partners during either f2f_d (t(18) = −0.722, p = 0.48) or b2b_d (t(18) = −0.21, p = 0.836). During the dialogs, the participants were free to use gestures and facial expressions if they wished.

Figure 1.

Experimental procedures. A, Face-to-face communication. B, Back-to-back communication. C, The first optode probe set placed on the frontal, temporal, and parietal cortices. D, The second optode probe set placed on the dorsolateral prefrontal cortex.

Immediately after f2f_d and b2b_d, the participants rated the quality of their communication on the same five-point scale. Communication quality differed significantly between f2f_d and b2b_d (t(9) = 2.449, p = 0.037), but not between the two partners in either f2f_d (t(18) = 1.342, p = 0.196) or b2b_d (t(18) = 1.089, p = 0.291). These results suggest that f2f_d was perceived as higher-quality communication than b2b_d and that the partners in each pair had comparable opinions about the quality of their communication.

During f2f_m and b2b_m, one participant narrated his or her life experiences to the partner for 10 min, while the partner was required to remain silent throughout the task and to refrain from any nonverbal communication. The order of narrators was counterbalanced across pairs. To ensure that the listeners attended to the speaker, they were required to repeat the key points of the monologue immediately after the task. All listeners were able to do so adequately.

fNIRS data acquisition

During the fNIRS measurements, the participants sat in a chair in a quiet room, and data were collected with an ETG-4000 optical topography system (Hitachi Medical Company) using customized optode probe sets. The probes were placed only over the left hemisphere because the left hemisphere is dominant for language and because the left inferior frontal cortex (IFC) and inferior parietal cortex (IPC) are the most thoroughly studied nodes of the putative mirror neuron system for joint actions, including verbal communication (Rizzolatti and Craighero, 2004; Stephens et al., 2010).

Two optode probe sets were used on each participant. One 3 × 4 optode probe set (six emitters and six detectors, 20 measurement channels, 30 mm optode separation) covered the left frontal, temporal, and parietal cortices, with channel 2 (CH2) centered on T3 according to the international 10-20 system (Fig. 1C). A second probe set (two emitters and two detectors, 3 measurement channels) was placed over the dorsolateral prefrontal cortex (Fig. 1D). The probe positions were checked and adjusted to ensure consistency between the partners of each pair and across participants.

The absorption of near-infrared light at two wavelengths (695 and 830 nm) was measured at a sampling rate of 10 Hz. Changes in oxyhemoglobin (HBO) and deoxyhemoglobin concentrations were calculated for each channel based on the modified Beer–Lambert law. Because previous studies have shown that HBO is the most sensitive indicator of changes in regional cerebral blood flow in fNIRS measurements, this study focused only on changes in HBO concentration (Cui et al., 2012).
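For reference, the modified Beer–Lambert law in its standard two-wavelength form is written below. The symbols (extinction coefficients ε, source–detector distance d, differential pathlength factor DPF, and HbR for deoxyhemoglobin) are our notation, not the source's, and the constants used by the ETG-4000 software are not specified here. The change in optical density at each wavelength is a weighted sum of the two concentration changes, so measuring at 695 and 830 nm yields two equations that can be solved for the two unknowns:

```latex
\begin{aligned}
\Delta OD(\lambda) &= \bigl[\varepsilon_{\mathrm{HBO}}(\lambda)\,\Delta[\mathrm{HBO}]
   + \varepsilon_{\mathrm{HbR}}(\lambda)\,\Delta[\mathrm{HbR}]\bigr]\, d\,\mathrm{DPF}(\lambda),
   \qquad \lambda \in \{695, 830\}\ \mathrm{nm} \\[4pt]
\begin{pmatrix} \Delta[\mathrm{HBO}] \\ \Delta[\mathrm{HbR}] \end{pmatrix}
 &= \begin{pmatrix}
      \varepsilon_{\mathrm{HBO}}(695) & \varepsilon_{\mathrm{HbR}}(695) \\
      \varepsilon_{\mathrm{HBO}}(830) & \varepsilon_{\mathrm{HbR}}(830)
    \end{pmatrix}^{-1}
    \begin{pmatrix}
      \Delta OD(695) / \bigl(d\,\mathrm{DPF}(695)\bigr) \\
      \Delta OD(830) / \bigl(d\,\mathrm{DPF}(830)\bigr)
    \end{pmatrix}
\end{aligned}
```

Only the first component, Δ[HBO], enters the analyses described below.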

Imaging data analysis

Synchronization.

During preprocessing, the data at the beginning and end of each task session were removed, leaving 500 s of task data. Wavelet transform coherence (WTC) was used to assess the relationship between the fNIRS signals of the two participants in each pair (Torrence and Compo, 1998). As described previously (Cui et al., 2012), WTC measures the cross-correlation between two time series as a function of both frequency and time (for details, see Grinsted et al., 2004); we used the wavelet coherence MATLAB package of Grinsted et al. (2004). Specifically, for each channel of each pair during f2f_d, two simultaneously recorded HBO time series were obtained, and WTC was applied to them to generate a 2-D (frequency × time) coherence map. Cui et al. (2012) reported that coherence is higher when partners perform a cooperative task than when they perform no task (i.e., in the resting state). Following the same approach, the coherence values were averaged over the 0.01–0.1 Hz band to remove high- and low-frequency noise and were then averaged over time. The same procedure was applied to the other conditions (f2f_m, b2b_d, b2b_m, and resting state).
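The band- and time-averaging step can be sketched in Python as follows. The sketch assumes the 2-D coherence map has already been computed (e.g., with the wavelet coherence package cited above) and is available as an array with one row per frequency. `band_time_average` is a hypothetical helper, and the unweighted mean over the in-band rows is our assumption, since the source does not say how frequencies were weighted.

```python
import numpy as np


def band_time_average(coherence, freqs, f_lo=0.01, f_hi=0.1):
    """Average a wavelet-coherence map over a frequency band, then over time.

    coherence : array of shape (n_freqs, n_times) with WTC values in [0, 1]
    freqs     : array of shape (n_freqs,) giving each row's frequency in Hz
                (if the map is indexed by period, use freqs = 1 / period)
    Returns the band-averaged time course and its time average.
    """
    coherence = np.asarray(coherence, dtype=float)
    freqs = np.asarray(freqs, dtype=float)
    in_band = (freqs >= f_lo) & (freqs <= f_hi)
    if not in_band.any():
        raise ValueError("no frequencies fall inside the requested band")
    band_course = coherence[in_band].mean(axis=0)  # one value per time point
    return band_course, float(band_course.mean())


# Toy map: 0.5 inside the 0.01-0.1 Hz band, 0.9 outside it; 5000 samples = 500 s at 10 Hz.
freqs = np.array([0.005, 0.02, 0.05, 0.08, 0.3])
coh = np.full((freqs.size, 5000), 0.9)
coh[1:4] = 0.5
course, value = band_time_average(coh, freqs)
```

Applying the same function to the resting-state map gives the baseline value for each channel and pair.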

The averaged coherence value in the resting-state condition was subtracted from that of each communication condition, and the difference was used as an index of the increase in neural synchronization between the partners. For each channel, the synchronization increase was converted into a z value, and a one-sample t test on these z values across participant pairs was used to generate a t-map of neural synchronization [p < 0.05, corrected for false discovery rate (FDR)]. The t-map was smoothed using spline interpolation.
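The channel-wise statistics can be sketched as below. Two details are our assumptions rather than information given in the text: the "z value" is taken to be a Fisher r-to-z (arctanh) transform applied to the time-averaged coherence values before subtracting rest from task, and FDR control uses the Benjamini–Hochberg procedure. `synchronization_tmap` and `benjamini_hochberg` are hypothetical helpers; `scipy.stats.ttest_1samp` is SciPy's one-sample t test.

```python
import numpy as np
from scipy import stats


def benjamini_hochberg(pvals, q=0.05):
    """Boolean mask of hypotheses rejected by the Benjamini-Hochberg procedure at FDR q."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    # Compare the k-th smallest p value with (k / m) * q
    below = p[order] <= np.arange(1, m + 1) / m * q
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()  # largest rank meeting the criterion
        reject[order[: k + 1]] = True   # reject it and every smaller p value
    return reject


def synchronization_tmap(task_coh, rest_coh, q=0.05):
    """Channel-wise one-sample t test of the task-minus-rest synchronization increase.

    task_coh, rest_coh : (n_pairs, n_channels) time-averaged coherence, values < 1.
    Assumption: the 'z value' is the Fisher r-to-z (arctanh) transform of coherence.
    Returns t values, uncorrected p values, and the FDR-significance mask.
    """
    increase = np.arctanh(np.asarray(task_coh)) - np.arctanh(np.asarray(rest_coh))
    t, p = stats.ttest_1samp(increase, popmean=0.0, axis=0)
    return t, p, benjamini_hochberg(p, q)
```

Recent SciPy versions also provide `scipy.stats.false_discovery_control`, which returns BH-adjusted p values instead of a mask.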

Validation of the synchronization.

To verify that the neural synchronization increase was specific to the pairs who actually communicated, the data from the 20 participants were randomly re-paired so that each participant was matched with a new partner with whom he or she had not communicated during the tasks. The analysis described above was then applied to these new pairs. No significant synchronization increase was expected for any of the four communication conditions.
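The re-pairing control can be sketched with simple rejection sampling. The source does not describe how the random pairs were drawn, so this is one straightforward option; it assumes, hypothetically, that participants are indexed so that the original pairs are (0, 1), (2, 3), and so on.

```python
import random
from typing import List, Tuple


def random_repairing(n_participants: int, rng: random.Random) -> List[Tuple[int, int]]:
    """Randomly pair participants so that no one keeps his or her original partner.

    Assumes original pairs are (0, 1), (2, 3), ... (hypothetical indexing).
    Shuffles and retries until no original pair is reproduced.
    """
    if n_participants % 2:
        raise ValueError("need an even number of participants")
    original = {frozenset((i, i + 1)) for i in range(0, n_participants, 2)}
    ids = list(range(n_participants))
    while True:
        rng.shuffle(ids)
        pairs = [(ids[i], ids[i + 1]) for i in range(0, n_participants, 2)]
        if not any(frozenset(pair) in original for pair in pairs):
            return pairs


pairs = random_repairing(20, random.Random(1))
```

With 20 participants, more than half of all random shuffles contain no original pair, so the loop typically finishes after a few attempts.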

Contribution to the synchronization.

Channels showing a significant synchronization increase during f2f_d relative to the other types of communication were selected for further analysis to examine whether face-to-face interactions between the partners contributed to the neural synchronization. First, to align the coherence time points with the video frames, the time course of coherence values was downsampled to 1 Hz. Second, for each pair, the videos of f2f_d and b2b_d were coded: the time points at which the partners interacted, through turn-taking or body language (orofacial movements, facial expressions, and gestures), were marked. Coherence values at marked and unmarked time points were then averaged separately to obtain two indexes: synchronization during interaction (SI) and synchronization not occurring during interaction (SDI). Finally, SI and SDI were compared across pairs using a paired t test for each of the two tasks.
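A minimal sketch of the SI/SDI computation for one pair follows. It assumes block averaging as the downsampling method (the text does not specify one) and interaction times coded as whole seconds; across pairs, `scipy.stats.ttest_rel` then gives the paired comparison. The helper names are hypothetical.

```python
import numpy as np
from scipy import stats


def downsample_to_1hz(course, fs=10):
    """Block-average a coherence time course sampled at fs Hz down to 1 Hz."""
    course = np.asarray(course, dtype=float)
    n_sec = course.size // fs  # drop any incomplete final second
    return course[: n_sec * fs].reshape(n_sec, fs).mean(axis=1)


def si_sdi(course_1hz, interaction_seconds):
    """Mean coherence at coded interaction seconds (SI) and at all other seconds (SDI)."""
    mask = np.zeros(course_1hz.size, dtype=bool)
    mask[np.asarray(interaction_seconds, dtype=int)] = True
    return course_1hz[mask].mean(), course_1hz[~mask].mean()


def compare_si_sdi(si_values, sdi_values):
    """Paired t test of SI versus SDI across pairs (one value of each per pair)."""
    return stats.ttest_rel(si_values, sdi_values)
```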

Prediction of communicating behavior.

We then examined whether communicating behavior during f2f_d could be predicted from neural synchronization. Equal numbers of SI and SDI data points were randomly selected from the identified CHs. The coherence value served as the classification feature, and the SI and SDI marks served as the class labels. Fisher linear discriminant analysis was used and validated with leave-one-out cross-validation: for N samples, the classifier is trained N times, each time omitting a different sample from training and then using that sample to test the model and compute the prediction accuracy (Zhu et al., 2008). Sensitivity was defined as the proportion of SI points correctly predicted, specificity as the proportion of SDI points correctly predicted, and the generalization rate as the overall proportion of correctly predicted points.
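The classification step can be sketched with a from-scratch two-class Fisher linear discriminant and a leave-one-out loop in NumPy. This is an illustration under our own assumptions (equal class priors, a decision threshold halfway between the projected class means, and a tiny ridge term for numerical stability), not the authors' code. With a single feature, the coherence value, the discriminant reduces to a threshold on coherence.

```python
import numpy as np


def fisher_lda_fit(X, y):
    """Fit a two-class Fisher linear discriminant.

    X : (n_samples, n_features) features, here the coherence value(s)
    y : labels, 1 = SI (interaction), 0 = SDI (no interaction)
    Returns the projection vector w and the decision threshold.
    """
    X1, X0 = X[y == 1], X[y == 0]
    m1, m0 = X1.mean(axis=0), X0.mean(axis=0)
    # Within-class scatter matrix, summed over both classes
    Sw = (X1 - m1).T @ (X1 - m1) + (X0 - m0).T @ (X0 - m0)
    Sw += 1e-9 * np.eye(X.shape[1])  # tiny ridge keeps Sw invertible (our assumption)
    w = np.linalg.solve(Sw, m1 - m0)
    # Equal priors assumed: threshold halfway between the projected class means
    threshold = 0.5 * (w @ m1 + w @ m0)
    return w, threshold


def fisher_lda_predict(X, w, threshold):
    """Label a sample 1 (SI) if its projection exceeds the threshold, else 0 (SDI)."""
    return (X @ w > threshold).astype(int)


def loocv_fisher(X, y):
    """Leave-one-out cross-validation; returns sensitivity, specificity, generalization rate."""
    X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=int)
    n = y.size
    pred = np.empty(n, dtype=int)
    for i in range(n):
        train = np.arange(n) != i  # omit sample i from training
        w, thr = fisher_lda_fit(X[train], y[train])
        pred[i] = fisher_lda_predict(X[i : i + 1], w, thr)[0]  # test on sample i
    sensitivity = np.mean(pred[y == 1] == 1)  # correctly predicted SI points
    specificity = np.mean(pred[y == 0] == 0)  # correctly predicted SDI points
    generalization = np.mean(pred == y)       # all correctly predicted points
    return sensitivity, specificity, generalization


# Usage with balanced toy data (equal numbers of SI and SDI points; values are made up).
rng = np.random.default_rng(0)
X = np.concatenate([rng.normal(0.6, 0.05, 50), rng.normal(0.4, 0.05, 50)])[:, None]
y = np.array([1] * 50 + [0] * 50)
sens, spec, gen = loocv_fisher(X, y)
```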

Results

Synchronization during communication

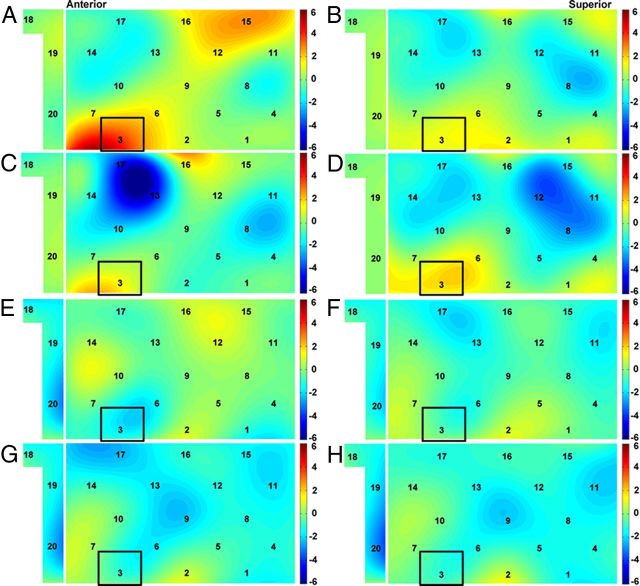

During f2f_d, synchronization in CH3 was significantly higher than during the resting state, indicating a neural synchronization increase in CH3 (Fig. 2A). As shown in Figure 1C, CH3 covered the left IFC.

Figure 2.

Neural synchronization increase. t-maps are for the original pairs (A–D), random pairs (E–H), face-to-face dialog (A, E), face-to-face monologue (B, F), back-to-back dialog (C, G), and back-to-back monologue (D, H). The warm and cold colors indicate increases and decreases in neural synchronization, respectively. The black rectangle highlights CH3, showing a significant increase in neural synchronization during the face-to-face dialog.

No significant neural synchronization increase was found during b2b_d, f2f_m, or b2b_m (Fig. 2B–D). Thus, the increase in neural synchronization in the left IFC (i.e., CH3) was specific to the face-to-face dialog.

Further analysis of CH3 showed that its synchronization increase during f2f_d differed significantly from that during b2b_d (t(9) = 4.475, p = 0.002) but not from that during f2f_m (t(9) = 1.547, p = 0.156) or b2b_m (t(9) = 1.85, p = 0.097), after FDR correction at p < 0.05.

In addition, synchronization in CH13 during b2b_d was lower than during the resting state (Fig. 2C). However, after FDR correction at p < 0.05, the synchronization of CH13 during b2b_d did not differ significantly from that in any other condition (f2f_d: t(9) = 2.877, p = 0.018; f2f_m: t(9) = 1.198, p = 0.262; b2b_m: t(9) = −0.474, p = 0.647).

Validation of the synchronization

No CHs showed a significant increase in neural synchronization between the randomly paired participants under any of the four communication conditions (Fig. 2E–H).

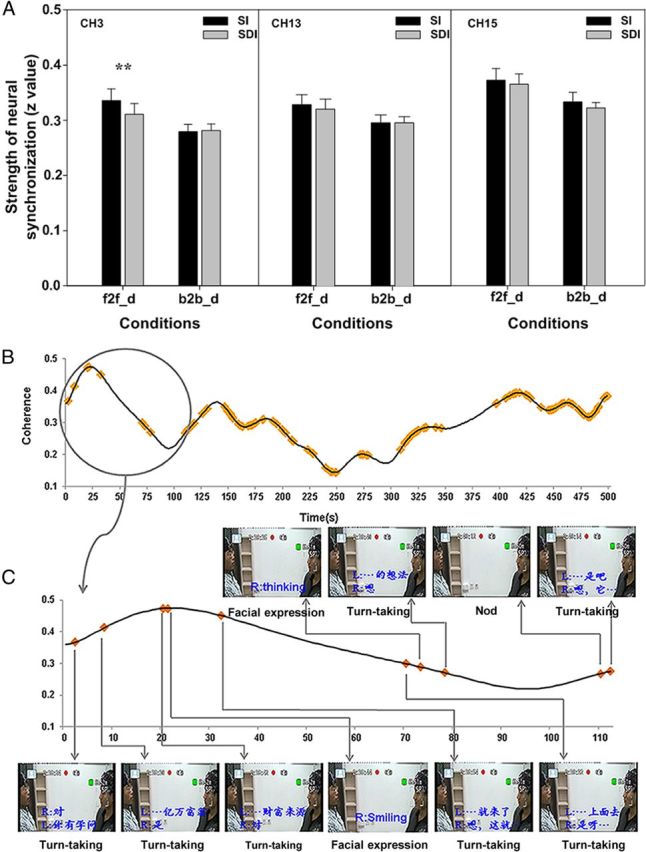

Contribution to the synchronization

To determine which characteristics of f2f_d contributed to the neural synchronization in the left IFC (i.e., CH3), we further examined the time course of the coherence value for each pair. Two raters independently coded SI and SDI from each pair's video; interrater reliability (intraclass correlation) was 0.905 for f2f_d and 0.932 for b2b_d. Coherence differed significantly between SI and SDI during f2f_d (t(9) = 3.491, p = 0.007) but not during b2b_d (t(9) = −0.363, p = 0.725), indicating that the synchronization in the left IFC arose primarily from face-to-face interaction rather than from verbal signal transmission alone (Fig. 3A). Figure 3B illustrates the distribution of SI across the time course for a randomly selected pair. Figure 3C shows a portion of this time course together with the video images corresponding to SI; most SI events fall around the peaks or along the rising portions of the coherence time course.

Figure 3.

Contributions of nonverbal cues and turn-taking to the neural synchronization during the face-to-face and back-to-back dialogs. A, Statistical comparisons between SI and SDI. Error bars indicate SE. **p < 0.01. B, Distribution of SI (yellow points) across the entire time course of coherence values in a randomly selected pair of participants. C, A portion of the time course and the corresponding video images recorded during the experiment. The dialog content at that point is transcribed in blue. R and L, Right and left persons, respectively. The type of communication behavior is indicated in black below the image.

Two validation analyses were conducted. First, the CH3 coherence values of each pair were randomly split into two parts, and a paired t test was used to evaluate the difference between them; no significant difference was found (t(9) = −0.513, p = 0.62). Second, the SI versus SDI comparison described above was applied to two additional channels, CH13 (covering the premotor area) and CH15 (covering the left IPC); neither showed a significant difference between SI and SDI during f2f_d (CH13: t(9) = 1.004, p = 0.342; CH15: t(9) = 1.252, p = 0.242) or b2b_d (CH13: t(9) = 0.067, p = 0.948; CH15: t(9) = 1.104, p = 0.298). These results support the conclusion that the synchronization increase in the left IFC arose primarily from face-to-face interaction rather than from verbal signal transmission alone.
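The first control, a random split of each pair's CH3 coherence values into two parts compared with a paired t test, can be sketched as follows. Assigning individual time points at random to two equal halves is our reading of "randomly split", and the helper names are hypothetical.

```python
import numpy as np
from scipy import stats


def random_split_means(course, rng):
    """Randomly assign the time points of one coherence course to two equal halves
    and return the mean of each half."""
    course = np.asarray(course, dtype=float)
    idx = rng.permutation(course.size)
    half = course.size // 2
    return course[idx[:half]].mean(), course[idx[half:]].mean()


def split_half_test(courses, seed=0):
    """Paired t test between the two random halves across pairs."""
    rng = np.random.default_rng(seed)
    halves = np.array([random_split_means(c, rng) for c in courses])
    return stats.ttest_rel(halves[:, 0], halves[:, 1])
```

Because the split ignores the timing of interactions, a real SI effect should not reappear as a difference between the two halves.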

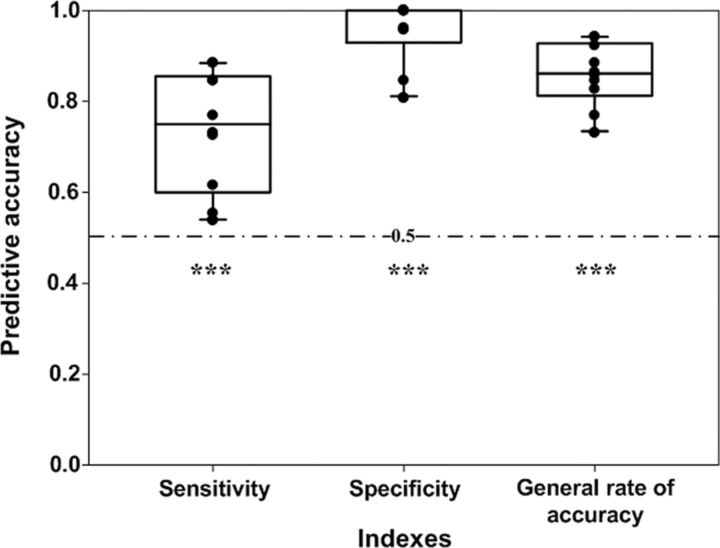

Prediction of communicating behavior

Leave-one-out cross-validation showed that the average accuracy of predicting communicating behavior during f2f_d was 0.74 ± 0.13 for sensitivity, 0.96 ± 0.07 for specificity, and 0.86 ± 0.07 for the generalization rate. All three indexes exceeded the chance level of 0.5 (sensitivity: t = 5.745, p < 0.0001; specificity: t = 20.294, p < 0.0001; generalization rate: t = 16.162, p < 0.0001; Fig. 4). These results suggest that neural synchronization can predict communicating behavior well above chance.
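As an arithmetic check, and assuming that the ± values are standard deviations across the 10 pairs and that each index was compared with 0.5 using a one-sample t test (df = 9), the reported statistics can be approximately reproduced from the rounded means and SDs; the small discrepancies fall within what rounding of the reported values allows:

```latex
t = \frac{\bar{x} - 0.5}{s/\sqrt{n}}, \quad n = 10:
\qquad
t_{\mathrm{sens}} \approx \frac{0.74 - 0.5}{0.13/\sqrt{10}} \approx 5.84,
\quad
t_{\mathrm{spec}} \approx \frac{0.96 - 0.5}{0.07/\sqrt{10}} \approx 20.78,
\quad
t_{\mathrm{gen}} \approx \frac{0.86 - 0.5}{0.07/\sqrt{10}} \approx 16.26
```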

Figure 4.

Prediction accuracy for communication behavior based on the neural synchronization of CH3 during face-to-face dialog. Each black point denotes one pair of participants. The dashed line indicates the chance level (0.5). Error bars are SE. ***p < 0.001.

Discussion

The current study examined the unique neural features of face-to-face communication compared with other types of communication. The results showed a significant increase in neural synchronization between the brains of the two partners during f2f_d but not during the other types of communication. Behavioral coupling between partners during communication, such as structural priming, is well documented: partners in a conversation align their representations by imitating each other's choice of grammatical forms (Pickering and Garrod, 2004). Recent studies suggest that behavioral synchronization between partners may rely on neural synchronization between their brains (Hasson et al., 2012). For example, successful communication between speakers and listeners produced temporally coupled neural responses, and this coupling decreased when the speaker used a language the listener did not understand (Stephens et al., 2010). Moreover, neural synchronization between brains has been confirmed in nonverbal communication paradigms (Dumas et al., 2010; De Vico Fallani et al., 2010; Schippers et al., 2010; Cui et al., 2012). Together, these findings suggest that the level of neural synchronization between brains is associated with behavioral synchronization and may underlie successful communication.

The present findings extend previous evidence by showing significant neural synchronization in the left IFC during f2f_d but not during the other types of communication. In addition to verbal signals, f2f_d involved the transmission of nonverbal signals, including orofacial movements, facial expressions, and/or gestures, which were unavailable during b2b_d. This multimodal information may facilitate the alignment of behavior between partners at various levels of communication, resulting in a higher level of neural synchronization during f2f_d (Belin et al., 2004; Corina and Knapp, 2006).

One possible explanation for this facilitation effect lies in the action–perception system (Garrod and Pickering, 2004; Rizzolatti and Craighero, 2004; Hari and Kujala, 2009). The left IFC is among the brain regions thought to contain mirror neurons (Rizzolatti and Arbib, 1998), which respond to the observation of an action, to sounds associated with that action, and even to the observation of communicative mouth gestures (Kohler et al., 2002; Ferrari et al., 2003). In the current study, no channel covering the left IPC showed a significant synchronization increase during f2f_d. This result suggests that, within such an action–perception system, the left IFC in particular may be involved (Nishitani et al., 2005) and may provide a necessary bridge for human face-to-face communication (Fogassi and Ferrari, 2007).

Further analysis revealed that the difference between f2f_d and b2b_d was driven primarily by direct interactions between partners, i.e., turn-taking and body language, rather than by verbal signal transmission alone. This finding is consistent with previous evidence on how communication is acquired. Research on infant language development has found that the native language is acquired through interactions with caregivers (Goldstein and Schwade, 2008). Such interactions help maintain proximity between caregivers and infants and reinforce the infants' earliest prelinguistic vocalizations. Thus, f2f_d offers features important for acquiring communication skills that b2b_d lacks (Goldstein et al., 2003; Goldstein and Schwade, 2008).

The importance of turn-taking during communication was further supported by the comparison between f2f_d and f2f_m: the left IFC showed a significant neural synchronization increase during f2f_d but not during f2f_m. This finding extends previous evidence of neural synchronization in unidirectional (speaker-to-listener) communication to dynamic, bidirectional communication and suggests that turn-taking, together with the other interactions during f2f_d, contributes significantly to neural synchronization between partners during real-time communication.

Based on neural synchronization, time points with and without interactive behaviors such as turn-taking and body language could be successfully distinguished. This finding further supports the view that, during face-to-face communication, multimodal sensory information integration and turn-taking contribute to neural synchronization between partners, and that communicating behavior can be predicted above chance from neural synchronization. These results may also point to a potential approach for helping children with communication disorders through neurofeedback techniques (e.g., brain–computer interfaces).

It has been suggested that the human brain is evolutionarily adapted to face-to-face communication (Boaz and Almquist, 1997; Kock, 2002). However, technologies such as the telephone and e-mail have changed the role of traditional face-to-face communication. The current study showed that, compared with other types of communication, face-to-face communication is characterized by significant neural synchronization between partners, based primarily on multimodal sensory information integration and turn-taking during dynamic communication. These findings suggest that face-to-face communication has important neural features that other types of communication lack, and that people should spend more time communicating face-to-face.

Footnotes

This work was supported by the National Natural Science Foundation of China (31270023), National Basic Research Program of China (973 Program; 2012CB720701), and Fundamental Research Funds for the Central Universities.

The authors declare no financial conflicts of interest.

References

- Belin P, Fecteau S, Bédard C. Thinking the voice: neural correlates of voice perception. Trends Cogn Sci. 2004;8:129–135. doi: 10.1016/j.tics.2004.01.008.

- Boaz NT, Almquist AJ. Biological anthropology: a synthetic approach to human evolution. Upper Saddle River, NJ: Prentice Hall; 1997.

- Bordia P. Face-to-face versus computer-mediated communication: a synthesis of the experimental literature. J Bus Commun. 1997;34:99.

- Corina DP, Knapp H. Sign language processing and the mirror neuron system. Cortex. 2006;42:529–539. doi: 10.1016/s0010-9452(08)70393-9.

- Cui X, Bryant DM, Reiss AL. NIRS-based hyperscanning reveals increased interpersonal coherence in superior frontal cortex during cooperation. Neuroimage. 2012;59:2430–2437. doi: 10.1016/j.neuroimage.2011.09.003.

- De Vico Fallani F, Nicosia V, Sinatra R, Astolfi L, Cincotti F, Mattia D, Wilke C, Doud A, Latora V, He B, Babiloni F. Defecting or not defecting: how to read human behavior during cooperative games. PLoS One. 2010;5:e14187. doi: 10.1371/journal.pone.0014187.

- Dumas G, Nadel J, Soussignan R, Martinerie J, Garnero L. Inter-brain synchronization during social interaction. PLoS One. 2010;5:e12166. doi: 10.1371/journal.pone.0012166.

- Ferrari PF, Gallese V, Rizzolatti G, Fogassi L. Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. Eur J Neurosci. 2003;17:1703–1714. doi: 10.1046/j.1460-9568.2003.02601.x.

- Flaherty LM, Pearce KJ, Rubin RB. Internet and face-to-face communication: not functional alternatives. Commun Q. 1998;46:250–268.

- Fogassi L, Ferrari PF. Mirror neurons and the evolution of embodied language. Curr Dir Psychol Sci. 2007;16:136.

- Garrod S, Pickering MJ. Why is conversation so easy? Trends Cogn Sci. 2004;8:8–11. doi: 10.1016/j.tics.2003.10.016.

- Goldstein MH, Schwade JA. Social feedback to infants' babbling facilitates rapid phonological learning. Psychol Sci. 2008;19:515–523. doi: 10.1111/j.1467-9280.2008.02117.x.

- Goldstein MH, King AP, West MJ. Social interaction shapes babbling: testing parallels between birdsong and speech. Proc Natl Acad Sci U S A. 2003;100:8030–8035. doi: 10.1073/pnas.1332441100.

- Grinsted A, Moore J, Jevrejeva S. Application of the cross wavelet transform and wavelet coherence to geophysical time series. Nonlinear Process Geophys. 2004;11:561–566.

- Grossmann T, Johnson MH, Lloyd-Fox S, Blasi A, Deligianni F, Elwell C, Csibra G. Early cortical specialization for face-to-face communication in human infants. Proc Biol Sci. 2008;275:2803–2811. doi: 10.1098/rspb.2008.0986.

- Hari R, Kujala MV. Brain basis of human social interaction: from concepts to brain imaging. Physiol Rev. 2009;89:453–479. doi: 10.1152/physrev.00041.2007.

- Hasson U, Ghazanfar AA, Galantucci B, Garrod S, Keysers C. Brain-to-brain coupling: a mechanism for creating and sharing a social world. Trends Cogn Sci. 2012;16:114–121. doi: 10.1016/j.tics.2011.12.007.

- Kock N. Evolution and media naturalness: a look at e-communication through a Darwinian theoretical lens. In: Applegate L, Galliers R, DeGross JL, editors. Proceedings of the 23rd International Conference on Information Systems. Atlanta, GA: Association for Information Systems; 2002. pp. 373–382.

- Kohler E, Keysers C, Umiltà MA, Fogassi L, Gallese V, Rizzolatti G. Hearing sounds, understanding actions: action representation in mirror neurons. Science. 2002;297:846–848. doi: 10.1126/science.1070311.

- Lu CM, Zhang YJ, Biswal BB, Zang YF, Peng DL, Zhu CZ. Use of fNIRS to assess resting state functional connectivity. J Neurosci Methods. 2010;186:242–249. doi: 10.1016/j.jneumeth.2009.11.010.

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi: 10.1038/264746a0.

- Nishitani N, Schürmann M, Amunts K, Hari R. Broca's region: from action to language. Physiology. 2005;20:60–69. doi: 10.1152/physiol.00043.2004.

- Pickering MJ, Garrod S. Toward a mechanistic psychology of dialogue. Behav Brain Sci. 2004;27:169–190; discussion 190–226. doi: 10.1017/s0140525x04000056.

- Rizzolatti G, Arbib MA. Language within our grasp. Trends Neurosci. 1998;21:188–194. doi: 10.1016/s0166-2236(98)01260-0.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annu Rev Neurosci. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- RoAne S. Face to face: how to reclaim the personal touch in a digital world. New York: Fireside; 2008.

- Schippers MB, Roebroeck A, Renken R, Nanetti L, Keysers C. Mapping the information flow from one brain to another during gestural communication. Proc Natl Acad Sci U S A. 2010;107:9388–9393. doi: 10.1073/pnas.1001791107.

- Stephens GJ, Silbert LJ, Hasson U. Speaker-listener neural coupling underlies successful communication. Proc Natl Acad Sci U S A. 2010;107:14425–14430. doi: 10.1073/pnas.1008662107.

- Torrence C, Compo GP. A practical guide to wavelet analysis. Bull Am Meteorol Soc. 1998;79:61–78.

- Wilson M, Wilson TP. An oscillator model of the timing of turn-taking. Psychon Bull Rev. 2005;12:957–968. doi: 10.3758/bf03206432.

- Zhu CZ, Zang YF, Cao QJ, Yan CG, He Y, Jiang TZ, Sui MQ, Wang YF. Fisher discriminative analysis of resting-state brain function for attention-deficit/hyperactivity disorder. Neuroimage. 2008;40:110–120. doi: 10.1016/j.neuroimage.2007.11.029.