Abstract

The execution of motor behavior influences concurrent visual action observation and especially the perception of biological motion. The neural mechanisms underlying this interaction between perception and motor execution are not exactly known. In addition, the available experimental evidence is partially inconsistent because previous studies have reported facilitation as well as impairments of action perception by concurrent execution. Exploiting a novel virtual reality paradigm, we investigated the spatiotemporal tuning of the influence of motor execution on the perception of biological motion within a signal-detection task. Human observers were presented with point-light stimuli that were controlled by their own movements. Participants had to detect a point-light arm in a scrambled mask, either while executing waving movements or without concurrent motor execution (baseline). The temporal and spatial coherence between the observed and executed movements was parametrically varied. We found a systematic tuning of the facilitatory versus inhibitory influences of motor execution on biological motion detection with respect to the temporal and the spatial congruency between observed and executed movements. Specifically, we found a gradual transition between facilitatory and inhibitory interactions for decreasing temporal synchrony and spatial congruency. This result provides evidence for a spatiotemporally highly selective coupling between dynamic motor representations and neural structures involved in the visual processing of biological motion. In addition, our study offers a unifying explanation that reconciles contradicting results about modulatory effects of motor execution on biological motion perception in previous studies.

Introduction

Numerous theories have postulated a tight link between the perception and execution of actions, suggesting that both share common mechanisms. A part of the neural substrate of such common coding (Prinz, 1997; Hommel et al., 2001) might be formed by the “mirror neuron system.” Mirror neurons have been observed in premotor and parietal cortex of macaques (Gallese et al., 1996; Rizzolatti and Craighero, 2004) and are characterized by activation during both action observation and action execution. Comparable neural mechanisms might exist in the human cortex, for example, in the inferior frontal gyrus (IFG), the inferior parietal lobule (IPL), the medial temporal gyrus (MTG), and the superior temporal sulcus (STS), and it has been postulated that they play a critical role in action understanding (Iacoboni et al., 2001; Buccino et al., 2004a; Hamilton et al., 2006; Thornton and Knoblich, 2006; Lestou et al., 2008) and potentially also in social cognition (Gallese et al., 2004).

Consistent with a bidirectional relationship between action perception and execution, behavioral studies have shown disturbed arm movements caused by simultaneous observations of incongruent arm movements of others (Kilner et al., 2003). Likewise, self-generated movements influence the sensations of movements executed by others. However, the relevant literature on this “perceptual resonance” (Schütz-Bosbach and Prinz, 2007) is inconsistent, reporting facilitation of visual (Wohlschläger, 2000; Craighero et al., 2002; Miall et al., 2006) and even of auditory (Repp and Knoblich, 2007) sensations by concurrent motor execution, as well as demonstrating action-induced deterioration of perceptual judgments (Müsseler and Hommel, 1997; Hamilton et al., 2004; Jacobs and Shiffrar, 2005; Zwickel et al., 2010). Apart from methodological differences between these studies, this raises the question whether the same mechanism might account for facilitatory and inhibitory influences of action execution on perception.

Popular theories postulate that action observation involves an internal simulation of the observed behavior exploiting dynamic motor representations (Wolpert and Miall, 1996; Wolpert et al., 2003; Erlhagen et al., 2006; Kilner et al., 2007). Common to these models is the assumption that the cortex represents the dynamic evolution of actions in a form that permits predictions about the actual associated sensory signals. As a consequence, the influence of motor execution on biological motion perception should depend critically on the temporal and spatial congruency between observed and executed behavior. In the presence of such congruency, the perceived sensory signals can interact synergistically, resulting in a facilitation of action recognition compared with perception without concurrent motor behavior. Conversely, in the presence of substantial delays or strong spatial incongruence, the predictions derived from motor execution can contradict and thus compete with the actual sensory input. This effectively would impair visual recognition, resulting in inhibitory interactions between execution and perception.

To test this hypothesis, we developed a novel experimental paradigm combining real-time full-body tracking with online generation of biological motion stimuli, making the visual biological motion stimuli directly dependent on the actual behavior of the observer. We tested systematically the influence of time delays and spatial transformations between executed and observed movements on the detection of biological motion. We found consistent evidence for a well-defined spatiotemporal tuning of the influence of motor execution on biological motion perception, in which facilitation occurred only for sufficient spatial and temporal coherence between both actions, whereas substantial degrees of incoherence resulted in inhibition.

Materials and Methods

Participants.

Forty-nine participants took part in the experiments: 17 in experiment 1 (11 female, 6 male; mean age, 27.3 years; age range, 20.4–36.4 years), 15 in experiment 2 (7 female, 8 male; mean age, 24 years; age range, 20.42–28.42 years), and 17 in experiment 3 (13 female, 4 male; mean age, 24.42 years; age range, 19.58–32.83 years). All participants had normal or corrected-to-normal vision and no motor impairment that would influence their arm movements. They were naive with regard to the purpose of the study and strongly right-handed according to the Edinburgh Handedness Inventory (Oldfield, 1971) (all laterality quotients above +80). They received payment for their participation.

Virtual reality setup.

We recorded observers' movements using a Vicon 612 motion capture system (Vicon) with eight cameras at a sampling frequency of 120 Hz. Five reflecting markers were attached to the participant's arm with double-sided adhesive tape. These markers corresponded to the major joints and the centers of the adjacent limbs (upper and lower arm). Commercial software was used to reconstruct and label these markers with spatial three-dimensional reconstruction errors below 1.5 mm. We developed custom software to read out the marker positions in real time and to generate the visual stimulus with a closed-loop delay of ∼30 ms.

Visual stimuli.

We presented waving arms as point-light stimuli (Johansson, 1973) consisting of five black signal dots on a gray background. These stimuli were embedded in a camouflaging noise mask. The noise dots were identical to the signal dots and were created by “scrambling” of point-light stimuli (Cutting et al., 1988), in which the trajectories were derived from the waving movements in previous trials. Scrambling was accomplished by retaining the original dot trajectories but randomizing the average position of each individual dot and its start phase within one cycle of the periodic movement. Scrambling destroys the global structure of the point-light movements but preserves average local motion energy. To match the statistical properties of the noise points optimally with those of the target point lights, we generated separate sets of scrambled stimuli for each test condition.
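The scrambling step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the software used in the study: the function name `scramble`, the trajectory layout (one list of (x, y) samples per dot, covering one cycle of the periodic movement), and the mask bounds `width` and `height` are hypothetical.

```python
import random

def scramble(trajectories, width, height, rng=None):
    """Return scrambled copies of periodic dot trajectories.

    trajectories: list of dots; each dot is a list of (x, y) samples
    spanning one cycle of the periodic movement (assumed layout).
    width, height: extent of the noise mask (hypothetical units).
    """
    rng = rng or random.Random()
    scrambled = []
    for traj in trajectories:
        n = len(traj)
        # Average position of the original dot trajectory.
        mean_x = sum(x for x, _ in traj) / n
        mean_y = sum(y for _, y in traj) / n
        # Randomize the average position within the mask.
        new_x = rng.uniform(0.0, width)
        new_y = rng.uniform(0.0, height)
        # Randomize the start phase by a cyclic shift within one cycle.
        shift = rng.randrange(n)
        shifted = traj[shift:] + traj[:shift]
        # Retain the trajectory shape; only its average position changes.
        scrambled.append(
            [(x - mean_x + new_x, y - mean_y + new_y) for x, y in shifted]
        )
    return scrambled
```

Because each dot keeps its own trajectory shape, the frame-to-frame displacements of the individual dots are unchanged, whereas the spatial and phase relationships between dots, and thus the global arm structure, are lost.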

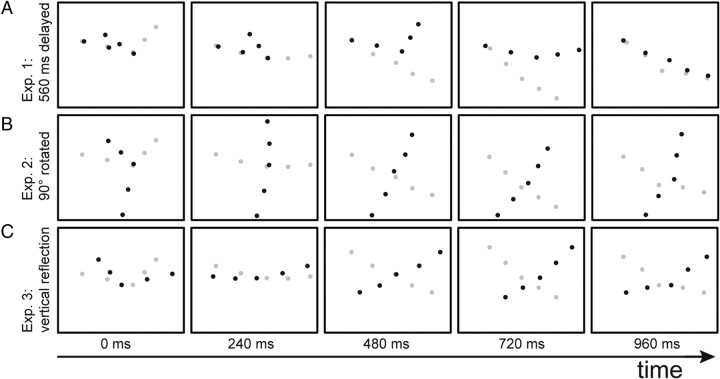

Approximately 60–66% of the trials were signal trials that contained the point-light arm and the noise mask. In the remaining trials, only the noise mask was presented, in which the number of noise dots was increased by five to balance the number of dots in trials with and without the target arm. The number of noise dots varied between 15 and 125 in seven (experiment 1) or five (experiments 2 and 3) equidistant steps. The noise mask subtended 25.4 × 19.2° of visual angle. The target arm was controlled by the actual movement of the observer, including different transformations. It was presented at random positions within the mask with a size of 7.2 × 5.7°. In a subset of trials, the actually executed movement was subject to additional transformations (inclusion of delays and spatial alterations) before the two-dimensional movements of the point lights were determined by projection from the marker trajectories. These spatial and temporal transformations of the visual stimulus compared with the executed arm movement are illustrated schematically in Figure 1. The black dots indicate the displayed motion, and the gray dots show the actual position of the subject's arm.

Figure 1.

Applied stimulus transformations. Detection target (point-light stimulus with five dots showing a waving arm) is illustrated by the black dots. Gray dots indicate the true arm movement. A, Time delay (560 ms) between visual stimulus and actual executed movement (see experiment 1). B, Visual stimulus rotated by 90° counterclockwise in the image plane (experiment 2). C, Arm movement reflected vertically (experiment 3).
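The three transformations in Figure 1 can be sketched in a few lines. This is an illustrative sketch only: the names `delay_frames`, `DelayLine`, `rotate_ccw`, and `reflect_about_vertical_axis` are hypothetical, the y axis is assumed to point upward, and the choice of rotation center and reflection axis is left to the caller because the text does not specify them. Only the 120 Hz sampling rate is taken from the setup description.

```python
import math
from collections import deque

SAMPLE_RATE_HZ = 120  # motion-capture sampling rate reported in the text

def delay_frames(delay_ms, rate_hz=SAMPLE_RATE_HZ):
    """Whole number of frames closest to the requested delay."""
    return round(delay_ms * rate_hz / 1000.0)

class DelayLine:
    """FIFO buffer that outputs each frame n_frames samples late."""

    def __init__(self, n_frames):
        self.buffer = deque(maxlen=n_frames + 1)

    def push(self, frame):
        self.buffer.append(frame)
        # Until the buffer has filled, the oldest available frame is repeated.
        return self.buffer[0]

def rotate_ccw(points, angle_deg, center):
    """Rotate 2D points counterclockwise about center (y axis pointing up)."""
    a = math.radians(angle_deg)
    cx, cy = center
    return [
        (cx + (x - cx) * math.cos(a) - (y - cy) * math.sin(a),
         cy + (x - cx) * math.sin(a) + (y - cy) * math.cos(a))
        for x, y in points
    ]

def reflect_about_vertical_axis(points, axis_x):
    """Mirror 2D points about the vertical line x = axis_x."""
    return [(2.0 * axis_x - x, y) for x, y in points]
```

At 120 Hz, a 560 ms delay corresponds to about 67 frames and a 280 ms delay to about 34 frames, so delays of this size can be approximated to within one sample period (about 8 ms) by buffering whole frames.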

Experimental procedure.

Subjects sat 1.5 m in front of a projection screen. Each experiment started with a short training period during which the subjects familiarized themselves with point-light stimuli by watching a waving arm, which was based on recorded movements from previous trials. Subsequently, participants practiced waving their arms with a constant frequency of ∼30 full waving cycles per minute. A single waving movement lasted for ∼2 s, matching approximately the presentation time of the visual stimuli.

The main experiment comprised a sequence of alternating test and baseline blocks. In the test blocks, subjects simultaneously waved their right arm (respectively, the left arm in experiment 3) with the trained frequency while observing the visual stimuli. Each trial started with a green fixation point on a uniform gray background. After 500 ms, the fixation point turned black, serving as cue for the initiation of the waving movement. The stimulus itself appeared 1000 ms after movement onset and lasted for 2000 ms while the participants continuously moved their arms. Arm movements of the target trials were recorded for a post hoc analysis and for the generation of the stimuli in the baseline blocks. In the baseline blocks, subjects observed the same stimuli, this time without executing any concurrent motor behavior.

The instruction for the detection task was the same during all blocks and for all experiments. Participants had to detect any waving point-light arm regardless of whether they thought the displayed limb to be associated with their own motion or not. They learned to imagine the stimulus as representing a waving movement of someone sitting in front of them with his back turned toward them. They did not know that they steered the visual stimulus, and only ∼40–50% of the subjects became aware of this fact during the experiment. After each trial, the participants reported verbally whether they had observed a waving arm movement in the stimulus, and the next trial started immediately after the experimenter had noted the response.

The degree to which the individual participants became aware of the fact that they controlled the stimulus was assessed using the following protocol after the experiment. First, the experimenter encouraged the participant to report spontaneously his impressions of the experiment. If the participant did not report any sensation of a connection between the executed motion and the visual stimulus, the experimenter started to ask the following questions: (1) “Did you have the impression that your performance varied during blocks?” (2) “Was it easier for you to detect the arm with or without waving your arm simultaneously?” (3) “Can you explain why?” Finally, participants were directly asked the following: (4) “Did you have the impression that you tried to match your arm movement to the stimulus or that somehow the stimulus corresponded to your own movement?” Based on the answers to these questions, participants were divided into three groups. Participants that did not report the impression that they controlled the stimulus were classified as “nonrecognizers.” Otherwise, they were asked additionally when during the experiment they became aware that they controlled the stimulus. Based on this response, the remaining subjects were divided into “early recognizers” (those who became aware of this fact in the first half of the experiment) and “late recognizers” (those who became aware of the stimulus control in the second half of the experiment). The results of these three subgroups were first analyzed separately to test whether the awareness of the possibility to control the stimulus had any influence on the result. In this way, we can rule out that the observed differences might reflect high-level cognitive strategies that participants apply once they notice that they are in control of the visual stimulus.

Assessment of detection performance.

Detection performance was assessed separately for each subject. Every testing and baseline condition was assigned one noise tolerance value (NTV), indicating the maximum number of noise dots in the mask that on average would lead to >75% correct detections. To calculate the NTV, in a first step, d′ values were computed separately for the seven (experiment 1) and five (experiments 2 and 3) tested noise levels. The measured d′ values were normalized within each individual subject by dividing them by the maximum value over all conditions. The resulting normalized values were fitted by a logistic function f. The noise tolerance value was then defined as the number of noise dots that fulfilled the equation f(NTV) = 0.75.
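The steps of this analysis can be sketched as follows. The sketch rests on several assumptions that the text does not specify: the clipping of hit and false-alarm rates, the two-parameter form of the logistic function, and the fitting method (linear regression on logit-transformed values) are illustrative choices, and all function names are hypothetical.

```python
import math
from statistics import NormalDist

def d_prime(hits, n_signal, false_alarms, n_noise):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate)."""
    # Keep rates away from 0 and 1 so the z-transform stays finite
    # (assumed correction; the text does not say how this was handled).
    hit_rate = min(max(hits / n_signal, 0.5 / n_signal), 1.0 - 0.5 / n_signal)
    fa_rate = min(max(false_alarms / n_noise, 0.5 / n_noise), 1.0 - 0.5 / n_noise)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)

def normalize(dprimes_by_condition):
    """Divide every d' of one subject by the maximum over all conditions."""
    peak = max(max(values) for values in dprimes_by_condition.values())
    return {cond: [d / peak for d in values]
            for cond, values in dprimes_by_condition.items()}

def fit_logistic(noise_levels, values, eps=0.01):
    """Fit f(n) = 1 / (1 + exp(-(a + b * n))) by regression on logits."""
    logits = []
    for v in values:
        v = min(max(v, eps), 1.0 - eps)  # clip so the logit is finite
        logits.append(math.log(v / (1.0 - v)))
    mean_n = sum(noise_levels) / len(noise_levels)
    mean_l = sum(logits) / len(logits)
    b = (sum((n - mean_n) * (l - mean_l) for n, l in zip(noise_levels, logits))
         / sum((n - mean_n) ** 2 for n in noise_levels))
    a = mean_l - b * mean_n
    return a, b

def noise_tolerance(a, b, criterion=0.75):
    """Solve f(NTV) = criterion for the fitted logistic function."""
    return (math.log(criterion / (1.0 - criterion)) - a) / b
```

For one subject and one condition, the d′ values at the tested noise levels would be computed with `d_prime`, normalized together with the values of all other conditions, fitted with `fit_logistic`, and converted into an NTV with `noise_tolerance`. A nonlinear least-squares or maximum-likelihood fit would be an equally plausible choice for the fitting step.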

Finally, to aid the comparison between the different conditions and to assess facilitating versus impeding effects, we computed a recognition index (RI) from the NTV of each condition. We defined this index as the logarithm of the ratio of the NTVs for the testing condition and the baseline condition without motor execution:

$$\mathrm{RI} = \log\left(\frac{\mathrm{NTV}_{\mathrm{test}}}{\mathrm{NTV}_{\mathrm{baseline}}}\right)$$

Positive values of this index indicate a facilitation of action recognition by the concurrent motor execution, whereas negative values correspond to interference between action execution and biological motion detection.
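As a brief worked illustration of the sign convention, the index can be computed as below. The function name `recognition_index` and the example NTVs are hypothetical, and the natural logarithm is an assumption because the base is not stated; the sign of the index does not depend on the base.

```python
import math

def recognition_index(ntv_test, ntv_baseline):
    """Logarithm of the ratio of test NTV to baseline NTV (natural log assumed)."""
    return math.log(ntv_test / ntv_baseline)

# Hypothetical NTVs (numbers of noise dots), chosen only for illustration.
facilitation = recognition_index(60.0, 50.0)   # test tolerates more noise: RI > 0
interference = recognition_index(40.0, 50.0)   # test tolerates less noise: RI < 0
no_effect = recognition_index(50.0, 50.0)      # equal tolerance: RI = 0
```

A useful property of the logarithmic ratio is its symmetry: doubling and halving the noise tolerance relative to baseline yield indices of equal magnitude and opposite sign.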

Results

Experiment 1: time delays between observed and executed action

In this experiment, we tested the influence of temporal synchrony between the visually presented and the executed actions by introduction of delays between the visual stimulus and the executed motor acts.

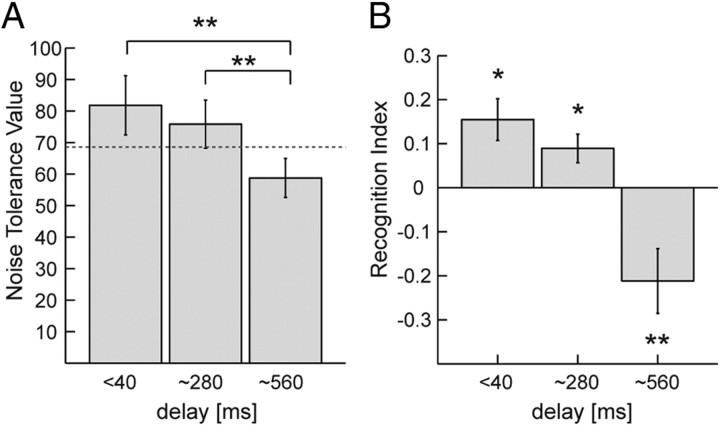

The results of the visual detection task are illustrated in Figure 2. Figure 2A shows the detectability, in terms of the NTV, as a function of the delay. The dashed line indicates the average NTV for trials without concurrent motor execution, providing a baseline for the assessment of facilitating and impeding influences of motor execution on action perception.

Figure 2.

Influence of time delays on the facilitation of biological motion perception by concurrent motor execution. A, NTV, resulting in 75% correct detections, as function of the temporal delay between the visual stimulus and the executed movements. Error bars indicate SEs. *p < 0.05; **p < 0.01, significant pairwise differences. The dashed line indicates the noise tolerance value for trials without motor execution (baseline). B, Recognition index computed from the NTVs whose sign indicates facilitation versus interference between visual perception and motor execution. *p < 0.05; **p < 0.01, significantly different from zero. Error bars indicate SEs.

To rule out a possible influence of the participants' conscious awareness that they controlled the stimuli by their own movements, we performed a mixed-factor ANOVA that included the additional between-subject factor awareness (nonrecognizers, late recognizers, and early recognizers). The NTV decayed significantly with the delay (ANOVA, F(2,28) = 11.812, p < 0.001). Specifically, the NTV for the long delay (560 ms) was significantly lower than for the smaller delays (p < 0.001 for both cases, Bonferroni corrected) and for the baseline condition (p = 0.038, Bonferroni corrected). This decay occurred independently of the participants' awareness, as supported by a nonsignificant interaction between delay and awareness in the mixed-factor ANOVA (p = 0.796). In addition, the comparison of the different awareness groups using a Kruskal–Wallis test did not reveal significant differences in the NTVs among the three groups for either the test or the baseline condition (all p > 0.05).

A more compact illustration of these results, the RI for the three testing conditions, is depicted in Figure 2B. The RIs show a significant decay with increasing delay between the executed action and the visual stimulus (ANOVA, F(2,28) = 14.816, p = 0.001). Also for the RI, we failed to find significant differences between the subgroups with different levels of awareness of being in control of the visual stimulus, indicated by a nonsignificant interaction between delay and awareness in the mixed-factor ANOVA (p = 0.569; Kruskal–Wallis tests, all p > 0.5; post hoc comparisons, all p = 1.0, Bonferroni corrected) (for an illustration of the RIs of the individual subgroups, see supplemental information, available at www.jneurosci.org as supplemental material). Visual stimuli with small delays relative to the executed action are characterized by significant facilitation of biological motion detection by action execution (RI > 0; one-sample, two-tailed t test for delay < 40 ms: t(16) = 2.85, p = 0.012, and for 280 ms: t(16) = 2.68, p = 0.016). However, for large delays (560 ms), self-generated movements interfered significantly with the visual perception of biological motion, indicated by a significant negative RI (one-sample, two-tailed t test, t(16) = −2.91, p = 0.01).
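The one-sample t statistic used to test whether the RI differs from zero can be computed as sketched below. The function name `one_sample_t` and the example values are hypothetical and are not data from the study; the sketch returns only the statistic and its degrees of freedom.

```python
import math
from statistics import mean, stdev

def one_sample_t(values, popmean=0.0):
    """t statistic and degrees of freedom for a one-sample t test."""
    n = len(values)
    standard_error = stdev(values) / math.sqrt(n)  # stdev uses n - 1
    t = (mean(values) - popmean) / standard_error
    return t, n - 1

# Hypothetical per-participant RIs for one delay condition (not study data).
example_ri = [0.12, 0.30, -0.05, 0.21, 0.08, 0.17, 0.02, 0.25]
t_value, dof = one_sample_t(example_ri)
```

With 17 participants, the test has 16 degrees of freedom, which matches the t(16) values reported for experiment 1. The two-tailed p value follows from the t distribution with that many degrees of freedom, for example via `scipy.stats.ttest_1samp` where SciPy is available.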

Summarizing, concurrent motor execution facilitated the visual detection of biological motion stimuli only if the stimulus motion was in approximate synchrony with the motor behavior. In contrast, the introduction of substantial delays between the observed and the executed movements resulted in a reduction of detection performance compared with perception without concurrent motor task. This indicates that synchrony is a crucial factor for the facilitation of biological motion recognition by action execution.

Experiment 2: rotation in the image plane

With the second experiment, we addressed the question how the influence of self-generated movements on biological motion detection is modulated by varying the congruence of the spatial frame of reference of the visual stimulus and the body axis of the observer. This question was motivated by previous studies showing that the perception of biological motion is strongly altered by rotations of the stimulus in the image plane (Sumi, 1984; Pavlova and Sokolov, 2000; Grossman and Blake, 2001), suggesting that perception might depend on the retinal frame of reference (Troje, 2003). To test the influence of the spatial congruency between visual stimulus and the executed motor behavior parametrically, we introduced various degrees of spatial mismatch by rotating the visual stimulus by 45, 90, and 135° counterclockwise in the image plane.

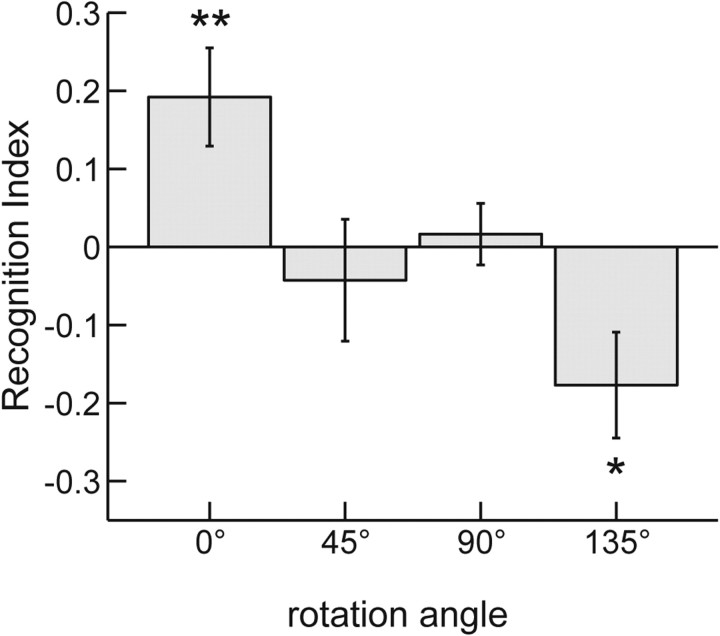

The RI as a function of the rotation angle is shown in Figure 3. The RI decays strongly and significantly with the rotation angle (ANOVA, F(3,42) = 6.376, p = 0.001). Consistent with experiment 1, without rotation (0°), we found significant facilitation, i.e., a positive RI (one-sample, two-tailed t test, t(14) = 3.056, p = 0.009). For intermediate rotation angles of 45° or 90°, the RI did not differ significantly from zero, indicating neither facilitation nor interference. However, for the rotation angle of 135°, we observed a significant negative RI (one-sample, two-tailed t test, t(14) = −2.610, p = 0.021), implying interference between motor execution and the visual detection of biological motion. As in the previous experiment, the degree of participants' awareness of the stimulus control did not have any significant influence on the results. We failed to find differences between the groups with different levels of awareness (Kruskal–Wallis test, all p > 0.1; post hoc pairwise comparisons, all p > 0.8, Bonferroni corrected) (for an illustration of this result, see supplemental information, available at www.jneurosci.org as supplemental material).

Figure 3.

Influence of rotations of the stimulus in the image plane. The RI is shown in dependence of the rotation angle of the visual stimulus. Error bars indicate SEs. **p < 0.01; *p < 0.05, significant positive and negative values of the RI. The facilitation observed for spatial coherence (0°) ceases for larger rotation angles, and the largest rotation angle even induces interference between motor execution and visual detection.

We failed to observe a significant dependence of the NTV on the rotation angle (ANOVA, F(3,42) = 1.615, p = 0.2) in the baseline condition (see supplemental information, available at www.jneurosci.org as supplemental material). This implies that, for waving arms, there is no “inversion effect,” i.e., an increased recognizability of upright stimuli, as has been repeatedly observed for complete point-light walkers. In addition, this observation rules out that the rotation dependence observed in the presence of concurrent motor execution can be explained by the classical inversion effect in biological motion. Instead, this rotation dependence seems to be induced by the misalignment between the spatial frame of reference of the visual stimulus and the frame of reference of motor execution.

Summarizing, this experiment shows that not only temporal but also spatial congruency between the visual stimulus and the executed movement seems to be a key factor for a facilitatory influence of motor execution on biological motion detection.

Experiment 3: switching of the corresponding body side

Previous experiments might be confounded by the possibility that subjects might be able to match simple features of executed and observed movements, such as the rhythm or the turning points. Such simple matching strategies would not imply a specific processing or even simulation of body movements, as assumed by many theories for action recognition by motor resonance (Gallese et al., 1996; Buccino et al., 2004a; Rizzolatti and Craighero, 2004; Borroni et al., 2008). To exclude such simple feature matching strategies, we tested whether the observed facilitation of visual detection by motor execution was critically dependent on whether the body sides of executed and perceived movements were matched. Simple strategies, such as the detection of turning points, would still work if the stimulus is mirror-reflected about its vertical axis. If, however, the observed facilitation exploits a mechanism that establishes a spatial matching between executed and observed movement, facilitation should be abolished if, for example, the movement is executed with the right arm while the participants visually perceive a left arm. To test the importance of the matching of body side, we compared the responses for normal visual stimuli and stimuli that were reflected about the vertical axis (cf. Fig. 1).

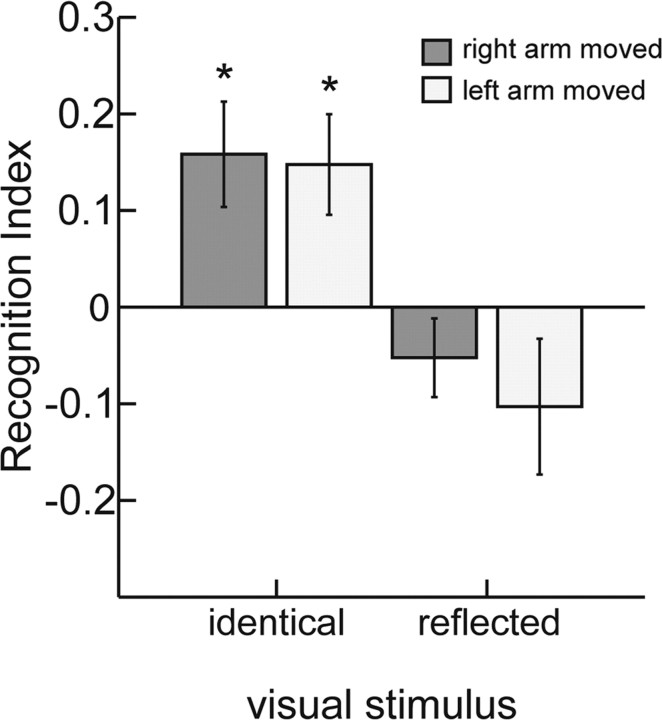

To test for potential biases for body side, we tested motor execution for both body sides. Participants had to execute movements with either the right or the left arm. For both execution conditions, an equal number of visual stimuli was presented that showed the arm movement on the same body side (identical) and on the opposite side (reflected). Reflection of the visual stimuli should destroy the spatial congruency between motor execution and the visual stimulus. In addition, it should abolish the influence of proprioceptive feedback from the motor execution, because this feedback should be specific for the body side.

The RIs from this experiment are shown in Figure 4. A mixed-factor ANOVA revealed a significant main effect of reflection only (F(1,14) = 23.158, p < 0.001). Neither the side of the moving arm (right or left) (F(1,14) = 0.695, p = 0.418) nor the degree of awareness of the participants concerning the stimulus control had a significant influence. Moreover, none of the interactions with those factors was significant (all p > 0.05). This implies that the effects for right and left arm movements were comparable. In addition, we failed to find any significant differences in a kinematic analysis of the executed movements of the right and the left arm. For both sides, we found significant facilitation (positive RI, one-sample, two-tailed t test: right arm, t(16) = 2.909, p = 0.01; left arm, t(16) = 2.838, p = 0.012) for the conditions in which the body side of the visual stimulus matched the side of the moving arm. At the same time, the recognition indices for the trials with mirror-reflected visual stimuli were not significantly different from zero. Once more, Kruskal–Wallis tests failed to reveal significant differences in detection performance between the different awareness groups (all p > 0.08; for illustration, see supplemental information, available at www.jneurosci.org as supplemental material).

Figure 4.

Influence of matching of the body side. Right versus left arm movements were presented visually either on the correct side (identical) or on the opposite body side (reflected). Error bars indicate SEs. *p < 0.05, significant positive and negative values of the RI. Facilitation is observed for either body side, but only if the stimulus shows a movement of the comparable body side.

The result of this experiment confirms the importance of spatial congruency between the visual stimulus and the executed movement for the facilitation of visual perception. Furthermore, it rules out, as an explanation for the observed modulatory effects, some simple matching strategies that are not specifically related to the structure of the human body. The fact that we observed this crucial role of spatial congruency for movements with either body side supports the hypothesis that motor and visual representations of human actions are coupled in a spatially highly selective manner.

Discussion

Our experiments show a temporally and spatially highly selective influence of ongoing motor behavior on the recognition of biological motion. Using a novel psychophysical paradigm that was suitable for the online control of biological motion stimuli by the actual movements of the observer, we demonstrated that biological motion detection is facilitated by concurrent motor execution only if the observed and the executed action were in approximate temporal synchrony and spatially congruent. Degradation of the spatiotemporal coherence resulted in a decay of the facilitation and often even in interference between action execution and visual detection. In addition, we were able to measure quantitatively the temporal and spatial accuracy that is required for facilitatory interaction: an increase of the noise tolerance by the concurrent motor task was only observed for time delays below 300 ms and spatial rotations of <45°.

Our results provide support for the theory that action recognition involves an interaction between dynamic representations in vision and motor control (Erlhagen et al., 2006; Kilner et al., 2007). Alternatively, the present results may be accounted for by the theory that a single dynamic representation is shared between perception and motor execution (Schaal et al., 2003; Wolpert et al., 2003; de Vignemont and Haggard, 2008). In both cases, predictions derived from dynamical states of the motor behavior modulate visual representations in a spatiotemporally specific manner. For small time delays and spatial congruency, the predicted sensory signals closely match the incoming sensory information, resulting in a facilitation of the visual recognition of the observed motor pattern. In the presence of strong time delays (for example, half of a waving cycle), however, the predicted perceptual information would be anti-cyclic with the incoming sensory information. In this case, the signal derived from motor execution would compete with the sensory input, impairing the recognition of the visual pattern. Similarly, strong spatial transformations might produce predictive signals that are incompatible with the sensory input, also resulting in interference between action execution and recognition.

In the literature, different neural structures have been discussed as possible substrate of internal forward models, which might form the basis of the modulation of sensory processing by motor execution. It has been suggested that the relevant circuit includes the mirror neuron system and specifically the premotor and prefrontal cortex and the superior temporal sulcus (Iacoboni et al., 2001; Kilner et al., 2007). Alternatively, it has been discussed that the cerebellum and the posterior parietal cortex might play a central role in the prediction of sensory consequences from ongoing movements (Miall, 2003).

As possible neural substrate for a common representation of the perception and execution of motor action in humans, some studies have postulated the “action observation network”, which includes the IFG, the IPL, the MTG, as well as the STS (Iacoboni et al., 2001; Buccino et al., 2004a,b; Thornton and Knoblich, 2006; Lestou et al., 2008). In addition, it has been argued that, specifically, the extrastriate body area, IFG, and areas in the central sulcus of the left hemisphere are involved in the manipulation of perception by concurrent motor execution (Hamilton et al., 2006).

To disentangle which of those potential correlates are critically involved in the observed facilitation and interference of action execution on action perception, additional investigations are required. Interesting insights might be derived from neurological patients with lesions in these relevant areas.

Most previous studies on motor–visual coupling in biological motion perception have provided only partial control of the exact spatiotemporal relationship between the visual stimulus and the executed motor behavior. This potentially explains the inconsistency with respect to the observation of facilitation versus interference in the previous literature. In addition, the discrepancies might also be accounted for by differences between the experimental paradigms used in these previous studies. An example is a study by Zwickel et al. (2010), who measured the modulation of directed hand movements by a simultaneously presented moving dot on a screen. These authors failed to find facilitatory interactions between observed and executed actions, or a dependence of the interaction on the similarity between executed and observed movements. A possible explanation for this discrepancy is that the point-light arms in our study might have been more efficient for the activation of dynamic body representations than a single moving dot.

The finding that detection performance was independent of whether the participants noticed that they controlled the visual stimulus shows that the interaction between motor representations and visual recognition occurs automatically, without the requirement of conscious processes, such as the imagination of the executed movements (Jeannerod and Frak, 1999). Likewise, the observed effects seem to be independent of the attribution of agency for the observed action to oneself or another agent. In addition, post hoc kinematic analyses failed to reveal significant differences for the waving amplitude and the mean velocity based on the hand trajectories between subjects of the three awareness groups (Kruskal–Wallis tests, all p > 0.05). Post hoc pairwise comparisons confirmed that none of the groups differed significantly in either amplitude or velocity of the hand from the others (all p > 0.05). This provides additional evidence against the hypothesis that awareness of the stimulus control had a critical influence on our results.

Our observation of a crucial dependence of the action perception on temporal congruency seems consistent with other studies showing that synchrony also modulates the self-attribution of observed body movements (Leube et al., 2003; Tsakiris et al., 2005; Farrer et al., 2008) and the perceived tickling sensation induced by tactile stimuli (Blakemore et al., 1999). It has been argued that the cerebellum might play a critical role in the control and perception of the timing of movements (Ivry, 1996; O'Reilly et al., 2008).

Moreover, we demonstrated, for the first time, that a decrease of spatiotemporal coherence can transform facilitatory interactions into interference between visual action recognition and action execution. An interesting question for additional studies is how biological motion perception is influenced by temporal mismatches for which the visual stimulus precedes the actual movement of the participants (negative delay). In this case, visual perception would predict the future trajectory of the arm. Such experiments would be possible using repetitive movements for which the future trajectory can be predicted from previous movement cycles.
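The idea of predicting a repetitive movement from earlier cycles can be sketched as follows. This is an illustration under strong assumptions, not a description of an implemented experiment: the movement is taken to be strictly periodic with a known period expressed in samples, and the function name `predict_ahead` and its parameters are hypothetical. Real waving movements vary from cycle to cycle, so an actual experiment would need a more robust predictor.

```python
import math


def predict_ahead(history, period_samples, lead_samples):
    """Predict the sample `lead_samples` ahead of the newest recorded sample.

    Assumes x[i] == x[i - period_samples] (strict periodicity), so the
    future value is read from the corresponding point one cycle earlier.
    """
    if period_samples <= 0:
        raise ValueError("period_samples must be positive")
    if not 0 <= lead_samples <= period_samples:
        raise ValueError("lead_samples must lie between 0 and one period")
    # Index of the newest sample is len(history) - 1; step forward by the
    # lead and back by one full period.
    index = len(history) - 1 + lead_samples - period_samples
    if index < 0:
        raise ValueError("not enough history for this period and lead")
    return history[index]


if __name__ == "__main__":
    # Synthetic sinusoidal "hand position" with a period of 20 samples.
    period = 20
    recorded = [math.sin(2 * math.pi * i / period) for i in range(50)]
    predicted = predict_ahead(recorded, period, lead_samples=5)
    actual_future = math.sin(2 * math.pi * 54 / period)
    print(abs(predicted - actual_future) < 1e-9)
```

Displaying the predicted sample instead of the current one would present the stimulus ahead of the executed movement, which corresponds to the negative delay described above.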

The observed dependency of the facilitation of biological motion detection on spatial congruency between the observed and the executed movement is not simply a consequence of the inversion effect that has been described for biological motion recognition, i.e., the fact that point-light walkers are more difficult to perceive if they are rotated in the image plane (Sumi, 1984; Pavlova and Sokolov, 2000; Troje, 2003). Even without a concurrent motor task, the detection of point-light arms failed to show an inversion effect, because detection rates were not significantly different between the different rotation conditions. This result is in good accordance with studies that failed to find an inversion effect for the recognition of arms in static pictures (Reed et al., 2006). The lack of an inversion effect for arms makes sense ecologically: walking typically takes place in an inertial frame of reference, so that the majority of visual stimuli present the complete human body upright during locomotion. In contrast, because of the large range of possible shoulder movements, arms can appear with a large range of rotations against the vertical axis. If the visual system represents the statistical properties of the environment (e.g., as a result of learning or potentially also innate priors), it is thus not surprising that upright and inverted walking are not represented equally well, whereas a whole range of rotated arms is similarly effective in eliciting visual motion recognition. As a consequence, a plausible explanation of our results is that, although the detection of biological motion might be based on a retinal frame of reference, the potential top-down feedback from motor representations is tuned with respect to the relationship between the body axis and the vertical direction in the retinal frame.

The sensitivity of the interaction to the orientation of the visual stimulus seems consistent with several electrophysiological results showing a view dependence of action-selective neurons in the STS (Perrett et al., 1989; Barraclough et al., 2009) and area F5 of the macaque monkey (Caggiano et al., 2011). Furthermore, studies with human participants have shown view dependence of visual adaptation effects for action stimuli (Barraclough et al., 2009) and also for reaction times in grasping experiments (Craighero et al., 2002).

An interesting question for future research is whether goal-directed and non-goal-directed movements differ in a paradigm similar to ours, which allows the spatiotemporal matching between the visual stimulus and executed movements to be controlled. Most work about mirror neurons so far has focused on goal-directed actions. If mirror neurons form a crucial part of the circuits involved in the observed facilitation of action detection, the detection of goal-directed actions might be facilitated even more than the detection of non-goal-directed actions as used in our studies.

Finally, our experimental paradigm is limited by the fact that it does not permit determining the role of proprioceptive feedback for the observed motor–visual interactions (Farrer et al., 2003; Balslev et al., 2007). A more detailed clarification of this question would be possible either with sophisticated devices supporting a mainly passive execution of the same movements (Shadmehr and Mussa-Ivaldi, 1994) or by studies with deafferented patients who lack proprioception. These questions offer interesting perspectives for future research.

Footnotes

This work was supported by the EC FP6 Project COBOL, the EC FP7 Projects SEARISE (Grant FP7-ICT-215866), TANGO (Grant FP7-249858-TP3), and AMARSi (Grant FP7-ICT-248311), as well as Deutsche Forschungsgemeinschaft Grant GI 305/4-1 and the Hermann and Lilly Schilling Foundation. We thank C. L. Roether for supporting discussions as well as J. Scharm and T. Hirscher for help with the data collection. We are grateful to two anonymous reviewers for their constructive comments on this manuscript.

References

- Balslev D, Cole J, Miall RC. Proprioception contributes to the sense of agency during visual observation of hand movements: evidence from temporal judgments of action. J Cogn Neurosci. 2007;19:1535–1541. doi: 10.1162/jocn.2007.19.9.1535.

- Barraclough NE, Keith RH, Xiao D, Oram MW, Perrett DI. Visual adaptation to goal-directed hand actions. J Cogn Neurosci. 2009;21:1806–1820. doi: 10.1162/jocn.2008.21145.

- Blakemore SJ, Frith CD, Wolpert DM. Spatio-temporal prediction modulates the perception of self-produced stimuli. J Cogn Neurosci. 1999;11:551–559. doi: 10.1162/089892999563607.

- Borroni P, Montagna M, Cerri G, Baldissera F. Bilateral motor resonance evoked by observation of a one-hand movement: role of the primary motor cortex. Eur J Neurosci. 2008;28:1427–1435. doi: 10.1111/j.1460-9568.2008.06458.x.

- Buccino G, Binkofski F, Riggio L. The mirror neuron system and action recognition. Brain Lang. 2004a;89:370–376. doi: 10.1016/S0093-934X(03)00356-0.

- Buccino G, Lui F, Canessa N, Patteri I, Lagravinese G, Benuzzi F, Porro CA, Rizzolatti G. Neural circuits involved in the recognition of actions performed by nonconspecifics: an FMRI study. J Cogn Neurosci. 2004b;16:114–126. doi: 10.1162/089892904322755601.

- Caggiano V, Fogassi L, Rizzolatti G, Pomper JK, Thier P, Giese MA, Casile A. View-based encoding of actions in mirror neurons of area F5 in macaque premotor cortex. Curr Biol. 2011;21:144–148. doi: 10.1016/j.cub.2010.12.022.

- Craighero L, Bello A, Fadiga L, Rizzolatti G. Hand action preparation influences the responses to hand pictures. Neuropsychologia. 2002;40:492–502. doi: 10.1016/s0028-3932(01)00134-8.

- Cutting JE, Moore C, Morrison R. Masking the motions of human gait. Percept Psychophys. 1988;44:339–347. doi: 10.3758/bf03210415.

- de Vignemont F, Haggard P. Action observation and execution: what is shared? Soc Neurosci. 2008;3:421–433. doi: 10.1080/17470910802045109.

- Erlhagen W, Mukovskiy A, Bicho E. A dynamic model for action understanding and goal-directed imitation. Brain Res. 2006;1083:174–188. doi: 10.1016/j.brainres.2006.01.114.

- Farrer C, Franck N, Paillard J, Jeannerod M. The role of proprioception in action recognition. Conscious Cogn. 2003;12:609–619. doi: 10.1016/s1053-8100(03)00047-3.

- Farrer C, Bouchereau M, Jeannerod M, Franck N. Effect of distorted visual feedback on the sense of agency. Behav Neurol. 2008;19:53–57. doi: 10.1155/2008/425267.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119:593–609. doi: 10.1093/brain/119.2.593.

- Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends Cogn Sci. 2004;8:396–403. doi: 10.1016/j.tics.2004.07.002.

- Grossman ED, Blake R. Brain activity evoked by inverted and imagined biological motion. Vision Res. 2001;41:1475–1482. doi: 10.1016/s0042-6989(00)00317-5.

- Hamilton A, Wolpert D, Frith U. Your own action influences how you perceive another person's action. Curr Biol. 2004;14:493–498. doi: 10.1016/j.cub.2004.03.007.

- Hamilton AF, Wolpert DM, Frith U, Grafton ST. Where does your own action influence your perception of another person's action in the brain? Neuroimage. 2006;29:524–535. doi: 10.1016/j.neuroimage.2005.07.037.

- Hommel B, Müsseler J, Aschersleben G, Prinz W. The theory of event coding (TEC): a framework for perception and action planning. Behav Brain Sci. 2001;24:849–878; discussion 878–937. doi: 10.1017/s0140525x01000103.

- Iacoboni M, Koski LM, Brass M, Bekkering H, Woods RP, Dubeau MC, Mazziotta JC, Rizzolatti G. Reafferent copies of imitated actions in the right superior temporal cortex. Proc Natl Acad Sci U S A. 2001;98:13995–13999. doi: 10.1073/pnas.241474598.

- Ivry RB. The representation of temporal information in perception and motor control. Curr Opin Neurobiol. 1996;6:851–857. doi: 10.1016/s0959-4388(96)80037-7.

- Jacobs A, Shiffrar M. Walking perception by walking observers. J Exp Psychol Hum Percept Perform. 2005;31:157–169. doi: 10.1037/0096-1523.31.1.157.

- Jeannerod M, Frak V. Mental imaging of motor activity in humans. Curr Opin Neurobiol. 1999;9:735–739. doi: 10.1016/s0959-4388(99)00038-0.

- Johansson G. Visual perception of biological motion and a model for its analysis. Percept Psychophys. 1973;14:201–211.

- Kilner JM, Paulignan Y, Blakemore SJ. An interference effect of observed biological movement on action. Curr Biol. 2003;13:522–525. doi: 10.1016/s0960-9822(03)00165-9.

- Kilner JM, Friston KJ, Frith CD. Predictive coding: an account of the mirror neuron system. Cogn Process. 2007;8:159–166. doi: 10.1007/s10339-007-0170-2.

- Lestou V, Pollick FE, Kourtzi Z. Neural substrates for action understanding at different description levels in the human brain. J Cogn Neurosci. 2008;20:324–341. doi: 10.1162/jocn.2008.20021.

- Leube DT, Knoblich G, Erb M, Grodd W, Bartels M, Kircher TT. The neural correlates of perceiving one's own movements. Neuroimage. 2003;20:2084–2090. doi: 10.1016/j.neuroimage.2003.07.033.

- Miall RC. Connecting mirror neurons and forward models. Neuroreport. 2003;14:2135–2137. doi: 10.1097/00001756-200312020-00001.

- Miall RC, Stanley J, Todhunter S, Levick C, Lindo S, Miall JD. Performing hand actions assists the visual discrimination of similar hand postures. Neuropsychologia. 2006;44:966–976. doi: 10.1016/j.neuropsychologia.2005.09.006.

- Müsseler J, Hommel B. Blindness to response-compatible stimuli. J Exp Psychol Hum Percept Perform. 1997;23:861–872. doi: 10.1037//0096-1523.23.3.861.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- O'Reilly JX, Mesulam MM, Nobre AC. The cerebellum predicts the timing of perceptual events. J Neurosci. 2008;28:2252–2260. doi: 10.1523/JNEUROSCI.2742-07.2008.

- Pavlova M, Sokolov A. Orientation specificity in biological motion perception. Percept Psychophys. 2000;62:889–899. doi: 10.3758/bf03212075.

- Perrett DI, Harries MH, Bevan R, Thomas S, Benson PJ, Mistlin AJ, Chitty AJ, Hietanen JK, Ortega JE. Frameworks of analysis for the neural representation of animate objects and actions. J Exp Biol. 1989;146:87–113. doi: 10.1242/jeb.146.1.87.

- Prinz W. Perception and action planning. Eur J Cogn Psychol. 1997;9:129–154.

- Reed CL, Stone VE, Grubb JD, McGoldrick JE. Turning configural processing upside down: part and whole body postures. J Exp Psychol Hum Percept Perform. 2006;32:73–87. doi: 10.1037/0096-1523.32.1.73.

- Repp BH, Knoblich G. Action can affect auditory perception. Psychol Sci. 2007;18:6–7. doi: 10.1111/j.1467-9280.2007.01839.x.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annu Rev Neurosci. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- Schaal S, Ijspeert A, Billard A. Computational approaches to motor learning by imitation. Philos Trans R Soc Lond B Biol Sci. 2003;358:537–547. doi: 10.1098/rstb.2002.1258.

- Schütz-Bosbach S, Prinz W. Perceptual resonance: action-induced modulation of perception. Trends Cogn Sci. 2007;11:349–355. doi: 10.1016/j.tics.2007.06.005.

- Shadmehr R, Mussa-Ivaldi FA. Adaptive representation of dynamics during learning of a motor task. J Neurosci. 1994;14:3208–3224. doi: 10.1523/JNEUROSCI.14-05-03208.1994.

- Sumi S. Upside-down presentation of the Johansson moving light-spot pattern. Perception. 1984;13:283–286. doi: 10.1068/p130283.

- Thornton IM, Knoblich G. Action perception: seeing the world through a moving body. Curr Biol. 2006;16:R27–R29. doi: 10.1016/j.cub.2005.12.006.

- Troje NF. Reference frames for orientation anisotropies in face recognition and biological-motion perception. Perception. 2003;32:201–210. doi: 10.1068/p3392.

- Tsakiris M, Haggard P, Franck N, Mainy N, Sirigu A. A specific role for efferent information in self-recognition. Cognition. 2005;96:215–231. doi: 10.1016/j.cognition.2004.08.002.

- Wohlschläger A. Visual motion priming by invisible actions. Vision Res. 2000;40:925–930. doi: 10.1016/s0042-6989(99)00239-4.

- Wolpert DM, Miall RC. Forward models for physiological motor control. Neural Netw. 1996;9:1265–1279. doi: 10.1016/s0893-6080(96)00035-4.

- Wolpert DM, Doya K, Kawato M. A unifying computational framework for motor control and social interaction. Philos Trans R Soc Lond B Biol Sci. 2003;358:593–602. doi: 10.1098/rstb.2002.1238.

- Zwickel J, Grosjean M, Prinz W. On interference effects in concurrent perception and action. Psychol Res. 2010;74:152–171. doi: 10.1007/s00426-009-0226-2.