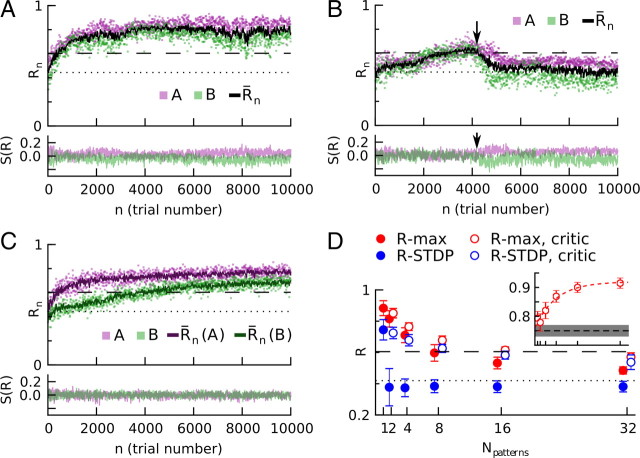

Figure 3.

R-STDP, but not R-max, needs a stimulus-specific reward-prediction system to learn multiple input/output patterns. At each trial, pattern A or B is presented in the input, and the output pattern is compared with the corresponding target pattern. A, R-max can learn two patterns, even when the success signal S(R) for each pattern does not average to zero. Top, Rewards as a function of trial number. Magenta, Pattern A; green, pattern B; black, running trial mean of the reward; dotted line, reward before learning; dashed line, reward obtained with the reference weights (see Materials and Methods). Bottom, Success signals S(R) for stimuli A and B. For clarity, only 25% of the trials are shown. B, R-STDP fails to learn two patterns if the success signal is not stimulus-specific. As long as, by chance, the actual rewards obtained for stimuli A and B are similar [top, first 4000 trials; A (magenta) and B (green) reward values overlap], the mean reward subtraction is correct for both and performance increases. However, as soon as a minor discrepancy in mean reward appears between the two tasks (arrow at ∼4000 trials, magenta above green dots), performance drops to prelearning level (dotted line) and fails to recover. For visual clarity, the figure shows a trial with a relatively late failure. C, R-STDP can be rescued if the success signal is a stimulus-specific reward-prediction error. A critic maintains a stimulus-specific mean-reward predictor (top, dark magenta and dark green lines) and provides the network with unbiased success signals (bottom) for both stimuli. D, Performance as a function of the number of stimuli. A stimulus-specific reward-prediction system makes a significant difference for large numbers of distinct stimulus-response pairs. Filled circles, Success signal based on a simple, stimulus-unspecific trial average; empty circles, stimulus-specific reward-prediction error. 
R-STDP (blue) fails to learn more than one stimulus/response association without stimulus-specific reward prediction, but performs well in the presence of a critic, staying close to the performance level of the reference weights (dashed line). R-max (red) does not require stimulus-specific reward prediction, although a critic does improve its performance. Points with and without critic are offset horizontally for visibility; both correspond to the ticks of the abscissa. Performance decreases for large numbers of stimulus/response pairs because, as the learned weights become less specialized and closer to the reference weights (see inset), the performance of the reference weights becomes the upper bound. Inset, Normalized scalar product of the learned and reference weights (vertical axis; the horizontal axis shows the same values as the main graph). Only data for R-max with critic are shown. Red dashed line, Exponential fit of the data. Black dashed line and gray area, Mean and SD, respectively, for random, uniformly drawn weights w⃗. In all panels (except inset of D), the dotted line shows the performance before learning and the dashed line shows the performance of reference weights.
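
The difference between the two success signals compared in B and C can be illustrated with a minimal sketch. It is not the implementation used for the figure: the class name `RunningMeanCritic`, the averaging rate, and the reward statistics (pattern A rewarded around 0.8, pattern B around 0.4) are assumptions chosen only for illustration, and no learning rule is simulated. The sketch shows only that a single stimulus-unspecific running mean leaves a systematic bias in S(R) for each stimulus once the mean rewards differ, whereas one running mean per stimulus keeps S(R) close to zero on average for both.

```python
import random


class RunningMeanCritic:
    """Reward predictor based on an exponential running mean (illustrative).

    If stimulus_specific is True, one mean is kept per stimulus; otherwise a
    single mean is shared across all stimuli.
    """

    def __init__(self, stimulus_specific, rate=0.2):
        self.stimulus_specific = stimulus_specific
        self.rate = rate  # assumed averaging rate, not taken from the paper
        self.mean = {}

    def success_signal(self, stimulus, reward):
        """Return S(R) = R - predicted reward, then update the prediction."""
        key = stimulus if self.stimulus_specific else "all"
        # On the first trial for a key, the prediction is set to the reward.
        prediction = self.mean.get(key, reward)
        success = reward - prediction
        self.mean[key] = prediction + self.rate * (reward - prediction)
        return success


def mean_success_per_stimulus(stimulus_specific, n_trials=4000, seed=0):
    """Average S(R) per stimulus when A and B have different mean rewards."""
    rng = random.Random(seed)
    critic = RunningMeanCritic(stimulus_specific)
    mean_reward = {"A": 0.8, "B": 0.4}  # hypothetical reward levels
    totals = {"A": 0.0, "B": 0.0}
    counts = {"A": 0, "B": 0}
    for _ in range(n_trials):
        stimulus = rng.choice("AB")
        reward = mean_reward[stimulus] + rng.gauss(0.0, 0.05)
        totals[stimulus] += critic.success_signal(stimulus, reward)
        counts[stimulus] += 1
    return {s: totals[s] / counts[s] for s in totals}


if __name__ == "__main__":
    print("shared mean:      ", mean_success_per_stimulus(False))
    print("stimulus-specific:", mean_success_per_stimulus(True))
```

With the shared mean, the average success signal is positive for the better-rewarded pattern and negative for the other; with stimulus-specific means, both averages stay near zero, which is the unbiasedness condition that panel C attributes to the critic.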