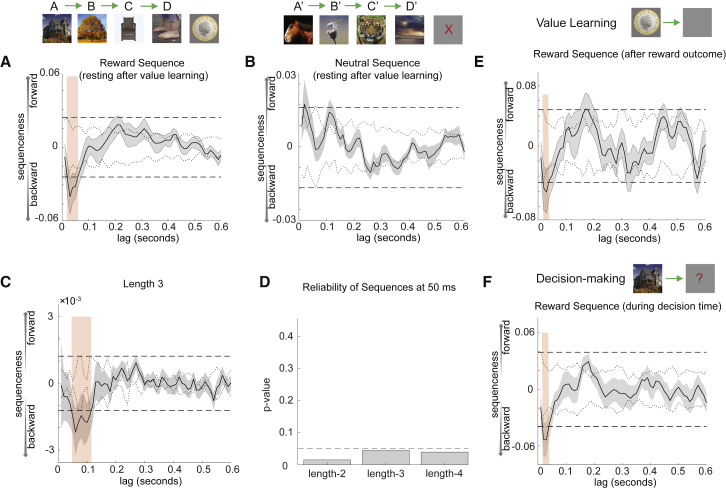

Figure 3.

Only Rewarded Sequences Reverse Direction, and Sequences Form Chains of Four Objects

(A) In value learning, each participant experienced one rewarded sequence and one unrewarded sequence. In the rest period after value learning, the rewarded sequence played backward in spontaneous brain activity.

(B) The unrewarded sequence still trended toward playing forward.

(C and D) All other panels in the main text show a sequenceness measure that evaluates single-step state-to-state transitions. Here, we report a related sequenceness measure that evaluates the extra evidence for multi-step sequences, beyond the evidence for single-step transitions (see Method Details). This measure describes the degree to which, for example, A follows B with the same latency as B follows C. Sequences of length 3, following the rule-defined order, played out at a state-to-state lag of approximately 50 ms (C). At the 50-ms lag, there was significant replay of sequences up to the maximum possible length (D → C → B → A). Dashed line at p = 0.05 (D).
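To make the single-step measure concrete, here is a minimal sketch, not the authors' pipeline, assuming a time series `X` of decoded state reactivations (time × states), a binary transition matrix `T` encoding the rule-defined order A → B → C → D, and a simplified two-level regression: first regress each state at time t + lag onto all states at time t, then compare the average coefficient along `T` (forward) with the average along its transpose (backward). The function name `sequenceness`, the simulated data, and the sampling rate are hypothetical; a lag of 5 samples corresponds to 50 ms only if the data are sampled at 100 Hz. The multi-step measure in panels C and D additionally controls for this single-step evidence, which the sketch does not implement.

```python
import numpy as np


def sequenceness(X, T, lag):
    """Simplified forward-minus-backward sequenceness at one lag (in samples).

    X   : (n_time, n_states) decoded state reactivations (hypothetical input).
    T   : (n_states, n_states) binary transition matrix, T[i, j] = 1 if i -> j.
    lag : state-to-state lag in samples.
    """
    n_states = X.shape[1]
    past = X[:-lag]    # reactivation at time t
    future = X[lag:]   # reactivation at time t + lag
    # First level: regress every state's future activity on all states' past activity
    # (plus an intercept column).
    design = np.column_stack([past, np.ones(len(past))])
    beta, *_ = np.linalg.lstsq(design, future, rcond=None)
    B = beta[:n_states]  # B[i, j]: how well state i at t predicts state j at t + lag
    # Second level (simplified): mean evidence along T versus along its reverse.
    forward = B[T.astype(bool)].mean()
    backward = B[T.T.astype(bool)].mean()
    return forward - backward  # > 0 forward replay, < 0 reverse replay


# Simulated example: background noise plus injected reverse events D -> C -> B -> A.
rng = np.random.default_rng(0)
n_time, n_states, true_lag = 5000, 4, 5
X = rng.random((n_time, n_states)) * 0.1
T = np.zeros((n_states, n_states))
for i in range(n_states - 1):
    T[i, i + 1] = 1  # rule-defined order A -> B -> C -> D
for onset in range(50, n_time - 4 * true_lag, 100):
    for step, state in enumerate([3, 2, 1, 0]):
        X[onset + step * true_lag, state] += 1.0

print(sequenceness(X, T, true_lag))  # strongly negative: reverse replay at this lag
print(sequenceness(X, T, 20))        # near zero: no transitions at this lag
```

A negative value corresponds to the backward playback of the rewarded sequence described in panel A; sweeping `lag` over a range and plotting the result gives a lag profile like those in the figure.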

(E) Replay was not limited to the resting period. Reverse replay of the rewarded sequence began during value learning.

(F) Reverse replay of the rewarded sequence was also evident during the decision phase.