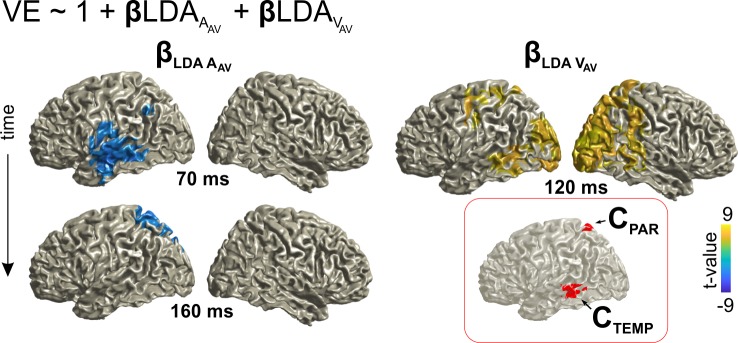

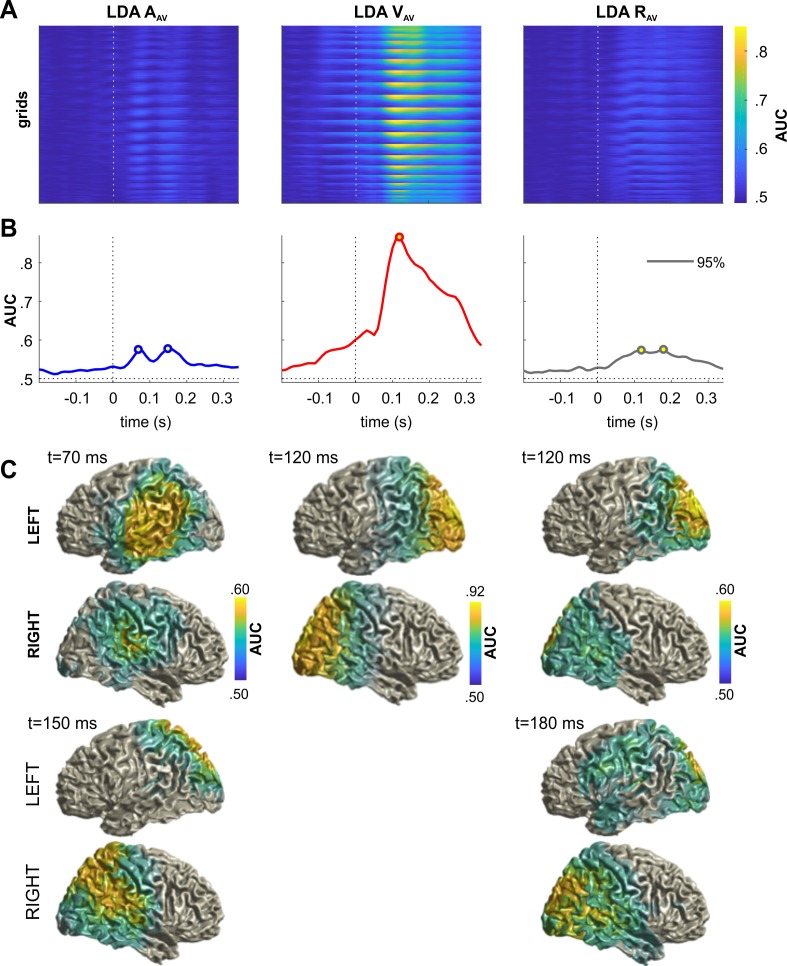

Figure 4. Neural correlates of audio-visual integration within a trial (VE bias).

Contribution of the representations of acoustic and visual information to the single-trial bias in the AV trial. Red inset: grid points with overlapping significant effects for both $\mathrm{LDA}_{A_{AV}}$ and $\mathrm{LDA}_{V_{AV}}$. Surface projections were obtained from whole-brain statistical maps (p ≤ 0.05, FWE-corrected). See Table 2 for detailed coordinates and statistical results. $A_{AV}$: sound location in the AV trial. $V_{AV}$: visual location in the AV trial. Deposited data: AVtrial_LDA_AUC.mat; VE_beta.mat.