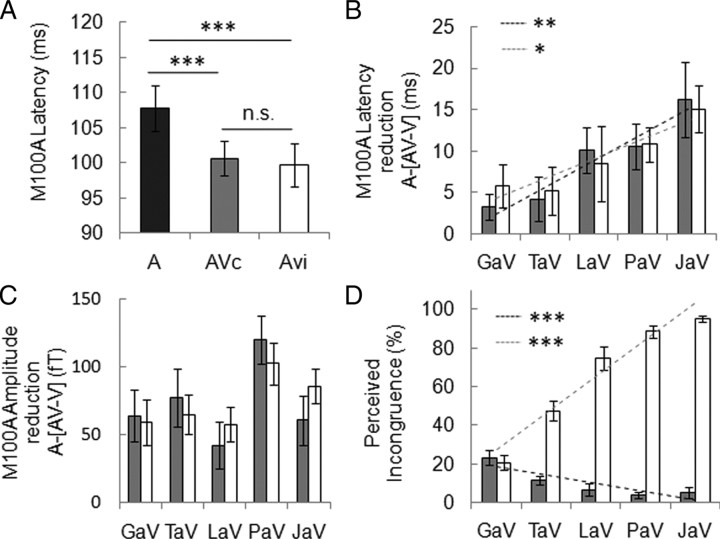

Figure 3.

Facilitation of early auditory response by visual input. A, Latency of the auditory evoked response (M100A) in the auditory-only condition (A, dark bar) was globally reduced by visual syllables, whether they matched the sound (AVc, gray bar) or not (AVi, white bar). B, M100A latency reduction [A-(AV–V)], represented as a function of visual predictability (Fig. 2), shows a significant viseme dependency but no effect of incongruence. M100 latency reduction is proportional to visual predictability in both AVc (black dashed line) and AVi (gray dashed line) combinations. No significant difference between AVc and AVi regression slopes was found. C, M100A amplitude change (positive values correspond to a reduction of M100A in the AV–V condition relative to the A condition) indicates a significant effect of syllable but no viseme dependency or incongruence effect. D, Perceived incongruence for AVc and AVi combinations. Note that comparisons focus on the visual syllable (for example, PaAVc is compared with PaAVi, such as PaV/GaA) (supplemental Fig. 1B, available at www.jneurosci.org as supplemental material). Perceived incongruence for AVi pairs correlates positively with visual predictability (gray dashed line), whereas perceived incongruence for AVc pairs correlates negatively with visual predictability (black dashed line; interaction significant). Error bars indicate SEM. *p < 0.05, **p < 0.01, ***p < 0.001.
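
The latency-reduction measure in B, A-(AV–V), and its regression on visual predictability can be illustrated with a minimal sketch. The function names and all numbers below are hypothetical placeholders chosen for illustration; they are not values from this study, and the plain least-squares fit is an assumption about how such a slope might be estimated, not a description of the authors' analysis.

```python
def latency_reduction(lat_a, lat_av_minus_v):
    """Return A - (AV - V) in ms.

    Positive values mean the M100A peaked earlier in the AV-V
    difference response than in the auditory-only (A) response.
    """
    return lat_a - lat_av_minus_v


def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


# Hypothetical placeholder values, NOT data from the study.
predictability = [0.2, 0.5, 0.8]   # visual predictability per viseme
lat_a = [100.0, 100.0, 100.0]      # M100A latency, A condition (ms)
lat_av_v = [98.0, 95.0, 92.0]      # M100A latency, AV-V response (ms)

reductions = [latency_reduction(a, b) for a, b in zip(lat_a, lat_av_v)]
slope = ols_slope(predictability, reductions)
print(reductions, slope)
```

A positive slope in this sketch corresponds to the pattern described for B: the more predictable the visual syllable, the larger the latency reduction. Fitting the AVc and AVi combinations separately would give the two regression lines whose slopes are compared in the caption.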