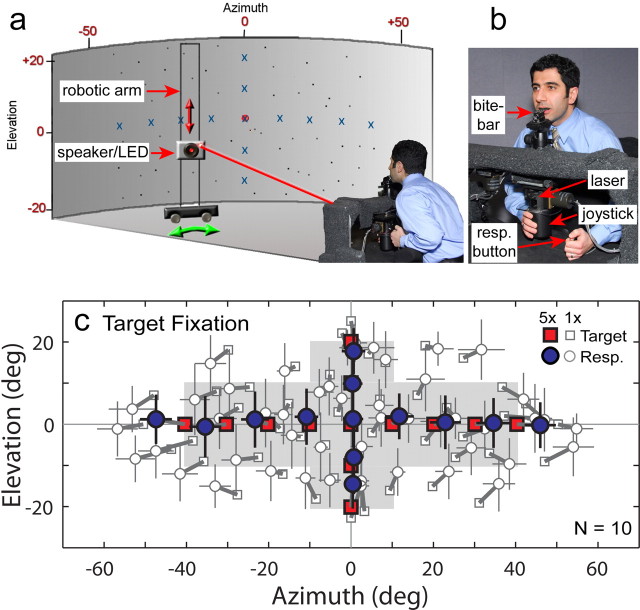

Figure 2.

Experimental setup: all experiments were conducted inside a darkened, echo-attenuated room. a, Subjects faced a black cylindrical screen (shaded) behind which nonvisible auditory targets were presented across the frontal field from a small loudspeaker under robotic control. Three laser LEDs (0° azimuth, red spot; left and right 20°, not shown) projected directly onto the screen and served as reference spots for fixation. b, The head was fixed using a custom bite-bar, and sound location was reported using a visually guided laser pointer mounted on a cylindrical joystick. resp. button, Response button. c, Plot of auditory target locations and mean subject responses during the target fixation task. Eye movements were unconstrained, and subjects guided the laser pointer foveally. Targets (squares) and their associated responses (circles) are connected with thick lines. The targets in the shaded area and the accompanying responses were used to calculate spatial gain, offset, and accuracy (see Figs. 3, 5). “Multisampled” targets (filled squares) were presented five times (5×) to each subject; all others (open squares) were sampled only once (1×). Horizontal and vertical error bars [thin lines through each mean response (Resp.)] are SDs in this and all subsequent figures.
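The caption refers to spatial gain, offset, and accuracy without defining them here (the definitions belong to Figs. 3 and 5). As a minimal sketch only, assuming gain and offset are the slope and intercept of an ordinary least-squares line fitted to response azimuth versus target azimuth, and assuming accuracy is expressed as mean absolute error, the per-subject summary could be computed as below. The function names `gain_and_offset`, `mean_abs_error`, and `summarize_repeats` are hypothetical, and the sample numbers are illustrative, not data from this study.

```python
from statistics import mean, stdev


def gain_and_offset(targets, responses):
    """Hypothetical helper: least-squares fit of response = gain * target + offset.

    Assumes gain/offset are slope/intercept of a linear fit (in degrees);
    this is an illustrative assumption, not the paper's stated method.
    """
    if len(targets) != len(responses) or len(targets) < 2:
        raise ValueError("need at least two paired target/response values")
    mt, mr = mean(targets), mean(responses)
    # Sum of squared target deviations and target/response cross-deviations
    sxx = sum((t - mt) ** 2 for t in targets)
    sxy = sum((t - mt) * (r - mr) for t, r in zip(targets, responses))
    gain = sxy / sxx
    offset = mr - gain * mt
    return gain, offset


def mean_abs_error(targets, responses):
    """Hypothetical accuracy measure: mean absolute response error (degrees)."""
    return mean(abs(r - t) for t, r in zip(targets, responses))


def summarize_repeats(responses):
    """Mean and sample SD of repeated responses to one multisampled (5x) target."""
    return mean(responses), stdev(responses)


if __name__ == "__main__":
    # Illustrative azimuths only (degrees), not measured data
    targets = [-20, -10, 0, 10, 20]
    responses = [-14, -6, 2, 10, 18]
    print(gain_and_offset(targets, responses))
    print(mean_abs_error(targets, responses))
    print(summarize_repeats([4.0, 6.0, 5.0, 5.0, 5.0]))
```

With these illustrative values the fit recovers a gain of 0.8 and an offset of 2°, that is, responses compressed toward the midline and shifted rightward. The sample SD (n − 1 denominator) is used for the repeated targets, matching the caption's statement that error bars are SDs; whether the original analysis used the sample or population SD is not stated here.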