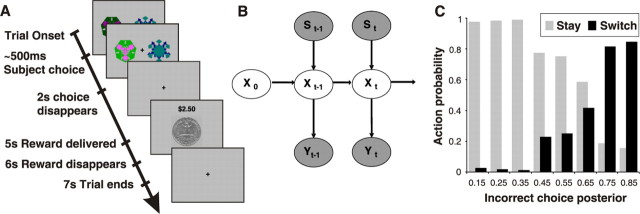

Figure 1.

Reversal task setup and state-based decision model.

A, On each trial, subjects choose one of two fractals, which are randomly placed to the left or right of the fixation cross. Once the subject selects a stimulus, it increases in brightness and remains on the screen until 2 s after the choice. After an additional 3 s, a reward (winning 25 cents, depicted by a quarter-dollar coin) or punishment (losing 25 cents, depicted by a quarter-dollar coin covered by a red cross) is delivered, with the total money earned displayed at the top of the screen. One stimulus is designated the correct stimulus: choosing it leads to a monetary reward 70% of the time and a monetary loss 30% of the time, so that repeatedly choosing it accumulates monetary gain. The other stimulus is incorrect: choosing it leads to a reward 40% of the time and a punishment 60% of the time, resulting in a cumulative monetary loss. After subjects choose the correct stimulus on four consecutive occasions, the contingencies reverse with a probability of 0.25 on each successive trial. Subjects must infer that a reversal has taken place and switch their choice, at which point the process repeats.

B, We constructed an abstract-state-based model that incorporates the structure of the reversal task in the form of a Bayesian HMM, which uses previous choice and reward history to infer the probability of being in the correct/incorrect choice state. The choice state changes (“transits”) from one period to another depending on (1) the exogenously given chance that the options are reversed (the good action becomes the bad one, and vice versa) and (2) the control (if the subject switches when the actual, but hidden, choice state is correct, then the choice state becomes incorrect, and vice versa). Y, Observed reward/punishment; S, observed switch/stay action; X, abstract correct/incorrect choice state that is inferred at each time step (see Materials and Methods). The arrows indicate the causal relationships among random variables.

C, Observed frequencies with which subjects switch (black) or stay (gray), plotted against the state-based model's inferred posterior probability that their last choice was incorrect. The higher the posterior probability of an incorrect choice, the more likely subjects are to switch (relative choice frequencies are calculated separately for each posterior probability bin).
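The task structure in A and the belief update in B can be illustrated with a minimal Python sketch. It is not the fitted model from Materials and Methods and rests on several assumptions: outcomes are treated as Bernoulli with the task's true reward probabilities (0.7 and 0.4), the model's reversal rate is a constant `hazard` with an arbitrary illustrative value of 0.1, the agent switches deterministically whenever the posterior probability of an incorrect choice exceeds a hypothetical `threshold`, and an incorrect choice is assumed to reset the count of consecutive correct choices. All function and parameter names are hypothetical.

```python
import random

# Values stated in the caption.
P_REWARD_CORRECT = 0.7    # reward probability for the correct stimulus
P_REWARD_INCORRECT = 0.4  # reward probability for the incorrect stimulus
CRITERION = 4             # consecutive correct choices before reversals can occur
P_REVERSAL = 0.25         # per-trial reversal probability once the criterion is met


def update_posterior(p_correct, rewarded):
    """Bayes update of P(X = correct) after observing outcome Y.

    Assumption: Bernoulli outcomes with the task's true probabilities.
    """
    like_c = P_REWARD_CORRECT if rewarded else 1.0 - P_REWARD_CORRECT
    like_i = P_REWARD_INCORRECT if rewarded else 1.0 - P_REWARD_INCORRECT
    num = p_correct * like_c
    return num / (num + (1.0 - p_correct) * like_i)


def predict_next(p_correct, switch, hazard=0.1):
    """Prior over the next trial's choice state.

    A switch (S) flips the choice state; an exogenous reversal flips it
    again with probability `hazard` (an assumed constant, not a fitted value).
    """
    if switch:
        p_correct = 1.0 - p_correct
    return p_correct * (1.0 - hazard) + (1.0 - p_correct) * hazard


def simulate(n_trials=200, threshold=0.5, seed=0):
    """Run a threshold-switching agent on the reversal task."""
    rng = random.Random(seed)
    correct_stim = 0
    choice = rng.randint(0, 1)
    p_correct = 0.5  # uninformative initial belief
    streak = 0
    history = []
    for _ in range(n_trials):
        is_correct = choice == correct_stim
        p_reward = P_REWARD_CORRECT if is_correct else P_REWARD_INCORRECT
        rewarded = rng.random() < p_reward

        # Model side: infer the hidden choice state from the outcome.
        posterior = update_posterior(p_correct, rewarded)
        p_incorrect = 1.0 - posterior
        switch = p_incorrect > threshold  # hypothetical decision rule
        history.append((is_correct, rewarded, p_incorrect, switch))

        # Task side: reversals become possible after CRITERION correct choices.
        streak = streak + 1 if is_correct else 0
        if streak >= CRITERION and rng.random() < P_REVERSAL:
            correct_stim = 1 - correct_stim
            streak = 0

        if switch:
            choice = 1 - choice
        p_correct = predict_next(posterior, switch)
    return history
```

Binning the third element of each `history` tuple (the posterior probability that the last choice was incorrect) and tabulating the switch flag within each bin gives the kind of summary plotted in C, although a deterministic threshold rule produces a step function rather than the graded relationship observed in subjects.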