Figure 3.

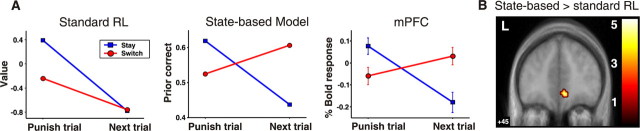

Standard RL and abstract-state-based decision models make qualitatively different predictions about brain activity after subjects switch their choice. A, Both models predict that if a decision is made to stay after being punished, the chosen action will have a lower expected value on the next trial (blue line). However, if a decision is made to switch to the other stimulus after being punished, simple RL predicts that the expected value of the new choice will also be low (red line; left), because its value has not been updated since the last time it was chosen. In contrast, a state-based decision model predicts that the expected value of the new choice will be high: if subjects have determined (prompted by the last punishment) that their choice until now was incorrect, then their new choice after switching is now correct and has a high expected value (red line; middle). Mean fMRI signal changes (time-locked to the time of choice) in the mPFC (derived from a 3 mm sphere centered at the peak voxel), plotted before and after reversal (right), show that activity in this region is more consistent with the predictions of state-based decision making than with those of standard RL. This indicates that the expected reward signal in the mPFC incorporates the structure of the reversal task. B, Direct comparison of brain regions correlating with the prior correct signal from the state-based model and with the equivalent value signal (of the current choice) from the simple RL model. A contrast between the models revealed that the state-based decision model accounts significantly better for neural activity in the mPFC (6, 45, −9 mm; z = 3.68). L, Left.
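The qualitative contrast in panel A can be reproduced with a small simulation. The sketch below is not the authors' model: it assumes a delta-rule learner as a stand-in for "simple RL" and a two-state Bayesian filter over "A is correct" versus "B is correct" as a stand-in for the state-based model. The learning rate `ALPHA`, reward probability `P_REWARD_CORRECT`, reversal probability `P_REVERSAL`, and the trial history are all hypothetical values chosen only for illustration.

```python
# Minimal sketch contrasting the two models' predictions in panel A.
# All parameters and the trial history are hypothetical, chosen only for illustration.
ALPHA = 0.3             # RL learning rate (assumed)
P_REWARD_CORRECT = 0.8  # P(reward | chose the currently correct stimulus) (assumed)
P_REVERSAL = 0.1        # P(contingencies reverse between trials) (assumed)
REWARD, PUNISH = 1.0, -1.0


def rl_update(q, choice, outcome):
    """Simple RL (delta rule): only the chosen stimulus's value is updated."""
    q = dict(q)
    q[choice] += ALPHA * (outcome - q[choice])
    return q


def state_update(p_a, choice, outcome):
    """Bayesian update of P(A is the correct stimulus), then allow for a reversal."""
    def likelihood(a_correct):
        chose_correct = (choice == "A") == a_correct
        p_r = P_REWARD_CORRECT if chose_correct else 1 - P_REWARD_CORRECT
        return p_r if outcome == REWARD else 1 - p_r

    num = likelihood(True) * p_a
    post = num / (num + likelihood(False) * (1 - p_a))
    # The hidden state may reverse before the next trial.
    return post * (1 - P_REVERSAL) + (1 - post) * P_REVERSAL


def state_value(p_a, choice):
    """Expected value of a choice given the current belief that A is correct."""
    p_correct = p_a if choice == "A" else 1 - p_a
    p_r = p_correct * P_REWARD_CORRECT + (1 - p_correct) * (1 - P_REWARD_CORRECT)
    return p_r * REWARD + (1 - p_r) * PUNISH


def simulate(history):
    """Run both models over the same (choice, outcome) sequence."""
    q, p_a = {"A": 0.0, "B": 0.0}, 0.5
    for choice, outcome in history:
        q = rl_update(q, choice, outcome)
        p_a = state_update(p_a, choice, outcome)
    return q, p_a


# Hypothetical history: B punished once, A rewarded four times, then A punished twice.
HISTORY = [("B", PUNISH)] + [("A", REWARD)] * 4 + [("A", PUNISH)] * 2

q, p_a = simulate(HISTORY)
for choice in ("A", "B"):
    print(f"{choice}: simple RL {q[choice]:+.2f}, "
          f"state-based {state_value(p_a, choice):+.2f}")
```

Under these assumptions, simple RL leaves B at the stale value it acquired when it was last punished, so switching to B looks no better than staying with A. The state-based filter instead treats the two stimuli as coupled through a single hidden state, so the punishments that lower the value of A simultaneously raise the value of B.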