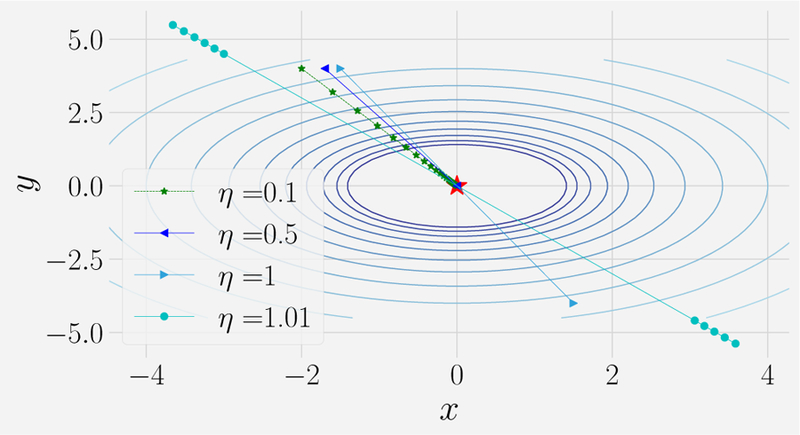

FIG. 7. Gradient descent exhibits three qualitatively different regimes as a function of the learning rate.

Result of gradient descent on the surface $z = x^2 + y^2 - 1$ for learning rates $\eta = 0.1, 0.5, 1.01$. For a small learning rate ($\eta = 0.1$), the trajectory converges to the global minimum over multiple steps. Increasing the learning rate ($\eta = 0.5$) causes the trajectory to oscillate around the global minimum before converging. For an even larger learning rate ($\eta = 1.01$), the trajectory diverges from the minimum. See the corresponding notebook for details.
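The regimes in the caption can be explored with a minimal sketch, assuming plain gradient descent (no momentum, no noise) on the surface exactly as written. The function names `surface`, `grad`, and `gradient_descent`, the starting point, and the step count are illustrative choices, not taken from the notebook. Under that assumption each update multiplies both coordinates by $(1 - 2\eta)$, so the iterate shrinks monotonically for $\eta < 0.5$, overshoots with alternating sign while still converging for $0.5 < \eta < 1$, and diverges for $\eta > 1$. In this idealized setting the exact value $\eta = 0.5$ lands on the minimum in a single step, so the oscillation shown in the figure may depend on details of the notebook that are not given here.

```python
import numpy as np


def surface(p):
    """Height of the surface z = x^2 + y^2 - 1 at the point p = (x, y)."""
    return p[0] ** 2 + p[1] ** 2 - 1.0


def grad(p):
    """Gradient of the surface: (dz/dx, dz/dy) = (2x, 2y)."""
    return 2.0 * p


def gradient_descent(start, eta, n_steps=20):
    """Run plain gradient descent and return every visited point.

    The result has shape (n_steps + 1, 2); row 0 is the starting point.
    """
    p = np.asarray(start, dtype=float)
    path = [p.copy()]
    for _ in range(n_steps):
        p = p - eta * grad(p)  # update rule: p <- p - eta * grad z(p)
        path.append(p.copy())
    return np.array(path)


# Distance from the global minimum at (0, 0) for several learning rates.
# eta = 0.75 is an extra value (not in the caption) added to show the
# overshoot-then-converge behaviour of this idealized setup.
for eta in (0.1, 0.5, 0.75, 1.01):
    path = gradient_descent([1.0, 1.0], eta)
    dist = np.linalg.norm(path, axis=1)
    print(f"eta={eta}: first steps {np.round(dist[:4], 4)}, final {dist[-1]:.4g}")
```

Plotting the rows of `path` over a contour plot of `surface` gives a picture comparable to the figure; the printed distances are enough to tell the regimes apart.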