Of the resources available to residency program directors considering applicants to interview, the Medical Student Performance Evaluation (MSPE) is the only one that contains information summarizing the medical student's entire experience throughout undergraduate medical education and potentially contains information on all 6 competencies. This information is derived from the student's preferred rotations and rotations in which the student may have had little interest. Thus, if this information can be conveyed accurately and efficiently, the MSPE can provide a counterweight to overreliance on numerical scores from multiple-choice examinations.

In May 2017, the Association of American Medical Colleges (AAMC) published Recommendations for Revising the Medical Student Performance Evaluation.1 The goal was to achieve “a level of standardization and transparency that facilitates the residency selection process.”1 For this goal to be achieved, writers of the MSPE would first need to implement the recommendations. Then, readers of the MSPE would need to find these recommendations helpful. Only then could the residency selection process be facilitated. This article describes the first of these steps. Because changing a school's MSPE involves discussion and preparation, it is likely that the October 2018 MSPEs, for the 2019 graduating class, were the first to fully implement the recommendations.2

The MSPE in 2018

The author surveyed 147 distinct MSPEs released to the Electronic Residency Application Service on October 1, 2018, from US medical schools granting a medical doctorate degree. When regional campuses issued an MSPE that differed in format, these were counted as distinct MSPEs. Osteopathic medical schools and medical schools outside the United States were not included in this survey. The 2018 results were compared to the results of a similar survey conducted in 2015, as the task force began its work.

Four MSPEs (3%) showed no influence of the recommendations of the task force. Two other schools stated they were in the process of revising their assessment systems to modify their MSPE.

The degree of acceptance of the AAMC's Recommendations for Revising the Medical Student Performance Evaluation among the 147 MSPEs surveyed is as follows:

1. Highlight the 6 ACGME core competencies when possible.

In 2018, 14 MSPEs provided graphic displays based on their medical school's competencies in the summary section. An additional 4 MSPEs had similar displays elsewhere. In contrast, only 1 MSPE provided a graphic display of competency attainment in 2015.

2. Include details on professionalism—both deficient and exemplary performance.

In 2015, only 12% of MSPEs formally commented on professionalism. These were scattered throughout the MSPE or in an appendix.

In 2018, 112 of 147 (76%) MSPEs reported on professionalism near the beginning of the Academic Progress section. An additional 7 MSPEs (5%) highlighted this in another part of the MSPE. The remaining 28 MSPEs (19%) did not seem to have an identifiable section regarding professionalism.

Most 2018 MSPEs included their school's criteria for professionalism. However, these definitions varied widely from school to school. A few individual MSPEs reported examples of deficient or exemplary performance.

3. Replace “Unique Characteristics” with “Noteworthy Characteristics.”

This change reflected the reality that many of the experiences listed as “Unique Characteristics” were often shared by large numbers of medical students and thus not “unique.” Instead, MSPE writers were requested to highlight experiences they felt readers of the MSPE should note.

In 2018, 139 of 147 (95%) MSPEs titled this section “Noteworthy Characteristics” and placed it soon after the identification section. One additional MSPE placed the Noteworthy Characteristics section just prior to the Summary.

4. Limit Noteworthy Characteristics to 3 bulleted items that highlight an applicant's salient experiences and attributes.

Of the 139 MSPEs that included this section near the start of the letter, 128 used a bulleted (or brief paragraph) format and 126 listed 3 points or fewer. Thus, 86% of the 147 MSPEs fully adopted these 2 recommendations regarding Noteworthy Characteristics.

Several MSPEs added a fourth bullet point that recognized selection to Alpha Omega Alpha or Gold Humanism Honor Society. Several MSPEs headlined the bullet points with phrases like “Global Health,” “Research,” or “First Generation,” which helped direct readers to these characteristics. Very few individual letters listed fewer than 3 noteworthy characteristics.

5. Locate comparative data in the body of the MSPE.

This recommendation sought to help readers contextualize the narrative comments from each rotation with the graphic representation of the individual student relative to the class as a whole. When MSPEs were read on paper, readers could hold the graphic representation found in an appendix separately as they read the narrative comments. This is simply not possible when MSPEs are read in an electronic format. In 2015, only a handful of MSPEs co-located the comparative data with the narrative description in the body of the MSPE.

In 2018, 111 of 147 (76%) MSPEs displayed a graph of the rotation grades co-located with the narrative description of the rotation within the body of the MSPE.

6. Include information on how final grades and comparative data are derived (ie, grading schemes).

In 2018, 104 of 147 (71%) MSPEs included the grading scheme of the rotation in the context of the narrative comments from the rotation. At least 5 schools used the same grading scheme for all clinical rotations. Thus, 89 of 147 (61%) MSPEs adopted both recommendations to co-locate the narrative description, the graphical representation, and the grading schemes within the body of the MSPE.
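The co-location figures for recommendations 5 and 6 can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes the three reported counts describe the same 147 MSPEs and that the 89 MSPEs adopting both recommendations are the overlap of the 111 and the 104. The variable names are hypothetical, and the "at least one" and "neither" totals are derived here, not reported in the survey.

```python
# Cross-check of the co-location counts reported for recommendations 5 and 6.
# Assumption: all three counts refer to the same 147 surveyed MSPEs, and
# "both" is the overlap of the two single-recommendation counts.
TOTAL_MSPES = 147

graphs_colocated = 111   # comparative graph placed with the rotation narrative
schemes_colocated = 104  # grading scheme placed with the rotation narrative
both = 89                # adopted both recommendations


def percent(count: int, total: int = TOTAL_MSPES) -> int:
    """Return count/total as a whole-number percentage."""
    return round(100 * count / total)


# Inclusion-exclusion: MSPEs that adopted at least one of the two.
at_least_one = graphs_colocated + schemes_colocated - both
neither = TOTAL_MSPES - at_least_one

print(f"graphs co-located:  {graphs_colocated} ({percent(graphs_colocated)}%)")
print(f"schemes co-located: {schemes_colocated} ({percent(schemes_colocated)}%)")
print(f"both:               {both} ({percent(both)}%)")
print(f"at least one:       {at_least_one} ({percent(at_least_one)}%)")
print(f"neither:            {neither} ({percent(neither)}%)")
```

The first three printed percentages reproduce the figures given in the text. The last two hold only under the overlap assumption stated above.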

7. Provide school-wide comparisons if using the final “adjective” or “overall rating” and define terms used.

The recommendation sought to reduce the use of undefined descriptions (so-called “code words” such as “outstanding” or “superior”), which would not add clarity unless a reader received many letters from the same school. In 2015, 25% of MSPEs used undefined “code words.”

In 2018, only 10 MSPEs (7%) appeared to use a “code word” without definition. Another 34 MSPEs (23%) provided no school-wide comparison, and most of these MSPEs stated clearly that they did not provide such comparisons. Of the 70% of MSPEs that did provide school-wide comparisons, 53 MSPEs used unequal categories (with graphic displays), 37 divided the class into self-evident categories (usually quartiles), 8 provided graphs comparing the number of honors earned school-wide, and 5 used class rank (eg, “This student ranked 213 out of a class of 214”). Over half of all MSPEs also provided an explanation of the grading scheme used for such ratings. Thus, 70% of MSPEs adopted this recommendation.

Of additional note is that 95% of MSPEs provided some method of comparing the student to the class as a whole. This comparison was placed in the Summary section, the Academic Progress section, or both. Only 8 MSPEs (5%) provided no comparison of the student to the class.
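The category counts reported for recommendation 7 can be tallied to confirm that they account for all 147 MSPEs and that the stated percentages follow from them. This is a minimal sketch, assuming the six categories are mutually exclusive (the text does not say so explicitly, although the counts sum to 147). The dictionary keys are shorthand labels introduced here for illustration.

```python
# Tally of the recommendation 7 categories reported in the text.
# Assumption: each MSPE falls into exactly one category.
TOTAL_MSPES = 147

categories = {
    "undefined code word": 10,
    "no school-wide comparison": 34,
    "unequal categories with graphic display": 53,
    "self-evident categories (usually quartiles)": 37,
    "graph of honors earned school-wide": 8,
    "class rank": 5,
}

# Categories that do NOT count as providing a school-wide comparison.
NON_ADOPTING = ("undefined code word", "no school-wide comparison")


def percent(count: int, total: int = TOTAL_MSPES) -> int:
    """Return count/total as a whole-number percentage."""
    return round(100 * count / total)


# MSPEs that provided a school-wide comparison (the remaining four categories).
with_comparison = sum(
    count for name, count in categories.items() if name not in NON_ADOPTING
)

for name, count in categories.items():
    print(f"{name}: {count} ({percent(count)}%)")
print(f"provided a school-wide comparison: {with_comparison} "
      f"({percent(with_comparison)}%)")
print(f"all categories: {sum(categories.values())} of {TOTAL_MSPES}")
```

The four comparison categories together give the 70% adoption figure quoted for this recommendation.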

8. Limit the MSPE to 7 single-spaced pages in 12-point font.

To assess acceptance of this recommendation, the number of pages used to describe an individual student's data was counted. The Medical School Information section and cover letters were not included in this count. Only letters for students who completed the medical doctorate within the standard time frame for that school (usually 4 years) were used to calculate the number of pages in the MSPE.

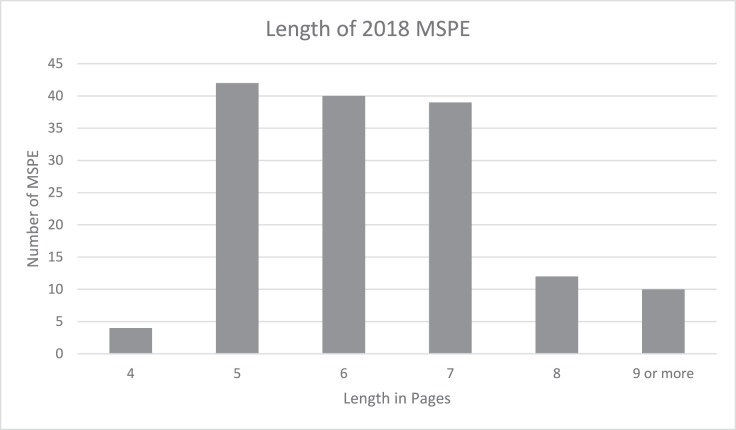

In 2018, the mean length of an MSPE was 6.3 pages with a standard deviation of 1.3. In 2015, the mean number of pages was 9.0 ± 2.3 (P < .001). Thus, 85% of MSPEs complied with the recommendation to limit the MSPE to 7 pages or fewer (see Figure).

Figure. MSPE Page Length in 2018

9. Include 6 sections: Identifying Information, Noteworthy Characteristics, Academic History, Academic Progress, Summary, and Medical School Information.

The vast majority of MSPEs adhered to this basic format. For example, 97% of MSPEs included an identification section.

Within Academic History, 91% of MSPEs noted education gaps, 90% noted remediation, and 90% noted adverse actions. Although this is not a change from the 2002 Dean's Letter Advisory Committee format, reporting of these data has increased since 2015, when these findings were included in 67%, 77%, and 73% of MSPEs, respectively.

Within Academic Progress, 97% commented on foundational sciences and 99% provided paragraph-length comments from individual clinical rotations. One exception was an MSPE that placed these comments in an appendix.

Many MSPEs provided a link to online content describing Medical School Information rather than including it as an appendix.

Observations

The results of this survey suggest that most 2018 MSPE authors are applying the Recommendations for Revising the Medical Student Performance Evaluation. The MSPE has become shorter; more likely to co-locate narratives, graphic comparisons, and grading schemes in the body of the MSPE; and less likely to contain undefined “code words.”

Large numbers of MSPEs for 2019 routinely reported information about gaps, remediation, adverse actions, and professionalism. This finding should reassure MSPE authors who have adopted these recommendations that their students are not being disadvantaged by transparency.

Overall, MSPE authors have implemented many of the recommendations. A recent survey of program directors appeared to show support for continuing the process of standardizing the MSPE.3

Many issues for continuing discussions by the medical education community remain. These include the lack of consensus regarding how schools define professionalism. There continues to be disagreement as to what degree of unprofessional behavior should be placed within the MSPE. There may not even be consensus among medical schools regarding the definition of remediation.

Continued discussions of the MSPE as a tool to facilitate the educational handoff of learners from undergraduate medical education to graduate medical education are essential in order to disseminate best practices and further improve the process.

References

- 1. Association of American Medical Colleges. Recommendations for Revising the Medical Student Performance Evaluation (MSPE). https://www.aamc.org/download/470400/data/mspe-recommendations.pdf. Accessed July 3, 2019.

- 2. Hook L, Salami AC, Diaz T, Friend KE, Fathalizadeh A, Joshi ART. The revised 2017 MSPE: better, but not “outstanding.” J Surg Educ. 2018;75(6):e107–e111. doi: 10.1016/j.jsurg.2018.06.014.

- 3. Brenner JM, Arayssi T, Conigliaro RL, Friedman K. The revised Medical School Performance Evaluation: does it meet the needs of its readers? J Grad Med Educ. 2019;11(4):475–478. doi: 10.4300/JGME-D-19-00089.1.