Abstract

Background

High-quality feedback is necessary for learners' development. It is most effective when focused on behavior and should also provide learners with specific next steps and desired outcomes. Many faculty struggle to provide this high-quality feedback.

Objective

To improve the quality of written feedback by faculty in a department of medicine, we conducted a 1-hour session that combined a novel framework based on the education literature, individual review of previously written feedback, and deliberate practice in writing comments.

Methods

Sessions were conducted between August 2015 and June 2018. Participants were faculty members who teach medical students, residents, and/or fellows. To measure the effects of our intervention, we surveyed participants and used an a priori coding scheme to determine how feedback comments changed after the session.

Results

Faculty from 7 divisions participated (n = 157). We surveyed 139 participants postsession and 55 (40%) responded. Fifty-three participants (96%) reported learning new information. To more thoroughly assess behavioral changes, we analyzed 5976 feedback comments for students, residents, and fellows written by 22 randomly selected participants before the session and compared these to 5653 comments written by the same participants 1 to 12 months postsession. Analysis demonstrated improved feedback content; comments providing nonspecific next steps decreased, and comments providing specific next steps, reasons why, and outcomes increased.

Conclusions

Combining instruction in a simple feedback framework with immediate review of the written comments that individual faculty members had previously provided to learners led to measurable improvement in written comments.

Introduction

Effective feedback, which can be written or verbal,1 supports the development of medical students, residents, and fellows. While verbal feedback is ideal, written feedback is very common and efficacious in medical education.2 As with verbal feedback, high-quality written feedback has well-documented characteristics. Effective feedback should be specific and focus on behavior, not the individual.1,3 It should provide reasons why the specific behavior should be improved and communicate concrete next steps.3,4

Despite these best practices, many faculty members provide low-quality written feedback. In 2 separate analyses, fewer than a quarter of the written comments provided by faculty were deemed effective.3,5 Faculty development can address these deficiencies, and previous interventions have resulted in modest gains.6

At our institution, students, residents, and fellows often commented that the feedback they received was limited and generally not useful. This became evident to residency and fellowship program leadership after clinical competency committees were formed. Committee members struggled to give learners substantive recommendations for improvement because the written feedback that faculty provided was substandard. Comments such as “read more” or “continue fellowship” gave learners no indication of their next steps. At the time, faculty neither reviewed nor received feedback on the quality of the feedback they provided.

We developed a novel feedback framework using the essential elements that Hattie and Timperley7 derived from their meta-analysis of the feedback literature. They identified feedback as the most important tool for moving learners from their current level of performance toward a stated goal. According to their analysis, effective feedback must answer 3 questions: Where am I going (how do I become a master clinician)? How am I doing now? What are my next steps? We hypothesized that a brief intervention would be sufficient to move our faculty from providing ineffective feedback to providing more effective feedback aligned with this framework. We studied whether a 1-hour session, in which faculty members compared their written feedback with a best-practice framework and practiced formulating better feedback using a case, could improve the feedback they provide to learners.

Methods

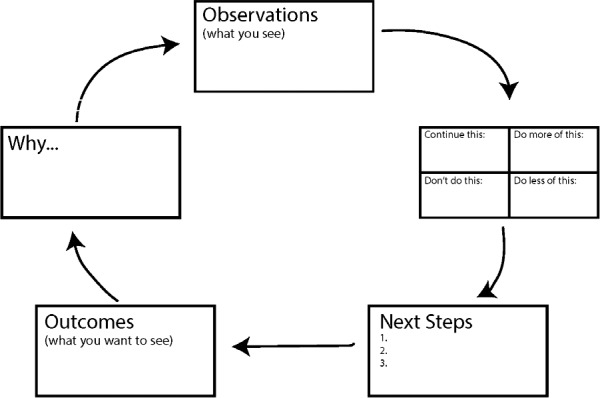

Using the feedback principles framework,7 we designed and implemented a faculty development session for members of the University of Wisconsin School of Medicine and Public Health (UWSMPH) Department of Medicine. One-hour sessions occurred during regularly scheduled division meetings to maximize participation. To fit into the typical division meeting structure, and because attention wanes considerably during longer sessions, we limited the intervention to 1 hour. Attendance was voluntary but encouraged by division heads for all teaching faculty. The divisions of general internal medicine, cardiology, hematology/oncology, hospital medicine, nephrology, infectious disease, and pulmonary/critical care participated between August 2015 and June 2018. Each session began with a presentation of basic learning theory. We then introduced a novel 5-step framework for effective written feedback, emphasizing the importance of observation and of offering learners specific next steps, expected outcomes, and reasons why those outcomes are desirable (Figure). Faculty participants independently reviewed a summary of their recent written comments, compared it with the framework, and reflected on areas to improve. Participants then reviewed a case description of a learner's behavior, wrote feedback using the framework, and discussed their perspectives with other participants. The session concluded with a discussion of departmental resources for providing high-quality feedback.8 A complete agenda and a sample case used in the sessions are available as online supplemental material.

Figure.

Framework for Effective Feedback

To evaluate the impact of the session, we assessed faculty perceptions of its utility with a 6-item survey, created by the authors and not further tested, that included three 5-point Likert-type items and 3 open-text boxes for comments. We also analyzed written feedback from participants before and after they attended a session. We coded 5976 comments written by 22 randomly selected faculty members from general internal medicine and hematology/oncology before the session and 5653 comments written by the same faculty members 1 to 12 months after the session. We selected faculty from these 2 divisions because of their frequent contact with and observation of learners across training levels in inpatient and outpatient settings.

The study team consisted of 3 senior residents, 1 chief resident, and 3 faculty members. Three of 4 team members (A.B.Z., J.S.T., M.K., M.M.M.) coded each evaluation. Coders were blinded to the identities of the evaluators and learners and to evaluation dates. After coding was complete, 1 study team member (A.B.Z.) calculated kappa coefficients to determine intercoder reliability using NVivo qualitative data analysis software (version 11.4.3, QSR International Pty Ltd, Doncaster, Victoria, Australia). Evaluations that did not reach κ = 0.7 for each code were adjudicated by 3 team members (A.B.Z., J.S.T., M.K.). We compared the percentage of total comments in each of the 4 categories shown in the Table before and after the session.
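The intercoder reliability check described above can be illustrated with a short sketch of Cohen's kappa for 2 coders applying a single yes/no code to the same comments. This is not the authors' computation, which was performed in NVivo; the ratings are hypothetical, the function name `cohens_kappa` is our own, and summarizing agreement among 3 coders as pairwise Cohen's kappa is an assumption. Only the 0.7 threshold comes from the text.

```python
from collections import Counter
from typing import Hashable, Sequence

ADJUDICATION_THRESHOLD = 0.7  # cut-off stated in the Methods text


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders who labeled the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected by chance from each coder's label frequencies.
    """
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("coders must label the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items given the same label by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: for each label, multiply the two coders' marginal
    # proportions, then sum over labels.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both coders used one identical label for every item: perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings: 1 = comment coded "specific next step", 0 = not coded.
coder_1 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
coder_2 = [1, 0, 0, 0, 1, 0, 1, 0, 1, 0]

kappa = cohens_kappa(coder_1, coder_2)
print(f"kappa = {kappa:.2f}; adjudicate: {kappa < ADJUDICATION_THRESHOLD}")
```

With these hypothetical ratings the coders agree on 8 of 10 comments (observed agreement 0.80), but chance agreement is 0.52, so kappa is about 0.58 and the comments would be adjudicated. This is why a chance-corrected statistic is preferred over raw percent agreement when one label (here, "not coded") dominates.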

Table.

Percentage of Total Comments Within Each Feedback Category Among 22 Faculty Participants Before and After the Faculty Development Session

| Subject of Comment | Before Session, % of Total Comments (N = 5976) | After Session, % of Total Comments (N = 5653) |
| --- | --- | --- |
| Nonspecific next step | 13.5 | 10.4 |
| Specific next step | 9.9 | 11.5 |
| Reasons why | 1.9 | 3.3 |
| Outcomes | 0.2 | 0.6 |

The University of Wisconsin Education and Social/Behavioral Science Institutional Review Board determined that the project activities did not qualify as research and were exempt from ongoing oversight.

Results

We presented our session to faculty from 7 divisions in the UWSMPH Department of Medicine (n = 157). Immediately after the session, we e-mailed a survey to participants from 6 divisions (n = 139), and 55 (40%) responded. Sixty-five percent (36 of 55) rated the session extremely or very informative, 96% (53 of 55) reported learning new information, and 93% (51 of 55) stated that they would change how they write feedback.

Analysis showed that the proportion of comments offering learners nonspecific next steps declined from presession to postsession (13.5% vs 10.4%; Table). The proportions of comments offering specific next steps (9.9% vs 11.5%), reasons why (1.9% vs 3.3%), and outcomes (0.2% vs 0.6%) increased.
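The size of these changes can be made explicit by subtracting the presession percentage from the postsession percentage for each category in the Table. The sketch below performs only that arithmetic on the published percentages; the names `TABLE` and `percentage_point_change` are our own, and it makes no claim about statistical significance, which this report does not describe.

```python
# Percentages of total comments from the Table: (before session, after session).
TABLE: dict[str, tuple[float, float]] = {
    "Nonspecific next step": (13.5, 10.4),
    "Specific next step": (9.9, 11.5),
    "Reasons why": (1.9, 3.3),
    "Outcomes": (0.2, 0.6),
}


def percentage_point_change(table: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return the after-minus-before change for each category, in percentage points."""
    # Round to 1 decimal place to match the precision of the published Table
    # and to remove floating-point noise from the subtraction.
    return {
        category: round(after - before, 1)
        for category, (before, after) in table.items()
    }


for category, change in percentage_point_change(TABLE).items():
    print(f"{category}: {change:+.1f} percentage points")
```

All 4 changes are small in absolute terms (at most about 3 percentage points), which fits the description of a small but measurable improvement; the outcomes category triples in relative terms, but from a very low base.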

Discussion

Following the session, we saw changes in the content of some of our faculty members' written feedback to learners. After the intervention, faculty members' comments more often offered specific next steps, reasons why, and desired outcomes. This is promising given the brevity of the session.

Other studies of brief interventions have reported increases in specific feedback and in the amount of feedback given. Salerno and colleagues9 found these improvements after a 90-minute faculty development session with ambulatory preceptors, and Holmboe and colleagues6 saw similar results after a 20-minute session with inpatient attendings. Both studies limited their observations to resident teaching. We achieved similar results while reaching a larger number of faculty across our department and learners at all levels, from students to fellows.

Because this project was conducted in 1 department at 1 institution, the findings may not generalize to other specialties and settings. The survey response rate was low, so respondents may have been faculty who were more interested or invested in improving their feedback. In addition, the survey was not tested, so respondents may not have interpreted the questions as we intended.

Sustaining improvements in feedback may require continued faculty development; however, the type and frequency of booster training are not known. A designated committee continues to review faculty members' written feedback for application of the session principles and to provide feedback to faculty.

Conclusion

This brief intervention, using a specific framework for effective feedback, faculty review of their own evaluation comments, and immediate practice with the framework, resulted in small but measurable improvement in written feedback by faculty from multiple specialties.

Supplementary Material

References

1. Archer JC. State of the science in health professional education: effective feedback. Med Educ. 2010;44(1):101–108. doi: 10.1111/j.1365-2923.2009.03546.x.

2. Bing-You R, Hayes V, Varaklis K, Trowbridge R, Kemp H, McKelvy D. Feedback for learners in medical education: what is known? A scoping review. Acad Med. 2017;92(9):1346–1354. doi: 10.1097/ACM.0000000000001578.

3. Shaughness G, Georgoff PE, Sandhu G, Leininger L, Nikolian VC, Reddy R, et al. Assessment of clinical feedback given to medical students via an electronic feedback system. J Surg Res. 2017;218:174–179. doi: 10.1016/j.jss.2017.05.055.

4. Ramani S, Krackov SK. Twelve tips for giving feedback effectively in the clinical environment. Med Teach. 2012;34(10):787–791. doi: 10.3109/0142159X.2012.684916.

5. Canavan C, Holtman MC, Richmond M, Katsufrakis PJ. The quality of written comments on professional behaviors in a developmental multisource feedback program. Acad Med. 2010;85(suppl 10):106–109. doi: 10.1097/ACM.0b013e3181ed4cdb.

6. Holmboe ES, Fiebach NH, Galaty LA, Huot S. Effectiveness of a focused educational intervention on resident evaluations from faculty. J Gen Intern Med. 2001;16(7):427–434. doi: 10.1046/j.1525-1497.2001.016007427.x.

7. Hattie J, Timperley H. The power of feedback. Rev Educ Res. 2007;77(1):81–112. doi: 10.3102/003465430298487.

8. University of Wisconsin School of Medicine and Public Health, Department of Medicine. The COACH Program: Overview. www.medicine.wisc.edu/coach. Accessed June 5, 2019.

9. Salerno SM, Jackson JL, O'Malley PG. Interactive faculty development seminars improve the quality of written feedback in ambulatory teaching. J Gen Intern Med. 2003;18(10):831–834. doi: 10.1046/j.1525-1497.2003.20739.x.
