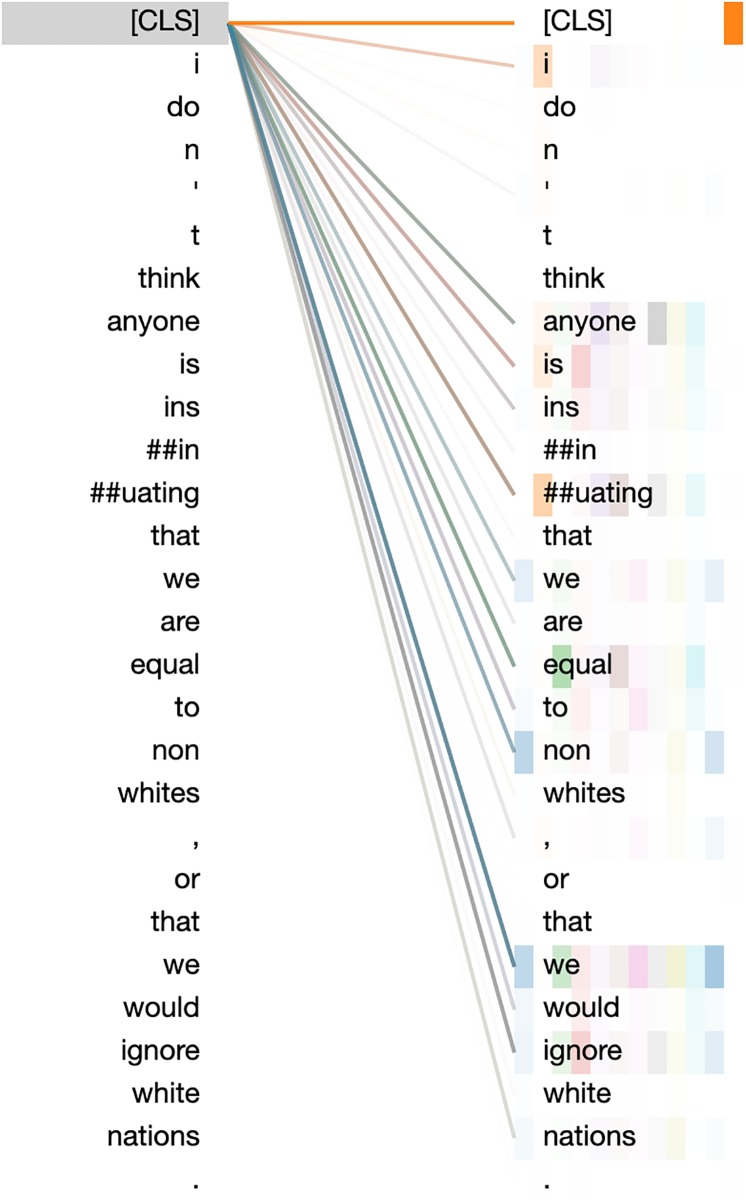

Fig 1. Self-attention weights for the classification token of the trained BERT model for a sample post.

Each color represents a different attention head, and the lightness of the color encodes the attention weight that head assigns from the classification token to each token in the post. For instance, the figure shows that nearly all attention heads attend heavily to the term ‘we’.
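The quantity plotted for each head can be sketched with scaled dot-product attention restricted to the classification token's query: for head h, the weight on token j is the softmax over j of q_CLS · k_j / √d. The snippet below is a minimal illustration under stated assumptions, not the trained model's computation. The tokens, the three heads, and the 2-dimensional query and key vectors are made up, and the helper names `cls_attention` and `heads_focusing_on` are hypothetical. It also counts how many heads put their largest weight on a given token, which is the kind of reading behind "nearly all heads focus on ‘we’".

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cls_attention(cls_queries: Sequence[Vector],
                  keys: Sequence[Sequence[Vector]]) -> List[List[float]]:
    """Scaled dot-product attention from the classification token.

    cls_queries[h] is the classification token's query vector in head h;
    keys[h][j] is the key vector of token j in head h.
    Returns weights[h][j], the weight head h's CLS query puts on token j.
    """
    weights = []
    for q, head_keys in zip(cls_queries, keys):
        scale = math.sqrt(len(q))
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in head_keys]
        weights.append(softmax(scores))
    return weights


def heads_focusing_on(weights: Sequence[Sequence[float]],
                      tokens: Sequence[str], target: str) -> int:
    """Count heads whose largest CLS attention weight falls on `target`."""
    count = 0
    for row in weights:
        top = max(range(len(row)), key=lambda j: row[j])
        if tokens[top] == target:
            count += 1
    return count


# Hypothetical toy input: 3 heads, 2-dimensional queries/keys, made-up tokens.
TOKENS = ["[CLS]", "we", "love", "it", "[SEP]"]
CLS_QUERIES = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
KEYS = [
    [[0.0, 0.0], [3.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 0.0]],   # head 0
    [[0.0, 0.0], [0.0, 2.0], [0.0, 1.0], [1.0, 0.0], [0.0, -1.0]],  # head 1
    [[0.0, 0.0], [0.0, 0.0], [2.0, 2.0], [1.0, 0.0], [0.0, 0.0]],   # head 2
]

weights = cls_attention(CLS_QUERIES, KEYS)
for h, row in enumerate(weights):
    print(f"head {h}: " + "  ".join(f"{t}={w:.2f}" for t, w in zip(TOKENS, row)))
# Heads 0 and 1 peak on "we"; head 2 peaks on "love".
print("heads peaking on 'we':", heads_focusing_on(weights, TOKENS, "we"))
```

Each head's row sums to 1, so in a plot like Fig 1 the row would map directly onto the lightness of that head's color across the tokens. For a real model, the Hugging Face `transformers` library returns these per-head weights when the model is called with `output_attentions=True`. The classification-token row of layer `l` is then `outputs.attentions[l][0, :, 0, :]`, with shape `(num_heads, seq_len)`. The caption does not state which layer the figure uses.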