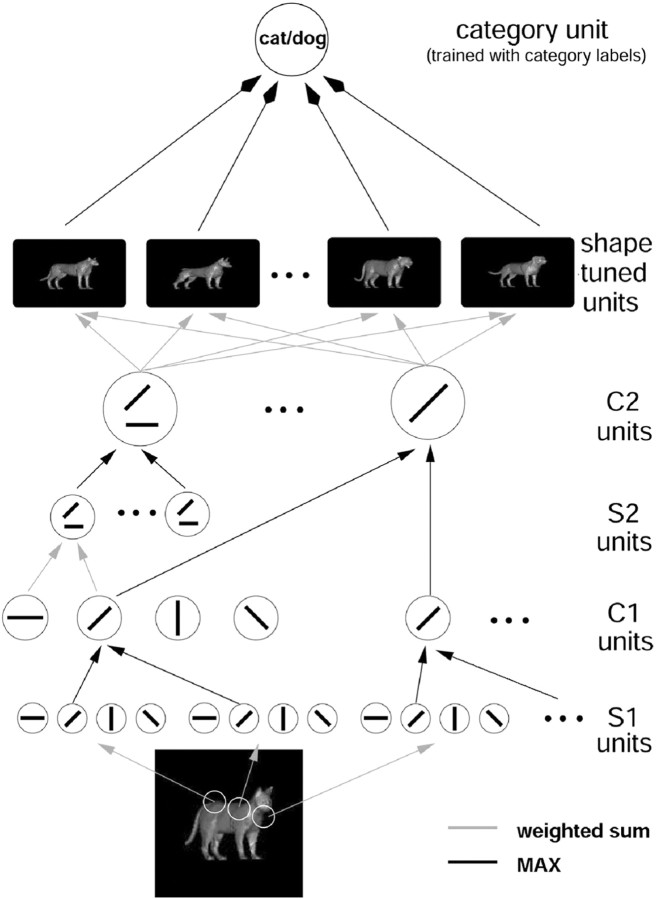

Figure 7.

This figure shows a model of the recognition architecture in cortex (Riesenhuber and Poggio, 1999) that combines and extends several recent models (Fukushima, 1980; Perrett and Oram, 1993; Wallis and Rolls, 1997) and effectively summarizes many experimental findings (for review, see Riesenhuber and Poggio, 2002). It is a hierarchical extension of the classical paradigm (Hubel and Wiesel, 1962) of building complex cells from simple cells. The specific circuitry that we proposed consists of a hierarchy of layers with linear units (“S” units, performing template matching, gray lines) and nonlinear units (“C” pooling units, performing a “MAX” operation, black lines, in which the response of the pooling neuron is determined by its maximally activated afferent). These two types of operations provide, respectively, pattern specificity, by combining simple features to build more complex ones, and invariance: to translation, by pooling over afferents tuned to the same feature at different positions, and to scale (data not shown), by pooling over afferents tuned to the same feature at different scales. Shape-tuned model units (STUs) exhibit tuning to complex shapes but are tolerant to scaling and translation of their preferred shape, like view-tuned neurons found in ITC (cf. Logothetis et al., 1995). STUs can then serve as input to task modules located farther downstream, e.g., in PFC, that perform different visual tasks such as object categorization. These modules consist of the same generic learning process but are trained to perform different tasks. For more information, see http://www.ai.mit.edu/projects/cbcl/hmax.
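
The two operations described in the caption can be illustrated with a minimal sketch. It is not the published model: it assumes a one-dimensional input, a single hand-picked template, and an S unit computed as a plain weighted sum, and the names `s_unit`, `s_layer`, and `c_unit` are hypothetical. It shows only how MAX pooling over afferents tuned to the same feature at different positions yields a translation-tolerant response.

```python
from typing import List, Sequence


def s_unit(patch: Sequence[float], template: Sequence[float]) -> float:
    """Linear "S" unit (assumed form): weighted sum of afferents, i.e. template matching."""
    return sum(p * t for p, t in zip(patch, template))


def s_layer(signal: Sequence[float], template: Sequence[float]) -> List[float]:
    """Apply the same template at every position of a 1-D input."""
    width = len(template)
    return [
        s_unit(signal[i:i + width], template)
        for i in range(len(signal) - width + 1)
    ]


def c_unit(afferents: Sequence[float]) -> float:
    """Nonlinear "C" unit: response equals that of the maximally activated afferent."""
    return max(afferents)


# Illustrative feature and two inputs containing it at different positions.
template = [1.0, -1.0, 1.0]
signal_a = [1.0, -1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
signal_b = [0.0, 0.0, 0.0, 0.0, 1.0, -1.0, 1.0, 0.0]

s_a = s_layer(signal_a, template)  # position-specific responses
s_b = s_layer(signal_b, template)

c_a = c_unit(s_a)  # pooled response, tolerant to the shift
c_b = c_unit(s_b)

print("S responses differ:", s_a != s_b)
print("C responses match:", c_a == c_b)
```

In this toy setting the S-layer responses depend on where the feature appears, while the C unit pooling over them responds identically to both inputs. Pooling over afferents tuned to the same feature at different scales would follow the same pattern.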