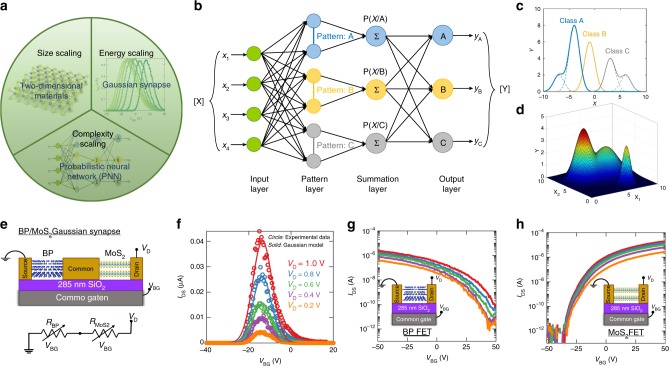

Fig. 1.

Gaussian synapse-based probabilistic neural network (PNN). a Resurrection of three quintessential scaling aspects of computation, i.e., complexity scaling through PNNs, size scaling through atomically thin 2D materials, and energy scaling through analog Gaussian synapses. b Schematic representation of a PNN comprising a pattern layer and a summation layer for mapping any input pattern to any number of output classifications. c Gaussian probability density functions (PDFs) facilitating seamless and accurate classification of complex patterns with arbitrarily shaped decision boundaries. d Multivariate Gaussian kernel for mapping higher-dimensional functions. e Schematic of the two-transistor Gaussian synapse based on heterogeneous integration of n-type MoS2 and p-type black phosphorus (BP) back-gated field-effect transistors (FETs). The equivalent circuit diagram consists of two variable resistors connected in series. f Transfer characteristics, i.e., the drain current versus back-gate voltage, of the Gaussian synapse for different drain voltages. The experimental data (circles) can be modeled by Gaussian distributions (solid lines). g Transfer characteristics of the p-type BP FET. h Transfer characteristics of the n-type MoS2 FET.
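
The pattern-layer and summation-layer structure described in panel b can be illustrated with a minimal software sketch of a textbook Parzen-window PNN. This is not the hardware implementation shown in the figure: the function name `pnn_classify`, the isotropic kernel width `sigma`, and the toy training data are all assumptions made for illustration only.

```python
import numpy as np

def pnn_classify(x, train_X, train_y, sigma=0.5):
    """Classify x with a textbook PNN using isotropic Gaussian kernels.

    sigma is an assumed, hand-picked kernel width (not taken from the paper).
    """
    x = np.asarray(x, dtype=float)
    train_X = np.asarray(train_X, dtype=float)
    train_y = np.asarray(train_y)

    # Pattern layer: one Gaussian kernel centered on each stored sample.
    sq_dist = np.sum((train_X - x) ** 2, axis=1)
    activations = np.exp(-sq_dist / (2.0 * sigma ** 2))

    # Summation layer: average the kernel activations within each class.
    # The Gaussian normalization constant is omitted because it is the
    # same for every class when a single sigma is shared.
    classes = np.unique(train_y)
    scores = np.array([activations[train_y == c].mean() for c in classes])

    # Decision: the class with the largest estimated density wins.
    return classes[np.argmax(scores)], scores

# Toy two-class data set (hypothetical values).
train_X = [[0.0, 0.0], [0.0, 1.0], [3.0, 3.0], [3.0, 4.0]]
train_y = [0, 0, 1, 1]

label_a, scores_a = pnn_classify([0.2, 0.3], train_X, train_y)
label_b, scores_b = pnn_classify([3.1, 3.2], train_X, train_y)
```

In this sketch each stored training sample plays the role of one Gaussian kernel, and the per-class averages act as the class-conditional density estimates that the summation layer compares.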