Abstract

Patients with heart failure (HF) are commonly implanted with cardiac resynchronization therapy (CRT) devices as part of their treatment. Presently, they cannot directly access the remote monitoring (RM) data generated from these devices, representing a missed opportunity for increased knowledge and engagement in care. However, electronic health data sharing can create information overload issues for both clinicians and patients, and some older patients may not be comfortable using the technology (e.g., computers and smartphones) necessary to access this data. To mitigate these problems, patients can be directly involved in the creation of data visualizations tailored to their preferences and needs, allowing them to successfully interpret and act upon their health data. We held a participatory design (PD) session with seven adult patients with HF and CRT device implants, who were presently undergoing RM, along with two informal caregivers. Working in three teams, participants used drawing supplies and design cards to design a prototype for a patient-facing dashboard with which they could engage with their device data. Information that patients rated as a high priority for the “Main Dashboard” screen included average percent pacing with alerts for abnormal pacing, other device information such as battery life and recorded events, and information about whom to contact with data-related questions. Preferences for inclusion in an “Additional Information” display included a daily pacing chart, health tips, aborted shocks, a symptom list, and a journal. These results informed the creation of an actual dashboard prototype which was later evaluated by both patients and clinicians. Additionally, important insights were gleaned regarding the involvement of older patients in PD for health technology.

Keywords: heart failure, cardiac resynchronization therapy devices, consumer health informatics, health services for the aged, data visualization, human–computer interaction

Background and Significance

A large and increasing population of patients with heart failure (HF), primarily older adults, are treated with cardiovascular implantable electronic devices (CIEDs) that deliver cardiac resynchronization therapy (CRT). 1 While most CIEDs support both scheduled and on-demand remote monitoring (RM) to transmit device data to the clinic, some action from the patient is usually necessary, including keeping the bedside transmitter plugged in, turned on, and within range. Participation in RM results in improved outcomes (i.e., increased survival), particularly among those who are more adherent. 2 However, research suggests that over half (53%) of patients with RM-capable devices do not participate at all, and, among those who do, most (70.6%) are adherent less than half of the time. 2 This can delay necessary clinical responses to RM data, including device adjustment or changes to drug therapy. 2

Patients with CIEDs desire more information and support related to their device postimplantation 3 ; currently, they do not directly receive the data generated by RM. 4 5 6 Patient advocates have expressed concern that they can track other health data (e.g., with fitness trackers or sleep monitors) but cannot directly monitor the device permanently implanted in their body. 7 Providing access to this data allows a feedback loop from transmission back to patient, 8 increasing awareness of device functioning and potentially promoting higher engagement in care, incentivizing continued RM adherence, and allowing patients to initiate contact with the clinic for a faster response to data, if necessary. Among the multitude of RM data, certain types that are clinically monitored can also be useful to patients. In the case of CRT to treat HF, one example is ventricular pacing, or the proportion of time that the device appropriately delivers stimuli to heart muscle. Pacing proportions of 93% or above are optimal; lower levels signal the need for follow-up. 9

However, before patients are granted access to their data, it must be ensured that they can meaningfully interpret and apply it. At present, RM data are intended to be reviewed by trained clinicians; they are complex, with hundreds of data elements, and can pose information overload issues even for clinical experts. 10 11 Additionally, most patients with CIEDs are over age 65, 1 and thus may be less adept at using technology and more likely to have impairments that hinder visual perception or comprehension (e.g., age-related cognitive issues, degradation of color perception ability). 12 13 Despite these challenges, health data can benefit older adults by contributing to continued independence and engagement in their care. 14 Thus, older adult patients should be involved in both the early stages of design and in ongoing usability testing of health technologies for which they are the target users. 12 15

One such method of engaging individuals is participatory design (PD), which originated to allow workers with minimal computer expertise to participate in the design of novel workplace technology to ensure it met their needs. 16 17 PD is both design and research—it uses research methods (e.g., ethnographic observations, interviews, analysis) to iteratively construct a design, and participants' interpretation of the process and result is an essential aspect of the methodology. 16 Prior research has shown that PD can successfully engage older adults in design work. 15 18 Though older adults are stereotyped as less technologically aware or competent, this group is truly diverse in their abilities, 15 and PD does not require the participant to be a technology expert, focusing instead on eliciting user needs to create a tailored, meaningful, and useful human–computer interaction. 16 18

Such methodology allows patients to drive the development of visual analytics to include simplified, personalized, and actionable data visualization. 19 20 PD can be particularly useful when creating visual analytics tools, compared with purely quantitative approaches, as it allows for deeper insight and real-time suggestions to overcome design problems. 21 A recent report recommended the use of PD in the medical field when enhancing clinical workflow, noting that data visualization represents an opportunity to improve patient data access. 22 Medical research has successfully utilized PD for a range of health-related innovations (e.g., electronic health record optimization, diabetes maintenance care, apps for use in schizophrenia care). 15 16 23 24 Recent research has also demonstrated the value of PD to elicit content preferences in visual analytics for clinician-facing RM interfaces, though not specific to CIEDs. 25 We have chosen to apply this method to a patient-facing CRT RM interface in the present work.

Objectives

This article describes the process and results of utilizing PD, as part of a larger design study, to engage individuals in patient-driven development of a CRT RM data dashboard intended for use in an electronic health record patient portal or mobile application. We share how the PD approach guided older adults toward applying prior experience with data used in everyday life to create a technology-based representation of their RM data. The present work was executed with the goal of creating a dashboard prototype to be evaluated in future usability testing sessions.

In this article, we address the following questions: how do older adults with HF and their caregivers prefer to visualize key implantable CRT device data, and how do they prioritize this information? We also report our observations from engaging older adults in a PD process for eliciting data visualization preferences.

Methods

We conducted a PD session with nine older adults: seven patients with HF and implanted CRT devices, and two of their informal caregivers. The PD phase of the study is part of a larger iterative design process, preceded by focus groups and later followed by usability testing and a pilot trial delivering real, up-to-date RM data to participants. The methods and materials for this PD session were designed by the User Interface Design expert on the research team and informed by four prior focus groups in which participants shared preferences regarding the content, timing, and delivery method of device data and other health-related information. 26 The content of the materials used in the PD session was limited to these preferences; however, alternate visual presentations were created for each item, and blank cards were provided for new ideas. The goal of designing a dashboard contained within MyChart (Epic's personal health record [PHR]) was also informed by focus group findings: participants preferred to receive urgent communication and alerts via text message and phone calls, but MyChart was their choice for detailed health data and educational content.

Setting and Participants

Participants for the PD session were recruited from within the sample of aforementioned focus group participants. During the prior focus group phase, 24 participants (16 patients and 8 informal caregivers) were recruited from a large not-for-profit cardiology clinic in the Midwest. The device clinic, part of ambulatory care, provided a list of adult patients with implantable CRT devices. A research nurse (author S.W.) screened these records for patients with HF with reduced ejection fraction. Patients were contacted by phone by the research nurse and invited to participate in the focus groups along with an informal caregiver of their choosing. During the focus group informed consent process, researchers asked if participants would be willing to participate in a design session to be held later. Those who agreed were later contacted and invited to participate in the group PD session ( n = 9). All participants provided additional informed consent before the design session and received $40 on a reloadable prepaid debit card (ClinCard) for their participation. All study activities were approved by the Parkview Health Institutional Review Board.

At the prior focus groups, participants completed a survey that included: a demographics form, the Altarum Consumer Engagement (ACE) 27 scale, and the Newest Vital Sign (NVS). 28 The 12-item ACE survey tool is a validated measure of patient engagement in which respondents use a 5-point Likert-type scale (1 = strongly disagree, 5 = strongly agree) to self-report health behaviors across three domains: Navigation (comfort/skill using the health system), Commitment (capability to self-manage health), and Informed Choice (seeking out and acting upon health-related information). 27 The NVS assesses health literacy by requiring respondents to interpret a nutrition label; those who correctly answer four or more of six questions are considered to have “adequate” literacy. 28
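The NVS scoring rule described above can be sketched as a small function. This is an illustrative sketch, not the study's scoring code: the threshold of four or more correct answers for "adequate" literacy comes from the text, while the lower cutoffs (0 to 1 and 2 to 3 correct answers) are an assumption based on the commonly published NVS scoring bands. The function name `nvs_category` is hypothetical; the category labels mirror those used in Table 1.

```python
def nvs_category(correct_answers: int) -> str:
    """Map the number of correct NVS answers (0-6) to a literacy category."""
    if not 0 <= correct_answers <= 6:
        raise ValueError(f"NVS score must be between 0 and 6, got {correct_answers}")
    if correct_answers >= 4:
        # Threshold stated in the text: four or more of six is "adequate".
        return "Adequate literacy"
    if correct_answers >= 2:
        # Assumed cutoff (commonly published NVS scoring): 2-3 correct.
        return "Possibility of limited literacy"
    # Assumed cutoff (commonly published NVS scoring): 0-1 correct.
    return "High likelihood of limited literacy"


if __name__ == "__main__":
    for score in range(7):
        print(score, nvs_category(score))
```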

The PD session included nine participants. Five patients participated individually; the other two each participated as a dyad with an accompanying caregiver. The group was divided into three smaller teams to increase collaboration between individuals and invite divergent ideas, and then brought back together for a group consensus discussion to ensure agreement among all teams. 29 Because of their shared experiences, each dyad was kept together, one in Team 1 and the other in Team 2. Otherwise, the team members were randomly chosen. Each team was seated at a separate table; tables were arranged in a half circle, facing a large display on the wall.

The session was facilitated by research staff, including three moderators (a research scientist, a user experience research specialist, and a human–computer interaction Master of Science student), two observers (an informatics research coordinator and a research project manager), and a cardiology research nurse.

Materials

Each team was provided with the following to design their dashboard:

○ One large paper board to represent the dashboard with two distinct areas: the Main Dashboard (for high-priority information) and the Additional Information display.

○ A stack of cards containing both blank cards and printed sample content cards.

○ A set of crafting supplies, including colored markers, pencils, sticky notes, and adhesive tape ( Fig. 1 ).

Fig. 1.

Example of materials provided in the participatory design session, including dashboard placeholder, information cards, blank cards, and drawing supplies.

Procedure

The PD session consisted of four main components across approximately 3 hours: a practice design session, an educational review, the main PD activity, and group consensus. The objectives and process were clearly communicated to the participants. The session was audio and video recorded with participant consent.

Practice design session (20 minutes): A session moderator (author R.A.) displayed an image of a car dashboard (chosen because of its universal familiarity) and asked each team to design their ideal car dashboard using the supplies provided. This practice activity was designed to introduce the participants to the format of the main activity, inspire creativity, and function as an ice-breaker for the teams. At the end of the activity, each team was given 2 minutes to present their dashboard to the group.

Review of key HF concepts and percent CRT pacing (10 minutes): The cardiology research nurse (author S.W.) presented an educational PowerPoint to the participants with basic information regarding HF and implantable CRT devices. This presentation included an explanation of percentage pacing, a key data point from RM reports. Ideally, the device should pace at least 93% of the time, and pacing below 85% is cause for concern; a stoplight metaphor (red, yellow, and green) was used to divide percent pacing into alert zones, with 93 to 100% pacing as the green zone, 85 to 92% the yellow zone, and 0 to 84% the red zone. 26 This metaphor has been shown in prior research to be easily understood by similar patient populations in health data design work. 30 The action related to each zone was also explained to patients: the green zone requires no action, and the red zone requires one clear action: calling the device clinic. The yellow zone, however, requires patients to self-monitor for potential warning signs of worsening heart or device function to determine whether action is needed.
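The stoplight zones and their associated actions can be sketched in code as follows. This is a minimal illustration rather than the dashboard's actual implementation: the zone boundaries and actions are taken from the description above, while the names `PacingZone` and `classify_pacing` are hypothetical, and the treatment of fractional values (for example, 92.5% falling in the yellow zone) is an assumption, since the text gives whole-number ranges only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PacingZone:
    color: str
    action: str


# Zones and actions as described in the educational review.
GREEN = PacingZone("green", "No action needed.")
YELLOW = PacingZone(
    "yellow",
    "Self-monitor for warning signs of worsening heart or device function.",
)
RED = PacingZone("red", "Call the device clinic.")


def classify_pacing(percent: float) -> PacingZone:
    """Map a percent-pacing value (0-100) to its stoplight zone."""
    if not 0 <= percent <= 100:
        raise ValueError(f"percent pacing must be between 0 and 100, got {percent}")
    if percent >= 93:
        return GREEN
    # Assumption: values between whole-number boundaries (e.g., 92.5)
    # fall into the lower zone.
    if percent >= 85:
        return YELLOW
    return RED


if __name__ == "__main__":
    # The scenario used in the main PD activity: a 3-week average of 91%.
    zone = classify_pacing(91)
    print(zone.color, "-", zone.action)
```

Keeping the action text alongside the zone reflects the session's emphasis on telling patients what to do with a value, not only how to categorize it.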

-

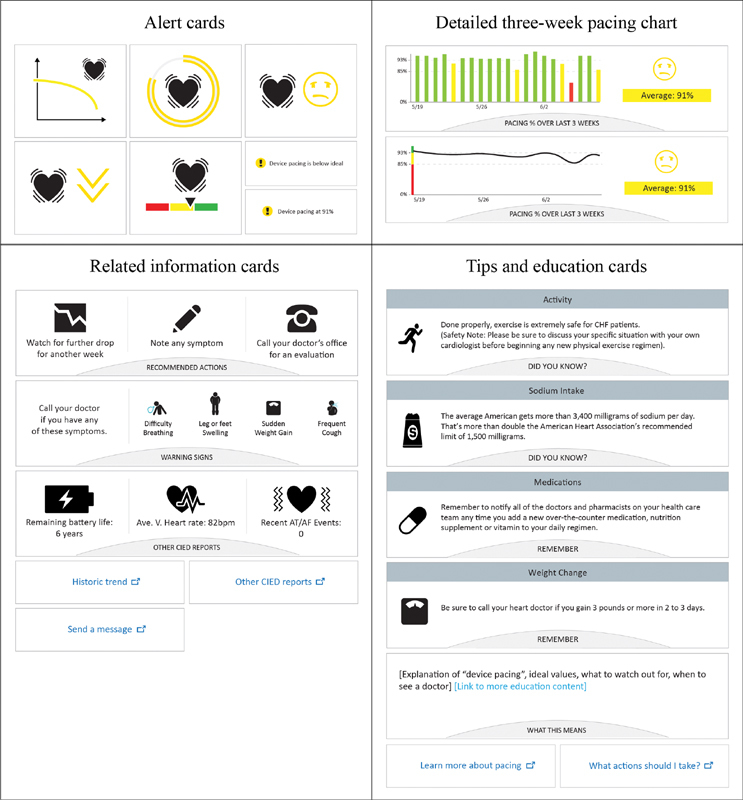

PD activity (∼60 minutes): The session began with instructions (20 minutes). The moderator (R.R.G.) asked participants to assume their 3-week average pacing was 91%, or yellow zone. Of the three zones, the yellow zone calls for the widest range of supplemental data and information, because patients must monitor their condition and decide whether to take action. To provide context for when and how participants might use the dashboard they were designing, they were shown a screenshot of the PHR with which they were already familiar (MyChart) showing where the dashboard, if implemented, would be accessible: on its own tab, among existing resources such as refill requests and test results. Participants were told that the dashboard would have a high-priority content area (“Main Dashboard”) that could be expanded to reveal an “Additional Information” display, as well as link externally to other resources. Among the design materials, there were four different types of printed cards with varying information and visualizations: alerts, detailed pacing charts, related information, and tips and education. All available cards are displayed in Fig. 2 .

Alert cards had a yellow circle with an exclamation point in the bottom left corner. The text on the card said either: “Device pacing is below ideal,” or “Device pacing is at 91%.” There were five different graphics which could indicate the warning, as displayed in Fig. 2 .

Detailed 3-week pacing chart cards showed two options for displaying the trend of CRT pacing data over the course of 3 weeks.

Related information cards included recommended actions, other CIED data reports, and physical warning signs (e.g., shortness of breath), and links to send a message, see additional CIED data, and see a historical trend.

Tips and education cards included four sample educational information cards (“Activity,” “Sodium Intake,” “Medications,” and “Weight Change”), intended to be used together as rotating tips. Other cards included what pacing means and links to additional information patients might want to see to help them understand or decide what to do when their percent pacing was at 91%.

The icons and symbols used on the cards were displayed to participants in the PowerPoint presentation, and the lead moderators (R.R.G. and R.A.) facilitated an open discussion about the icons to ensure general understanding among the group. The only specific instructions given to participants were: (1) to design for a pacing alert of 91%, or yellow zone; and (2) to work within the drawn rectangle boundaries of the Main Dashboard and the Additional Information display. Blank cards were provided so that participants could create custom text and graphics based on their preferences, with the goal of designing the most intuitive representations. Further, participants were encouraged to write directly on the cards to modify them if desired. Once all the groups completed their designs (∼30 minutes), participants were encouraged to walk around and look at the other teams' dashboards, ask questions, and discuss for about 5 minutes.

Group consensus process (20 minutes): Following the design activity, all teams were invited to share their designs, with the goal of reaching group consensus toward a single design. The group consensus process utilized principles from nominal group technique (NGT) 31 to converge separately constructed ideas and prioritize as a group. Group consensus techniques have been applied in prior PD research 29 and several examples of modified NGT exist that were applied toward idea generation in health care research. 32 33 In this case, idea generation involved small PD teams instead of individual “silent generation” typical to NGT, to accommodate for the participants' lack of prior experience with RM data. The moderators led a round-robin presentation of each team's dashboards, followed by group discussion clarifying choices and finally prioritizing content for a consolidated dashboard. The discussion included the visual representation of the content chosen and its placement in either the Main Dashboard (top section of the foam board) or Additional Information display (larger bottom section). Moderators addressed only content and placement choices that were not consistent among all teams and invited each team to provide their rationale so the others could discuss and reevaluate.

Fig. 2.

Cards available to participants.

Results

Participant and Team Characteristics

Participants were seven older adults with HF and two informal caregivers (one male, one female); across all nine participants, five were male and four were female. They were predominantly white ( n = 8), and their average age was 67 years (see Table 1 ). Most were retired, had completed at least some college, and rated their ability to use a computer/the Internet as “average” or “good” ( n = 7).

Table 1. Participant and team characteristics.

| Characteristic | Category | Team 1 | Team 2 | Team 3 | Total |
|---|---|---|---|---|---|
| Participant | Patient | 2 | 2 | 3 | 7 |
| | Informal caregiver | 1 | 1 | 0 | 2 |
| Average age (years) | | 67 | 65 | 69 | 67 |
| Gender | Male | 1 | 1 | 3 | 5 |
| | Female | 2 | 2 | 0 | 4 |
| Race | Caucasian | 3 | 3 | 2 | 8 |
| | No answer | 0 | 0 | 1 | 1 |
| Employment | Retired | 3 | 3 | 1 | 7 |
| | Employed full time | 0 | 0 | 1 | 1 |
| | Unable to work | 0 | 0 | 1 | 1 |
| Education level | Trade/some college | 3 | 2 | 2 | 7 |
| | High school | 0 | 1 | 0 | 1 |
| | No answer | 0 | 0 | 1 | 1 |
| Ability to use computer/Internet | Good | 1 | 2 | 0 | 3 |
| | Average | 2 | 1 | 1 | 4 |
| | Very poor/poor | 0 | 0 | 2 | 2 |
| NVS score | Adequate literacy | 2 | 3 | 2 | 7 |
| | Possibility of limited literacy | 0 | 0 | 0 | 0 |
| | High likelihood of limited literacy | 1 | 0 | 1 | 2 |
| ACE measure | Average commitment | Low | Low | Low | Low |
| | Average informed choice | Medium | Medium | Low | Low |
| | Average navigation | Medium | Medium | Medium | Medium |

Abbreviations: ACE, Altarum Consumer Engagement; NVS, Newest Vital Sign.

As seen in Table 1 , teams were fairly evenly matched with regard to health engagement and health literacy. All three teams scored “low” in Commitment and “medium” in Navigation on the ACE, while Teams 1 and 2 scored “medium” in Informed Choice and Team 3 scored “low,” with the sample scoring “low” in Informed Choice overall. Though two individual participants' NVS scores indicated a high likelihood of limited health literacy, all three teams overall demonstrated “adequate” health literacy. Together, this characterizes a sample that is likely able to interpret simple health data and is moderately comfortable navigating the health care system, but that may struggle with self-care, following a care plan, and actively seeking and acting upon health information.

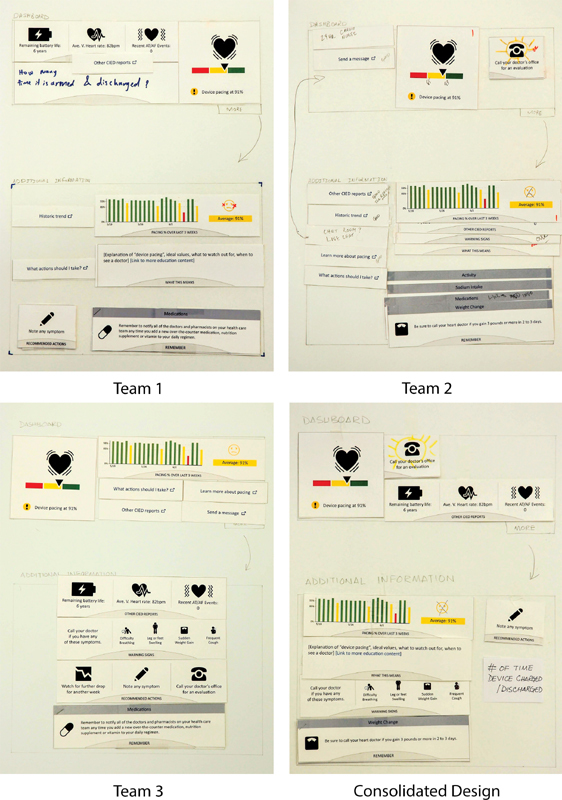

Participatory Design Session

The PD session resulted in three team dashboards and one group consensus dashboard ( Fig. 3 ). Though the dashboards differed in prioritization and customization/creation of cards, the teams were ultimately able to agree on a finalized set of preferences ( Table 2 ). For the Main Dashboard, consensus included: a pacing alert, a button to call their physician with a flashing indicator if the doctor has recommended contact, and device-related reports (including remaining battery life, heart rate, and recorded therapy events). In the Additional Information display, participants agreed to include a daily pacing chart, a journal in which to note symptoms, a log of aborted shocks (charges/discharges), warning signs, an explanation of pacing, and rotating tips. The following sections describe details about team preferences and the consensus process.

Fig. 3.

Consolidated design achieved through consensus from all teams.

Table 2. Content and placement preferences finalized through consolidation.

| Content/feature | Placement | Group agreement |
|---|---|---|
| Pacing alert | Main Dashboard | Unanimous |
| Call doctor (with blinking alert if doctor requests checking in) ᵃ | Main Dashboard | Consensus reached |
| Other CIED reports (including remaining battery life, heart rate, and recorded therapy events) | Main Dashboard | Consensus reached |
| Daily pacing chart | Additional Information display | Consensus reached |
| Note symptom (journal) | Additional Information display | Consensus reached |
| Times device charged/discharged (log of aborted shocks) ᵃ | Additional Information display | Consensus reached |
| Explanation of device pacing | Additional Information display | Consensus reached |
| Warning signs | Additional Information display | Consensus reached |
| Rotating tips | Additional Information display | Unanimous |
| Reference links and link to send a message (to provider) | Additional Information display (not shown on consolidated design board) | Consensus reached |

Abbreviations: CIED, cardiovascular implantable electronic device; PD, participatory design.

ᵃ New ideas suggested by the patients in the PD session.
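For readers who want to carry these preferences into a prototype, the consensus in Table 2 could be encoded as a simple data structure. The sketch below is illustrative only: the item names and placements are transcribed from Table 2, while the variable and function names (`CONSENSUS_ITEMS`, `group_by_placement`) are hypothetical and do not reflect how the study team built its prototype.

```python
from collections import defaultdict

MAIN = "Main Dashboard"
ADDITIONAL = "Additional Information display"

# (content/feature, placement, group agreement), transcribed from Table 2.
CONSENSUS_ITEMS = [
    ("Pacing alert", MAIN, "Unanimous"),
    ("Call doctor", MAIN, "Consensus reached"),
    ("Other CIED reports", MAIN, "Consensus reached"),
    ("Daily pacing chart", ADDITIONAL, "Consensus reached"),
    ("Note symptom (journal)", ADDITIONAL, "Consensus reached"),
    ("Times device charged/discharged", ADDITIONAL, "Consensus reached"),
    ("Explanation of device pacing", ADDITIONAL, "Consensus reached"),
    ("Warning signs", ADDITIONAL, "Consensus reached"),
    ("Rotating tips", ADDITIONAL, "Unanimous"),
    ("Reference links and link to send a message", ADDITIONAL, "Consensus reached"),
]


def group_by_placement(items):
    """Group feature names by the dashboard area in which they are placed."""
    layout = defaultdict(list)
    for name, placement, _agreement in items:
        layout[placement].append(name)
    return dict(layout)


if __name__ == "__main__":
    for placement, names in group_by_placement(CONSENSUS_ITEMS).items():
        print(f"{placement}:")
        for name in names:
            print(f"  - {name}")
```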

Alert for 91% Pacing (Yellow Zone)

All three teams selected the alert for 91% pacing to be displayed in the Main Dashboard with the same graphic (heart icon over red, yellow, and green bars) and the text “Device pacing at 91%.” Team 1 suggested that the colors should be in reverse order (i.e., green on the left instead of red). Team 2 wrote the numbers “85” and “93” on the bar between the color segments.

Detailed Daily Pacing Chart

All three teams preferred the bar chart for percent pacing over the past 3 weeks over the graph with the trend line. Team 3 placed the detailed daily pacing chart on the Main Dashboard, but Teams 1 and 2 placed this in the Additional Information display, with a participant from Team 2 explaining, “To me it was more of a history… Our team wanted to keep on the [main] dashboard what we would use and want to use right away.” Teams 1 and 2 also crossed out the sad face emoji, as participants felt it was too negative.

Related Information Cards

Team 1 placed the “Other CIED reports” card (which showed remaining battery life, heart rate, and a log of recent events) in the Main Dashboard; Teams 2 and 3 placed it in the Additional Information display, with a participant on Team 2 explaining, “We left it at the bottom because we did not want the top [Main Dashboard] to get too busy.” However, all teams agreed to place this card in the Main Dashboard during the group consensus process, as space was available.

Both Teams 2 and 3 selected “Warning signs” (an at-a-glance list of HF symptoms to watch for) for the Additional Information display; however, Team 1 did not select it at all. During the consensus process, Team 1 agreed to place “Warning signs” in the Additional Information display after the group discussed the rationale that it could serve as a reminder to oneself or a caregiver.

Similarly, Teams 2 and 3 included the link to “Send a Message” on their Main Dashboard, while Team 1 did not include it at all. Although it was not physically placed on the consolidated board because the session ended, the teams discussed including this link in the Additional Information display. All three teams also agreed to add reference links to the Additional Information display.

Tips and Education Cards

Although Team 3 was the only team not to include the “Explanation of ‘device pacing’…” card on their board, all teams agreed to include this card in the Additional Information display during the consensus process. As described in the “Methods” section, the educational tips were presented as one item with randomly rotating content in the form of several example content cards: “Sodium Intake,” “Medications,” “Weight Change,” and “Activity.” Team 1 stacked all four cards on top of each other and stapled them, Team 2 staggered the cards like tabs on their board, and Team 3 placed one card on their board to represent a card shown at a given time during the rotation (shown in Fig. 3 ). Importantly, all three teams agreed that the rotating tip cards should be included in the Additional Information display.

Custom and Participant-Generated Cards

Teams 2 and 3 wanted the option to start a call to their doctor from within the Main Dashboard by adding a blinking notification to appear if the doctor requests checking in after reviewing detailed RM data; all teams agreed to this suggestion. Team 1 drew two ideas (“24-hour cardio nurse” and “Chat room / live chat”) on their board. A discussion on these features during consensus concluded that it would be sufficient and perhaps more helpful to know if the doctor recommends communication, with a way to place a phone call to the appropriate clinical team easily.

Team 1 utilized a blank card to add the data “How many times it armed and discharged” to the Main Dashboard section. One participant from the team mentioned she feels a vibration when the device is getting ready to deliver a shock, and that she would like to be able to review this data to confirm this and identify possible reasons based on her actions and schedule. Team 1 folded the recommended actions card so that the “Note Symptoms” section was available. They changed the label “Recommended Actions” to an actionable link where they could enter symptoms in the portal. This feature was included in the group consensus board, based on one participant who noted that a log would help her recall events during her annual follow-up, where she is often asked what she was doing on a past day and is unable to recall.

Group Consensus

A summary of all content and placement preferences finalized through the group consensus process is provided in Table 2 .

Discussion

How do Older Adults with HF and Their Caregivers Prefer to Visualize Key Implantable CRT Device Data, and How do They Prioritize this Information?

The findings suggest that patients make sense of the pacing alert in context of good and bad values, as indicated by the selection of the red, yellow, and green bar over the other graphics choices. Consistent with prior research, 26 30 participants were able to easily make sense of the use of the stoplight metaphor within this health data visualization context. The bar chart was unanimously chosen over the trend line to show longitudinal comparison, possibly because the bar chart more clearly shows data from discrete days. In a prior study designing wellness visualizations among older adults, participants were familiar with and could quickly comprehend data displayed in both bar graphs and line graphs, 34 but also preferred explicit colors rather than gradual shadings, aligning with our participants' preferences for the distinct bar colors. 34

Participants also emphasized the importance of outlook. For example, the “sad” emoji was rejected due to the negative emotion attached. Emotional connotations associated with emojis may vary demographically and are not yet fully understood. 35 Therefore, it is important to understand how an emoji makes target users feel before implementing it in the design of important medical data—visualizations should support a positive outlook, as this affects quality of life in HF patients. 36 Additionally, when possible, numbers (“91% pacing”) may be more helpful than words alone (“below ideal”). This aligns with patients' concerns about existing patient-facing RM reports, which say “essentially normal” instead of providing actual data. 37

Context that helps patients understand what to do with their data is important. Over the course of treatment, patients had experienced different situations that guided their information needs, such as life-saving high-voltage shocks without a warning or shocks in error when not necessary. 26 While findings from both the earlier focus groups and the PD session suggest that content preferences are primarily driven by experiences, patients desire information to understand how their device works in relation to their activities so they may prepare for any intervention. For example, the participants desired to log what they are doing when the device charges and aborts a shock, which could help them better understand their device and heart functioning with regard to day-to-day activities. Participants agreed that a reminder of the warning signs of worsening HF symptoms was helpful in conjunction with the pacing alert in the yellow zone to help them know what to look for. Educational information to support the data, such as an explanation of ideal device pacing values or when to call a doctor, were important information to have accessible in the Additional Information display.

Our participants desired to instantly connect with their providers if needed, as indicated by the link to “Send a Message” (Teams 2 and 3) and the flashing button to call the doctor's office, an original idea from Team 2 that was included as a priority feature after consensus. Facilitating faster clinical response to device issues or cardiac problems indicated by device data is an important outcome of adherence to RM, 2 and further shortening this response time is a proposed benefit of giving patients access to their own data; our participants recognized this potential benefit, as indicated by their choice to include this feature.

Can Participatory Design Elicit Health Data Visualization Preferences of Older Adults and Engage Them in the Design Process?

In this study, we provided participants with materials to build a dashboard in the PD process, rather than starting with preliminary dashboards to work from; typically, user-centered design of patient-facing dashboards involves an initial mock-up of some kind. 38 39 40 We found that the participants, working together in groups, were able to generate a set of dashboards and collaboratively decide on a prototype design without such preliminary prompting. Our study reinforces that including patients and informal caregivers in the creation of an initial set of dashboards may increase the level of user involvement in the design process overall. 18

Consistent with the manner in which prior researchers determined that PD was feasible among older adults, 15 18 we observed that our methodology was generally well-received by our participants. Working in a team with others who have similar backgrounds may generate more dynamic and thoughtful conversations, versus working one-on-one with a professional designer who does not have HF or a CIED. Although all participants were involved in the prior focus groups that informed the design session materials, the hands-on PD process also generated new ideas, beyond those that emerged in the focus groups, suggesting that the method of creating a dashboard may have opened up new considerations. Participating in the focus group prior to this activity also may have accelerated their exploration in this new territory, allowing them to build upon their prior ideas.

Participants did not choose to draw their own graphics and images. It is possible that, due to the novelty of the percent pacing data, participants did not feel confident enough about the subject matter to draw their own representations. During the practice activity (car dashboard), participants freely drew the buttons, gauges, and other information, but in the main PD design activity, participants used the alert cards available and did not draw new visualizations, though they did make suggestions (i.e., adding numbers on the horizontal color scale and reversing the order of the colors left to right).

A potential disadvantage of this method was that, similar to focus groups, there may have been participants (or teams) who were more outspoken than others. For example, the group consensus board has many similarities to Team 1's board, and was very streamlined compared with Team 2's board. This may suggest that Team 2 yielded their ideas to others, and that Team 1 argued for their ideas more persuasively. In group PD, it is possible that some voices may not be heard or included as much as others, and that the consensus may not accurately reflect the preferences of some individual participants.

Implications

The purpose of the PD session was to inform the design of a dashboard in the next phase of the study. These findings were translated into a digital interface mockup to be tested in a series of 10 one-on-one usability testing sessions, leading to a high-fidelity prototype for a pilot trial. Beyond the design features integrated into the interactive dashboard for this future pilot trial, we believe there are valuable findings related to how older adults may visualize complex device data, the type of health information they would like to receive to support their understanding and decision making, and the details of the group PD process, resulting in the following implications:

Implications for health data visualization for older adults:

Simplify complex data by comparing the current value/status to a reference range; include numbers instead of generalized statements.

Provide opportunity for patients to generate contextual information from their lived experiences that helps them and their providers make sense of the data.

Support data with relevant educational and actionable information, such as what symptoms to look for and when to call the doctor.

Include messaging or another way of contacting the provider to ensure patients feel connected, particularly with alerts or potentially worrisome information.

Implications for group PD methods:

PD with others who experience a similar health condition facilitates and stimulates discussion and ideas for optimizing patient-driven visual analytics.

Group PD may result in designs which represent the more outspoken members, and care should be taken to ensure that all members feel heard in the design process.

Older adults may prefer to verbally describe their ideas and share supporting stories and experiences, rather than draw them on paper. A possible team design approach could include gathering ideas from participants while a research team member sketches and incorporates feedback from participants.

Finally, access to a CRT data dashboard within MyChart (or another online PHR) can potentially improve patient experience through a centralized hub of health information. Patient selections of supplementary features such as the option to call a doctor, note symptoms for future reference, and message the clinic attest to the need for integration between various components of health-related data and communication.

Study Limitations

The materials provided to patients were designed by the User Interface Design expert on the research team and reflected findings from the focus groups to inform content needs; thus, possibilities of alternate visualizations and graphics were not exhaustive. Although participants were encouraged to draw their own visualizations, they essentially used the graphics provided. Therefore, our findings are somewhat constrained to what participants chose from the materials available.

PD is generally conducted as an iterative process involving multiple sessions situated to address specific and sequential design goals. 16 41 The PD session presented in this article belongs to a more comprehensive iterative design study and thereby introduces the limitation of lacking full context from conception to validation.

Additionally, this was a relatively short session with only nine individuals total, seven of whom were patients. The sample was overwhelmingly white (n = 8) and at least partially college educated (n = 7), and thus does not necessarily represent viewpoints of patients with different cultural or educational backgrounds, the latter of which may be particularly relevant with regard to interpreting data. Most participants (n = 7) also demonstrated adequate health literacy and self-reported at least average computer and Internet ability; patients with limited health or technology literacy may have differing preferences or capabilities that were not captured. While participants did not report issues with the use of color (i.e., stoplight metaphor) in visualizations, there are many older adults with vision impairments that include difficulty distinguishing between colors; their preferences may differ 12 13 and this viewpoint was not represented in our sample.

The design session was grounded solely on a cautionary alert zone “yellow” (between 85 and 93% pacing), presenting a scenario where health status is less than ideal while no clear action is recommended. Although it would be ideal to have participants design their preferred dashboard for each alert zone, the scenario prompted participants to consider the widest range of content needed to interpret and monitor changes in their health, and thereby maximize the utilization of the dashboard in all possible scenarios.
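As a purely illustrative aid, the stoplight logic behind such an alert zone can be sketched in a few lines of Python. Only the yellow range (85 to 93% pacing) comes from the session scenario described here; treating both bounds as inclusive, labeling values above the range "green" and values below it "red," and the names `classify_pacing` and `PacingStatus` are assumptions made for this sketch and do not describe the actual dashboard implementation. The message follows the participants' preference for showing the number against its reference range rather than a generalized statement.

```python
from dataclasses import dataclass

# From the session scenario: the cautionary "yellow" zone spans 85 to 93% pacing.
# Treating both bounds as inclusive is an assumption of this sketch.
YELLOW_LOW = 85.0
YELLOW_HIGH = 93.0


@dataclass(frozen=True)
class PacingStatus:
    """Hypothetical container for one dashboard reading."""
    percent: float
    zone: str
    message: str


def classify_pacing(percent: float) -> PacingStatus:
    """Map an average percent-pacing value to a stoplight zone."""
    if not 0.0 <= percent <= 100.0:
        raise ValueError("percent pacing must be between 0 and 100")
    if percent > YELLOW_HIGH:
        zone = "green"   # assumption: values above the yellow range
    elif percent >= YELLOW_LOW:
        zone = "yellow"  # range stated in the text
    else:
        zone = "red"     # assumption: values below the yellow range
    # Show the number next to its reference range, not a generalized statement.
    message = (
        f"Average pacing: {percent:.0f}% ({zone} zone; "
        f"yellow zone is {YELLOW_LOW:.0f}-{YELLOW_HIGH:.0f}%)"
    )
    return PacingStatus(percent, zone, message)
```

For example, `classify_pacing(90).zone` evaluates to `"yellow"`, and the accompanying message reports the value alongside the yellow range so that the reader can see where the reading falls.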

Conclusion

Our research demonstrates how PD can be successfully used among older adults with CIEDs to elicit a group consensus on their visualization preferences for complex device data resulting from RM. Using patient preferences for information captured in prior focus group sessions, PD effectively established prioritization and visual presentation needs of older adults with HF as part of a larger iterative user-centered design process. This informed design of a meaningful health dashboard affords the possibility for evaluation of acceptance and impact on health outcome to follow. Patients prioritized RM data points and additional related information, and provided insight as to how they preferred to visualize them (i.e., colors, words, and graphics used). These results constitute important qualitative feedback about a data set to which patients have not previously had access but may stand to benefit from, if it is properly tailored and presented to them. By empowering patients with HF in this way, the locus of control starts to shift from system to patient, supporting a true shared decision-making model that promotes improved clinical and economic outcomes. The PD process used in this research provides a framework for executing design work among similar patient populations (i.e., other older adults or individuals with other implanted devices that generate data).

Clinical Relevance Statement

Given the complexity of implantable cardiac device data from remote monitoring, the results from this participatory design session provide insight into what data points patients would prefer to access and how they would prefer to visualize them. The impact of this patient-centered, participatory research has the potential to inform the design of patient-facing tools that increase engagement in managing heart failure, enhance self-care and medication adherence, and increase adherence to remote monitoring. Importantly, this use of the PD process among older adults with HF to elicit health data visualization preferences provides a framework for executing design work among similar patient populations.

Multiple Choice Questions

1. When presenting data from implanted cardiac devices, patients believe it is important to also include:

a. Reminders of warning signs and symptoms.

b. Sad emojis to indicate a worsening condition.

c. Detailed educational information on the main display screen.

d. No information about battery life or shocks, to avoid creating anxiety.

Correct Answer: The correct answer is option a. The results of this study suggest that patients would like reminders of what symptoms to look for (a), particularly when receiving data from their device indicating their condition may be worsening. The sad emojis (b) were rejected by the participants because of the negative emotion associated with the emojis. Participants appreciated educational information (c), but chose to use the brief rotating tip cards and reference links informed by the prior focus groups and place them in the Additional Information section, citing a desire to keep the main screen limited to high-priority, frequent-use data. Participants also very much desired to know about the battery life and therapeutic activity of their device (d).

2. The team-based approach to participatory design culminated with a consolidation process where the teams reached consensus on a visual display. Which of the following is a concern regarding this process?

a. Participants make up their own content instead of using the content provided for the session.

b. The objectives of the session are too complex for participants to understand.

c. Not all participants' voices may be represented due to the nature of the group dynamics.

d. Participants do not follow the rules for staying within the boundaries of the display.

Correct Answer: The group participatory design session was successful in that teams worked well together, contributed to the process, and understood the rules. Even though they were encouraged to do so, participants did not make up much of their own content and visualizations and deferred to the cards. Participants demonstrated understanding of the process and goals of the session. There were no issues with following the rules (boundaries) as instructed by the session moderators. Therefore, answers a, b, and d are incorrect. During the consolidation process, while the teams reached consensus, there may have been some participants or teams who were more outspoken, and others who agreed to consensus even though their choices were not represented in the final display. Therefore, the correct answer is option c. Not all participants' voices may be represented due to the nature of the group dynamics.

Acknowledgment

We thank the participants for their contribution to this research study.

Funding Statement

This work was supported by Biotronik SE & Co. KG.

Conflict of Interest

T.T. declares the following: Research grants, Medtronic, Inc., Biotronik, Janssen Pharmaceuticals, and iRhythm Technologies, Inc. M.M. declares the following: Compensation for services, Zoll Medical Corporation; Equity interests/stock options—Non-public, Murj Inc./Viscardia; Equity interests/stock options—Public, iRhythm Technologies; Research grants, Medtronic, Inc., Biotronik, Janssen Pharmaceuticals; and Indiana University Trustee. R.A., R.R.G., C.D., R.H., S.W., A.C., and E.M. declare no conflicts of interest. Dr. Mirro reports grants from Biotronik, Inc, during the conduct of the study; grants from Agency for Healthcare Research and Quality (AHRQ), Medtronic plc, Janssen Scientific Affairs, consulting fees/honoraria from iRhythm Technologies, Inc., Zoll Medical Corporation, and nonpublic equity/stock interest in Murj, Inc./Viscardia outside the submitted work. Dr. Mirro's relationships with academia include serving as trustee of Indiana University. Dr. Toscos reports grants from Biotronik, Inc, during the conduct of the study; grants from Medtronic plc, Janssen Scientific Affairs, and iRhythm Technologies, Inc, outside the submitted work.

Protection of Human and Animal Subjects

This research was conducted in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects. All procedures were reviewed and approved by Parkview Health's Institutional Review Board.

References

- 1. Zhan C, Baine W B, Sedrakyan A, Steiner C. Cardiac device implantation in the United States from 1997 through 2004: a population-based analysis. J Gen Intern Med. 2008;23 01:13–19. doi: 10.1007/s11606-007-0392-0.

- 2. Varma N, Piccini J P, Snell J, Fischer A, Dalal N, Mittal S. The relationship between level of adherence to automatic wireless remote monitoring and survival in pacemaker and defibrillator patients. J Am Coll Cardiol. 2015;65(24):2601–2610. doi: 10.1016/j.jacc.2015.04.033.

- 3. Serber E R, Finch N J, Leman R B et al. Disparities in preferences for receiving support and education among patients with implantable cardioverter defibrillators. Pacing Clin Electrophysiol. 2009;32(03):383–390. doi: 10.1111/j.1540-8159.2008.02248.x.

- 4. Daley C N, Chen E M, Roebuck A E et al. Providing patients with implantable cardiac device data through a personal health record: a qualitative study. Appl Clin Inform. 2017;8(04):1106–1116. doi: 10.4338/ACI-2017-06-RA-0090.

- 5. Daley C, Allmandinger A, Heral L. Engagement of ICD patients: direct electronic messaging of remote monitoring data via a personal health record. EP Lab Dig. 2015;15(05):1,6–10.

- 6. Skov M B, Johansen P G, Skov C S, Lauberg A. No news is good news: remote monitoring of implantable cardioverter-defibrillator patients. In: CHI '15 Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. New York: ACM; 2015:827–836.

- 7. Staden A. Patients crusade for access to their medical device data. 2012. Available at: https://www.npr.org/sections/health-shots/2012/05/28/153706099/patients-crusade-for-access-to-their-medical-device-data. Accessed April 15, 2019.

- 8. Daley C, Toscos T, Mirro M. Data integration and interoperability for patient-centered remote monitoring of cardiovascular implantable electronic devices. Bioengineering (Basel). 2019;6(01):25. doi: 10.3390/bioengineering6010025.

- 9. Koplan B A, Kaplan A J, Weiner S, Jones P W, Seth M, Christman S A. Heart failure decompensation and all-cause mortality in relation to percent biventricular pacing in patients with heart failure: is a goal of 100% biventricular pacing necessary? J Am Coll Cardiol. 2009;53(04):355–360. doi: 10.1016/j.jacc.2008.09.043.

- 10. Longo D R, Schubert S L, Wright B A, LeMaster J, Williams C D, Clore J N. Health information seeking, receipt, and use in diabetes self-management. Ann Fam Med. 2010;8(04):334–340. doi: 10.1370/afm.1115.

- 11. Levine M, Richardson J E, Granieri E, Reid M C. Novel telemedicine technologies in geriatric chronic non-cancer pain: primary care providers' perspectives. Pain Med. 2014;15(02):206–213. doi: 10.1111/pme.12323.

- 12. Lorenz A, Oppermann R. Mobile health monitoring for the elderly: designing for diversity. Pervasive Mobile Comput. 2009;5:478–495.

- 13. Salvi S M, Akhtar S, Currie Z. Ageing changes in the eye. Postgrad Med J. 2006;82(971):581–587. doi: 10.1136/pgmj.2005.040857.

- 14. Le T, Chi N C, Chaudhuri S, Thompson H J, Demiris G. Understanding older adult use of data visualizations as a resource for maintaining health and wellness. J Appl Gerontol. 2018;37(07):922–939. doi: 10.1177/0733464816658751.

- 15. Lindsay S, Brittain K, Jackson D, Ladha C, Ladha K, Olivier P. Empathy, participatory design and people with dementia. In: CHI '12 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. New York: ACM; 2012:521–530.

- 16. Spinuzzi C. The methodology of participatory design. Tech Commun (Washington). 2005;52(02):163–174.

- 17. Clemensen J, Larsen S B, Kyng M, Kirkevold M. Participatory design in health sciences: using cooperative experimental methods in developing health services and computer technology. Qual Health Res. 2007;17(01):122–130. doi: 10.1177/1049732306293664.

- 18. Rogers Y, Paay J, Brereton M, Vaisutis K L, Marsden G, Vetere F. Never too old: engaging retired people inventing the future with MaKey MaKey. In: CHI '14 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. New York: ACM; 2014:3913–3922.

- 19. Caban J J, Gotz D. Visual analytics in healthcare--opportunities and research challenges. J Am Med Inform Assoc. 2015;22(02):260–262. doi: 10.1093/jamia/ocv006.

- 20. Huang D, Tory M, Aseniero B A et al. Personal visualization and personal visual analytics. IEEE Trans Vis Comput Graph. 2015;21(03):420–433. doi: 10.1109/TVCG.2014.2359887.

- 21. Mayr E, Smuc M, Risku H. Many roads lead to Rome: mapping users' problem-solving strategies. Inf Vis. 2011;10(03):232–247.

- 22. Woods S S, Evans N C, Frisbee K L. Integrating patient voices into health information for self-care and patient-clinician partnerships: Veterans Affairs design recommendations for patient-generated data applications. J Am Med Inform Assoc. 2016;23(03):491–495. doi: 10.1093/jamia/ocv199.

- 23. Wang T D, Wongsuphasawat K, Plaisant C, Shneiderman B. Extracting insights from electronic health records: case studies, a visual analytics process model, and design recommendations. J Med Syst. 2011;35(05):1135–1152. doi: 10.1007/s10916-011-9718-x.

- 24. Terp M, Laursen B S, Jørgensen R, Mainz J, Bjørnes C D. A room for design: Through participatory design young adults with schizophrenia become strong collaborators. Int J Ment Health Nurs. 2016;25(06):496–506. doi: 10.1111/inm.12231.

- 25. Ghods A, Caffrey K, Lin B et al. 2018; PP(99):1.

- 26. Rohani Ghahari R, Holden R J, Flanagan M et al. Using cardiac implantable electronic device data to facilitate health decision making: a design study. Int J Ind Ergon. 2018;64:143–154.

- 27. Duke C C, Lynch W D, Smith B, Winstanley J. Validity of a new patient engagement measure: the Altarum Consumer Engagement (ACE) Measure™. Patient. 2015;8(06):559–568. doi: 10.1007/s40271-015-0131-2.

- 28. Weiss B D, Mays M Z, Martz W et al. Quick assessment of literacy in primary care: the Newest Vital Sign. Ann Fam Med. 2005;3(06):514–522. doi: 10.1370/afm.405.

- 29. Hwang A S, Truong K N, Mihailidis A. Using participatory design to determine the needs of informal caregivers for smart home user interfaces. In: 2012 6th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth) and Workshops; IEEE, May 21, 2012:41–48.

- 30. Caine K E, Zimmerman C Y, Schall-Zimmerman Z et al. DigiSwitch: design and evaluation of a device of older adults to preserve privacy while monitoring health at home. In: IHI'10–Proceedings of the 1st ACM International Health Informatics Symposium. New York: ACM; 2010:153–162.

- 31. Delbecq A L, Van de Ven A H, Gustafson D H. Group techniques for program planning: A guide to nominal group and Delphi processes. Scott Foresman; 1975:44–69.

- 32. Kendal S E, Milnes L, Welsby H, Pryjmachuk S; Co-Researchers' Group. Prioritizing young people's emotional health support needs via participatory research. J Psychiatr Ment Health Nurs. 2017;24(05):263–271.

- 33. Rayment J, Lanlehin R, McCourt C, Husain S M. Involving seldom-heard groups in a PPI process to inform the design of a proposed trial on the use of probiotics to prevent preterm birth: a case study. Res Involv Engagem. 2017;3(01):11. doi: 10.1186/s40900-017-0061-3.

- 34. Le T, Reeder B, Yoo D, Aziz R, Thompson H J, Demiris G. An evaluation of wellness assessment visualizations for older adults. Telemed J E Health. 2015;21(01):9–15. doi: 10.1089/tmj.2014.0012.

- 35. Brants W, Sharif B, Serebrenik A. Assessing the meaning of emojis for emotional awareness–a pilot study. Paper presented at: 2nd International Workshop on Emoji Understanding and Applications in Social Media Co-located with The Web Conference 2019; May 13–17, 2019; San Francisco.

- 36. Heo S, Lennie T A, Okoli C, Moser D K. Quality of life in patients with heart failure: ask the patients. Heart Lung. 2009;38(02):100–108. doi: 10.1016/j.hrtlng.2008.04.002.

- 37. Mirro M, Daley C, Wagner S, Rohani Ghahari R, Drouin M, Toscos T. Delivering remote monitoring data to patients with implantable cardioverter-defibrillators: does medium matter? Pacing Clin Electrophysiol. 2018;41(11):1526–1535. doi: 10.1111/pace.13505.

- 38. Hartzler A L, Izard J P, Dalkin B L, Mikles S P, Gore J L. Design and feasibility of integrating personalized PRO dashboards into prostate cancer care. J Am Med Inform Assoc. 2016;23(01):38–47. doi: 10.1093/jamia/ocv101.

- 39. Dekker-van Weering M GH, Vollenbroek-Hutten M M. Development of a telemedicine service that enables functional training for stroke patients in the home environment. In: Proceedings of the 3rd 2015 Workshop on ICTs for Improving Patients Rehabilitation Research Techniques. ACM; October 2015:109–112.

- 40. Couture B, Lilley E, Chang F et al. Applying user-centered design methods to the development of an mHealth application for use in the hospital setting by patients and care partners. Appl Clin Inform. 2018;9(02):302–312. doi: 10.1055/s-0038-1645888.

- 41. Reddy A, Lester C A, Stone J A, Holden R J, Phelan C H, Chui M A. Applying participatory design to a pharmacy system intervention. Res Social Adm Pharm. 2018;pii:S1551-7411(17):30866-5. doi: 10.1016/j.sapharm.2018.11.012.