Abstract

How do we maintain visual stability across eye movements? Much work has focused on how visual information is rapidly updated to maintain spatiotopic representations. However, predictive spatial remapping is only part of the story. Here I review key findings, recent debates, and open questions regarding remapping and its implications for visual attention and perception. This review focuses on two key questions: when does remapping occur, and what is the impact on feature perception? Findings are reviewed within the framework of a two-stage, or dual-spotlight, remapping process, where spatial attention must be both updated to the new location (fast, predictive stage) and withdrawn from the previous retinotopic location (slow, post-saccadic stage), with a particular focus on the link between spatial and feature information across eye movements.

Keywords: visual stability, retinotopic trace, spatiotopic, predictive remapping, feature-binding, attentional pointers

1. Introduction

We make rapid, saccadic eye movements several times each second, with the resulting input to our visual system being a series of discrete, eye-centered snapshots. Yet the world does not appear to “jump” with each eye movement. Even though visual input is initially coded relative to the eyes, in “retinotopic” coordinates, we perceive objects in stable world-centered, “spatiotopic” locations. Most theories of visual stability across eye movements involve some sort of updating, or “remapping”, signal that helps align visual input from before and after a saccade [1–13]. Here we review what is currently known – and unknown – about this remapping process and its implications for visual perception.

The goals of this review paper are threefold: First, to offer a brief review of the current state of the literature regarding spatial remapping across saccades. Second, to describe a unified theory of remapping (the dual-spotlight theory), which focuses on the periods both immediately before and after a saccade. Third, to highlight new research on an often-overlooked aspect of remapping: its implications for non-spatial processing, i.e., the perception of visual features and objects.

2.1. Spatial remapping across saccades

Predictive spatial remapping.

A seminal finding that has driven much of the past few decades of research was the discovery that certain visually responsive neurons begin to remap their activity in anticipation of an eye movement [1]. That is, in the 100–200 ms before a saccade is executed, a neuron may respond to a stimulus presented outside its current receptive field if the stimulus falls in the neuron's “future field”: where its receptive field will be after the eye movement, even though the eyes have not yet moved (Fig. 1). Predictive remapping has been found in many visual areas in monkeys [1–4], along with analogous results in human fMRI [5], ERP [6], and behavior [7]. Visual sensitivity and feature-selective tuning are also predictively enhanced at the saccade target [14–16], i.e., the future fovea, but here we primarily focus on the remapping of peripheral locations.

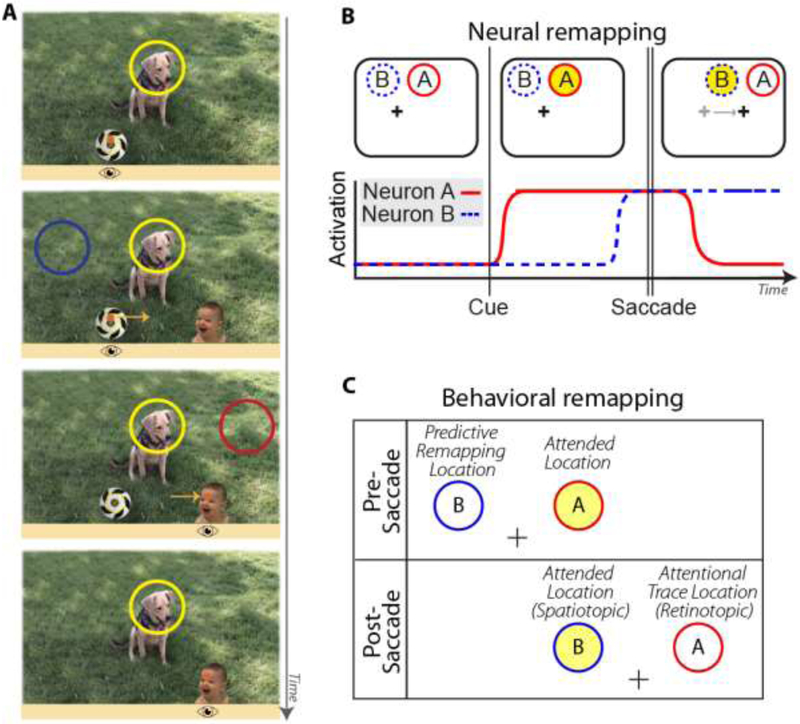

Figure 1. Dual-spotlight model of attentional remapping.

A: Real-world example illustrating sustained covert attention at a spatiotopic, task-relevant location (dog) while saccading from the soccer ball to the baby. The orange dot indicates the current fixation location (horizontal position also shown with a cartoon eye icon below the image); light orange arrows indicate the planned or recently completed saccade trajectory. When the viewer is initially fixating the soccer ball but attending to the dog, the attended location (yellow circle) is in the upper right visual field. Saccading to the baby moves the dog into the upper left visual field: the dog’s spatiotopic position remains stable, but its retinotopic position has changed. From top to bottom, panels show the early pre-saccade, later pre-saccade (predictive remapping period), early post-saccade (retinotopic trace period), and later post-saccade periods. Red and blue circles correspond to other locations that may be attended during remapping, as defined in B and C. B: Hypothetical responses of two visual neurons with different spatial receptive fields. The yellow circle represents the to-be-attended spatiotopic location. Before the saccade, the attended location falls within Neuron A’s receptive field; after the saccade, it falls within Neuron B’s. “Predictive remapping” is when Neuron B begins to respond in anticipation of the saccade. The “retinotopic attentional trace” is when Neuron A continues to respond for a period of time after the eye movement. Thus there is a period of time when both spatiotopic and retinotopic locations are facilitated. C: Corresponding locations for a behavioral study.
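The coordinate bookkeeping behind Fig. 1 and the notion of a “future field” can be sketched with simple vector arithmetic. This is a minimal illustration under stated assumptions, not a model from the literature: positions are 2-D (e.g., degrees of visual angle, x rightward and y upward), retinotopic position is taken to be spatiotopic position minus gaze position, and eye torsion and other real-world complications are ignored. The class `Vec`, the functions `to_retinotopic` and `future_field`, and the scene coordinates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec:
    """Hypothetical 2-D position or displacement (x rightward, y upward)."""
    x: float
    y: float

    def __add__(self, other: "Vec") -> "Vec":
        return Vec(self.x + other.x, self.y + other.y)

    def __sub__(self, other: "Vec") -> "Vec":
        return Vec(self.x - other.x, self.y - other.y)


def to_retinotopic(world_pos: Vec, gaze: Vec) -> Vec:
    """Eye-centered (retinotopic) position of a world-centered (spatiotopic) location."""
    return world_pos - gaze


def future_field(rf_center: Vec, saccade: Vec) -> Vec:
    """Current retinotopic location that the receptive field will cover after the saccade.

    After the eyes move by `saccade`, a world point p sits at retinal position
    p - (gaze + saccade). That equals rf_center exactly when the point currently
    sits at retinal position rf_center + saccade.
    """
    return rf_center + saccade


# Hypothetical world coordinates loosely following Fig. 1A.
ball, baby, dog = Vec(0, 0), Vec(10, 0), Vec(5, 5)
saccade = baby - ball

print(to_retinotopic(dog, gaze=ball))  # Vec(x=5, y=5): upper right visual field
print(to_retinotopic(dog, gaze=baby))  # Vec(x=-5, y=5): upper left visual field

# A "Neuron B" whose receptive field covers the dog's post-saccadic retinal position
# has a future field at the dog's pre-saccadic retinal position.
rf_b = to_retinotopic(dog, gaze=baby)
print(future_field(rf_b, saccade))  # Vec(x=5, y=5)
```

In this toy geometry, predictive remapping corresponds to Neuron B responding to a stimulus at its future field before the eyes move; the retinotopic trace corresponds to Neuron A still responding at its old receptive field after they land.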

A recent source of controversy has been whether this predictive remapping is truly “forward” remapping, in the original sense of receptive fields shifting to the future field, or whether receptive fields instead show “convergent remapping”, shifting toward the saccade target [8, 9]. Other ongoing debates center on whether remapping is best thought of as a process by which receptive fields themselves shift, or whether it instead reflects a shifting of “attentional pointers” [10]; at least one recent study suggests that the remapping of attentional state may be dissociable from spatial receptive field remapping [11].

These important debates (reviewed elsewhere, including [12]) tend to focus on the nature of predictive spatial remapping, but they share the underlying assumption that remapping does occur predictively, and that this predictive updating of visual information helps ensure visual stability once the eyes land. However, some recent evidence has challenged this notion, demonstrating that remapping, at least of spatial attention, may not be as fast or efficient as previously thought [13, 17–25]. A full understanding of visual stability and remapping across saccades therefore needs to take into account the period immediately after eye movements as well.

The post-saccadic retinotopic attentional trace.

In a human behavioral task, Golomb and colleagues had participants sustain spatial attention at a spatiotopic location over a working memory delay and found that attentional facilitation erroneously lingered at the retinotopic location of the cue for a brief time (100–200 ms) after an eye movement before updating to the correct spatiotopic location [13]. This “retinotopic attentional trace” has since been reported across a variety of behavioral tasks [17–20], in fMRI and ERP [21, 22], and in model simulations [23], and is consistent with the idea that visual representations are natively coded in retinotopic coordinates [24] and updated imperfectly [25].

2.2. A unified (pre- and post-saccade) theory of spatial remapping

At first glance, the finding that spatial attention is updated slowly and remains unstable after an eye movement seems to conflict directly with the evidence for predictive remapping. However, the two findings conflict only if we assume that remapping requires an instantaneous switch, i.e., a single spotlight of attention that shifts from one location to the other. Instead, we propose an alternative theory of remapping that accounts for both effects (Fig. 1) and is rooted in our understanding of neural properties. This “dual-spotlight” theory is a conceptual theory of the remapping of attention, as it is primarily based on studies using behavioral tasks with an attentional component. As noted above, it is still an open question whether the remapping of attention reflects a distinct form of remapping [11], and the dual-spotlight theory does not claim that attention is the sole mechanism of remapping. That said, the main implications of this theory, both for unifying pre- and post-saccade findings and for feature perception, are worth considering in the broader context of remapping as well.

Fast arrival, slow departure: A dual-spotlight theory of attentional remapping.

When a saccade triggers the remapping process, attended locations are updated in two ways: a “turning on” of the new location and a “turning off” of the old location. Importantly, these two processes need not occur synchronously: the first may be rapid and the second slower or delayed. As a result, there can be a period of time when attentional facilitation is present at both locations simultaneously. Preliminary behavioral evidence for the dual-spotlight remapping theory comes from demonstrations that predictive remapping and the retinotopic trace can co-exist [19], and that during the few hundred ms following a saccade, facilitation ramps up at the spatiotopic location and ramps down at the retinotopic location, without spreading to intermediate locations [18]. Of course, what appear to be dual spotlights could instead be a single spotlight that updates with variable timing across trials, because the above studies rely on data averaged across trials. However, another set of studies using a different approach revealed a pattern of post-saccade feature-binding errors characteristic of split attention [26, 27; described further in the sections below], providing more compelling evidence for the dual-spotlight account.
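The trial-averaging caveat above can be illustrated with a toy simulation. This is a sketch under assumed, arbitrary parameters, not a fitted model: facilitation at each location is treated as all-or-none, the dual-spotlight trial has separate (hypothetical) “on” and “off” times, and the single-spotlight trial has one switch time that varies across trials. The function names and timing values are hypothetical.

```python
import random

# Time grid in ms relative to saccade onset (hypothetical resolution and range).
TIMES = list(range(-300, 401, 10))


def dual_spotlight_trial(rng):
    """One trial in which 'turning on' and 'turning off' are separate events.

    Hypothetical timing: the spatiotopic location turns on ~100 ms before the
    saccade (predictive); the retinotopic location turns off ~150 ms after it.
    """
    t_on = rng.gauss(-100, 30)
    t_off = rng.gauss(150, 30)
    spatio = [1.0 if t >= t_on else 0.0 for t in TIMES]
    retino = [1.0 if t < t_off else 0.0 for t in TIMES]
    return spatio, retino


def single_spotlight_trial(rng):
    """One trial in which a single spotlight jumps between locations at one moment.

    Hypothetical timing: the switch time varies widely from trial to trial.
    """
    t_switch = rng.uniform(-100, 150)
    spatio = [1.0 if t >= t_switch else 0.0 for t in TIMES]
    retino = [1.0 - s for s in spatio]  # attention is at exactly one location
    return spatio, retino


def simulate(trial_fn, n_trials=500, seed=0):
    """Return trial-averaged facilitation at each location, plus within-trial overlap."""
    rng = random.Random(seed)
    trials = [trial_fn(rng) for _ in range(n_trials)]
    mean_spatio = [sum(tr[0][i] for tr in trials) / n_trials for i in range(len(TIMES))]
    mean_retino = [sum(tr[1][i] for tr in trials) / n_trials for i in range(len(TIMES))]
    # Fraction of (trial, time) samples in which BOTH locations are facilitated at once.
    both_on = sum(s * r for tr in trials for s, r in zip(*tr)) / (n_trials * len(TIMES))
    return mean_spatio, mean_retino, both_on


i50 = TIMES.index(50)  # 50 ms after saccade onset
for name, fn in [("dual", dual_spotlight_trial), ("single", single_spotlight_trial)]:
    mean_spatio, mean_retino, both_on = simulate(fn)
    print(f"{name}: spatiotopic={mean_spatio[i50]:.2f} "
          f"retinotopic={mean_retino[i50]:.2f} within-trial overlap={both_on:.3f}")
```

In both cases the trial-averaged curves show facilitation at both locations shortly after the saccade; only the within-trial overlap separates the two accounts, and that quantity is not directly visible in averaged timing data. This is why a measure sensitive to single-trial split attention, such as the feature-binding errors discussed below, is informative.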

Moreover, the spatiotemporal dynamics of a two-stage or dual-spotlight process are consistent with known neural mechanisms. Neural investigations of covert shifts of attention (with the eyes fixated) have demonstrated that neurons in early visual cortex begin to facilitate the new location before disengaging from the previously attended location [28], and a similar pattern has been found across a saccade, where a new neuron whose receptive field is brought into the attended location ramps up before a previously active neuron ramps down [11]. Visual stability across saccades is also thought to be supported by two distinct sources of feedback with differing time scales: a rapid, predictive corollary discharge signal, and an oculomotor proprioceptive signal that stabilizes more slowly after a saccade [29]. Indeed, a recent computational model of remapping incorporating both of these signals naturally accounts for both the pre-saccadic predictive remapping and post-saccadic retinotopic trace effects [30].

While a number of open questions remain about the precise spatio-temporal dynamics and mechanisms underlying this theory (Box 1), what seems clear is that attentional remapping is not simply a single updating process with a variable time delay, but that the “turning on” and “turning off” processes are at least somewhat separable, which can result in a dynamic period where spatial attention is temporarily at both locations. In the next section, we explore the broader perceptual implications of such a process.

Box 1: Open questions about the dual-spotlight theory.

The dual-spotlight theory posits that spatial attention needs to be both updated to the new location (fast, predictive stage) and withdrawn from the previous retinotopic location (slow, post-saccadic stage) with each eye movement. Many open questions remain, and a number of them center on one issue: are the updating and withdrawing two independent processes, or two components of a single process? While the findings discussed above seem largely to rule out the possibility that remapping is a single-spotlight updating process that is simply variable in its timing, the degree of independence between the two components remains to be seen. One possibility is that the predictive component and the retinotopic trace component are automatically triggered together by the same updating signal(s), but the processes themselves overlap in time; this explanation seems favored by at least one computational model [30]. At the other extreme, the two processes could be completely independent mechanisms that vary independently over time and are independently susceptible to individual, trial-by-trial, and/or task context differences. Intermediate possibilities include the idea that predictive spatiotopic remapping may be a task-dependent, active process, whereas the retinotopic trace is more of an automatic, passive decay of neural activity. This account was favored by the initial retinotopic trace findings, in which early spatiotopic facilitation was variable across experiments and found primarily when the spatiotopic location was task-relevant, whereas the retinotopic trace was found regardless of task demands [13, 17, see also 62]. However, other recent papers have failed to find early retinotopic effects in certain tasks [63], and the effect seems particularly controversial for inhibition of return [20, 64, 65].
Yao and colleagues likewise found no post-saccadic performance costs from interference by a retinotopic trace distractor; however, they also found no pre-saccadic interference from distractors at the predictive remapping location, and concluded that attention can update very rapidly, at least in certain situations [66]. Notably, their saccades were highly predictable in both space and time. A recent study also found that in monkey area V4, the remapping of attention occurred in a manner somewhat consistent with the dual-spotlight theory, with new neurons becoming active before the old neurons disengaged, though this entire “attentional handoff” occurred before the saccade in their task [11]. Thus, the efficiency of remapping (for one or both stages) may depend on factors such as cognitive control, motivation, reward, predictable context, and/or attentional demands.

2.3. Implications for non-spatial processing: feature and object perception

Saccadic remapping is by definition a spatial problem; the shifts induced by eye movements change the spatial perspective of the viewer, causing a mismatch between pre- and post-saccadic retinotopic positions. But in the real world, our visual systems don’t just process spatial information in isolation; visual scenes are full of rich feature and object information. What happens to these non-spatial representations during spatial remapping induced by saccades?

Are object features also remapped?

One of the greatest unresolved debates in the remapping literature is whether information about an object’s features and identity is remapped alongside the spatial signal, or whether remapping is purely a spatial process, such that feature and object information must be re-processed and re-bound to an updated spatial pointer after remapping [e.g., 10]. In the perceptual literature, spatiotopic transfer of visual aftereffects was initially touted as strong evidence for predictive remapping of features [31], then contested [32–34]. However, more recent studies have again found support for spatiotopic aftereffects [35, 36], including evidence that they build up over time [37]. Evidence for spatiotopic transsaccadic feature integration has similarly been mixed [38–45]. Other behavioral studies have examined whether an object presented prior to an eye movement preserves its integrity across the saccade [46, 47]. Interestingly, a common source of discrepancy across all of these behavioral studies is whether effects are found in retinotopic or spatiotopic coordinates after a saccade [12]. Given that the dual-spotlight theory predicts spatial pointers temporarily coexisting at both locations, if features are indeed remapped, perhaps this discrepancy is not so unexpected after all.

Neurally, evidence for feature remapping has been ambiguous as well. A recent fMRI adaptation study used an approach similar to the behavioral tilt adaptation studies noted above, finding evidence for both retinotopic and feature-specific spatiotopic adaptation after a saccade [48]. Using multivariate pattern analysis of fMRI data, Lescroart, Kanwisher, and Golomb [49] tested more directly whether stimulus category information could be decoded from remapped responses and failed to find evidence of automatic remapping of stimulus content, though this study also raises doubts about the type of remapping detectable with fMRI [5] and whether it is the same as the predictive remapping signal in neurons (or in behavior, for that matter). Intriguingly, an EEG study applying a similar approach did find evidence for remapping of stimulus content [50], though it tested peripheral-to-foveal remapping, which might involve special processing [51, 52]. Although neurophysiology could potentially provide the most direct evidence, this question has remained largely untested with this methodology. Two recent studies that did address it reached opposite conclusions: Yao and colleagues found that remapped memory trace responses in MT do not contain information about motion direction [53], whereas Subramanian and Colby reported a small fraction of LIP neurons with properties that could be consistent with feature-selective remapping [54], although these latter results were neither robust nor fully consistent with true feature remapping.

Is feature and object perception affected by a saccade?

A related – and historically less investigated – question is whether feature perception and object recognition processes themselves are fundamentally affected by eye movements. It has long been known that people are susceptible to perceptual errors such as spatial mislocalization and saccadic suppression around the time of a saccade [reviewed in 55]. Visual memory across saccades may also be impaired [56], though the costs may be less severe in native retinotopic coordinates [25, 57].

Such costs may be expected consequences of unstable spatial representations during the remapping process. But spatial attention also plays another fundamental role in visual perception, linking information about features and objects in the world, so theories of remapping should consider the consequences for feature and object perception as well. In the case of the dual-spotlight remapping theory, the critical postulate is that during dynamic remapping, attention can simultaneously highlight two different spatial locations. If true, this divided attention may carry interesting perceptual consequences beyond spatial cognition.

Spatial remapping and the feature-binding problem.

A principle long touted in the field of visual object perception is that spatial attention is critical for knowing “what” is “where” and for solving the famous Binding Problem [58]. Many theories of object perception center on the idea that object files are defined by location and that spatial attention acts as the “glue” that binds an object’s features together [59]. If spatial attention is critical for feature-binding, but spatial attention is remapped across eye movements in a two-stage or dual-spotlight process, this raises the question: what happens to feature-binding and integrated object perception during dynamic remapping?

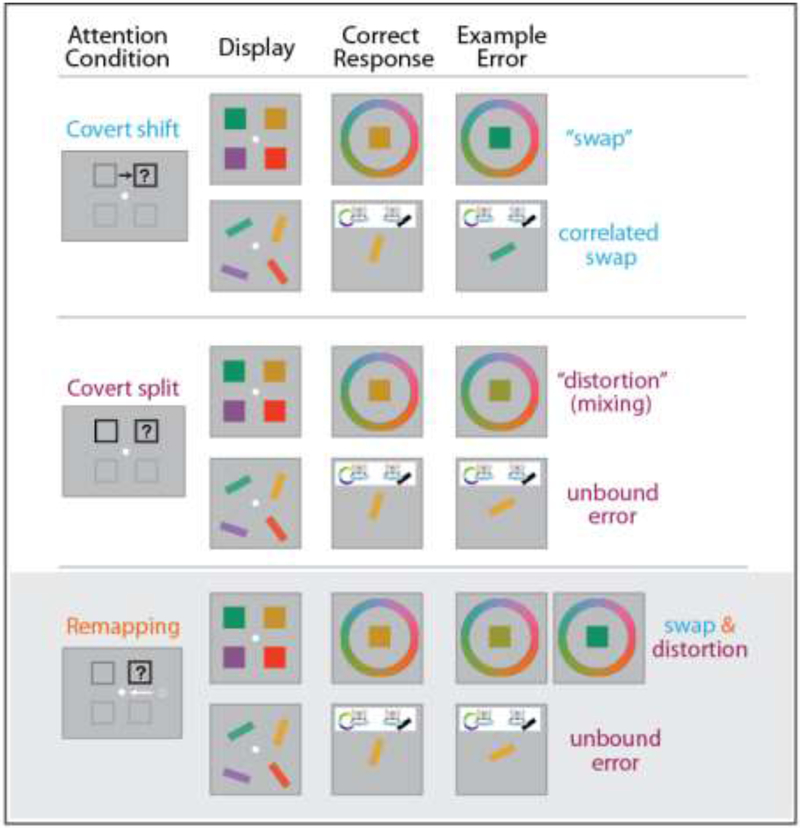

A recent study from our group [27] used a continuous-report paradigm to test feature perception following an eye movement. When subjects were supposed to report the color of a briefly presented spatiotopic target, the color they actually reported was systematically shifted in color space toward the color of a simultaneously presented distractor occupying the retinotopic location of the cue. Probabilistic mixture modeling revealed that these perceptual errors consisted of both crude “swapping” errors (reporting the retinotopic color instead of the spatiotopic color) and subtler feature mixing (as if the retinotopic color had blended into the spatiotopic percept). Szinte et al. subsequently showed a similar perceptual integration, for motion, between spatiotopic and future retinotopic locations during the pre-saccadic period [60]. More recently, Dowd & Golomb extended this paradigm to multi-feature objects, using a joint continuous-report task (i.e., reproducing both the color and orientation of a target) and joint probabilistic modeling to assess object integrity [61]. Attentional updating after an eye movement increased object-feature binding errors, including illusory conjunctions, e.g., mis-binding the color of the spatiotopic target item with the orientation of the simultaneously presented retinotopic distractor item [26].
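To make the distinction between “swapping” and “mixing” concrete, the sketch below writes down one possible likelihood for a single continuous color report, in the spirit of standard target/swap/guess mixture models for continuous report. It is a minimal illustration under our own assumptions, not necessarily the model used in [27]: colors are angles in radians, each response component is a von Mises (circular normal) distribution, and “mixing” is parameterized, hypothetically, as a shift of the target component’s mean a fraction `shift` of the way toward the distractor color. All function and parameter names are hypothetical.

```python
import math
import random


def bessel_i0(x, terms=60):
    """Modified Bessel function of the first kind, order 0 (power series; fine for moderate x)."""
    return sum(((x / 2) ** (2 * k)) / math.factorial(k) ** 2 for k in range(terms))


def von_mises_pdf(x, mu, kappa):
    """Circular normal density with mean mu and concentration kappa."""
    return math.exp(kappa * math.cos(x - mu)) / (2 * math.pi * bessel_i0(kappa))


def wrap(a):
    """Wrap an angle to [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def report_likelihood(report, target, distractor, p_swap, p_guess, shift, kappa):
    """Likelihood of one report under a target / swap / guess mixture (hypothetical form).

    shift in [0, 1] moves the target component's mean toward the distractor color
    (feature mixing); p_swap is the probability of reporting the distractor itself.
    """
    p_target = 1.0 - p_swap - p_guess
    mixed_mean = target + shift * wrap(distractor - target)
    return (p_target * von_mises_pdf(report, mixed_mean, kappa)
            + p_swap * von_mises_pdf(report, distractor, kappa)
            + p_guess / (2 * math.pi))


def neg_log_likelihood(data, **params):
    """Sum of -log likelihood over (report, target, distractor) triples."""
    return -sum(math.log(report_likelihood(r, t, d, **params)) for r, t, d in data)


# Simulated (not real) data in which reports blend 30% toward the distractor.
rng = random.Random(1)
data = []
for _ in range(300):
    target = rng.uniform(-math.pi, math.pi)
    distractor = wrap(target + 1.5)  # hypothetical fixed color-space separation
    true_mean = target + 0.3 * wrap(distractor - target)
    report = rng.vonmisesvariate(true_mean % (2 * math.pi), 8.0)
    data.append((report, target, distractor))

common = dict(p_swap=0.0, p_guess=0.01, kappa=8.0)
print("NLL, no mixing: ", round(neg_log_likelihood(data, shift=0.0, **common), 1))
print("NLL, 30% mixing:", round(neg_log_likelihood(data, shift=0.3, **common), 1))
```

In practice the parameters would be estimated jointly, for example by minimizing `neg_log_likelihood` with a general-purpose optimizer such as `scipy.optimize.minimize`; the two fixed settings above only show that a mixing term is needed to describe blended reports, which a pure swap model cannot capture.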

Importantly, additional comparison experiments testing dynamic spatial attention outside the context of eye movements [27, 61] demonstrated that both within-feature mixing errors and multi-feature breakdowns of object binding are specifically associated with situations in which covert attention is simultaneously split across two different locations (Fig. 2). This provides strong evidence that remapping involves a transient splitting of attention, as predicted by the dual-spotlight theory. An alternative, single-spotlight account of remapping would predict that one focus of spatial attention shifts from one location to another, such that at any given moment attention is either at the updated (spatiotopic) location or stuck at the initial (retinotopic trace) location. On this account, the data would reflect a mixture of trials in which attention had successfully remapped and trials in which it remained at the previous retinotopic location, which should induce a different pattern of feature-binding errors [27, 61]. The fact that the feature-binding errors found immediately after a saccade instead resemble those found during split attention suggests that both the remapped spatiotopic location and the lingering retinotopic trace location are simultaneously selected by spatial attention during this period, at least on some trials. Moreover, this transient splitting of spatial attention affects not just spatial processing but can have striking consequences for feature and object perception, such that features from two different spatial locations may be temporarily mixed together. The practical consequences of these challenges for real-world perception remain to be seen (Box 2).
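The object-integrity logic of this comparison can be illustrated with a toy simulation. The assumption (ours, for illustration only) is that under a single spotlight both features of a joint report are read out from whichever one location is attended on that trial, whereas under split attention each feature is sampled independently from either location. The probabilities and function names are hypothetical, and the sketch ignores within-feature blending; it is not the joint mixture model of [61].

```python
import random
from collections import Counter


def classify(color_src, orient_src):
    """Label a joint (color, orientation) report by which item each feature came from."""
    if color_src == orient_src == "target":
        return "correct"
    if color_src == orient_src == "distractor":
        return "whole-object swap"
    return "illusory conjunction"


def single_spotlight(rng, p_remapped=0.7):
    # Hypothetical: attention is at ONE location per trial, so both features
    # come from the same item (correct report or whole-object swap).
    src = "target" if rng.random() < p_remapped else "distractor"
    return classify(src, src)


def split_attention(rng, p_target=0.7):
    # Hypothetical: attention is divided, so each feature is drawn from either
    # item independently, which can mis-bind features across items.
    color_src = "target" if rng.random() < p_target else "distractor"
    orient_src = "target" if rng.random() < p_target else "distractor"
    return classify(color_src, orient_src)


def error_profile(model, n_trials=10_000, seed=0):
    """Proportion of each report type over simulated trials."""
    rng = random.Random(seed)
    counts = Counter(model(rng) for _ in range(n_trials))
    return {k: counts[k] / n_trials
            for k in ("correct", "whole-object swap", "illusory conjunction")}


for name, model in [("single spotlight", single_spotlight), ("split attention", split_attention)]:
    print(name, error_profile(model))
```

Under these assumptions the single-spotlight model never produces illusory conjunctions, however its trial mixture is weighted, whereas split attention produces them at a rate of roughly 2 × 0.7 × 0.3 ≈ 0.42 here. A clear excess of illusory conjunctions is therefore a signature of split attention rather than of a variable single spotlight.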

Figure 2. Implications of dynamic spatial attention on feature perception and object integrity.

Cartoon depicting simplified tasks and results from [27, 26, 61]. Top: When attention must be covertly shifted from one location to another (dark gray to black box), and the stimulus array is presented 50ms after the shift cue, subjects either report the correct features or misreport (swap) the features of the distractor at the initially attended location, but object integrity is preserved. Middle: When covert attention is simultaneously split across two different locations and subjects are post-cued to report one of them, feature reports may be distorted (e.g. blend of both colors), and object integrity is degraded (e.g. reporting color of one item and orientation of the other). Bottom: When covert attention must be maintained at a spatiotopic location (black) while executing an eye movement (white arrow) elsewhere, and the stimulus array is presented 50ms after the eye movement, interference is seen from the distractor at the retinotopic trace location (dark gray). Errors here are consistent with those seen during splitting of attention, suggesting spatial attention is temporarily highlighting both the spatiotopic and retinotopic trace locations after a saccade.

Box 2: Real-world implications.

If the visual system is susceptible to all sorts of perceptual errors around the time of an eye movement, what are the consequences for real-world vision? And why do we still perceive the world as stable? Our visual systems seem to solve the visual stability problem so seamlessly that most non-vision scientists aren’t even aware that we make multiple eye movements every second. One possibility is that remapping is but one of several mechanisms supporting visual stability across saccades, alongside retinal cues (e.g., stable visual landmarks and object correspondence, including the saccade target itself [67–70]) and top-down default assumptions or expectations about stability [71, 72]. Also, in the real world, visual objects of interest tend to remain intact as we saccade to fixate them directly, so predictive remapping of features and intact object integrity may be less crucial if we can simply re-process the object with the high-resolution fovea upon landing. Perhaps, then, it is no coincidence that natural saccades typically leave a few hundred milliseconds to re-stabilize before the eyes move again, enough time for even the post-saccadic stage of remapping to be comfortably completed.

3. Conclusions

When predictive spatial remapping was first discovered [1], it spawned an exciting field of study built on the premise that remapping might help solve the fundamental challenge of visual stability across saccades. However, although much neurophysiological, behavioral, and neuroimaging work has followed over the decades, many fundamental questions and debates remain. This brief review focused on two timely issues related to spatial and feature remapping: (1) a view of remapping based solely on the predictive component is limited, which motivates the more unified and comprehensive dual-spotlight theory of remapping; and (2) a true understanding of visual stability, or instability, across saccades needs to account for non-spatial processing as well.

Acknowledgements

Thank you to Xiaoli Zhang for helpful comments on the manuscript, Christopher Jones for the dual-spotlight name suggestion, and Emma Wu Dowd, Anna Shafer-Skelton, Jiageng Chen, Mark Lescroart, Nancy Kanwisher, James Mazer, Marvin Chun, and Andrew Leber for influential discussion.

Funding

This work was supported by grants from the National Institutes of Health (R01-EY025648) and the Alfred P. Sloan Foundation.

Footnotes


The author declares no conflict of interest.

References

- 1.Duhamel JR, Colby CL, & Goldberg ME (1992). The updating of the representation of visual space in parietal cortex by intended eye movements. Science, 255(5040), 90–2. [DOI] [PubMed] [Google Scholar]

- 2.Kusunoki M, & Goldberg ME (2003). The time course of perisaccadic receptive field shifts in the lateral intraparietal area of the monkey. J Neurophysiol, 89(3), 1519–27. [DOI] [PubMed] [Google Scholar]

- 3.Nakamura K, & Colby CL (2002). Updating of the visual representation in monkey striate and extrastriate cortex during saccades. Proc Natl Acad Sci U S A, 99(6), 4026–31. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Sommer MA, & Wurtz RH (2006). Influence of the thalamus on spatial visual processing in frontal cortex. Nature, 444(7117), 374–7. [DOI] [PubMed] [Google Scholar]

- 5.Merriam EP, Genovese CR, & Colby CL (2007). Remapping in human visual cortex. J Neurophysiol, 97(2), 1738–55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Parks NA, & Corballis PM (2008). Electrophysiological correlates of presaccadic remapping in humans. Psychophysiology, 45(5), 776–83. doi: 10.1111/j.1469-8986.2008.00669.x [DOI] [PubMed] [Google Scholar]

- 7.Rolfs M, Jonikaitis D, Deubel H, & Cavanagh P (2011). Predictive remapping of attention across eye movements. Nat Neurosci, 14(2), 252–6. doi: 10.1038/nn.2711 [DOI] [PubMed] [Google Scholar]

- 8.Neupane S, Guitton D, & Pack CC (2016). Two distinct types of remapping in primate cortical area V4. Nature Communications, 7, 10402. doi: 10.1038/ncomms10402 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Zirnsak M, Steinmetz NA, Noudoost B, Xu KZ, & Moore T (2014). Visual space is compressed in prefrontal cortex before eye movements. Nature, 507(7493), 504–507. doi: 10.1038/nature13149 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Cavanagh P, Hunt AR, Afraz A, & Rolfs M (2010). Visual stability based on remapping of attention pointers. Trends Cogn Sci, 14(4), 147–53. doi: 10.1016/j.tics.2010.01.007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Marino AC, & Mazer JA (2018). Saccades Trigger Predictive Updating of Attentional Topography in Area V4. Neuron, 98(2), 429–438.e4. doi: 10.1016/j.neuron.2018.03.020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Marino AC, & Mazer JA (2016). Perisaccadic Updating of Visual Representations and Attentional States: Linking Behavior and Neurophysiology. Frontiers in Systems Neuroscience, 10. doi: 10.3389/fnsys.2016.00003 [DOI] [PMC free article] [PubMed] [Google Scholar]; ** This paper provides a thorough and detailed review of several issues related to visual stability, including detailed descriptions (and comparison tables) of controversial findings in both neural and behavioral literatures.

- 13.Golomb JD, Chun MM, & Mazer JA (2008). The native coordinate system of spatial attention is retinotopic. J Neurosci, 28(42), 10654–62. doi: 10.1523/JNEUROSCI.2525-08.2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Rolfs M, & Carrasco M (2012). Rapid Simultaneous Enhancement of Visual Sensitivity and Perceived Contrast during Saccade Preparation. The Journal of Neuroscience, 32(40), 13744–13752a. doi: 10.1523/JNEUROSCI.2676-12.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Li H-H, Barbot A, & Carrasco M (2016). Saccade Preparation Reshapes Sensory Tuning. Current Biology, 26(12), 1564–1570. doi: 10.1016/j.cub.2016.04.028 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Ohl S, Kuper C, & Rolfs M (2017). Selective enhancement of orientation tuning before saccades. Journal of Vision, 17(13), 2. doi: 10.1167/17.13.2 [DOI] [PubMed] [Google Scholar]

- 17.Golomb JD, Pulido VZ, Albrecht AR, Chun MM, & Mazer JA (2010). Robustness of the retinotopic attentional trace after eye movements. J Vis, 10(3), 19.1–12. doi: 10.1167/10.3.19 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Golomb JD, Marino AC, Chun MM, & Mazer JA (2011). Attention doesn’t slide: spatiotopic updating after eye movements instantiates a new, discrete attentional locus. Atten Percept Psychophys, 73(1), 7–14. doi: 10.3758/s13414-010-0016-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Jonikaitis D, Szinte M, Rolfs M, & Cavanagh P (2013). Allocation of attention across saccades. Journal of Neurophysiology, 109(5), 1425–1434. doi: 10.1152/jn.00656.2012 [DOI] [PubMed] [Google Scholar]

- 20.Mathôt S, & Theeuwes J (2010). Gradual remapping results in early retinotopic and late spatiotopic inhibition of return. Psychological Science, 21(12), 1793–1798. [DOI] [PubMed] [Google Scholar]

- 21.Golomb JD, Nguyen-Phuc AY, Mazer JA, McCarthy G, & Chun MM (2010). Attentional facilitation throughout human visual cortex lingers in retinotopic coordinates after eye movements. J Neurosci, 30(31), 10493–506. doi: 10.1523/JNEUROSCI.1546-10.2010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Talsma D, White BJ, Mathôt S, Munoz DP, & Theeuwes J (2013). A Retinotopic Attentional Trace after Saccadic Eye Movements: Evidence from Event-related Potentials. Journal of Cognitive Neuroscience, 25(9), 1563–1577. doi: 10.1162/jocn_a_00390 [DOI] [PubMed] [Google Scholar]

- 23.Casarotti M, Lisi M, Umiltà C, & Zorzi M (2012). Paying attention through eye movements: a computational investigation of the premotor theory of spatial attention. Journal of cognitive neuroscience, 24(7), 1519–1531. [DOI] [PubMed] [Google Scholar]

- 24.Golomb JD, & Kanwisher N (2012). Higher level visual cortex represents retinotopic, not spatiotopic, object location. Cerebral Cortex, 22(12), 2794–2810.

- 25.Golomb JD, & Kanwisher N (2012). Retinotopic memory is more precise than spatiotopic memory. Proc Natl Acad Sci U S A, Online Early Edition.

- 26.Dowd EW, & Golomb JD (under review). The Binding Problem after an eye movement; ** This paper builds on previous work demonstrating feature distortions following an eye movement (Golomb et al. 2014 [27]), but takes this research a critical step forward by examining how multiple features are integrated into objects. Attentional updating immediately after an eye movement degraded object integrity and produced more independent errors, including illusory conjunctions, in which one feature of the item at the spatiotopic target location was mis-bound with the other feature of the item at the initial retinotopic location.

- 27.Golomb JD, L’Heureux ZE, & Kanwisher N (2014). Feature-Binding Errors After Eye Movements and Shifts of Attention. Psychological Science, 25(5), 1067–1078. doi: 10.1177/0956797614522068

- 28.Khayat PS, Spekreijse H, & Roelfsema PR (2006). Attention lights up new object representations before the old ones fade away. J Neurosci, 26(1), 138–142.

- 29.Sun LD, & Goldberg ME (2016). Corollary Discharge and Oculomotor Proprioception: Cortical Mechanisms for Spatially Accurate Vision. Annual Review of Vision Science, 2(1), 61–84. doi: 10.1146/annurev-vision-082114-035407; * An accessible review of some of the different mechanisms supporting remapping and visual stability. Of particular interest for highlighting the different time scales of corollary discharge versus oculomotor proprioceptive signals.

- 30.Bergelt J, & Hamker FH (2018). Spatial updating of attention across eye movements: A neuro-computational approach. bioRxiv, 440727. doi: 10.1101/440727; ** This paper presents a computational model that naturally accounts for both the pre-saccadic predictive remapping and post-saccadic retinotopic trace effects, offering a mechanistic explanation of the dual-spotlight model.

- 31.Melcher D (2007). Predictive remapping of visual features precedes saccadic eye movements. Nat Neurosci, 10(7), 903–907.

- 32.Knapen T, Rolfs M, Wexler M, & Cavanagh P (2010). The reference frame of the tilt aftereffect. Journal of Vision, 10(1), 8. Retrieved from http://www.journalofvision.org/content/10/1/8.short

- 33.Mathôt S, & Theeuwes J (2013). A reinvestigation of the reference frame of the tilt-adaptation aftereffect. Scientific Reports, 3, 1152. doi: 10.1038/srep01152

- 34.Wenderoth P, & Wiese M (2008). Retinotopic encoding of the direction aftereffect. Vision Research, 48(19), 1949–1954.

- 35.He D, Mo C, & Fang F (2017). Predictive feature remapping before saccadic eye movements. Journal of Vision, 17(5), 14. doi: 10.1167/17.5.14

- 36.Wolfe BA, & Whitney D (2015). Saccadic remapping of object-selective information. Attention, Perception, & Psychophysics, 77(7), 2260–2269. doi: 10.3758/s13414-015-0944-z

- 37.Zimmermann E, Morrone MC, Fink GR, & Burr D (2013). Spatiotopic neural representations develop slowly across saccades. Current Biology, 23(5), R193–R194. doi: 10.1016/j.cub.2013.01.065

- 38.Fabius JH, Fracasso A, & Van der Stigchel S (2016). Spatiotopic updating facilitates perception immediately after saccades. Scientific Reports, 6, 34488. doi: 10.1038/srep34488

- 39.Hayhoe M, Lachter J, & Feldman J (1991). Integration of form across saccadic eye movements. Perception, 20(3), 393–402.

- 40.Melcher D, & Morrone MC (2003). Spatiotopic temporal integration of visual motion across saccadic eye movements. Nat Neurosci, 6(8), 877–881.

- 41.Oostwoud Wijdenes L, Marshall L, & Bays PM (2015). Evidence for Optimal Integration of Visual Feature Representations across Saccades. Journal of Neuroscience, 35(28), 10146–10153. doi: 10.1523/JNEUROSCI.1040-15.2015; * This study probed transsaccadic working memory for color by presenting arrays of colors before and after a saccade and asking participants to report the color at a spatiotopic position. Reported colors were a weighted average of the pre- and post-saccadic values, with the weights varying with item location and sensory uncertainty, suggesting a form of optimal integration. Interestingly, the authors found integration only in spatiotopic (not retinotopic) coordinates. However, distance in color space was not equated across these comparisons: the colors in the post-saccadic arrays were much closer to the pre-saccadic colors at the corresponding spatiotopic location than to those at the retinotopic location (though this arrangement is also more reflective of real-world settings).

- 42.Harrison WJ, & Bex PJ (2014). Integrating Retinotopic Features in Spatiotopic Coordinates. The Journal of Neuroscience, 34(21), 7351–7360. doi: 10.1523/JNEUROSCI.5252-13.2014

- 43.Morris AP, Liu CC, Cropper SJ, Forte JD, Krekelberg B, & Mattingley JB (2010). Summation of Visual Motion across Eye Movements Reflects a Nonspatial Decision Mechanism. Journal of Neuroscience, 30(29), 9821–9830. doi: 10.1523/JNEUROSCI.1705-10.2010

- 44.Irwin DE, Yantis S, & Jonides J (1983). Evidence against visual integration across saccadic eye movements. Percept Psychophys, 34(1), 49–57.

- 45.Paeye C, Collins T, & Cavanagh P (2017). Transsaccadic perceptual fusion. Journal of Vision, 17(1), 14. doi: 10.1167/17.1.14; * This study revisited the classic question of transsaccadic perceptual fusion, which has historically been fraught with methodological and interpretation issues (e.g. screen phosphor persistence, memory vs perception accounts). The authors found new evidence for pre- and post-saccadic fusion similar to that seen at fixation, suggesting that some spatiotopic content may be carried over across saccades.

- 46.Shafer-Skelton A, Kupitz CN, & Golomb JD (2017). Object-location binding across a saccade: A retinotopic spatial congruency bias. Attention, Perception, & Psychophysics, 79(3), 765–781. doi: 10.3758/s13414-016-1263-8; * This behavioral study failed to find evidence of automatic remapping of feature information. Object-location binding was preserved exclusively in retinotopic coordinates after saccades – even after longer delays and for more complex visual stimuli – suggesting instead that feature-location binding may need to be re-established following each saccade.

- 47.Van der Stigchel S, & Hollingworth A (2018). Visuospatial Working Memory as a Fundamental Component of the Eye Movement System. Current Directions in Psychological Science, 27(2), 136–143. doi: 10.1177/0963721417741710

- 48.Zimmermann E, Weidner R, Abdollahi RO, & Fink GR (2016). Spatiotopic Adaptation in Visual Areas. Journal of Neuroscience, 36(37), 9526–9534. doi: 10.1523/JNEUROSCI.0052-16.2016

- 49.Lescroart MD, Kanwisher N, & Golomb JD (2016). No evidence for automatic remapping of stimulus features or location found with fMRI. Frontiers in Systems Neuroscience, 10, 53.

- 50.Edwards G, VanRullen R, & Cavanagh P (2018). Decoding Trans-Saccadic Memory. Journal of Neuroscience, 38(5), 1114–1123. doi: 10.1523/JNEUROSCI.0854-17.2017

- 51.Knapen T, Swisher JD, Tong F, & Cavanagh P (2016). Oculomotor Remapping of Visual Information to Foveal Retinotopic Cortex. Frontiers in Systems Neuroscience, 10. doi: 10.3389/fnsys.2016.00054

- 52.Williams MA, Baker CI, Op de Beeck HP, Mok Shim W, Dang S, Triantafyllou C, & Kanwisher N (2008). Feedback of visual object information to foveal retinotopic cortex. Nature Neuroscience, 11(12), 1439–1445. doi: 10.1038/nn.2218

- 53.Yao T, Treue S, & Krishna BS (2016). An Attention-Sensitive Memory Trace in Macaque MT Following Saccadic Eye Movements. PLOS Biology, 14(2), e1002390. doi: 10.1371/journal.pbio.1002390

- 54.Subramanian J, & Colby CL (2013). Shape selectivity and remapping in dorsal stream visual area LIP. Journal of Neurophysiology, 111(3), 613–627. doi: 10.1152/jn.00841.2011

- 55.Higgins E, & Rayner K (2015). Transsaccadic processing: stability, integration, and the potential role of remapping. Attention, Perception, & Psychophysics, 77(1), 3–27. doi: 10.3758/s13414-014-0751-y

- 56.Schut MJ, Van der Stoep N, Postma A, & Van der Stigchel S (2017). The cost of making an eye movement: A direct link between visual working memory and saccade execution. Journal of Vision, 17(6), 15. doi: 10.1167/17.6.15

- 57.Shafer-Skelton A, & Golomb JD (2017). Memory for retinotopic locations is more accurate than memory for spatiotopic locations, even for visually guided reaching. Psychonomic Bulletin & Review. doi: 10.3758/s13423-017-1401-x; * This study provides a striking demonstration of the dominance of the retinotopic coordinate system and of the perceptual consequences of the two-stage remapping process. A previous paper [25] found that people remember spatial locations more accurately in raw retinotopic (gaze-centered) coordinates than in the more ecologically relevant spatiotopic (gaze-independent / world-centered) coordinates, and that errors in reporting spatiotopic locations accumulate with each successive saccade. The current paper shows that the retinotopic system still dominates even when the task involves visually guided action (a reaching task), consistent with a natively retinotopic memory store that is imperfectly updated after each eye movement.

- 58.Treisman A (1996). The binding problem. Curr Opin Neurobiol, 6(2), 171–178.

- 59.Treisman AM, & Gelade G (1980). A feature-integration theory of attention. Cognitive Psychology, 12(1), 97–136.

- 60.Szinte M, Jonikaitis D, Rolfs M, Cavanagh P, & Deubel H (2016). Presaccadic motion integration between current and future retinotopic locations of attended objects. Journal of Neurophysiology, 116(4), 1592–1602. doi: 10.1152/jn.00171.2016

- 61.Dowd EW, & Golomb JD (2019). Object-Feature Binding Survives Dynamic Shifts of Spatial Attention. Psychological Science, 0956797618818481. doi: 10.1177/0956797618818481

- 62.Lisi M, Cavanagh P, & Zorzi M (2015). Spatial constancy of attention across eye movements is mediated by the presence of visual objects. Attention, Perception, & Psychophysics, 77(4), 1159–1169. doi: 10.3758/s13414-015-0861-1

- 63.Boon PJ, Zeni S, Theeuwes J, & Belopolsky AV (2018). Rapid updating of spatial working memory across saccades. Scientific Reports, 8(1), 1072. doi: 10.1038/s41598-017-18779-9

- 64.Pertzov Y, Zohary E, & Avidan G (2010). Rapid formation of spatiotopic representations as revealed by inhibition of return. J Neurosci, 30(26), 8882–8887. doi: 10.1523/JNEUROSCI.3986-09.2010

- 65.Hilchey MD, Klein RM, Satel J, & Wang Z (2012). Oculomotor inhibition of return: How soon is it “recoded” into spatiotopic coordinates? Attention, Perception, & Psychophysics, 74(6), 1145–1153.

- 66.Yao T, Ketkar M, Treue S, & Krishna BS (2016). Visual attention is available at a task-relevant location rapidly after a saccade. eLife, 5. doi: 10.7554/eLife.18009; * Unlike previous reports, this paper failed to find evidence in human behavior for attentional interference from either predictive remapping or a retinotopic trace, suggesting additional variability in the time course of updating.

- 67.Currie CB, McConkie GW, Carlson-Radvansky LA, & Irwin DE (2000). The role of the saccade target object in the perception of a visually stable world. Perception & Psychophysics, 62(4), 673–683.

- 68.Hollingworth A, Richard AM, & Luck SJ (2008). Understanding the function of visual short-term memory: Transsaccadic memory, object correspondence, and gaze correction. Journal of Experimental Psychology: General, 137(1), 163–181. doi: 10.1037/0096-3445.137.1.163

- 69.Deubel H (2004). Localization of targets across saccades: Role of landmark objects. Visual Cognition, 11(2), 173–202.

- 70.Churan J, Guitton D, & Pack CC (2011). Context dependence of receptive field remapping in superior colliculus. Journal of Neurophysiology, 106(4), 1862–1874. doi: 10.1152/jn.00288.2011

- 71.Rao HM, Abzug ZM, & Sommer MA (2016). Visual continuity across saccades is influenced by expectations. Journal of Vision, 16(5), 7. doi: 10.1167/16.5.7; * An elegant study varying the probability of stability for different objects (i.e. whether a given object was more or less likely to change position across a saccade). They show that these learned expectations are incorporated into participants’ judgments of visual stability.

- 72.Atsma J, Maij F, Koppen M, Irwin DE, & Medendorp WP (2016). Causal Inference for Spatial Constancy across Saccades. PLOS Computational Biology, 12(3), e1004766. doi: 10.1371/journal.pcbi.1004766