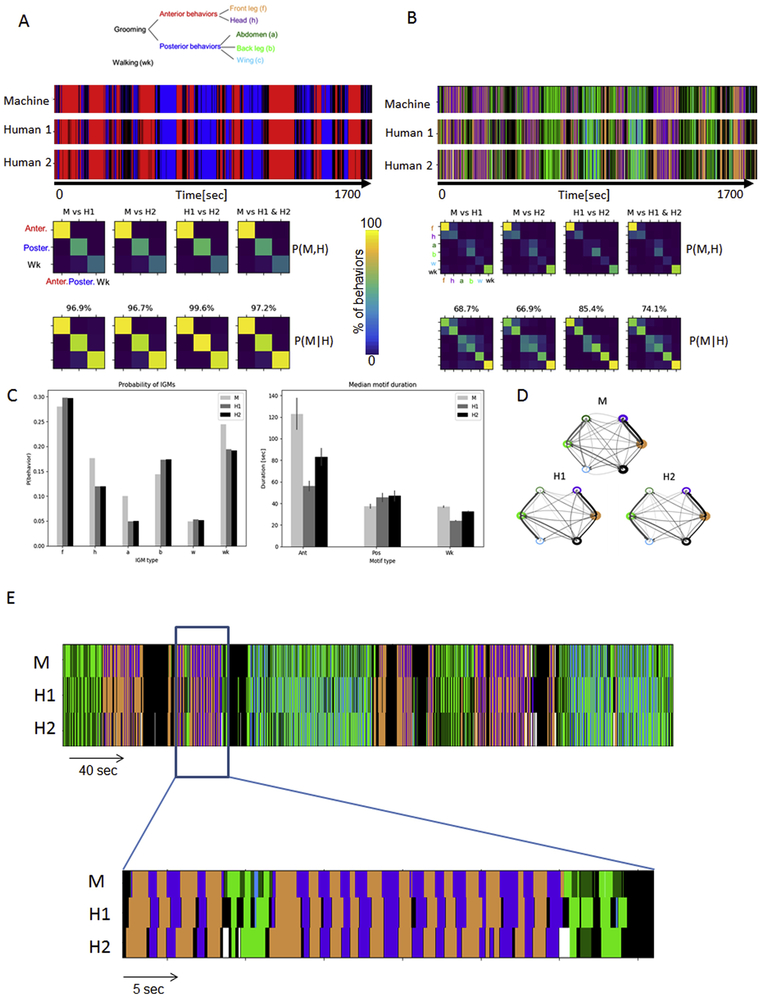

Figure 6. Validation of the automatic behavior recognition system (ABRS).

A: Ethograms of grooming motifs (Anterior, red; Posterior, blue; and Walking, black) obtained by machine (M) and human observers (H1, H2) show good agreement, which is quantified below in confusion matrices (rows – machine; columns – humans). Diagonal terms show agreement and off-diagonal terms show disagreement; comparisons between machine (M) and humans (H1 and H2), between two human observers, and between machine and the consensus between humans all show greater than 90% agreement.
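The agreement figures in panel A can be illustrated with a minimal sketch that assumes each ethogram is a frame-by-frame list of labels of equal length. The function names and the toy data below are hypothetical, and the caption does not state how the published matrices were normalized; this version simply counts frames and reports the diagonal as a percentage.

```python
from collections import Counter

def confusion_matrix(machine, human, labels):
    """Count frames per (machine, human) label pair.

    Rows index the machine label, columns index the human label,
    matching the row/column convention stated in the caption.
    """
    if len(machine) != len(human):
        raise ValueError("ethograms must cover the same frames")
    counts = Counter(zip(machine, human))
    return [[counts[(m, h)] for h in labels] for m in labels]

def percent_agreement(matrix):
    """Percentage of frames on the diagonal (both annotators agree)."""
    total = sum(sum(row) for row in matrix)
    agree = sum(matrix[i][i] for i in range(len(matrix)))
    return 100.0 * agree / total if total else float("nan")

# Toy ethograms (made-up data, not taken from the figure).
labels = ["anterior", "posterior", "walking"]
machine = ["anterior"] * 4 + ["posterior"] * 3 + ["walking"] * 3
human = ["anterior"] * 3 + ["posterior"] * 4 + ["walking"] * 3

cm = confusion_matrix(machine, human, labels)
agreement = percent_agreement(cm)
```

In this toy case the two annotations differ only in the frame where the anterior motif ends, so a single off-diagonal count appears in the anterior row.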

B: The accuracy of detecting individual grooming movements (IGMs) was also assessed by comparing human and machine ethograms using confusion matrices, which show 60-90% agreement.

C: The ABRS performs similarly to human observers when aggregate characteristics of grooming behavior are considered. The percentages of time spent performing each individual grooming movement (f, h, a, b, w) and walking (wk) are very similar between automatic (light gray) and manual (gray and black bars) annotation (left). The median behavioral motif durations are also very similar across all three methods of annotation.
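The two aggregate measures in panel C can be sketched as follows, again assuming a frame-by-frame label list. The function names, the `frame_rate_hz` parameter, and the toy sequence are illustrative assumptions and are not taken from the ABRS code.

```python
from collections import Counter
from itertools import groupby
from statistics import median

def time_fractions(ethogram):
    """Percentage of frames assigned to each label."""
    counts = Counter(ethogram)
    n = len(ethogram)
    return {label: 100.0 * c / n for label, c in counts.items()}

def median_bout_durations(ethogram, frame_rate_hz=30.0):
    """Median duration in seconds of uninterrupted runs of each label.

    frame_rate_hz is an assumed recording rate; set it to the real one.
    """
    bouts = {}
    for label, run in groupby(ethogram):
        n_frames = sum(1 for _ in run)
        bouts.setdefault(label, []).append(n_frames / frame_rate_hz)
    return {label: median(durations) for label, durations in bouts.items()}

# Toy ethogram (made-up): f = front leg, h = head, wk = walking.
toy = ["f"] * 4 + ["h"] * 2 + ["f"] * 2 + ["h"] * 4 + ["wk"] * 4

fractions = time_fractions(toy)
durations = median_bout_durations(toy, frame_rate_hz=1.0)
```

Running the same two functions on the machine ethogram and on each human ethogram gives the per-annotator values that a comparison like panel C puts side by side.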

D: Syntax structure of grooming behavior obtained by human and machine. Edge thickness shows transition probabilities, while node thickness and edge shade indicate the probability of each behavior. As an example, the front leg ↔ head transition probabilities are $P_{M}(h \mid f) = 0.92$ and $P_{M}(f \mid h) = 0.98$ for the machine, versus $P_{H1}(h \mid f) = 0.92$, $P_{H1}(f \mid h) = 0.73$ and $P_{H2}(h \mid f) = 0.97$, $P_{H2}(f \mid h) = 0.80$ for the humans.
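A transition probability such as P(h∣f) can be read as the probability that the next behavior is h given that the current one is f. The caption does not say how these values were estimated; the sketch below assumes first-order transitions between consecutive distinct behaviors, with repeated frames collapsed into single bouts. The function name and toy sequence are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import groupby

def transition_probabilities(ethogram):
    """Estimate P(next | current) from a frame-by-frame label sequence.

    Consecutive identical frames are collapsed into one bout first, so
    only changes of behavior count as transitions (an assumption).
    """
    sequence = [label for label, _ in groupby(ethogram)]
    counts = defaultdict(Counter)
    for current, nxt in zip(sequence, sequence[1:]):
        counts[current][nxt] += 1
    return {
        current: {nxt: c / sum(row.values()) for nxt, c in row.items()}
        for current, row in counts.items()
    }

# Toy ethogram (made-up): f = front leg, h = head, a = abdomen.
toy = list("ffhhffhhhaaffh")
probs = transition_probabilities(toy)
p_h_given_f = probs["f"]["h"]
p_f_given_h = probs["h"]["f"]
```

Each row of the result sums to 1, so the values map directly onto the outgoing edges of a node in a syntax graph like the one in panel D.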

E: Most disagreements between machine and humans concern the precise beginnings and ends of IGMs. The general pattern of alternations between f and h is similar, but the exact start and end points differ.