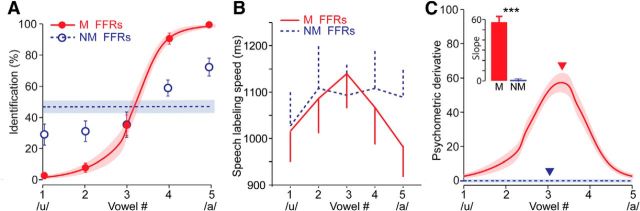

Figure 2.

Enhanced subcortical encoding supports categorical speech percepts. A, Grand-average psychometric functions. Identification scores for each listener's perception of musicians' and nonmusicians' brainstem potentials were fit with sigmoidal (logistic) functions. B, Speech labeling speeds for vowel classification. When listening to acoustic playbacks of musicians' neural frequency-following responses (FFRs), listeners hear a clear perceptual shift in phonetic category (/u/ vs /a/). They are also much slower at labeling stimuli near the categorical boundary (vw3) than within-category tokens (e.g., vw1–2 or vw4–5). These are the hallmarks of categorical perception (Pisoni and Tash, 1974; Bidelman et al., 2013). In contrast, nonmusicians' FFRs are perceived at near-chance levels and identified with comparable RTs across all tokens, so they do not allow listeners to classify speech accurately. C, Slopes of the psychometric functions, computed as the first derivative of listeners' identification functions in A. Inset, Maximal slope at the categorical boundary (max slope = ▾). Musicians' neural speech representations yield steeper, more dichotomous speech percepts and a clearer categorical boundary than nonmusicians'; ***p < 0.001; error bars/shading = ±SEM.
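Panels A and C rest on a standard psychometric analysis. A sigmoid is fit to the identification scores, and its first derivative is then taken to locate the steepest point at the category boundary. The Python sketch below illustrates that arithmetic under stated assumptions. It assumes a two-parameter logistic P(x) = 1/(1 + e^(-k(x - x0))) and a five-step continuum coded 1 to 5 (vw1 to vw5). It also uses a brute-force least-squares grid search as a stand-in for whatever fitting routine the authors actually used. The function names (`logistic`, `logistic_slope`, `fit_logistic`, `max_slope`), the grid bounds, and the synthetic scores are all hypothetical. They are not the study's code or data.

```python
import math


def logistic(x, x0, k):
    """Hypothetical two-parameter logistic: proportion of /a/ responses at step x.
    x0 = category boundary (50% point), k = steepness."""
    return 1.0 / (1.0 + math.exp(-k * (x - x0)))


def logistic_slope(x, x0, k):
    """First derivative of the logistic: dP/dx = k * P * (1 - P)."""
    p = logistic(x, x0, k)
    return k * p * (1.0 - p)


def fit_logistic(steps, scores):
    """Least-squares fit of (x0, k) by exhaustive grid search.
    Grid bounds and resolution are arbitrary illustrative choices;
    a real analysis would use a nonlinear optimizer instead."""
    x0_grid = [round(1.0 + 0.1 * i, 1) for i in range(41)]  # 1.0 .. 5.0
    k_grid = [round(0.1 * j, 1) for j in range(1, 101)]     # 0.1 .. 10.0
    best = None
    for x0 in x0_grid:
        for k in k_grid:
            sse = sum((logistic(x, x0, k) - y) ** 2 for x, y in zip(steps, scores))
            if best is None or sse < best[0]:
                best = (sse, x0, k)
    return best[1], best[2]


def max_slope(x0, k, lo=1.0, hi=5.0, n=401):
    """Evaluate dP/dx on a dense grid over the continuum and
    return (location, value) of its peak."""
    xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    peak_x = max(xs, key=lambda x: logistic_slope(x, x0, k))
    return peak_x, logistic_slope(peak_x, x0, k)


if __name__ == "__main__":
    steps = [1, 2, 3, 4, 5]  # vw1..vw5
    # Synthetic scores generated from known parameters (NOT study data).
    scores = [logistic(x, 3.0, 2.0) for x in steps]
    x0, k = fit_logistic(steps, scores)
    loc, peak = max_slope(x0, k)
    print(f"boundary x0={x0}, steepness k={k}")
    print(f"max slope {peak:.3f} at step {loc:.2f} (analytic k/4 = {k / 4:.3f})")
```

For a logistic, the derivative k·P·(1 − P) peaks at the boundary x0 with value k/4. A steeper fitted function (larger k) therefore directly yields a larger maximal slope, which is the quantity summarized in the inset of C.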