Electrical signals in networks of nanoparticles emulate correlated avalanches of signals and criticality in the brain.

Abstract

Current efforts to achieve neuromorphic computation are focused on highly organized architectures, such as integrated circuits and regular arrays of memristors, which lack the complex interconnectivity of the brain and so are unable to exhibit brain-like dynamics. New architectures are required, both to emulate the complexity of the brain and to achieve critical dynamics and consequent maximal computational performance. We show here that electrical signals from self-organized networks of nanoparticles exhibit brain-like spatiotemporal correlations and criticality when fabricated at a percolating phase transition. Specifically, the sizes and durations of avalanches of switching events are power law distributed, and the power law exponents satisfy rigorous criteria for criticality. These signals are therefore qualitatively and quantitatively similar to those measured in the cortex. Our self-organized networks provide a low-cost platform for computational approaches that rely on spatiotemporal correlations, such as reservoir computing, and are an important step toward creating neuromorphic device architectures.

INTRODUCTION

There is currently huge interest in building neuromorphic computational systems that operate on brain-like principles to perform certain tasks (e.g., classification and pattern recognition) much more efficiently than on traditional computers. Neuromorphic processing has been implemented in a number of hardware platforms, using components ranging from traditional silicon-based technology (1, 2) and crossbar memristor arrays (3–6) to novel nanoscale device elements that use atomic-scale dynamics to mimic the behavior of synapses and neurons (7–9). All of these approaches rely on highly ordered architectures, but neuromorphic computational paradigms such as reservoir computing (RC) (10–12) require complex networks with strong inherent spatiotemporal correlations (13, 14). Maximal computational performance (15–17) in these systems requires network architectures that are not only complex but also critical (18).

At a critical point (17–20), the underlying network is scale free (21, 22), and the dynamics are characterized by scale-invariant avalanches, as observed in many systems ranging from earthquakes to biological extinctions and magnetization dynamics (23). Put simply, a critical system is poised such that any event is likely to trigger subsequent events, leading to a cascade (15). Avalanches of events are an important feature of both in vivo and in vitro recordings of neuronal signals (24–27), and substantial evidence has accumulated that the brain itself operates at a self-organized critical point (20), which optimizes information processing, memory, and information transfer (15–19). Therefore, in designing brain-inspired computational systems, it is natural to look for systems that might exhibit similar critical behavior and, especially, systems that would allow electronic signal processing.

The percolation transition

Percolating systems (28) are obvious candidates for investigation since the concept of percolation (Fig. 1) is central to discussions of criticality and avalanches (17). The two-dimensional percolating system of interest here comprises conducting nanoparticles deposited on an insulating substrate (29) (Fig. 1A). During deposition (see Materials and Methods), particles come into contact and form groups that are separated from each other by tunnel gaps. The groups increase in size and complexity as more particles are deposited until the onset of conduction across the system (Fig. 1, B and D), which occurs when the fraction of the surface that is covered with conducting elements is pc ~68% (28–31). This onset marks the percolation threshold, which is a critical point between insulating and conducting phases (28) at which avalanches might be expected to propagate (17) (see Fig. 1D). We hypothesized that when poised at this phase transition, the percolating assembly of nanoparticles might be promising for neuromorphic computing since (i) the correlation length diverges (28) and so long-range correlations are expected; (ii) it has previously been established that synapse-like atomic-scale switching processes occur in the tunnel gaps (29); (iii) devices are stable over periods of months (32) as required for real-world applications; and (iv) the complex networks are self-organized by simple, cost-effective processes (32).
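The onset of conduction at a percolation threshold can be illustrated with the textbook model of site percolation on a square lattice. This is an assumption for illustration only, not a model of the continuum deposition used here: each site is occupied with probability p, tunnel gaps are ignored, and the code simply checks whether occupied sites connect the left edge to the right edge (a stand-in for a conducting path between the two electrodes). For this lattice model the threshold is p_c ≈ 0.593, not the ~68% coverage quoted above.

```python
import numpy as np
from collections import deque


def spans(occupied: np.ndarray) -> bool:
    """True if occupied sites connect the left column to the right column.

    Breadth-first search over nearest-neighbour (4-connected) occupied sites.
    """
    n_rows, n_cols = occupied.shape
    seen = np.zeros_like(occupied, dtype=bool)
    queue = deque()
    # Seed the search with every occupied site in the left column ("left electrode").
    for r in range(n_rows):
        if occupied[r, 0]:
            seen[r, 0] = True
            queue.append((r, 0))
    while queue:
        r, c = queue.popleft()
        if c == n_cols - 1:
            return True  # reached the right column ("right electrode")
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < n_rows and 0 <= cc < n_cols and occupied[rr, cc] and not seen[rr, cc]:
                seen[rr, cc] = True
                queue.append((rr, cc))
    return False


def spanning_probability(p: float, size: int = 50, trials: int = 50, seed: int = 0) -> float:
    """Fraction of random size x size lattices (occupation probability p) that span."""
    rng = np.random.default_rng(seed)
    hits = sum(spans(rng.random((size, size)) < p) for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for p in (0.40, 0.55, 0.60, 0.65, 0.80):
        print(f"p = {p:.2f}  spanning probability ≈ {spanning_probability(p):.2f}")
```

On larger lattices the spanning probability rises ever more sharply around p_c, which is the finite-size signature of a phase transition.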

Fig. 1. Device schematic, atomic switches, and percolating networks.

(A) Top: Schematic diagram illustrating our simple two-terminal contact geometry and the percolating network of nanoparticles. The different colors represent groups of particles that are in contact with one another. The zoomed region shows a schematic of the growth of an atomic filament within a tunnel gap (switching event) when a voltage is applied. Bottom: The same network schematic presented so as to show the conducting pathways (black) that result from atomic filament formation within the gaps between groups. (B) Schematic showing that the conductance of a device during deposition of conducting nanoparticles follows a power law (28), ~(p − pc)^1.3 (30) (the critical surface coverage is pc ~68%). arb, arbitrary units. (C) A scanning electron microscope image of a percolating device. Scale bar, 200 nm. (D) Schematic diagrams showing the following. Left: In the subcritical, insulating phase at low coverage, groups of particles are small and well separated, so if an atomic switch connects two groups, it is unlikely to trigger another switching event. Right: In the supercritical, conducting phase at higher coverages, highly connected pathways across the network mean that when an atomic filament bridges a tunnel gap, an avalanche can propagate only to a few nearby tunnel gaps. Center: In the critical phase, avalanches propagate on multiple length and time scales. See also fig. S2 for further details.

Near the percolation threshold, tunneling across gaps between particles contributes to the conductivity of the network (see Fig. 1 and fig. S1), and it is necessary to go beyond standard approaches to continuum percolation that consider only ohmic connections between well-connected particles (28). Percolating-tunneling systems have, however, received relatively little theoretical attention [see (31, 33) and references therein], and quantities such as the relevant percolation critical exponents have yet to be calculated. Experimentally, it is observed that external electrical stimuli cause conductive filaments to be continually formed and broken within the tunnel gaps (Fig. 1A, insets) (29), leading to a complex and dynamical network comprising conducting pathways, switches, and tunnel junctions (further illustrated in fig. S2).

Behavior of critical networks

The importance of criticality for neuromorphic computing was discussed in previous work on silver nanowire networks (13), but criticality in self-organized nanodevices has never been investigated systematically. Here, we investigate criticality in percolating nanoparticle networks using analysis techniques similar to those previously used on recordings of neuronal signals (24–27).

Figure 1 and fig. S2 show how avalanches are generated in the dynamical percolating network. When an atomic switch is activated in one tunnel gap, consequent changes in the electric field (and current) in other tunnel gaps cause further events at other locations in the network (34). That is, each successive switching event leads to others, creating an avalanche. As illustrated in Fig. 1D and fig. S2, the surface coverage is important in controlling the network connectivity: If too many (or too few) events are triggered, then the avalanche will accelerate uncontrollably (or die quickly). Critical dynamics lie between these extremes and are characterized by power law distributions in which there is no single characteristic length or time scale (17) and in which there is a small but important chance that very large avalanches are observed [see Fig. 1, fig. S2, and (15)]. While the propagation of avalanches is commonly described as a percolation phenomenon (17), avalanches have not previously been reported in percolating networks.
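The distinction between avalanches that die quickly, run away, or are scale free can be sketched with a textbook Galton-Watson branching process. This is an illustrative assumption, not a model of the nanoparticle network: each event triggers a Poisson-distributed number of new events with mean m (the branching ratio). For m < 1 avalanches stay small, for m > 1 a finite fraction grows without bound (capped here at a hypothetical `max_size`), and at m = 1 sizes are power law distributed (mean-field P(S) ∝ S^(−3/2)).

```python
import numpy as np


def avalanche_size(branching_ratio: float, rng: np.random.Generator, max_size: int = 10_000) -> int:
    """Total number of events in one avalanche of a Galton-Watson branching process."""
    active, size = 1, 1
    while active > 0 and size < max_size:
        # Each active event triggers Poisson(branching_ratio) new events; a sum of
        # independent Poisson variables is Poisson with the summed mean.
        active = rng.poisson(branching_ratio * active)
        size += active
    return min(size, max_size)


def simulate(branching_ratio: float, n: int = 2000, seed: int = 1) -> np.ndarray:
    """Sizes of n independent avalanches, each started by a single event."""
    rng = np.random.default_rng(seed)
    return np.array([avalanche_size(branching_ratio, rng) for _ in range(n)])


if __name__ == "__main__":
    for m in (0.5, 1.0, 1.5):
        sizes = simulate(m)
        print(f"m = {m}: median {np.median(sizes):.0f}, largest {sizes.max()}, "
              f"fraction reaching cap {np.mean(sizes >= 10_000):.2f}")
```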

RESULTS

Avalanches of electrical signals and correlations

The simple contact geometry (Fig. 1A) allows us to sensitively observe electrical signals from the entire network, and Fig. 2A shows complex patterns of switching events [observed as discrete changes in the device conductance (G)], which are qualitatively similar to those observed due to neuronal avalanches in cortical tissue (24–27). Figure 2A highlights the self-similar nature of the avalanches, with characteristic bursts of activity on multiple time scales. Quantitative analysis of the avalanches is discussed below. Figure 2 (B and E) shows that the measured changes in conductance (ΔG) range over about three orders of magnitude, with different signal sizes reflecting the locations of the switches on different pathways through the highly branched network. The distribution of measured ΔG values is heavy tailed (Fig. 2, B and E), consistent with spatial self-similarity in percolating structures (35).

Fig. 2. Spatial and temporal self-similarity and correlation in switching activity.

(A) Percolating devices produce complex patterns of switching events that are self-similar in nature. The top panel contains 2400 s of data, with the bottom panels showing segments of the data with 10, 100, and 1000 times greater temporal magnification and with 3, 9, and 27 times greater magnification on the vertical scale (units of G0 = 2e^2/h, the quantum of conductance, are used for convenience). The activity patterns appear qualitatively similar on multiple different time scales. (B and E) The probability density function (PDF) for changes in total network conductance, P(ΔG), resulting from switching activity exhibits heavy-tailed probability distributions. (C and F) IEIs follow power law distributions, suggestive of correlations between events. (D and G) Further evidence of temporal correlation between events is given by the autocorrelation function (ACF) of the switching activity (red), which decays as a power law over several decades. When the IEI sequence is shuffled (gray), the correlations between events are destroyed, resulting in a significant increase in slope in the ACF. The data shown in (B) to (D) (sample I) were obtained with our standard (slow) sampling rate, and the data shown in (E) to (G) (sample II) were measured 1000 times faster (see Materials and Methods), providing further evidence for self-similarity.

Histograms of interevent intervals (IEIs) (Fig. 2, C and F) show clear power law behavior, implying scale-free dynamics. The corresponding autocorrelation functions (ACFs) are shown in Fig. 2 (D and G). Again, power law behavior is observed. The data shown in Fig. 2 (D and G) are obtained with sampling rates that are different by a factor of a thousand, and yet the power law exponents (β) obtained are almost identical (β ~0.2). Together, these data cover more than five orders of magnitude in time and provide strong evidence for temporal self-similarity (36). However, so-called renewal processes, where successive IEIs are uncorrelated, can also exhibit power law ACFs (37), and so we show in Fig. 2 (D and G) the ACFs obtained when the sequence of IEIs is randomly shuffled to destroy any correlations. The experimental data have a much smaller slope than the shuffled data, indicating long-range temporal correlations (36). We note that in the neuroscience literature, it is often difficult to directly measure ACFs, and so detrended fluctuation analysis is commonly used to quantify correlations [see (36, 38) and citations therein]. For comparison, we use the relationship β = 2 − 2H (38) to estimate the Hurst exponent (H). We find H ~0.9, consistent with the strong correlations described below. This is a first piece of evidence for highly correlated activity in our networks, but the more detailed analysis below is required to unambiguously demonstrate avalanches and criticality.
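The shuffling control and the Hurst-exponent conversion described above can be sketched as follows. This is a minimal illustration under stated assumptions: the ACF is computed on event counts in fixed time bins (the bin width is a hypothetical free parameter), and the exponent β would then be estimated from the slope of log ACF against log lag. The shuffled surrogate keeps exactly the same IEIs but randomizes their order, which is what destroys correlations while preserving the IEI distribution.

```python
import numpy as np


def shuffled_surrogate(event_times, rng: np.random.Generator) -> np.ndarray:
    """Event train with the same interevent intervals (IEIs) in random order."""
    t = np.sort(np.asarray(event_times, dtype=float))
    ieis = np.diff(t)
    return t[0] + np.concatenate(([0.0], np.cumsum(rng.permutation(ieis))))


def event_train_acf(event_times, bin_width: float, max_lag: int) -> np.ndarray:
    """ACF of the binned event-count series at lags 1..max_lag (in bins)."""
    t = np.sort(np.asarray(event_times, dtype=float))
    counts = np.bincount(((t - t[0]) // bin_width).astype(int)).astype(float)
    x = counts - counts.mean()
    denom = np.dot(x, x)
    return np.array([np.dot(x[:-k], x[k:]) / denom for k in range(1, max_lag + 1)])


def hurst_from_acf_exponent(beta: float) -> float:
    """Invert beta = 2 - 2H for a power law ACF that decays as lag**(-beta)."""
    return (2.0 - beta) / 2.0


if __name__ == "__main__":
    print(hurst_from_acf_exponent(0.2))  # approximately 0.9, as quoted in the text
```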

Demonstration of criticality

In critical systems, the sizes (S) and durations (T) of avalanches have probability density functions (PDFs) that follow well-defined power law distributions

$$P(S) \propto S^{-\tau} \tag{1}$$

$$P(T) \propto T^{-\alpha} \tag{2}$$

and the mean sizes of the avalanches are related to their durations by

$$\langle S \rangle(T) \propto T^{1/\sigma\nu z} \tag{3}$$

where 1/σνz is a key characteristic exponent (23). As explained in (39), in critical systems, the estimate of 1/σνz obtained from Eq. 3 should be consistent with that estimated from the “crackling relationship”

$$\frac{\alpha - 1}{\tau - 1} = \frac{1}{\sigma\nu z} \tag{4}$$

In addition, the shapes of the avalanches should also exhibit a characteristic “collapse” onto a single curve (23, 25, 40), yielding an additional independent estimate of 1/σνz. To demonstrate criticality (i.e., to distinguish from other processes, such as random walks, that can also lead to power law distributed avalanches), these three independent estimates of 1/σνz must be in agreement (23, 27, 39).
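A minimal sketch of the crackling-relationship check: it evaluates Eq. 4 from τ and α and propagates their uncertainties to first order, so that the result can be compared with the other two estimates of 1/σνz. The ±0.1 uncertainties in the usage below are the values quoted in Materials and Methods; the one-standard-uncertainty agreement criterion in `agree` is an illustrative assumption, not the statistical test used in this work.

```python
import math


def crackling_prediction(tau: float, alpha: float, d_tau: float = 0.0, d_alpha: float = 0.0):
    """Return 1/(sigma nu z) = (alpha - 1)/(tau - 1) and its first-order uncertainty."""
    if tau <= 1.0:
        raise ValueError("tau must exceed 1 for the ratio to be defined")
    value = (alpha - 1.0) / (tau - 1.0)
    # Partial derivatives: d/d(alpha) = 1/(tau - 1); d/d(tau) = -(alpha - 1)/(tau - 1)**2.
    err = math.hypot(d_alpha / (tau - 1.0), (alpha - 1.0) * d_tau / (tau - 1.0) ** 2)
    return value, err


def agree(a: float, da: float, b: float, db: float, k: float = 1.0) -> bool:
    """True if two estimates differ by at most k combined standard uncertainties."""
    return abs(a - b) <= k * math.hypot(da, db)


if __name__ == "__main__":
    value, err = crackling_prediction(tau=2.0, alpha=2.7, d_tau=0.1, d_alpha=0.1)
    print(f"1/(sigma nu z) from Eq. 4: {value:.2f} ± {err:.2f}")
```

With τ ≈ 2 and α ≈ 2.7, Eq. 4 gives about 1.7 ± 0.2, which overlaps the value of ~1.6 obtained from Eq. 3.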

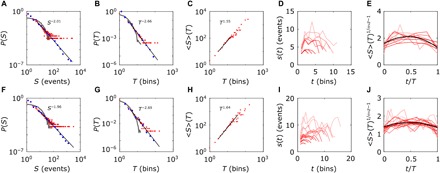

Avalanches in our percolating networks have sizes and durations (see Materials and Methods) that are distributed in accordance with power laws (Eqs. 1 and 2), as shown in Fig. 3 (A and F) and Fig. 3 (B and G). After shuffling the sequence of IEIs in the original data, correlations are destroyed, and both distributions become exponential (gray curves) (41), confirming that the original data are highly correlated. Figure 3 (C and H) shows that the mean avalanche size (〈S〉) depends on avalanche duration (T) as expected from Eq. 3, with 1/σνz ~1.6. Physically, the slope of the 〈S〉(T) plots is related to the fractal dimension of the avalanches (23).
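The Eq. 3 estimate can be sketched as follows: average the sizes of all avalanches sharing a duration, then fit a straight line in log-log coordinates by ordinary least squares (Materials and Methods states that linear regression was used for 〈S〉(T)). Durations are assumed here to be in units of time bins; the function name is hypothetical.

```python
import numpy as np


def size_duration_exponent(sizes, durations) -> float:
    """Estimate 1/(sigma nu z) from <S>(T) ∝ T**(1/(sigma nu z))."""
    sizes = np.asarray(sizes, dtype=float)
    durations = np.asarray(durations)
    unique_T = np.unique(durations)
    # Mean avalanche size for each distinct duration.
    mean_S = np.array([sizes[durations == T].mean() for T in unique_T])
    # Slope of log10 <S> versus log10 T is the exponent.
    slope, _intercept = np.polyfit(np.log10(unique_T), np.log10(mean_S), 1)
    return float(slope)
```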

Fig. 3. Statistical distributions of avalanche properties and shape collapse.

The distributions shown in (A) to (E) (sample I, slow sampling rate) and (F) to (J) (sample II, fast sampling rate) demonstrate the existence of self-similar avalanches across multiple time scales. (A and F) PDFs of avalanche size and (B and G) PDFs of avalanche duration both follow power laws with exponents τ ≈ 2 and α ≈ 2.7, respectively, providing strong evidence for temporal correlations. Red, distribution presented using standard (linear) bin sizes; blue, distribution presented using logarithmic bin sizes to allow visualization of the heavy tail; gray, distribution after shuffling of the sequence of IEIs in the original data to destroy correlations. (C and H) The mean avalanche sizes are a power law function of their duration with exponent 1/σνz ≈ 1.6. (D and I) The mean avalanche shapes for each unique duration are shown to collapse (E and J, respectively) onto scaling functions (black lines). This shape collapse yields an independent measure of the critical exponent 1/σνz ≈ 1.6. As discussed in the main text and shown explicitly in Fig. 4, the values of the critical exponents τ, α, and 1/σνz satisfy the crackling relationship (Eq. 4) within the measurement uncertainties.

Figure 3 (D, E, I, and J) shows that the avalanches exhibit shape collapse. As is the case for neuronal recordings (25, 40), this requires an enormous number of avalanches and necessarily yields an estimate of 1/σνz that has a relatively large uncertainty. The avalanche profiles are consistent with the parabolic scaling functions reported for neuronal avalanches (25). Detailed investigation of avalanche shapes may eventually yield further understanding of the underlying processes (42, 43), but such an analysis would require a much larger amount of data so as to improve the signal-to-noise ratio in these plots. Nevertheless, as shown in Fig. 4, the values of 1/σνz obtained from shape collapse are consistent with those obtained from the crackling relationship (Eq. 4) and the mean avalanche sizes (Eq. 3). We emphasize that the important feature of Fig. 4 is that in almost every case, the blue, cyan, and green symbols agree within the uncertainties. This is true for a number of devices, for a range of stimuli, and for repeated independent measurements on the same devices. The fact that all three estimates of 1/σνz are in agreement is very strong evidence for criticality in our percolating devices (39). The agreement of all estimates of 1/σνz even while α and τ vary (Fig. 4) is evidence for self-tuned criticality (18).
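Shape collapse rests on the scaling form V(t, T) ∝ T^(γ−1) F(t/T), with γ = 1/σνz: summing V over an avalanche gives S ∝ T^γ, consistent with Eq. 3. A minimal grid-search sketch follows, assuming shapes are given as event counts per time bin. The normalized-variance cost, the interpolation grid, and the γ range are illustrative choices and not necessarily those of the toolbox used in this work.

```python
import numpy as np


def mean_profiles(profiles_by_duration):
    """Average avalanche shape per duration.

    Maps each duration T (in bins) to a list of 1-D arrays of length T holding the
    number of events per bin within one avalanche.
    """
    return {T: np.mean(np.vstack(ps), axis=0) for T, ps in profiles_by_duration.items()}


def collapse_error(mean_shapes, gamma, grid=np.linspace(0.1, 0.9, 17)):
    """Normalized spread of the rescaled shapes T**(1 - gamma) * V(t/T) on a common grid."""
    rescaled = []
    for T, shape in mean_shapes.items():
        # Bin centres, (t + 0.5) / T, map every duration onto the interval (0, 1).
        x = (np.arange(T) + 0.5) / T
        # np.interp holds end values constant outside [x[0], x[-1]], so very short
        # avalanches (where 0.5 / T exceeds grid[0]) are poorly represented.
        rescaled.append(np.interp(grid, x, shape) * T ** (1.0 - gamma))
    rescaled = np.vstack(rescaled)
    spread = rescaled.max() - rescaled.min()
    # Dividing by spread**2 removes the trivial drop in variance as all shapes shrink.
    return np.mean(np.var(rescaled, axis=0)) / spread**2


def fit_collapse(mean_shapes, gammas=np.linspace(1.0, 3.0, 201)) -> float:
    """Grid search for the gamma = 1/(sigma nu z) that best collapses the shapes."""
    errors = [collapse_error(mean_shapes, g) for g in gammas]
    return float(gammas[int(np.argmin(errors))])
```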

Fig. 4. Demonstration of criticality.

Estimates of three independent measures of 1/σνz are obtained from the crackling relationship (green), plots of mean avalanche size given duration (blue), and avalanche shape collapse (cyan). The blue, cyan, and green symbols agree within the measurement uncertainty in almost every case. Each panel also shows the power law exponents of avalanche size (τ; red) and avalanche duration (α; amber). (A) Critical exponents for sample I over a range of low voltages and for a combined dataset (low sampling rate; see Materials and Methods), showing that the critical exponents are substantially independent of voltage. (B) Comparison of critical exponents measured for sample II for repeated, independent 6 V DC measurements (fast sampling rate; see Materials and Methods). (C) Critical exponents for sample III as a function of voltage (slow sampling rate). (D) Data from a second sequence of measurements on sample III identical to that in (C), showing that while the exponents α and τ vary because of internal reconfigurations of the percolating device, the three estimates of 1/σνz remain in good agreement at every voltage. (E and F) Voltage-dependent data from sample IV (fast and slow sampling rates, respectively), showing that criticality and self-similarity are observed on vastly different time scales. The mean values of 1/σνz were found to be 1.46 ± 0.05, 1.40 ± 0.03, and 1.40 ± 0.04 from the crackling relationship, 〈S〉(T), and shape collapse, respectively (uncertainties are 1 SD), indicating that there is no significant difference between the estimates from the three independent methods. This is confirmed by a single-factor analysis of variance (ANOVA) test (P = 0.47). The (τ, α) data are replotted in fig. S6 to allow a different comparison with the three calculations of 1/σνz (27).

DISCUSSION

The avalanches of switching events observed here exhibit statistics that are qualitatively and quantitatively similar to those of the avalanches observed in cortical tissue (25, 41) and in very recent work on whole organisms (26). Within the brain, criticality is hypothesized to play a role in cognition and adaptive behavior, and it underpins several therapeutic and diagnostic opportunities (19). Criticality in our system originates from long-range spatiotemporal correlations in the network of atomic switches, as required (18) for neuromorphic computing strategies such as RC (10).

In RC, spatiotemporal correlations are required to allow projection of input signals into higher-dimensional spaces; corresponding output signals can then be combined using linear regression to perform computational tasks such as classification, pattern recognition, and time series prediction (10–12). The need to build critical systems to provide a platform for RC has previously been highlighted in (17, 18). Our percolating networks meet that need.
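The RC principle (a fixed, untrained dynamical system projects the input into a high-dimensional state, and only a linear readout is trained) can be sketched in software with an echo state network. This is a stand-in under stated assumptions, not a model of the percolating device: the random tanh reservoir, its size, spectral radius, input scaling, and ridge penalty are all hypothetical choices. In a physical implementation the reservoir states would instead be the electrical signals read out from the network.

```python
import numpy as np


def run_reservoir(u, n_nodes=100, spectral_radius=0.9, input_scale=0.5, seed=0):
    """Drive a fixed random tanh reservoir with scalar inputs u; return the state matrix."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_nodes, n_nodes))
    # Rescale so the largest eigenvalue magnitude is below 1 (fading memory).
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    w_in = input_scale * rng.uniform(-1.0, 1.0, size=n_nodes)
    x = np.zeros(n_nodes)
    states = np.empty((len(u), n_nodes))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + w_in * u_t)
        states[t] = x
    return states


def train_readout(states, target, ridge=1e-6):
    """Ridge (regularized linear) regression: the only trained part of the system."""
    X = np.hstack([states, np.ones((len(states), 1))])  # append a bias column
    return np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ target)


def predict(states, w_out):
    X = np.hstack([states, np.ones((len(states), 1))])
    return X @ w_out
```

As a usage example, driving the reservoir with sin(0.2t), discarding the first 200 states as a washout, and training the readout to output sin(0.2(t + 1)) gives a one-step-ahead predictor of the signal.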

Previous demonstrations of RC using nanoscale devices have relied on small numbers of device elements (11, 12) because integration into appropriate physical networks is challenging and/or expensive. Furthermore, it is not clear that complex/critical networks could be achieved using lithographic processing. In contrast, the straightforward deposition methods used here immediately provide the required complex network of switches, are inexpensive, and allow electronic readout of correlated signals from the network as required for integration with other electronic components. We believe our processes are scalable because they are compatible with standard complementary metal-oxide-semiconductor (CMOS) processing.

Our percolating networks are naturally suited to RC since deposition onto multielectrode arrays will straightforwardly provide the required multiple inputs and outputs. Ultimately, we envisage architectures in which the percolating network is deposited onto chips with predefined CMOS circuitry that processes input and output signals. The question of how best to code input/output signals for particular computational tasks has so far been given little consideration in the RC literature and will require further investigation since combinations of temporal and spatial input/output coding are possible (12).

We believe that percolating devices could provide many further opportunities for brain-like computing; for example, three-dimensional percolating systems could provide additional network complexity. While our attempts to tune the operating point of the system by varying the surface coverage were unsuccessful (see Materials and Methods), it may yet be possible to tune the system through the critical point using other external control parameters. In addition, strategies can be envisaged that would incorporate a wide range of alternative switching elements within the networks, such as phase-change materials, oxide-based memristors, and electrochemical switches (6–9). Last, we highlight the need for theoretical work/simulations to support device development. Percolating-tunneling networks have so far been relatively little studied (31, 33), and important quantities such as the degree distribution for network connections (21), percolation critical exponents (28), and precise universality class (23) are yet to be elucidated.

MATERIALS AND METHODS

Device fabrication

Our percolating devices were fabricated by simple nanoparticle deposition processes (32, 44). Seven-nanometer Sn nanoparticles were deposited between gold electrodes (spacing, 100 μm) on a silicon nitride surface. Deposition was terminated at the onset of conduction, which corresponds to the percolation threshold (28, 44). The deposition takes place in a controlled environment with a well-defined partial pressure of air and humidity, as described in (32). This process leads to controlled coalescence and fabrication of robust structures, which function for many months but which yet allow atomic-scale switching processes to take place unhindered. The individual atomic-scale filaments formed within tunneling gaps exhibit quantized conduction (29), but, as shown in fig. S1 (E and F), the complex percolating-tunneling network comprises many parallel and series paths, and so the device conductance is not quantized.

As described in the discussion of Fig. 4, data from all devices presented here are consistent with criticality. To explore noncritical networks, several devices were fabricated with surface coverages that were either below or above the percolation threshold (see Fig. 1, B and D). In all cases, however, it was not possible to observe avalanches of events. When stimulated, low-coverage devices exhibit a number of irreversible drops in conductance and rapidly become open circuit: Atomic switches can be opened, but the prevalence of large gaps in the network makes closing switches difficult. High-coverage devices exhibit no switching events for small stimulus voltages and exhibit irreversible drops in conductance for larger stimulus voltages due to melting of particles when large currents flow.

Electrical stimulus and measurement

Electrical stimuli were applied to the contact on one side of the percolating device, while the opposite edge of the system was held at ground potential. While a variety of types of stimuli (voltage pulses and ramps) can be applied to the system, constant DC voltages were used in this work because they facilitate observation of ongoing reconfigurations of the states of the switches in the devices and, in particular, the analysis of avalanches (see below). Measurements over long time periods are also necessary to avoid significant cutoffs in the power law distributions (17). Here, we present data from DC stimulus of four devices, but these data are consistent with those obtained from DC, pulsed, and ramped voltage stimulus of a further 10 devices.

Our electrical measurements were performed using two distinct sets of measurement electronics to allow measurement of the device conductance on two distinct time scales. The first method relies on a picoammeter and is limited to a relatively slow sampling rate (0.1 s sampling interval). The second method uses a fast digital oscilloscope to allow a much higher sampling rate (200 μs sampling interval for the data presented here). As shown in Figs. 2 to 4, both methods resulted in qualitatively and quantitatively similar data, with similar power law exponents for each of the main quantities of interest. Hence, our results and conclusions are not influenced by the sampling rate.

Data analysis

The data analysis methods used in this work are substantially the same as those developed in the neuroscience community to analyze microelectrode array recordings from biological brain tissue (24–27). Events are defined as changes in the conductance signal that exceed a threshold value. We show in fig. S3 that, as in the neuroscience literature, the choice of threshold in a reasonable range does not significantly affect the avalanche analysis. Avalanches were defined following the procedure described in (24, 45): The data were analyzed by defining time bins with width equal to the mean IEI (24), and the duration of the avalanche was defined as the number of consecutive nonempty bins between empty bins. Figure S4 shows the effect of bin size on the avalanche analysis.
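The event and avalanche definitions above can be sketched as follows. Assumptions are labeled: events are taken as sample-to-sample conductance changes larger than the threshold; avalanche size is counted as the number of events (the size definition is not stated in this section); and avalanches that touch the start or end of the record are kept. Event sample indices returned by `detect_events` should be multiplied by the sampling interval before binning.

```python
import numpy as np


def detect_events(conductance, threshold):
    """Sample indices where the conductance changes by more than `threshold`."""
    dG = np.diff(np.asarray(conductance, dtype=float))
    return np.flatnonzero(np.abs(dG) > threshold) + 1


def find_avalanches(event_times, bin_width=None):
    """Split events into avalanches separated by empty time bins.

    bin_width defaults to the mean interevent interval. Returns (sizes, durations):
    size = number of events (an assumption), duration = number of nonempty bins.
    """
    t = np.sort(np.asarray(event_times, dtype=float))
    if bin_width is None:
        bin_width = np.mean(np.diff(t))
    counts = np.bincount(((t - t[0]) // bin_width).astype(int))
    sizes, durations = [], []
    size = dur = 0
    for c in counts:
        if c > 0:
            size += c
            dur += 1
        elif dur > 0:  # an empty bin closes the current avalanche
            sizes.append(size)
            durations.append(dur)
            size = dur = 0
    if dur > 0:  # keep an avalanche that runs to the end of the record
        sizes.append(size)
        durations.append(dur)
    return np.array(sizes), np.array(durations)
```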

Power law exponents and “fitting” processes

We followed the maximum likelihood (ML) approach of (46), as implemented in the Neural Complexity and Criticality MATLAB toolbox by (45), to statistically validate criticality. For all data that can be plotted in the form of a PDF (IEIs, S, and T), the ML estimators were obtained for both power law and exponential distributions. We used the Bayesian information criterion (47) to identify which distribution is more likely and find in all cases that it is the power law (46), as shown in fig. S5.
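The model comparison can be sketched with the continuous-variable ML estimators of (46). This is a minimal Python illustration, not the MATLAB toolbox implementation: it assumes a fixed lower cutoff xmin, no upper cutoff, and continuous rather than discrete data. Because both models have one free parameter, the BIC comparison here reduces to comparing log-likelihoods.

```python
import numpy as np


def fit_power_law(x, xmin):
    """Continuous power law p(x) ∝ x**(-a), x >= xmin: ML exponent, log-likelihood, n."""
    x = np.asarray(x, dtype=float)
    x = x[x >= xmin]
    n = len(x)
    log_ratio = np.log(x / xmin)
    a = 1.0 + n / log_ratio.sum()
    loglik = n * np.log(a - 1.0) - n * np.log(xmin) - a * log_ratio.sum()
    return a, loglik, n


def fit_exponential(x, xmin):
    """Shifted exponential p(x) = lam * exp(-lam * (x - xmin)), x >= xmin."""
    x = np.asarray(x, dtype=float)
    x = x[x >= xmin]
    n = len(x)
    lam = 1.0 / np.mean(x - xmin)
    loglik = n * np.log(lam) - lam * np.sum(x - xmin)
    return lam, loglik, n


def bic(loglik, n_params, n):
    """Bayesian information criterion; lower is preferred."""
    return n_params * np.log(n) - 2.0 * loglik


def preferred_model(x, xmin):
    _, ll_pl, n = fit_power_law(x, xmin)
    _, ll_exp, _ = fit_exponential(x, xmin)
    return "power law" if bic(ll_pl, 1, n) < bic(ll_exp, 1, n) else "exponential"
```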

We generated 500 random power laws using the calculated ML estimators and iterated the choice of cutoffs (xmin and xmax) for the data range, finding the probability of obtaining a Kolmogorov-Smirnov (KS) distance (46) at least as great as for that of experimental data. In all cases, we failed to reject the null hypothesis that avalanche distributions are power law distributed (we required P > 0.2). Conversely, we rejected the null hypothesis that avalanche distributions are power law distributed for shuffled data (see Fig. 3, A, B, F, and G) (41), i.e., when we removed the correlations in the experimental avalanche data, we found that the distributions are exponential: The correlations and avalanches are intimately linked.
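The goodness-of-fit test can be sketched as a parametric bootstrap on the KS distance. This is a simplified illustration: unlike the procedure above, it keeps xmin fixed, uses no upper cutoff xmax, and treats the data as continuous. Each synthetic sample is drawn from the fitted power law and refitted, so that it is treated exactly like the data.

```python
import numpy as np


def ml_exponent(x, xmin):
    """Continuous ML power law exponent for x >= xmin."""
    x = np.asarray(x, dtype=float)
    x = x[x >= xmin]
    return 1.0 + len(x) / np.log(x / xmin).sum()


def ks_distance(x, a, xmin):
    """Largest gap between the empirical CDF and a power law CDF with exponent a."""
    x = np.sort(np.asarray(x, dtype=float))
    x = x[x >= xmin]
    n = len(x)
    model_cdf = 1.0 - (x / xmin) ** (1.0 - a)
    emp_hi = np.arange(1, n + 1) / n  # empirical CDF just after each point
    emp_lo = np.arange(0, n) / n  # empirical CDF just before each point
    return max(np.max(emp_hi - model_cdf), np.max(model_cdf - emp_lo))


def power_law_p_value(x, xmin, n_sims=500, seed=0):
    """Fraction of synthetic power laws with a KS distance at least that of the data."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    x = x[x >= xmin]
    a = ml_exponent(x, xmin)
    d_data = ks_distance(x, a, xmin)
    count = 0
    for _ in range(n_sims):
        # Inverse-transform sampling from the fitted power law.
        synth = xmin * (1.0 - rng.random(len(x))) ** (-1.0 / (a - 1.0))
        count += ks_distance(synth, ml_exponent(synth, xmin), xmin) >= d_data
    return count / n_sims
```

A small p value rejects the power law hypothesis; following the criterion above, a power law would be retained only if p > 0.2.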

The KS test is extremely sensitive to small deviations from a mathematical power law (45), and since neuronal avalanche data are commonly observed to have curvature at small x values (48), a minimum x value was introduced (46). The deviation from power law behavior in our data is rather small (see Figs. 2 and 3), but we nevertheless followed (45, 46) and allowed the data to be truncated to show definitively that the distributions are power law. The IEI distributions were found to be power law over about two orders of magnitude in time, with both a lower and upper cutoff. The avalanche size and duration distributions are always found to be power law up to the maximum range of the data, i.e., the data are power law over at least three orders of magnitude for the sizes and two for the durations. In all plots shown here, the range in which the power law model is in agreement with the data is indicated by the solid line. The estimated uncertainties in α and τ are ±0.1. Uncertainties estimated from the ML procedure are smaller but do not reflect the scatter in the data.

The ML methods cannot be applied to data that are not in the form of a probability distribution, so, as in (23, 25, 45), standard linear regression techniques were used to obtain the measured exponents for 〈S〉(T) and A(t).

Acknowledgments

We thank D. O’Neale, M. Plank, and D. Stouffer for helpful discussions and support with ML estimation and associated methods, and J. Beggs, N. McNaughton, and C. Young for discussions on neuronal avalanches and criticality. Funding: This project was financially supported by The MacDiarmid Institute for Advanced Materials and Nanotechnology, the Ministry of Business, Innovation and Employment, and the Marsden Fund UOC1604. Author contributions: S.A.B. conceived the study and initiated the project. J.B.M. initiated and performed the avalanche and criticality analysis. J.B.M., S.S., S.K.B., S.K.A., and E.G. performed the experiments. J.B.M., S.S., S.K.A., S.K.B., and S.A.B. performed the data analysis. S.A.B. and J.B.M. wrote the manuscript with comments from all authors. Competing interests: The authors declare that they have no competing interests. Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Additional data related to this paper may be requested from the authors.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/5/11/eaaw8438/DC1

Fig. S1. Illustration of different types of two-dimensional (2D) percolation model.

Fig. S2. Illustration of 2D percolation with tunneling and atomic switches.

Fig. S3. Choice of threshold.

Fig. S4. Choice of time bin size.

Fig. S5. Comparison of power law and other fits to the avalanche size and duration distributions.

Fig. S6. Scatter plot of α(τ).

REFERENCES AND NOTES

- 1. Merolla P. A., Arthur J. V., Alvarez-Icaza R., Cassidy A. S., Sawada J., Akopyan F., Jackson B. L., Imam N., Guo C., Nakamura Y., Brezzo B., Vo I., Esser S. K., Appuswamy R., Taba B., Amir A., Flickner M. D., Risk W. P., Manohar R., Modha D. S., Artificial brains. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673 (2014).

- 2. Hopkins M., Pineda-García G., Bogdan P. A., Furber S. B., Spiking neural networks for computer vision. Interface Focus 8, 20180007 (2018).

- 3. Wang Z., Joshi S., Savel’ev S., Song W., Midya R., Li Y., Rao M., Yan P., Asapu S., Zhuo Y., Jiang H., Lin P., Li C., Yoon J. H., Upadhyay N. K., Zhang J., Hu M., Strachan J. P., Barnell M., Wu Q., Wu H., Williams R. S., Xia Q., Yang J. J., Fully memristive neural networks for pattern classification with unsupervised learning. Nat. Electron. 1, 137–145 (2018).

- 4. Sheridan P. M., Cai F., Du C., Ma W., Zhang Z., Lu W. D., Sparse coding with memristor networks. Nat. Nanotechnol. 12, 784–789 (2017).

- 5. Prezioso M., Merrikh-Bayat F., Hoskins B. D., Adam G. C., Likharev K. K., Strukov D. B., Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64 (2015).

- 6. Burr G. W., Shelby R. M., Sebastian A., Kim S., Kim S., Sidler S., Virwani K., Ishii M., Narayanan P., Fumarola A., Sanches L. L., Boybat I., Le Gallo M., Moon K., Woo J., Hwang H., Leblebici Y., Neuromorphic computing using non-volatile memory. Adv. Phys. X 2, 89–124 (2017).

- 7. Terabe K., Hasegawa T., Nakayama T., Aono M., Quantized conductance atomic switch. Nature 433, 47–50 (2005).

- 8. Tuma T., Pantazi A., Le Gallo M., Sebastian A., Eleftheriou E., Stochastic phase-change neurons. Nat. Nanotechnol. 11, 693–699 (2016).

- 9. Wang Z., Joshi S., Savel’ev S. E., Jiang H., Midya R., Lin P., Hu M., Ge N., Strachan J. P., Li Z., Wu Q., Barnell M., Li G. L., Xin H. L., Williams R. S., Xia Q., Yang J. J., Memristors with diffusive dynamics as synaptic emulators for neuromorphic computing. Nat. Mater. 16, 101–108 (2017).

- 10. Lukoševičius M., Jaeger H., Reservoir computing approaches to recurrent neural network training. Comput. Sci. Rev. 3, 127–149 (2009).

- 11. Torrejon J., Riou M., Araujo F. A., Tsunegi S., Khalsa G., Querlioz D., Bortolotti P., Cros V., Yakushiji K., Fukushima A., Kubota H., Yuasa S., Stiles M. D., Grollier J., Neuromorphic computing with nanoscale spintronic oscillators. Nature 547, 428–431 (2017).

- 12. Du C., Cai F., Zidan M. A., Ma W., Lee S. H., Lu W. D., Reservoir computing using dynamic memristors for temporal information processing. Nat. Commun. 8, 2204 (2017).

- 13.Stieg A. Z., Avizienis A. V., Sillin H. O., Martin-Olmos C., Aono M., Gimzewski J. K., Emergent criticality in complex turing B-type atomic switch networks. Adv. Mater. 24, 286–293 (2012). [DOI] [PubMed] [Google Scholar]

- 14.Maass W., Natschläger T., Markram H., Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560 (2002). [DOI] [PubMed] [Google Scholar]

- 15.Shew W. L., Plenz D., The functional benefits of criticality in the cortex. Neuroscientist 19, 88–100 (2012). [DOI] [PubMed] [Google Scholar]

- 16.Kinouchi O., Copelli M., Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2, 348–351 (2006). [Google Scholar]

- 17.Muñoz M. A., Colloquium: Criticality and dynamical scaling in living systems. Rev. Mod. Phys. 90, 031001 (2018). [Google Scholar]

- 18.Srinivasa N., Stepp N. D., Cruz-Albrecht J., Criticality as a set-point for adaptive behavior in neuromorphic hardware. Front. Neurosci. 9, 449 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Cocchi L., Gollo L. L., Zalesky A., Breakspear M., Criticality in the brain: A synthesis of neurobiology, models and cognition. Prog. Neurobiol. 158, 132–152 (2017). [DOI] [PubMed] [Google Scholar]

- 20.Chialvo D. R., Emergent complex neural dynamics. Nat. Phys. 6, 744–750 (2010). [Google Scholar]

- 21.Albert R., Barabási A.-L., Statistical mechanics of complex networks. Rev. Mod. Phys. 74, 47–97 (2002). [Google Scholar]

- 22.Fraiman D., Balenzuela P., Foss J., Chialvo D. R., Ising-like dynamics in large-scale functional brain networks. Phys. Rev. E. 79, 061922 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Sethna J. P., Dahmen K. A., Myers C. R., Crackling noise. Nature 410, 242–250 (2001). [DOI] [PubMed] [Google Scholar]

- 24.Beggs J. M., Plenz D., Neuronal avalanches in neocortical circuits. J. Neurosci. 23, 11167–11177 (2003). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Friedman N., Ito S., Brinkman B. A. W., Shimono M., DeVille R. E. L., Dahmen K. A., Beggs J. M., Butler T. C., Universal critical dynamics in high resolution neuronal avalanche data. Phys. Rev. Lett. 108, 208102 (2012). [DOI] [PubMed] [Google Scholar]

- 26.Ponce-Alvarez A., Jouary A., Privat M., Deco G., Sumbre G., Whole-brain neuronal activity displays crackling noise dynamics. Neuron 100, 1446–1459.e6 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Fontenele A. J., de Vasconcelos N. A. P., Feliciano T., Aguiar L. A. A., Soares-Cunha C., Coimbra B., Dalla Porta L., Ribeiro S., Rodrigues A. J., Sousa N., Carelli P. V., Copelli M., Criticality between cortical states. Phys. Rev. Lett. 122, 208101 (2019). [DOI] [PubMed] [Google Scholar]

- 28.D. Stauffer, A. Aharony, Introduction To Percolation Theory (CRC Press, ed. 2, 1992). [Google Scholar]

- 29.Sattar A., Fostner S., Brown S. A., Quantized conductance and switching in percolating nanoparticle films. Phys. Rev. Lett. 111, 136808 (2013). [DOI] [PubMed] [Google Scholar]

- 30.Dunbar A. D. F., Partridge J. G., Schulze M., Brown S. A., Morphological differences between Bi, Ag and Sb nano-particles and how they affect the percolation of current through nano-particle networks. Eur. Phys. J. D 39, 415–422 (2006). [Google Scholar]

- 31.Fostner S., Brown R., Carr J., Brown S. A., Continuum percolation with tunneling. Phys. Rev. B 89, 075402 (2014). [Google Scholar]

- 32.Bose S. K., Mallinson J. B., Gazoni R. M., Brown S. A., Stable self-assembled atomic-switch networks for neuromorphic applications. IEEE Trans. Electron Devices 64, 5194–5201 (2017). [Google Scholar]

- 33.Grimaldi C., Theory of percolation and tunneling regimes in nanogranular metal films. Phys. Rev. B 89, 214201 (2014). [Google Scholar]

- 34.Fostner S., Brown S. A., Neuromorphic behavior in percolating nanoparticle films. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 92, 052134 (2015). [DOI] [PubMed] [Google Scholar]

- 35.Voss R. F., The fractal dimension of percolation cluster hulls. J. Phys. A 17, L373–L377 (1984). [Google Scholar]

- 36.Palva J. M., Zhigalov A., Hirvonen J., Korhonen O., Linkenkaer-Hansen K., Palva S., Neuronal long-range temporal correlations and avalanche dynamics are correlated with behavioral scaling laws. Proc. Natl. Acad. Sci. U.S.A. 110, 3585–3590 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Lowen S. B., Teich M. C., Fractal renewal processes generate 1/f noise. Phys. Rev. E 47, 992–1001 (1993). [DOI] [PubMed] [Google Scholar]

- 38.Meisel C., Bailey K., Achermann P., Plenz D., Decline of long-range temporal correlations in the human brain during sustained wakefulness. Sci. Rep. 7, 11825 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Touboul J., Destexhe A., Power-law statistics and universal scaling in the absence of criticality. Phys. Rev. E 95, 012413 (2017). [DOI] [PubMed] [Google Scholar]

- 40.Timme N. M., Marshall N. J., Bennett N., Ripp M., Lautzenhiser E., Beggs J. M., Criticality maximizes complexity in neural tissue. Front. Physiol. 7, 425 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Shew W. L., Clawson W. P., Pobst J., Karimipanah Y., Wright N. C., Wessel R., Adaptation to sensory input tunes visual cortex to criticality. Nat. Phys. 11, 659–663 (2015). [Google Scholar]

- 42.Baldassarri A., Colaiori F., Castellano C., Average shape of a fluctuation: Universality in excursions of stochastic processes. Phys. Rev. Lett. 90, 060601 (2003). [DOI] [PubMed] [Google Scholar]

- 43.Roberts J. A., Iyer K. K., Finnigan S., Vanhatalo S., Breakspear M., Scale-free bursting in human cortex following hypoxia at birth. J. Neurosci. 34, 6557–6572 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Schmelzer J. Jr., Brown S. A., Wurl A., Hyslop M., Blaikie R. J., Finite-size effects in the conductivity of cluster assembled nanostructures. Phys. Rev. Lett. 88, 226802 (2002). [DOI] [PubMed] [Google Scholar]

- 45.Marshall N., Timme N. M., Bennett N., Ripp M., Lautzenhiser E., Beggs J. M., Analysis of power laws, shape collapses, and neural complexity: New techniques and MATLAB support via the NCC toolbox. Front. Physiol. 7, 250 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Clauset A., Shalizi C. R., Newman M. E. J., Power-law distributions in empirical data. SIAM Rev. 51, 661–703 (2009). [Google Scholar]

- 47.Vrieze S. I., Model selection and psychological theory: A discussion of the differences between the Akaike information criterion (AIC) and the Bayesian information criterion (BIC). Psychol. Methods 17, 228–243 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Yaghoubi M., de Graaf T., Orlandi J. G., Girotto F., Colicos M. A., Davidsen J., Neuronal avalanche dynamics indicates different universality classes in neuronal cultures. Sci. Rep. 8, 3417 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

Supplementary Materials

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/5/11/eaaw8438/DC1

Fig. S1. Illustration of different types of two-dimensional (2D) percolation models.

Fig. S2. Illustration of 2D percolation with tunneling and atomic switches.

Fig. S3. Choice of threshold.

Fig. S4. Choice of time bin size.

Fig. S5. Comparison of power law and other fits to the avalanche size and duration distributions.

Fig. S6. Scatter plot of α(τ).