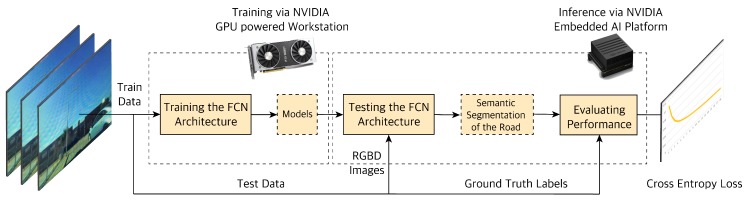

Figure 12. Overview of the experiment process. Training a deep neural network model requires substantial computing resources, so training is performed on a GPU-powered workstation. Inference is less computationally expensive; once the model is trained, an embedded GPU-enabled device is used for testing and for inference at the edge, inside the vehicle.