Abstract

Background

While identifying and cataloging unpublished studies from conference proceedings is generally recognized as a good practice during systematic reviews, controversy remains whether to include study results that are reported in conference abstracts. Existing guidelines provide conflicting recommendations.

Main body

The main argument for including conference abstracts in systematic reviews is that abstracts with positive results are preferentially published, and published sooner, as full-length articles compared with other abstracts. Arguments against including conference abstracts are that (1) searching for abstracts is resource-intensive, (2) abstracts may not contain adequate information, and (3) the information in abstracts may not be dependable. However, studies comparing conference abstracts and fully published articles of the same study find only minor differences, usually with conference abstracts presenting preliminary results. Other studies that have examined differences in treatment estimates of meta-analyses with and without conference abstracts report changes in precision, but usually not in the treatment effect estimate. However, in some cases, including conference abstracts has made a difference in the estimate of the treatment effect, not just its precision. Instead of arbitrarily deciding to include or exclude conference abstracts in systematic reviews, we suggest that systematic reviewers should consider the availability of evidence informing the review. If available evidence is sparse or conflicting, it may be worthwhile to search for conference abstracts. Further, it is prudent to contact authors of abstracts and to search for protocols or trial registers to supplement the information presented in conference abstracts. If unique information from conference abstracts is included in a meta-analysis, a sensitivity analysis with and without the unique results should be conducted.

Conclusions

Under certain circumstances, it is worthwhile to search for and include results from conference abstracts in systematic reviews.

Background

Systematic reviewers aim to be comprehensive in summarizing the existing literature addressing specific research questions. This generally involves a thorough search for published studies as well as for ongoing or recently completed studies that are not yet published. Ongoing and recently completed studies are often identified through searches of registries, such as ClinicalTrials.gov, and of conference proceedings. While identifying and cataloging unpublished studies from conference proceedings is generally recognized as a good practice during systematic reviews, controversy remains whether to include study results that are reported in conference abstracts. Current guidelines are conflicting. The United States Agency for Healthcare Research and Quality (AHRQ), through its Effective Health Care Program, recommends that searches for conference abstracts be considered, but Cochrane and the United States National Academy of Sciences (NAS) both recommend always searching for and including conference abstracts in systematic reviews [1–3]. Our objectives in this commentary are to summarize the existing evidence both for and against the inclusion of conference abstracts in systematic reviews and to provide suggestions for systematic reviewers when deciding whether and how to include conference abstracts in systematic reviews.

Main text

Arguments for including conference abstracts in systematic reviews

The main argument for including conference abstracts in systematic reviews is that, by doing so, systematic reviewers can be more comprehensive. In our recent Cochrane methodology review, we reported that the proportion of subsequent full publication of studies presented at conferences is low [4]. We examined 425 biomedical research reports that followed the publication status of 307,028 studies presented as conference abstracts addressing a wide range of medical, allied health, and health policy fields. A meta-analysis of these 425 reports indicated that the overall full publication proportion was only 37% (95% confidence interval [CI], 35 to 39%) for abstracts of all types of studies and only 60% (95% CI, 52 to 67%) for abstracts of randomized controlled trials (RCTs). Through a survival analysis, we found that, among the 181 reports that evaluated time to publication, only 46% of abstracts of all types of studies and 69% of abstracts of RCTs were published, even after 10 years. Thus, at best, approximately 3 in 10 abstracts describing RCTs have never been published in full, implying that the voluntary participation and risk-taking by multitudes of patients have not led to fully realized contributions to science. We and others argue that the failure of trialists to honor their commitment to patients (that patient participation would contribute to science) represents an ethical problem [5, 6].

From a systematic reviewer’s perspective, even if the unpublished abstracts were a random 3 in 10 abstracts, restricting a systematic review search to only the published literature would amount to the loss of an immense amount of information and a corresponding loss of precision in meta-analytic estimates of treatment effect. However, publication is not a matter of random chance. Those conducting systematic reviews have long grappled with this problem, known as “publication bias.” Publication bias occurs when either the likelihood of, or the time to, publication of a study is affected by the direction of the study’s results [6–12]. The most frequent scenario for publication bias is when studies with “positive” (or “significant”) results are more likely to be published, or are published sooner, than studies with either null or negative results.

Publication bias can be conceptualized as occurring in two stages: (I) from a study’s end to presentation of its results at a conference (and publication of an accompanying conference abstract) and (II) from publication of a conference abstract to subsequent “full publication” of the study results, typically in a peer-reviewed journal article [13]. In the context of publication bias arising during stage II (i.e., if abstracts with positive or significant results are selectively published in full), systematic reviews relying solely on fully published studies can be biased because positive results would be overrepresented. This would lead to a falsely inflated (or biased) estimate of the treatment effect of the intervention being evaluated in the systematic review. Indeed, in our Cochrane methodology review, we found evidence of publication bias in the studies reported in the abstracts [4]. “Positive” results were associated with full publication, whether “positive” was defined as statistically significant results (risk ratio [RR] = 1.31, 95% CI 1.23 to 1.40) or as results whose direction favored the intervention (RR = 1.17, 95% CI 1.07 to 1.28). Furthermore, abstracts with statistically significant results were published in full sooner than abstracts with non-significant results [14–16], unearthing another aspect of bias that can arise when a systematic review is performed relatively soon after the completion of a trial(s) testing a new intervention.
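For readers less familiar with the risk ratios quoted above, the following is a minimal sketch of how a risk ratio and its 95% CI can be computed from a single two-by-two table. The counts are hypothetical and chosen only for illustration; the estimates in our review [4] were pooled across many reports, which this sketch does not attempt to reproduce. The function name `risk_ratio_ci` is our own.

```python
import math


def risk_ratio_ci(events_a, total_a, events_b, total_b, z=1.96):
    """Risk ratio of group A versus group B with a Wald-type CI on the log scale."""
    risk_a = events_a / total_a
    risk_b = events_b / total_b
    rr = risk_a / risk_b
    # Standard error of log(RR) for two independent binomial proportions.
    se_log_rr = math.sqrt(1 / events_a - 1 / total_a + 1 / events_b - 1 / total_b)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Hypothetical counts (not from the review): 120 of 200 abstracts with
# significant results were published in full, versus 90 of 200 abstracts
# with non-significant results.
rr, lower, upper = risk_ratio_ci(120, 200, 90, 200)
print(f"RR = {rr:.2f}, 95% CI {lower:.2f} to {upper:.2f}")
```

An RR above 1 with a CI that excludes 1 would indicate, in this hypothetical cohort, that abstracts with significant results were more likely to reach full publication.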

Arguments against including conference abstracts in systematic reviews

There are various arguments against including abstracts in systematic reviews. First, identifying relevant conferences, locating their abstracts, and sifting through the often thousands of abstracts can be challenging and resource-intensive. However, EMBASE, a commonly searched database during systematic reviews, now includes conference abstracts from important medical conferences, dating back to 2009 [17]. Inclusion of conference abstracts in this searchable database means searching for conference abstracts is less resource-intensive than in the past. Second, largely driven by their brevity, abstracts may not contain adequate information for systematic reviewers to appraise the design, methods, risk of bias, outcomes, and results of studies reported in the abstracts [18–21]. Third, the dependability of results presented in abstracts also is questionable [22–24], which occurs at least in part because (1) most abstracts are not peer-reviewed and (2) results reported in abstracts are often preliminary and/or based on limited analyses conducted in a rush to meet conference deadlines. The most frequent types of conflicting information between abstract and full-length journal article have pertained to authors or authorship order, sample size, and estimates of treatment effects (their magnitude or, less frequently, direction) [25–31]. Mayo-Wilson and colleagues examined the agreement in reported data across a range of unpublished sources related to the same studies in bipolar depression and neuropathic pain [21, 32]. As part of this effort, they compared abstracts with full-length journal articles and clinical study reports and reported that the information presented in abstracts was not dependable either in terms of methods or results.

What are we missing if we do not include conference abstracts in a systematic review?

Various studies have questioned whether the inclusion of “gray” literature or unpublished study results in a systematic review would change the estimates of treatment effect obtained during meta-analyses. Through “meta-epidemiologic” studies, investigators have examined the results of meta-analyses with and without conference abstracts and have reported conflicting, but generally small differences in results [21, 24, 33]. Evidence from a recent systematic review indicates that the inclusion of gray literature (defined more broadly than just conference abstracts) in meta-analyses may change the results from significant to non-significant or from non-significant to significant, or may not change the results [24, 33]. We conducted a similar analysis in our Cochrane methodology review [4]. We were able to do this because some of our included reports that examined full publication of conference abstracts were themselves only available as conference abstracts. Our analysis found that inclusion of reports that were conference abstracts did not change the strength or precision of our meta-analytic results. In our review, it would have been possible to exclude conference abstracts and retain accurate and precise results.

Implications of reasons for non-publication of conference abstracts

The most common reason provided by authors of abstracts for not publishing their study results in full has been reported to be simply “lack of time,” and not that the results were considered unreliable or negative [34]. This finding suggests that the identification of an abstract without a corresponding full-length journal article should prompt systematic reviewers to seek additional evidence, for example by searching gray literature sources and/or contacting the authors. However, a reasonable argument could be made that, when the same information is available in both a published peer-reviewed article and an abstract for a given study, including the abstract in a systematic review would be superfluous and/or ill-advised because a likely more comprehensive and dependable source of the information, i.e., the peer-reviewed article, is available. Therefore, the presence of a journal article might obviate the need for including a corresponding conference abstract in a systematic review, unless unique outcomes are reported in the abstract.

Considerations when including conference abstracts in systematic reviews

Taken together, the evidence reviewed in this paper (summarized in Table 1) suggests that systematic reviewers should take a more nuanced approach to inclusion of conference abstracts. A simple yes or no to the question “Should we include conference abstracts in our systematic review?” is neither sufficient nor appropriate. One aspect to consider is the scope of the review. For example, will the conference abstracts be used to inform policy based on a cadre of systematic reviews or only used within a single review? Benzies and colleagues evaluated the usefulness of including conference abstracts in a “state-of-the-evidence” review and concluded that including conference abstracts validated the results of a search that included only the published literature [35]. These authors discussed four considerations on which to base the decision to include conference abstracts: (1) complexity of the intervention, (2) consensus in the existing literature, (3) importance of context in evaluating the effect of the intervention, and (4) presence of other evidence [35]. Others who have incorporated conference abstracts for decision-making have noted that the lack of, or conflicting results in, published evidence often requires inclusion of conference abstracts [36]. In some instances, results in abstracts can confirm the evidence found in fully published studies, but in other instances, abstracts can provide useful additions to the evidence [37].

Table 1.

Arguments for and against including conference abstracts in systematic reviews

| For | Against |
|---|---|
| Comprehensiveness increased | Resources may be inadequate for locating relevant conference abstracts |
| Potential for impact of publication bias decreased | Abstracts may not contain adequate information for systematic reviewers to appraise the design, methods, risk of bias, outcomes, and results of studies reported in the abstracts |
| Increased information leads to increased precision | Dependability of results presented in abstracts is questionable |

When considering the use of conference abstracts in systematic reviews, we largely agree with the recommendations presented in the AHRQ Methods Guide for Comparative Effectiveness Reviews [1]. Although these recommendations generally do not espouse including conference abstracts in systematic reviews, they provide excellent guidance on when including abstracts should be considered:

• Reviewers should routinely consider conducting a search of conference abstracts and proceedings to identify unpublished or unidentified studies.

• Consult the TEP [Technical Expert Panel] for suggestions on particular conferences to search and search those conferences specifically.

• Search for conference abstracts of any conference identified by reading the references of key articles.

• We do not recommend using conference abstracts for assessing selective outcome reporting and selective analysis reporting, given the variable evidence of concordance between conference abstracts and their subsequent full-text publications [1].

Our suggestions

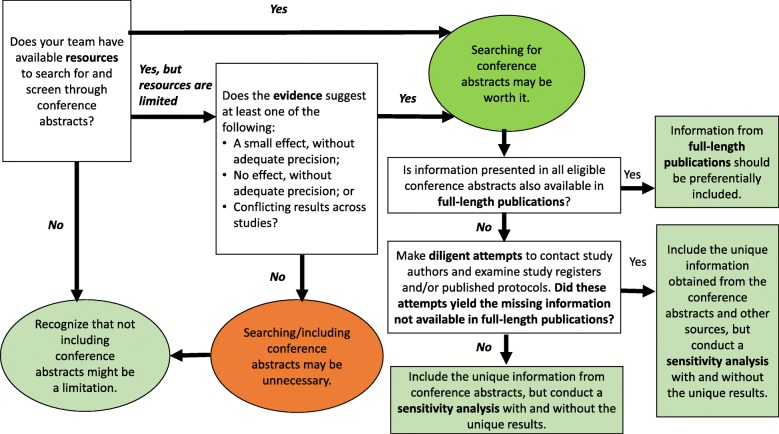

Based on the empirical findings summarized in this review and on our experience, we believe that generally relying on conference abstracts is problematic for the various reasons discussed. While meta-epidemiologic studies have shown that inclusion of abstracts does not greatly impact meta-analytic results, it can sometimes make a difference. The dilemma facing a systematic reviewer is to determine when it might. We suggest the following approach (summarized in Fig. 1). If the evidence suggests a sizeable effect, or the absence of one (i.e., with the estimate of effect centered at or near the null), with reasonable precision, searching for conference abstracts may be unnecessary. On the other hand, if the evidence does not show a sizeable effect, is imprecise, or is conflicting, then the resources spent finding and including conference abstracts may be worth it. In other words, if only a single study in full-length form is identified, or if the studies identified are few and small, then conference abstracts should probably be searched and included. We refrain from making specific suggestions for what should be construed as a “sizeable” effect. Magnitudes of effect sizes and thresholds for what is considered relevant can vary considerably across outcomes and across fields and disciplines. We also refrain from making specific suggestions for what should be construed as “reasonable precision” because of the various problems inherent in the use of statistical significance (e.g., arbitrariness, dependence on sample size) and the arbitrary thresholds for precision that use of statistical significance can engender [38–41].

Fig. 1.

Flow chart showing our suggestions for how to approach the use of conference abstracts in systematic reviews
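The approach described in the preceding paragraph can also be sketched as a small decision function. This is only an illustration of the prose, not a reproduction of Fig. 1: the class and field names are hypothetical, and every input is a reviewer's judgment, because we deliberately give no thresholds for a “sizeable” effect or “reasonable precision.”

```python
from dataclasses import dataclass


@dataclass
class EvidenceSummary:
    """Reviewer judgments about the fully published evidence (hypothetical fields)."""
    n_full_publications: int             # studies available as full-length reports
    studies_few_and_small: bool          # judgment: few studies, each small
    sizeable_effect_or_clear_null: bool  # judgment: sizeable effect, or estimate at or near the null
    reasonably_precise: bool             # judgment: estimate is reasonably precise
    conflicting: bool                    # judgment: published studies disagree


def should_search_conference_abstracts(evidence: EvidenceSummary) -> bool:
    """Return True when searching for conference abstracts may be worth the resources."""
    # A single full-length study, or few and small studies: search and include.
    if evidence.n_full_publications <= 1 or evidence.studies_few_and_small:
        return True
    # Imprecise or conflicting evidence: searching may be worth it.
    if evidence.conflicting or not evidence.reasonably_precise:
        return True
    # No sizeable effect and no clear null: searching may be worth it.
    if not evidence.sizeable_effect_or_clear_null:
        return True
    # Sizeable effect (or clear absence of one) with reasonable precision.
    return False


example = EvidenceSummary(
    n_full_publications=8,
    studies_few_and_small=False,
    sizeable_effect_or_clear_null=True,
    reasonably_precise=True,
    conflicting=False,
)
print(should_search_conference_abstracts(example))
```

Keeping the inputs as explicit judgments, rather than numeric cut-offs, mirrors our reluctance to impose thresholds that vary across outcomes and disciplines.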

If abstracts are indeed included in a systematic review, the consistent use of CONSORT reporting guidelines for abstracts [14] would facilitate extraction of information from abstracts. In many cases, however, these reporting guidelines are not followed [42], so we suggest that diligent attempts be made to contact authors of the abstracts and examine study registers, such as ClinicalTrials.gov, and published protocols to obtain all necessary unreported or unclear information on study methods and results. In addition, to examine the impact of including the abstracts, a sensitivity analysis should always be completed with and without conference abstracts.
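The sensitivity analysis suggested above can be sketched as follows. This is a minimal illustration under stated assumptions: the study data are hypothetical, the effect measure is a log risk ratio, and pooling uses a simple inverse-variance fixed-effect model. Many reviews would use a random-effects model and dedicated meta-analysis software instead; the function name `pool_fixed_effect` is our own.

```python
import math


def pool_fixed_effect(studies, z=1.96):
    """Inverse-variance fixed-effect pooling of log risk ratios."""
    weights = [1.0 / s["se"] ** 2 for s in studies]
    total_weight = sum(weights)
    pooled_log = sum(w * s["log_rr"] for w, s in zip(weights, studies)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return {
        "rr": math.exp(pooled_log),
        "lower": math.exp(pooled_log - z * pooled_se),
        "upper": math.exp(pooled_log + z * pooled_se),
    }


# Hypothetical studies: log risk ratio, its standard error, and report type.
studies = [
    {"id": "Trial A", "log_rr": math.log(0.80), "se": 0.20, "source": "article"},
    {"id": "Trial B", "log_rr": math.log(0.70), "se": 0.25, "source": "article"},
    {"id": "Trial C", "log_rr": math.log(0.95), "se": 0.30, "source": "abstract"},
    {"id": "Trial D", "log_rr": math.log(1.05), "se": 0.35, "source": "abstract"},
]

# Pool once with every study and once with full-length articles only.
with_abstracts = pool_fixed_effect(studies)
without_abstracts = pool_fixed_effect([s for s in studies if s["source"] != "abstract"])

for label, result in (("With abstracts", with_abstracts),
                      ("Without abstracts", without_abstracts)):
    print(f"{label}: RR = {result['rr']:.2f}, "
          f"95% CI {result['lower']:.2f} to {result['upper']:.2f}")
```

Comparing the two pooled estimates shows whether the abstracts shift the point estimate, narrow the confidence interval, or both.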

Conclusions

Based on the available evidence and on our experience, we suggest that instead of arbitrarily deciding to include conference abstracts or not in a systematic review, systematic reviewers should consider the availability of evidence. If available evidence is sparse or conflicting, it may be worthwhile to include conference abstracts. If results from conference abstracts are included, then it is necessary to make diligent attempts to contact the authors of the abstract and examine study registers and published protocols to obtain further and confirmatory information on methods and results.

Acknowledgements

Not applicable.

Abbreviations

- AHRQ: Agency for Healthcare Research and Quality

- CI: Confidence interval

- NAS: United States National Academy of Sciences

- RCT: Randomized controlled trial

- RR: Risk ratio

Authors’ contributions

RWS conceived the idea for the commentary. IJS developed Fig. 1, and both authors were involved in contributing to and critically reading the commentary. Both authors read and approved the final manuscript.

Funding

None.

Availability of data and materials

Not applicable.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Contributor Information

Roberta W. Scherer, Phone: +1(410) 502-4636, Email: rschere1@jhu.edu

Ian J. Saldanha, Email: ian_saldanha@brown.edu

References

- 1. Balshem H, Stevens A, Ansari M, Norris S, Kansagara D, Shamliyan T, et al. Finding Grey Literature Evidence and Assessing for Outcome and Analysis Reporting Biases When Comparing Medical Interventions: AHRQ and the Effective Health Care Program. Methods Guide for Comparative Effectiveness Reviews [Internet]. Rockville: Agency for Healthcare Research and Quality (US): 2008-AHRQ Methods for Effective Health Care. 2013.

- 2. Institute of Medicine (IOM). Knowing what works in health care: a roadmap for the nation. Washington, D.C.: The National Academies Press; 2008.

- 3. Cochrane handbook for systematic reviews of interventions version 5.1.0 [updated March 2011]. The Cochrane Collaboration 2011.

- 4. Scherer RW, Meerpohl JJ, Pfeifer N, Schmucker C, Schwarzer G, von Elm E. Full publication of results initially presented in abstracts. Cochrane Database Syst Rev. 2018;11:MR000005. doi: 10.1002/14651858.MR000005.pub4.

- 5. Antes G, Chalmers I. Under-reporting of clinical trials is unethical. Lancet. 2003;361:978–979. doi: 10.1016/S0140-6736(03)12838-3.

- 6. Schmucker C, Schell LK, Portalupi S, Oeller P, Cabrera L, Bassler D, Schwarzer G, Scherer RW, Antes G, von Elm E, Meerpohl JJ. Extent of non-publication in cohorts of studies approved by research ethics committees or included in trial registries. PLoS One. 2014;9:e114023. doi: 10.1371/journal.pone.0114023.

- 7. Dickersin K, Chan S, Chalmers TC, Sacks HS, Smith H Jr. Publication bias and clinical trials. Control Clin Trials. 1987;8:343–353. doi: 10.1016/0197-2456(87)90155-3.

- 8. Dickersin K, Min YI, Meinert CL. Factors influencing publication of research results. Follow-up of applications submitted to two institutional review boards. JAMA. 1992;267:374–378. doi: 10.1001/jama.1992.03480030052036.

- 9. Dickersin K, Min YI. NIH clinical trials and publication bias. Online J Curr Clin Trials. 1993.

- 10. Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337:867–872. doi: 10.1016/0140-6736(91)90201-Y.

- 11. Hopewell S, Loudon K, Clarke MJ, Oxman AD, Dickersin K. Publication bias in clinical trials due to statistical significance or direction of trial results. Cochrane Database Syst Rev. 2009;(1):MR000006. doi: 10.1002/14651858.MR000006.pub3.

- 12. Simes RJ. Publication bias: the case for an international registry of clinical trials. J Clin Oncol. 1986;4:1529–1541. doi: 10.1200/JCO.1986.4.10.1529.

- 13. von Elm E, Costanza MC, Walder B, Tramer MR. More insight into the fate of biomedical meeting abstracts: a systematic review. BMC Med Res Methodol. 2003;3:12. doi: 10.1186/1471-2288-3-12.

- 14. Hopewell S, Clarke M, Stewart L, Tierney J. Time to publication for results of clinical trials. Cochrane Database Syst Rev. 2007;18(2):MR000011.

- 15. Ioannidis JP. Effect of the statistical significance of results on the time to completion and publication of randomized efficacy trials. JAMA. 1998;279:281–286. doi: 10.1001/jama.279.4.281.

- 16. Stern JM, Simes RJ. Publication bias: evidence of delayed publication in a cohort study of clinical research projects. BMJ. 1997;315:640–645. doi: 10.1136/bmj.315.7109.640.

- 17. EMBASE content: List of conferences covered in Embase. https://www.elsevier.com/solutions/embase-biomedical-research/embase-coverage-and-content. Accessed 26 March 2019.

- 18. Scherer RW, Sieving PC, Ervin AM, Dickersin K. Can we depend on investigators to identify and register randomized controlled trials? PLoS One. 2012;7:e44183. doi: 10.1371/journal.pone.0044183.

- 19. Scherer RW, Huynh L, Ervin AM, Taylor J, Dickersin K. ClinicalTrials.gov registration can supplement information in abstracts for systematic reviews: a comparison study. BMC Med Res Methodol. 2013;13:79. doi: 10.1186/1471-2288-13-79.

- 20. Scherer RW, Huynh L, Ervin AM, Dickersin K. Using ClinicalTrials.gov to supplement information in ophthalmology conference abstracts about trial outcomes: a comparison study. PLoS One. 2015;10:e0130619. doi: 10.1371/journal.pone.0130619.

- 21. Mayo-Wilson E, Li T, Fusco N, Bertizzolo L, Canner JK, Cowley T, Doshi P, Ehmsen J, Gresham G, Guo N, et al. Cherry-picking by trialists and meta-analysts can drive conclusions about intervention efficacy. J Clin Epidemiol. 2017;91:95–110. doi: 10.1016/j.jclinepi.2017.07.014.

- 22. van Driel ML, De Sutter A, De Maeseneer J, Christiaens T. Searching for unpublished trials in Cochrane reviews may not be worth the effort. J Clin Epidemiol. 2009;62:838–844.e833. doi: 10.1016/j.jclinepi.2008.09.010.

- 23. Hopewell S, Clarke M, Askie L. Reporting of trials presented in conference abstracts needs to be improved. J Clin Epidemiol. 2006;59:681–684. doi: 10.1016/j.jclinepi.2005.09.016.

- 24. Hartling L, Featherstone R, Nuspl M, Shave K, Dryden DM, Vandermeer B. Grey literature in systematic reviews: a cross-sectional study of the contribution of non-English reports, unpublished studies and dissertations to the results of meta-analyses in child-relevant reviews. BMC Med Res Methodol. 2017;17:64. doi: 10.1186/s12874-017-0347-z.

- 25. Hopewell S. Assessing the impact of abstracts from the Thoracic Society of Australia and New Zealand in Cochrane reviews. Respirology. 2003;8:509–512. doi: 10.1046/j.1440-1843.2003.00508.x.

- 26. Rosmarakis ES, Soteriades ES, Vergidis PI, Kasiakou SK, Falagas ME. From conference abstract to full paper: differences between data presented in conferences and journals. FASEB J. 2005;19:673–680. doi: 10.1096/fj.04-3140lfe.

- 27. Tam VC, Hotte SJ. Consistency of phase III clinical trial abstracts presented at an annual meeting of the American Society of Clinical Oncology compared with their subsequent full-text publications. J Clin Oncol. 2008;26:2205–2211. doi: 10.1200/JCO.2007.14.6795.

- 28. Toma M, McAlister FA, Bialy L, Adams D, Vandermeer B, Armstrong PW. Transition from meeting abstract to full-length journal article for randomized controlled trials. JAMA. 2006;295:1281–1287. doi: 10.1001/jama.295.11.1281.

- 29. Saldanha IJ, Scherer RW, Rodriguez-Barraquer I, Jampel HD, Dickersin K. Dependability of results in conference abstracts of randomized controlled trials in ophthalmology and author financial conflicts of interest as a factor associated with full publication. Trials. 2016;17:213. doi: 10.1186/s13063-016-1343-z.

- 30. Weintraub WH. Are published manuscripts representative of the surgical meeting abstracts? An objective appraisal. J Pediatr Surg. 1987;22:11–13. doi: 10.1016/S0022-3468(87)80005-2.

- 31. McAuley L, Pham B, Tugwell P, Moher D. Does the inclusion of grey literature influence estimates of intervention effectiveness reported in meta-analyses? Lancet. 2000;356:1228–1231. doi: 10.1016/S0140-6736(00)02786-0.

- 32. Mayo-Wilson E, Fusco N, Li T, Hong H, Canner JK, Dickersin K. Multiple outcomes and analyses in clinical trials create challenges for interpretation and research synthesis. J Clin Epidemiol. 2017;86:39–50. doi: 10.1016/j.jclinepi.2017.05.007.

- 33. Schmucker CM, Blumle A, Schell LK, Schwarzer G, Oeller P, Cabrera L, von Elm E, Briel M, Meerpohl JJ. Systematic review finds that study data not published in full text articles have unclear impact on meta-analyses results in medical research. PLoS One. 2017;12:e0176210. doi: 10.1371/journal.pone.0176210.

- 34. Scherer RW, Ugarte-Gil C, Schmucker C, Meerpohl JJ. Authors report lack of time as main reason for unpublished research presented at biomedical conferences: a systematic review. J Clin Epidemiol. 2015;68:803–810. doi: 10.1016/j.jclinepi.2015.01.027.

- 35. Benzies KM, Premji S, Hayden KA, Serrett K. State-of-the-evidence reviews: advantages and challenges of including grey literature. Worldviews Evid-Based Nurs. 2006;3:55–61. doi: 10.1111/j.1741-6787.2006.00051.x.

- 36. Weizman AV, Griesman J, Bell CM. The use of research abstracts in formulary decision making by the Joint Oncology Drug Review of Canada. Appl Health Econ Health Policy. 2010;8:387–391. doi: 10.2165/11530510-000000000-00000.

- 37. Dundar Y, Dodd S, Williamson P, Dickson R, Walley T. Case study of the comparison of data from conference abstracts and full-text articles in health technology assessment of rapidly evolving technologies: does it make a difference? Int J Technol Assess Health Care. 2006;22:288–294. doi: 10.1017/S0266462306051166.

- 38. Amrhein V, Greenland S, McShane B. Scientists rise up against statistical significance. Nature. 2019;567:305–307. doi: 10.1038/d41586-019-00857-9.

- 39. Tong C. Statistical inference enables bad science; statistical thinking enables good science. Am Stat. 2019;73:20–25. doi: 10.1080/00031305.2018.1518264.

- 40. Ioannidis JPA. What have we (not) learnt from millions of scientific papers with P values? Am Stat. 2019;73:20–25. doi: 10.1080/00031305.2018.1447512.

- 41. Wasserstein RL, Schirm AL, Lazar NA. Moving to a world beyond “p < 0.05”. Am Stat. 2019;73:1–19. doi: 10.1080/00031305.2019.1583913.

- 42. Saric L, Vucic K, Dragicevic K, Vrdoljak M, Jakus D, Vuka I, Jelicic Kadic A, Saldanha IJ, Puljak L. Comparison of conference abstracts and full-text publications of randomized controlled trials presented at four consecutive World Congresses of Pain: reporting quality and agreement of results. Eur J Pain. 2018;23(1):107–116. doi: 10.1002/ejp.1289.
