Abstract

Background

Genomic data analyses such as Genome-Wide Association Studies (GWAS) or Hi-C studies are often faced with the problem of partitioning chromosomes into successive regions based on a similarity matrix of high-resolution, locus-level measurements. An intuitive way of doing this is to perform a modified Hierarchical Agglomerative Clustering (HAC), where only adjacent clusters (according to the ordering of positions within a chromosome) are allowed to be merged. But a major practical drawback of this method is its quadratic time and space complexity in the number of loci, which is typically of the order of to for each chromosome.

Results

By assuming that the similarity between physically distant objects is negligible, we are able to propose an implementation of adjacency-constrained HAC with quasi-linear complexity. This is achieved by pre-calculating specific sums of similarities, and storing candidate fusions in a min-heap. Our illustrations on GWAS and Hi-C datasets demonstrate the relevance of this assumption, and show that this method highlights biologically meaningful signals. Thanks to its small time and memory footprint, the method can be run on a standard laptop in minutes or even seconds.

Availability and implementation

Software and sample data are available as an R package, adjclust, that can be downloaded from the Comprehensive R Archive Network (CRAN).

Keywords: Hierarchical agglomerative clustering, Adjacency constraint, Segmentation, Ward’s linkage, Similarity, Min heap, Genome-Wide Association Studies and Hi-C

Background

Genetic information is coded in long strings of DNA organised in chromosomes. High-throughput sequencing such as RNAseq, DNAseq, ChipSeq and Hi-C makes it possible to study biological phenomena along the entire genome at a very high resolution [32].

In most cases, we expect neighboring positions to be statistically dependent. Using this a priori information is one way of addressing the complexity of genome-wide analyses. For instance, it is common practice to partition each chromosome into regions, because such regions hopefully correspond to biological relevant or interpretable units (such as genes or binding sites) and because statistical modelling and inference are simplified at the scale of an individual region. In simple cases, such regions are given (for example, in RNAseq analysis, only genic and intergenic regions are usually considered and differential analysis is commonly performed at the gene or transcript level). However, in more complex cases, regions of interest are unknown and need to be discovered by mining the data. This is the case in the two leading examples considered in this paper. In the context of Genome Wide Association Studies (GWAS), region-scale approaches taking haplotype blocks into account can result in substantial statistical gains [17]. Hi-C studies [12] have demonstrated the existence of topological domains, which are megabase-sized local chromatin interaction domains correlating with regions of the genome that constrain the spread of heterochromatin. Hence, the problem of partitioning a chromosome into biologically relevant regions based on measures of similarity between pairs of individual loci has been extensively studied for genomic applications.

Recovering the “best” partition of p loci for each possible number, K, of classes is equivalent to a segmentation problem (also known as “multiple changepoint problem”). In the simplest scenario where the signals to be segmented are piecewise-constant, such as in the case of DNA copy numbers in cancer studies, segmentation can be cast as a least squares minimization problem [23, 30]. More generally, kernel-based segmentation methods have been developed to perform segmentation on data described by a similarity measure [3, 22]. Such segmentation problems are combinatorial in nature, as the number of possible segmentations of p loci into K blocks (for a given ) is . The “best” segmentation for all can be recovered efficiently in a quadratic time and space complexity using dynamic programming. As discussed in Celisse et al. [7], in the case of kernel-based segmentation, this complexity cannot be improved without making additional assumptions on the kernel (or the corresponding similarity). Indeed, for a generic kernel, even computing the loss (that is, the least square error) of any given segmentation in a fixed number of segments K has a computational cost of .

The goal of this paper is to develop heuristics that can be applied to genomic studies in which the number of loci is so large (typically of the order of to ) that algorithms of quadratic time and space complexity cannot be applied. This paper stems from a modification of the classical hierarchical agglomerative clustering (HAC) [26], where only adjacent clusters are allowed to be merged. This simple constraint is well-suited to genomic applications, in which loci can be ordered along chromosomes provided that an assembled genome is available. Adjacency-constrained HAC can be seen as a heuristic for segmentation; it provides not only a single partition of the original loci, but a sequence of nested partitions.

The idea of incorporating such constraints was previously mentioned by Lebart [27] to incorporate geographical (two-dimensional) constraints to cluster socio-economic data, and by Michel et al. [28] to cluster functional Magnetic Resonance Imaging (fMRI) data into contiguous (three-dimensional) brain regions. The totally ordered case that is the focus of this paper has been studied by Grimm [19], and an R package implementing this algorithm, rioja [25], has been developed.1 However, the algorithm remains quadratic in both time and space. Its time complexity cannot be improved because all of the similarities are used in the course of the algorithm. To circumvent this difficulty, we assume that the similarity between physically distant loci is zero, where two loci are deemed to be “physically distant” if they are separated by more than h other loci. The main contribution of this paper is to propose an adjacency-constrained clustering algorithm with quasi-linear complexity [namely, in space and in time] under this assumption, and to demonstrate its relevance for genomic studies. This algorithm is obtained by combining (i) constant-time calculation of Ward’s likage after a pre-calculation step of linear time and space complexity, and (ii) storage of candidate fusions in a binary heap.

The rest of the paper is organized as follows. In “Method” section we describe the algorithm, its time and space complexity and its implementation. The resulting segmentation method is then applied to GWAS datasets (“Linkage disequilibrium block inference in GWAS” section) and to Hi-C datasets (“Hi-C analysis” section), in order to illustrate that the above assumption makes sense in such studies, and that the proposed methods can be used to recover biologically relevant signals.

Method

Adjacency-constrained HAC with Ward’s linkage

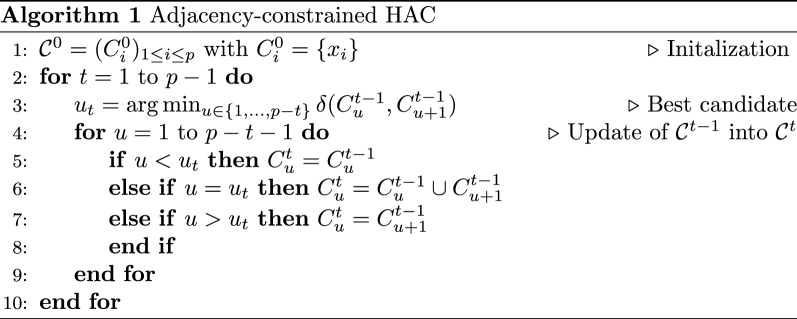

In its unconstrained version, HAC starts with a trivial clustering where each object is in its own cluster and iteratively merges the two most similar clusters according to a distance function called a linkage criterion. We focus on Ward’s linkage, which was defined for clustering objects taking values in the Euclidean space . Formally, Ward’s linkage between two clusters C and defines the distance between two clusters as the increase in the error sum of squares (or equivalently, as the decrease in variance) when C and are merged: , where is the Error Sum of Squares of cluster C (also known as “inertia of C”) and . It is one of the most widely used linkages because of its natural interpretation in terms of within/between cluster variance and because HAC with Ward’s linkage can be seen as a greedy algorithm for least square minimization, similarly to the k-means algorithm. In this paper, the p objects to be clustered are assumed to be ordered by their indices . We focus on a modification of HAC where only adjacent clusters are allowed to be merged. This adjacency-constrained HAC is described in Algorithm 1.

An implementation in Fortran of this algorithm was provided by Grimm [19]. This implementation has been integrated in the R package rioja [25].

Extension to general similarities

HAC and adjacency-constrained HAC are frequently used when the objects to be clustered do not belong to but are described by pairwise dissimilarities that are not necessarily Eulidean distance matrices. This case has been formally studied in Székely and Rizzo [35], Strauss and von Maltitz [34], Chavent et al. [8] and generally involves extending the linkage formula by making an analogy between the dissimilarity and the distance in (or the squared distance in some cases). These authors have shown that the simplified update of the linkage at each step of the algorithm, known as the Lance-Williams formula, is still valid in this case and that the objective criterion can be interpreted as the minimization of a so-called “pseudo inertia”. A similar approach can be used to extend HAC to data described by an arbitrary similarity between objects, , using a kernel framework as in [1, 31]. More precisely, when S is positive definite, the theory of Reproducing Kernel Hilbert Spaces [4] implies that the data can be embedded in an implicit Hilbert space. This allows to formulate Ward’s linkage between any two clusters in terms of the similarity using the so-called “kernel trick”: ,

| 1 |

where only depends on S and not on the embedding. This expression shows that Ward’s Linkage also has a natural interpretation as the decrease in average intra-cluster similarity after merging two clusters. Equation (1) is proved in Section S1.1 of Additional file 1.

Extending this approach to the case of a general (that is, possibly non-positive definite) similarity matrix has been studied in Miyamoto et al. [29]. Noting that (i) for a large enough , the matrix is positive definite and that (ii) , Miyamoto et al. [29, Theorem 1] concluded that applying Ward’s HAC to S and yields the exact same hierarchy, only shifting the linkage values by . This result, which a fortiori holds for the adjacency-constrained Ward’s HAC, justifies the use of Eq. (1) in the case of a general similarity matrix.

Band similarity assumption

In the case described in “Adjacency-constrained HAC with Ward’s linkage” section where the p objects to be clustered belong to , with , the computation of Ward’s linkage between two clusters can be done in by exploiting its explicit alternative formulation as the distance between centers of gravity. In such cases, it is possible to obtain unconstrained HAC in in time [14], and lower complexities could possibly be achieved for adjacency-constrained HAC. However, we focus in this paper in the situation described in “Extension to general similarities” section, where the input objects are represented by pairwise similarities. In such cases there is generally no explicit or finite-dimensional representation of the centers of gravity, and the time complexity of adjacency-constrained HAC (e.g. in rioja) is intrinsically quadratic in p because all of the similarities are used to compute all of the required linkage values (Algorithm 1, line 3).

Note that the implementation provided in rioja is also quadratic in space, as it takes as an input a (dense) dissimilarity matrix. However, Algorithm 1 can be made sub-quadratic in space in situations where the similarity matrix is sparse (see Ah-Pine and Wang [1] for similar considerations in the unconstrained case) or when the similarities can be computed on the fly, that is, at the time they are required by the algorithm, as in Dehman et al. [11].

In applications where adjacency-constrained clustering is relevant, such as Hi-C and GWAS data analysis, this quadratic time complexity is a major practical bottleneck because p is typically of the order of to for each chromosome. Fortunately, in such applications it also makes sense to assume that the similarity between physically distant objects is small. Specifically, we assume that S is a band matrix of bandwidth , where : for . This assumption is not restrictive, as it is always fulfilled for . However, we will be mostly interested in the case where . In the next section, we introduce an algorithm with improved time and space complexity under this band similarity assumption.

Algorithm

Ingredients

Our proposed algorithm relies on (i) constant-time calculation of each of the Ward’s linkages involved at line 3 of Algorithm 1 using Eq. (1), and (ii) storage of the candidate fusions in a min-heap. These elements are described in the next two subsections.

Ward’s linkage as a function of pre-calculated sums

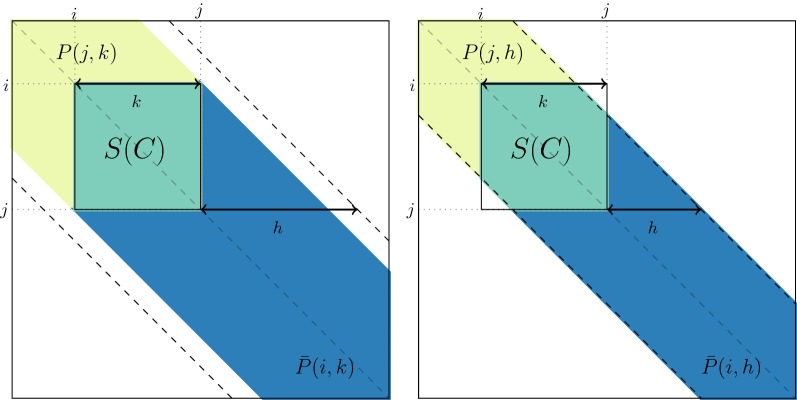

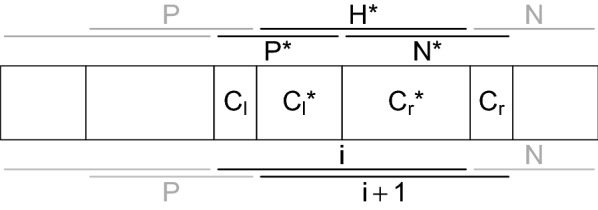

The key point of this subsection is to show that the sums of similarities involved in Eq. (1) may be expressed as a function of certain pre-calculated sums. We start by noting that the sum of all similarities in any cluster of size can easily be obtained from sums of elements in the first subdiagonals of S. To demonstrate that this is the case we define, for , P(r, l) as the sum of all elements of S in the first l subdiagonals of the upper-left block of S. Formally,

| 2 |

and symmetrically, . This notation is illustrated in Fig. 1, with . In the left panel, , while in the right panel, . In both panels, is the sum of elements in the yellow and green regions, while is the sum of elements in the green and blue regions. Because P and are sums of elements in pencil-shaped areas, we call P(r, l) a forward pencil and a backward pencil.

Fig. 1.

Example of forward pencils (in yellow and green) and backward pencils (in green and blue), and illustration of Eq. (3) for cluster . Left: cluster smaller than bandwidth (); right: cluster larger than bandwidth

Figure 1 illustrates that the sum of all similarities in cluster C can be computed from forward and backward pencils using the identity:

| 3 |

where and is the “full” pencil of bandwidth (which also corresponds to ). The above formula makes it possible to compute in constant time from the pencil sums using Eq. (1). By construction, all the bandwidths of the pencils involved are less than h. Therefore, only pencils P(r, l) and with and have to be pre-computed, so that the total number of pencils to compute and store is less than 2ph. These computations can be performed recursively in a time complexity. Further details about the time and space complexity of this pencil trick are given in Section S1.2 of Additional file 1.

Storing candidate fusions in a min-heap

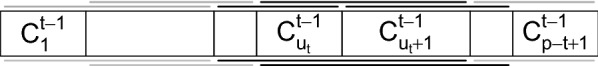

Iteration t of Algorithm 1 consists in finding the minimum of elements, corresponding to the candidate fusions between the clusters in , and merging the corresponding clusters. Storing the candidate fusions in an unordered array and calculating the minimum at each step would mean a quadratic time complexity. One intuitive strategy would be to make use of the fact that all but 2 to 3 candidate fusions at step t are still candidate fusions at step , as illustrated by Fig. 2 where candidate fusions are represented by horizontal bars above the clusters. However, maintaining a totally-ordered list of candidate fusions is not efficient because the cost of deleting and inserting an element in an ordered list is linear in p, again leading to a quadratic time complexity. Instead, we propose storing the candidate fusions in a partially-ordered data structure called a min heap [36]. This type of structure achieves an appropriate tradeoff between the cost of maintaining the structure and the cost of finding the minimum element at each iteration, as illustrated in Table 1.

Fig. 2.

The merging step in adjacency-constrained HAC in Algorithm 1. The clusters are represented by rectangular cells. Candidate fusions are represented by horizontal bars: above the corresponding pair of clusters at step t and below it at step , assuming that the best fusion is the one between the clusters of indices and . Gray bars indicate candidate fusions that are present at both steps

Table 1.

Time complexities () of the three main elementary operations required by one step of adjacency-constrained clustering (in columns), for three implementation options (in rows), for a problem of size p

| Find | Insert | Delete | Total | |

|---|---|---|---|---|

| Unordered array | p | 1 | p | p |

| Min heap | 1 | |||

| Ordered array | 1 | p | p | p |

A min heap is a binary tree such that the value of each node is smaller than the value of its two children. The advantage of this structure is that all the operations required in Algorithm 1 to create and maintain the list of candidate fusions can be done very efficiently. We provide a detailed description of the method, which is implemented in the adjclust package. We also give illustrations of the first steps of this algorithm when applied to the RLGH data set provided in the package rioja, that are relative abundances of 41 taxa in stratigraphic samples. A detailed description of this data set is provided in the help of the RLGH data set.

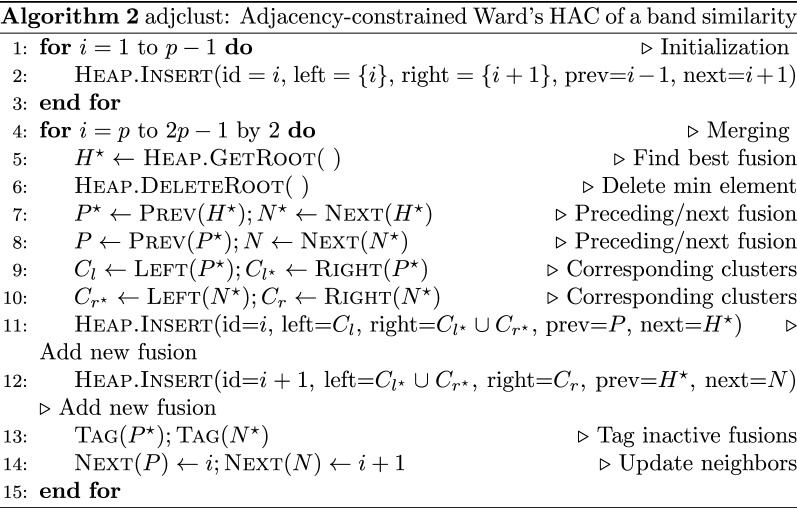

Proposed algorithm

Description and illustration

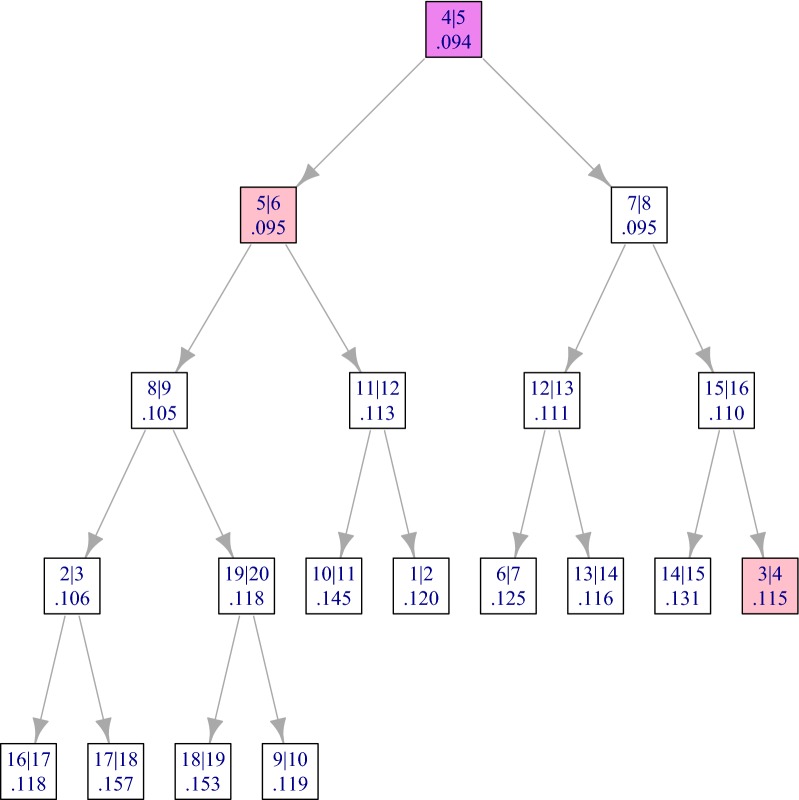

Our proposed algorithm is summarized by Algorithm 2. It is best expressed in terms of candidate fusions, contrary to Algorithm 1 which was naturally described in terms of clusters.

The initialization step (lines 1 to 3) consists in building the heap of candidate fusions between the p adjacent items. At the end of this step, the root of the heap contains the best such fusion. This is illustrated in Fig. 3 for the RLGH data set. The best candidate fusion, which is by definition the root of the tree, consists in merging and . It is highlighted in violet and the two “neighbor fusions”, i.e., the fusions that involve either or , are highlighted in pink. The initialization step has a time complexity because the complexity of inserting each of the elements in the heap is upper bounded by the maximal depth of the heap, that is, .

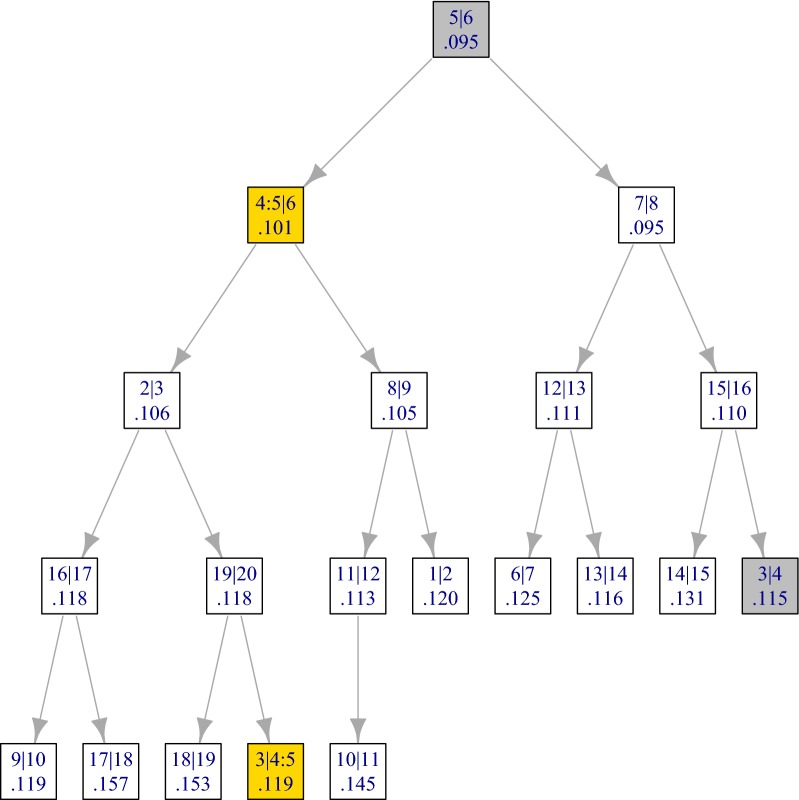

Fig. 3.

Min heap after the initialization step of the RLGH data set. Each node corresponds to a candidate fusion, and is represented by a label of the form giving the indices of the items to be merged, and (ii) the value of the corresponding linkage . The nodes corresponding to the best fusion and the two neighbor fusions are highlighted

As stated in the previous section, the merging step consists in finding the best candidate fusion (line 5), removing it from the heap (line 6) and inserting (up to) two possible fusions (lines 11–12). The other lines of the algorithm explain how the information regarding the adjacent fusions and clusters are retrieved and updated. The notation is illustrated in Fig. 4, elaborating on the example of Fig. 2.

Fig. 4.

Illustration of the result of a merging step in Algorithm 2

The state of the heap after the first fusion is illustrated by Fig. 5, where the two new candidate fusions are highlighted in yellow. The two fusions highlighted in grey are the neighbors of the first fusion.

Fig. 5.

Min heap after the first merging step for the RLGH data set. The nodes corresponding to the fusion that have changed since initialization (Fig. 3) are highlighted

In Algorithm 2, we have omitted several points for simplicity and conciseness of exposition. For a more complete description, the following remarks can be made:

The calculation of the linkage is not mentioned explicitly in the calls to Heap.Insert. As explained in “Ward’s linkage as a function of pre-calculated sums” section, the linkage between any two clusters can be calculated in constant time from pre-calculated pencil sums.

Algorithm 2 should take appropriate care of cases when the best fusion involves the first or last cluster. In particular, only one new fusion is defined and inserted in such cases. This is taken care of in the adjclust package, but not in Algorithm 2 for simplicity of exposition.

At each merging step the algorithm also tags as inactive the fusions involving the merged clusters (13). Indeed, once a cluster is fused with its left neighbor it can no longer be fused with its right neighbor and vice-versa. These fusions are highlighted in pink in Fig. 3 and in gray (once tagged) in Fig. 5. In order to avoid invalid fusions, each candidate fusion has an active/inactive label (represented by the gray highlight in Fig. 5), and when retrieving the next best candidate fusion (line 5), the min heap is first cleaned by deleting its root as long as it corresponds to an inactive fusion. In the course of the whole algorithm, this additional cleaning step will at worst delete 2p roots for a total complexity of .

The insertion instructions in Algorithm 2 indicate that the heap not only contains the value of the candidate fusions, but also the left and right clusters of each fusion, and the preceding and next candidate fusions in the order of the original objects to be clustered. In practice this side information is not actually stored in the heap, but in a dedicated array, together with the values of the corresponding linkage and the validity statuses of each candidate fusion. The heap only stores the index of each fusion in that array. The state of this array before and after the first fusion for the RLGH data set are given in Tables 2 and 3.

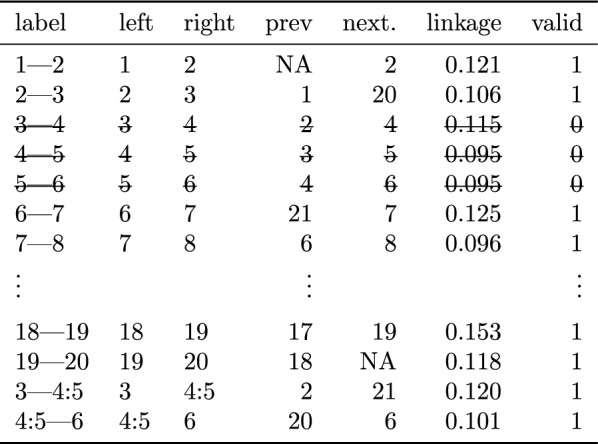

Table 2.

State of the array after initialization of the clustering for the RLGH data set, as in Fig. 3

| Left | Right | Prev | Next | Linkage | Valid |

|---|---|---|---|---|---|

| 1 | 2 | NA | 2 | 0.121 | 1 |

| 2 | 3 | 1 | 3 | 0.106 | 1 |

| 3 | 4 | 2 | 4 | 0.115 | 1 |

| 4 | 5 | 3 | 5 | 0.095 | 1 |

| 5 | 6 | 4 | 6 | 0.095 | 1 |

| 18 | 19 | 17 | 19 | 0.153 | 1 |

| 19 | 20 | 18 | NA | 0.118 | 1 |

Table 3.

State of the array after the first merge in the clustering for the RLGH data set, as in Fig. 5

Complexity of the proposed algorithm

By pre-calculating the ph initial pencils recursively using cumulative sums, the time complexity of the pre-computation step is ph and the time complexity of the computation of the linkage of the merged cluster with its two neighbors is (see Section S1.2 of Additional file 1 for further details). Its total time complexity is thus , where comes from the pre-computation of pencils, and comes from the p iterations of the algorithm (to merge clusters from p clusters up to 1 cluster), each of which has a complexity of . The space complexity of this algorithm is because the size of the heap is and the space complexity of the pencil pre-computations is . Therefore, the method achieves a quasi-linear (linearithmic) time complexity and linear space complexity when , which in our experience is efficient enough for analyzing large genomic datasets.

Implementation

Our method is available in the R package adjclust, using an underlying implementation in C and available on CRAN.2 Additional features have been implemented to make the package easier to use and results easier to interpret. These include:

Plots to display the similarity or dissimilarity together with the dendrogram and a clustering corresponding to a given level of the hierarchy as illustrated in Additional file 1: Figure S2;

Wrappers to use the method with SNP data or Hi-C data that take data from standard bed files or outputs of the packages snpStats and HiTC respectively;

A function to guide the user towards a relevant cut of the dendrogram (and thus a relevant clustering). In practice the underlying number of clusters is rarely known, and it is important to choose one based on the data. Two methods are proposed in adjclust: the first is based on a broken stick model [6] for the dispersion. Starting from the root of the dendrogram, the idea is to iteratively check whether the decrease in within-cluster variance corresponding to the next split can or cannot be explained by a broken stick model and to stop if it can. To the best of our knowledge this broken stick strategy is ad hoc in the sense that it does not have a statistical justification in terms of model selection, estimation of the signal, or consistency. The second method is based on the slope heuristic that is statistically justified in the case of segmentation problems [3, 18], for which HAC provides an approximate solution. This later approach is implemented using the capushe package [2], with a penalty shape of .

Clustering with spatial constraints has many different applications in genomics. The next two sections illustrate the relevance of our adjacency constraint clustering approach in dealing with SNP and Hi-C data. In both cases samples are described by up to a few million variables. All simulations and figures were performed using the R package adjclust, version 0.5.7.

Linkage disequilibrium block inference in GWAS

Genome-Wide Association Studies (GWAS) seek to identify causal genomic variants associated with rare human diseases. The classical statistical approach for detecting these variants is based on univariate hypothesis testing, with healthy individuals being tested against affected individuals at each locus. Given that an individual’s genotype is characterized by millions of SNPs this approach yields a large multiple testing problem. Due to recombination phenomena, the hypotheses corresponding to SNPs that are close to each other along the genome are statistically dependent. A natural way to account for this dependence in the process is to reduce the number of hypotheses to be tested by grouping and aggregating SNPs [11, 20] based on their pairwise Linkage Disequilibrium (LD). In particular, a widely used measure of LD in the context of GWAS is the coefficient, which can be estimated directly from genotypes measured by genotyping array or sequencing data using standard methods [9]. The similarity induced by LD can be shown to be a kernel (see Section S1.3 of Additional file 1). Identifying blocks of LD may also be useful to define tag SNPs for subsequent studies, or to characterize the recombination phenomena.

Numerical experiments were performed on a SNP dataset coming from a GWA study on HIV [10] based on 317k Illumina genotyping microarrays. For the evaluation we used five data sets corresponding to five chromosomes that span the typical number of SNPs per chromosome observed on this array ( for chromosome 1, for chromosome 6, for chromosome 11, for chromosome 16 and for chromosome 21).

For each dataset, we computed the LD using the function ld of snpStats, either for all SNP pairs () or with a reduced number of SNP pairs, corresponding to a bandwidth The packages rioja [25] (which requires the full matrix to be given as a dist object3) and adjclust with sparse matrices of the class dgCMatrix (the default output class of ld) were then used to obtain hierarchical clusterings. All simulations were performed on a 64 bit Debian 4.9 server, with 512G of RAM, 3GHz CPU (192 processing units) and concurrent access. The available RAM was enough to perform the clustering on the full dataset () with rioja although we had previously noticed that rioja implementation could not handle more than 8000 SNPs on a standard laptop because of memory issues.

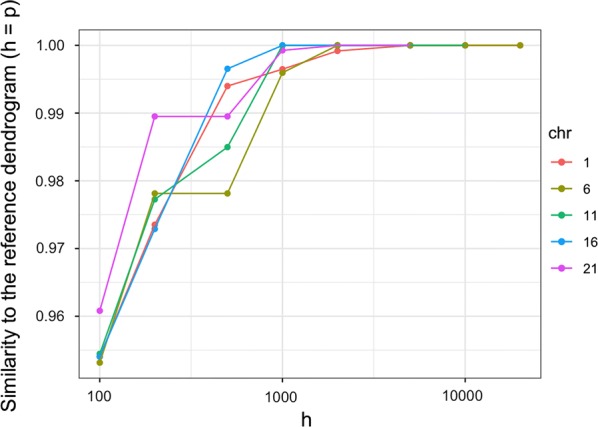

Quality of the band approximation

First, we evaluated the relevance of the band approximation by comparing the dendrogram obtained with to the reference dendrogram obtained with the full bandwidth (). To perform this comparison we simply recorded the index t of the last clustering step (among ) for which all the preceding fusions in the two dendrograms are identical. The quantity can then be interpreted as a measure of similarity between dendrograms, ranging from 0 (the first fusions are different) to 1 (the dendrograms are identical). Figure 6 displays the evolution of for different values of h for the five chromosomes considered here. For example, for all five chromosomes, at , the dendrograms differ from the reference dendrogram only in the last of the clustering step. For the dendrograms are exactly identical to the reference dendrogram. We also considered other criteria for evaluating the quality of the band approximation, including Baker’s Gamma correlation coefficient [5], which corresponds to the Spearman correlation between the ranks of fusion between all pairs of objects. The results obtained with these indices are not shown here because they were consistent with those reported in Fig. 6.

Fig. 6.

Quality of the band approximation as a function of the bandwidth h for five different chromosomes

One important conclusion that may be drawn from these results is that the influence of the bandwidth parameter is the same across chromosomes, that is, across values of p (that range from 5000 to 23000 in this experiment). Therefore, it makes sense to assume that h does not depend on p and that the time and space complexity of our proposed algorithm, which depends on h, is indeed quasi-linear in p.

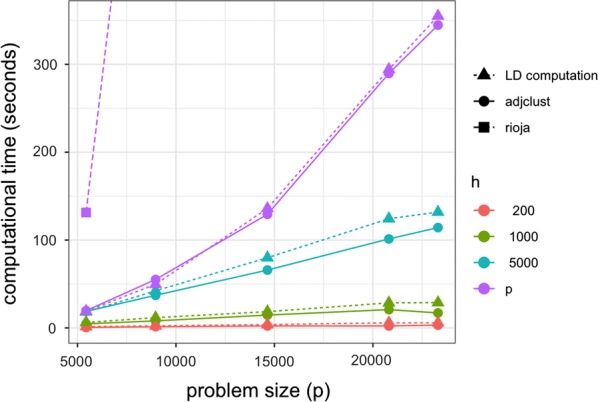

Scalability and computation times

Figure 7 displays the computation time for the LD matrix (dotted lines) and for the CHAC with respect to the size of the chromosome (x axis), both for rioja (dashed line) and adjclust (solid lines). As expected, the computation time for rioja did not depend on the bandwidth h, so we only represented . For adjclust, the results for varying bandwidths are represented by different colors. Only the bandwidths 200, 1000, and 5000 are representend in Fig. 7 for clarity.

Fig. 7.

Computation times versus p: LD matrices, for CHAC rioja and adjclust with varying values for the band h

Several comments can be made from Fig. 7. First, the computation times of rioja are much larger than those of adjclust, even when where both methods implement the exact same algorithm. For the largest chromosome considered here (chromosome 1, ), the running time of rioja is 18900 seconds (more than 5 h), compared to 345 seconds (less than 6 minutes). As expected, the complexity of adjclust with is quadratic in p, while it is essentially linear in p for fixed values of . For large values of p the gain of the band approximation is substantial: for (chromosome 1), the running time of adjclust for (which is a relevant value in this application according to the results of the preceding section) is of the order of 20 s.

We also note that regardless of the value of h, the total time needed for the clustering is of the order of (and generally lower than) the time needed for the computation of the LD.

Hi-C analysis

Hi-C protocol identifies genomic loci that are located nearby in vivo. These spatial co-locations include intra-chromosomal and inter-chromosomal interactions. After bioinformatics processing (alignment, filtering, quality control...), the data are provided as a sparse square matrix with entries that give the number of reads (contacts) between any given pair of genomic locus bins at genome scale. Typical sizes of bins are 40 kb, which results in more than 75,000 bins for the human genome. Constrained clustering or segmentation of intra-chromosomal maps is a tool frequently used to search for e.g., functional domains (called TADs, Topologically Associating Domains). A number of methods have been proposed for TAD calling (see Forcato et al. [15] for a review and comparison), among which the ones proposed by Fraser et al. [16], Haddad et al. [21] that take advantage of a hierarchical clustering, even using a constrained version for the second reference. In the first article, the authors proceed in two steps with a segmentation of the data into TADs using a Hidden Markov Model on the directionality index of Dixon, followed by a greedy clustering on these TADs, using the mean interaction as a similarity measure between TADs. Proceeding in two steps reduces the time required for the clustering, which is otherwise. However, from a statistical and modeling perspective these two steps would appear redundant. Also, pipelining different procedures (each of them with their sets of parameters) makes it very difficult to control errors. Haddad et al. [21] directly use adjacency-constrained HAC, with a specific linkage that is not equivalent to Ward’s. They do not optimize the computational time of the whole hierarchy, instead stopping the HAC when a measure of homogeneity of the cluster created by the last merge falls below a parameter. Both articles thus highlight the relevance of HAC for exploratory analysis of Hi-C data. Our proposed approach provides, in addition, a faster way to obtain an interpretable solution, using the interaction counts as a similarity and a h similar to the bandwidth of the Dixon index.

Data and method

Data used to illustrate the usefulness of constrained hierarchical clustering for Hi-C data came from Dixon et al. [12], Shen et al. [33]. Hi-C contact maps from experiments in mouse embryonic stem cells (mESC), human ESC (hESC), mouse cortex (mCortex) and human IMR90 Fibroblast (hIMR90) were downloaded from the authors’ website at http://chromosome.sdsc.edu/mouse/hi-c/download.html (raw sequence data are published on the GEO website, accession number GSE35156.

Even if these data do not perfectly fulfill the sparse band assumption, their sparsity is very high, especially outside a band centered on the diagonal. Taking as an example the largest and smallest chromosomes of the hESC data (chromosomes 1 and 22 respectively), the proportion of bin pairs with a positive count (present bin pairs) correspond to 10.7% and 25.8% respectively. This proportion is even smaller when focusing on bins pairs with a count larger than one (3.2% and 10.5% respectively). In addition, these bin pairs are mostly concentrated close to the diagonal: the proportion of present bin pairs that are located within a 10% diagonal band correspond to 60.1% and 45.6% of the present bin pairs, respectively. Finally, respectively 92.5% and 87.8% of the remaining present bin pairs have a count equal to only 1.

All chromosomes were processed similarly:

Counts were -transformed to reduce the distribution skewness;

Constrained hierarchical clustering was computed on -transformed data using, for the similarity, either the whole matrix () or the sparse approach with a sparse band size equal to ;

Model selection was finally performed using both the broken stick heuristic and the slope heuristic.

All computations were performed using the Genotoul cluster.

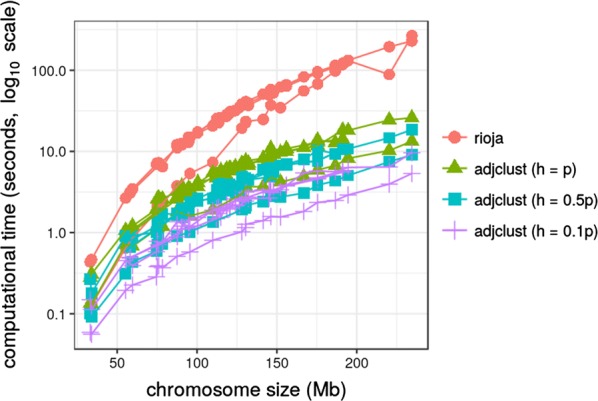

Influence of the bandwidth parameter

The effect of h (sparse band parameter) on computational time, dendrogram organization and clustering were assessed. Figure 8 gives the computational times versus the chromosome size for the three values of h together with the computational time obtained by the standard version of constrained hierarchical clustering as implemented in the R package rioja. As expected, the computational time is substantially reduced by the sparse version (even though not linearly with respect to h because of the preprocessing step that extracts the band around the diagonal), making the method suitable for dealing efficiently with a large number of chromosomes and/or a large number of Hi-C experiments. rioja, that cannot cope efficiently with the sparse band assumption, requires considerably more computational time (10 times the time needed by adjclust). In addition, the memory required by the two approaches is very different: adjclust supports sparse matrix representation (as implemented in the R package Matrix), which fits the way Hi-C matrices are typically stored (usually these matrices are given as rows with bin number pairs and associated count). For instance, the sparse version (dsCMatrix class) of the largest chromosome (chromosome 1) in the hESC data is 23 Mb, as opposed to 231 Mb for the full version. The sparse version of the smallest chromosome (chromosome 22) is 1.1 Mb, versus 5.2 Mb for the full version. The sparse version of the band for these two chromosomes is, respectively, 13.2 M and 0.4 Mb respectively.

Fig. 8.

Impact of sparsity on the computational time. Dots that correspond to the same datasets but different chromosomes are linked by a path

However, this gain in time and space did not impact the results of the method: the indexes of the first difference were computed between the dendrograms obtained by the full version () and by the two sparse versions () for every chromosome. For most of the clusterings there was no difference in merge for (with the similarity computed as in Fig. 6 always larger than 0.9992, and equal to 1 in more than 3 clusterings out of 4). For , the similarity ranged from 0.9811 to 0.9983. Baker’s Gamma index and Rand indices [24] for selected clusterings (both with broken stick and slope heuristic) confirmed this conclusion (results not shown).

Results

Additional file 1: Figure S1 provides the average cluster size for each chromosome versus the chromosome length. It shows that the average cluster size is fairly constant among the chromosomes and does not depend on the chromosome length. Both model selection methods found typical cluster sizes of 1-2 Mb, which is in line with what is reported in Forcato et al. [15] for some TAD callers.

Additional file 1: Figure S2 shows that clusters for a given chromosome (here chromosome 11 for hIMR90 and chromosome 12 for mCortex) can have different sizes and also different interpretations: some clusters exhibit a dense interaction counts (deep yellow) and are thus good TAD candidates whereas a cluster approximately located between bin 281 and bin 561 in chr12-mCortex map has almost no interaction and can be viewed as possibly separating two dense interaction regions.

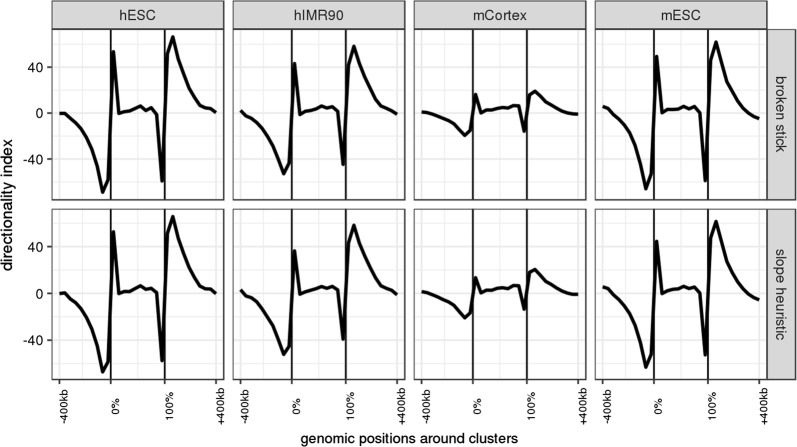

The directionality Index (DI, Dixon et al. [12]) quantifies a directional (upstream vs downstream) bias in interaction frequencies, based on a statistic. DI is the original method used for TAD calling in Hi-C. Its sign is expected to change and DI values are expected to show a sharp increase at TADs boundaries. Figure 9 displays the average DI, with respect to the relative bin position within the cluster and the absolute bin position outside the cluster. The clusters found by constrained HAC show a relation with DI that is similar to what is expected for standard TADs, with slightly varying intensities.

Fig. 9.

Evolution of the Directionality Index (DI) around clusters

Finally, boundaries of TADs are known to be enriched for the insulator binding protein CTCF Dixon et al. [12]. CTCF ChIP-seq peaks were retrieved from ENCODE [13] and the distribution of the number of the 20% most intense peaks was computed at Kb of cluster boundaries, as obtained with the broken stick heuristic (Additional file 1: Figure S3). The distribution also exhibited an enrichment at cluster boundaries, which indicates that the clustering is relevant with respect to the functional structure of the chromatin.

Conclusions

We have proposed an efficient approach to perform constrained hierarchical clustering based on kernel (or similarity) datasets with several illustrations of its usefulness for genomic applications. The method is implemented in a package that is shown to be fast and that currently includes wrappers for genotyping and Hi-C datasets. The package also provides two possible model selection procedures to choose a relevant clustering in the hierarchy. The output of the method is a dendrogram, which can be represented graphically, and provides a natural hierarchical model for the organization of the objects.

The only tuning parameter in our algorithm is the bandwidth h. The numerical experiments reported in this paper suggest that at least for GWAS and Hi-C studies, there exists a range of values for h such that (which implies very fast clustering) and the result of the HAC is identical or extremely close to the clustering obtained for . While the range of relevant values of h will depend on the particular application, an interesting extension of the present work would be to propose a data-driven choice of h by running the algorithm on increasing (yet small) values for h on a single chromosome, and deciding to stop when the dendrogram is stable enough. In addition, by construction, all groups smaller than h are identical in both clusterings (with and without the h-band approximation).

While HAC is a tool for exploratory data analysis, an important prospect of the present work will be to make use of the low time and memory footprint of the algorithm in order to perform inference on the estimated hierarchy using stability/resampling-based methods. Such methods could be used to propose alternative model selection procedures, or to compare hierarchies corresponding to different biological conditions, which has been shown to be relevant to Hi-C studies [16].

Supplementary information

Additional file 1. Supplementary methods and results.

Acknowledgements

The authors would like to warmly thank Michel Koskas for very interesting discussions, and for proposing a very elegant alternative implementation.

The authors are grateful to the GenoToul bioinformatics platform (INRA Toulouse, http://bioinfo.genotoul.fr/) and its staff for providing computing facilities. PN and NV would like to thank Shubham Chaturvedi for his contribution to the package adjclust via the R project in google summer of code 2017.

The authors would like to thank two anonymous referees whose comments helped us to improve the manuscript. All authors read and approved the final manuscript.

Authors’ contributions

CA and PN conceived the study. AD and GR proposed the algorithm. AD, PN and NV wrote the software. CA and PN performed the analysis and interpretation of Hi-GWAS data. NV performed the analysis and interpretation of Hi-C data. CA, PN, GR and NV wrote the manuscript. All authors read and approved the final manuscript.

Funding

This work was supported by CNRS project SCALES (Mission “Osez l’interdisciplinarité”). The work of GR was funded by an ATIGE from Génopole.

Availability of data and materials

GWAS data analyzed in this paper are available as described in “Linkage disequilibrium block inference in GWAS” section. Hi-C data analyzed in this paper are available as described in “Data and method” section.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Available on CRAN at https://cran.r-project.org/package=rioja.

The time needed to compute this matrix was 50-1000 times larger than the computation of the LD matrix itself. However, we did not include this in the total computation time required by rioja because we have not tried to optimize it from a computational point of view.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Christophe Ambroise, Email: christophe.ambroise@univ-evry.fr.

Alia Dehman, Email: alia.dehman@hyphen-stat.com.

Pierre Neuvial, Email: pierre.neuvial@math.univ-toulouse.fr.

Guillem Rigaill, Email: guillem.rigaill@inra.fr.

Nathalie Vialaneix, Email: nathalie.vialaneix@inra.fr.

Supplementary information

Supplementary information accompanies this paper at 10.1186/s13015-019-0157-4.

References

- 1.Boström Henrik, Knobbe Arno, Soares Carlos, Papapetrou Panagiotis., editors. Advances in Intelligent Data Analysis XV. Cham: Springer International Publishing; 2016. [Google Scholar]

- 2.Arlot S, Brault V, Baudry J-P, Maugis C, Michel B. capushe: CAlibrating Penalities Using Slope HEuristics, 2016. https://CRAN.R-project.org/package=capushe. R package version 1.1.1.

- 3.Arlot S, Celisse A, Harchaoui Z. A kernel multiple change-point algorithm via model selection. Preprint arXiv: 1202.3878, 2016.

- 4.Aronszajn N. Theory of reproducing kernels. Trans Am Math Soc. 1950;68(3):337–404. doi: 10.1090/S0002-9947-1950-0051437-7. [DOI] [Google Scholar]

- 5.Baker FB. Stability of two hierarchical grouping techniques case I: sensitivity to data errors. J Am Stat Assoc. 1974;69(346):440–445. doi: 10.1080/01621459.1974.10482971. [DOI] [Google Scholar]

- 6.Bennett KD. Determination of the number of zones in a biostratigraphical sequence. New Phytol. 1996;132(1):155–170. doi: 10.1111/j.1469-8137.1996.tb04521.x. [DOI] [PubMed] [Google Scholar]

- 7.Celisse A, Marot G, Pierre-Jean M, Rigaill G. New efficient algorithms for multiple change-point detection with reproducing kernels. Comput Stat Data Analy. 2018;128:200–220. doi: 10.1016/j.csda.2018.07.002. [DOI] [Google Scholar]

- 8.Chavent M, Kuentz-Simonet V, Labenne A, Saracco J. ClustGeo2: an R package for hierarchical clustering with spatial constraints. Comput Stat. 2018;33(4):1799–1822. doi: 10.1007/s00180-018-0791-1. [DOI] [Google Scholar]

- 9.Clayton D. snpStats: SnpMatrix and XSnpMatrix classes and methods, 2015; R package version 1.24.0.

- 10.Dalmasso C, Carpentier W, Meyer L, Rouzioux C, Goujard C, Chaix M-L, Lambotte O, Avettand-Fenoel V, Le Clerc S, de Senneville LD, Deveau C, Boufassa F, Debré P, Delfraissy J-F, Broet P, Theodorou I. Distinct genetic loci control plasma HIV-RNA and cellular HIV-DNA levels in HIV-1 infection: the ANRS Genome Wide Association 01 study. PLoS ONE. 2008;3(12):e3907. doi: 10.1371/journal.pone.0003907. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Dehman A, Ambroise C, Neuvial P. Performance of a blockwise approach in variable selection using linkage disequilibrium information. BMC Bioinform. 2015;16(1):148. doi: 10.1186/s12859-015-0556-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Dixon J, Selvaraj S, Yue F, Kim A, Li Y, Shen Y, Hu M, Liu J, Ren B. Topological domains in mammalian genomes identified by analysis of chromatin interactions. Nature. 2012;485:376–380. doi: 10.1038/nature11082. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.ENCODE Project Consortium An integrated encyclopedia of DNA elements in the human genome. Nature. 2012;489:57–74. doi: 10.1038/nature11247. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Eppstein D. Fast hierarchical clustering and other applications of dynamic closest pairs. J Exp Algor. 2000;5:1. doi: 10.1145/351827.351829. [DOI] [Google Scholar]

- 15.Forcato M, Nicoletti C, Pal K, Livi C, Ferrari F, Bicciato S. Comparison of computational methods for Hi-C data analysis. Nat Methods. 2017;14(7):679–685. doi: 10.1038/nmeth.4325. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Fraser J, Ferrai C, Chiariello A, Schueler M, et al. Hierarchical folding and reorganization of chromosomes are linked to transcriptional changes in cellular differentiation. Mol Syst Biol. 2015;11:852. doi: 10.15252/msb.20156492. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Gabriel SB, Schaffner SF, Nguyen H, Moore JM, Roy J, Blumenstiel B, Higgins J, DeFelice M, Lochner A, Faggart M, Liu-Cordero SN, Rotimi C, Adeyemo A, Cooper R, Ward R, Lander ES, Daly MJ, Altshuler D. The structure of haplotype blocks in the human genome. Science. 2002;296(5576):2225–2229. doi: 10.1126/science.1069424. [DOI] [PubMed] [Google Scholar]

- 18.Garreau D, Arlot S. Consistent change-point detection with kernels. Electron J Stat. 2018;12(2):4440–4486. doi: 10.1214/18-EJS1513. [DOI] [Google Scholar]

- 19.Grimm E. CONISS: a fortran 77 program for stratigraphically constrained analysis by the method of incremental sum of squares. Comput Geosci. 1987;13(1):13–35. doi: 10.1016/0098-3004(87)90022-7. [DOI] [Google Scholar]

- 20.Guinot F, Szafranski M, Ambroise C, Samson F. Learning the optimal scale for GWAS through hierarchical SNP aggregation. BMC Bioinform. 2018;19(1):459. doi: 10.1186/s12859-018-2475-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Haddad N, Vaillant C, Jost D. IC-Finder: inferring robustly the hierarchical organization of chromatin folding. Nucleic Acids Res. 2017;45(10):e81. doi: 10.1093/nar/gkx036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Harchaoui Z, Cappé O. Retrospective mutiple change-point estimation with kernels. In: Proceedings of the 14th workshop on statistical signal processing (SSP’07), Madison; 2007. p. 768–772. IEEE. 10.1109/SSP.2007.4301363.

- 23.Hocking TD, Schleiermacher G, Janoueix-Lerosey I, Boeva V, Cappo J, Delattre O, Bach F, Vert J-P. Learning smoothing models of copy number profiles using breakpoint annotations. BMC Bioinform. 2013;14(1):164. doi: 10.1186/1471-2105-14-164. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Hubert L, Arabie P. Comparing partitions. J Classif. 1985;2(1):193–218. doi: 10.1007/BF01908075. [DOI] [Google Scholar]

- 25.Juggins S. rioja: Analysis of Quaternary Science Data, 2018; URL https://cran.r-project.org/package=rioja. R package version 0.9-15.1.

- 26.Kaufman L, Rousseeuw PJ. Finding Groups in Data: an introduction to cluster analysis, volume 344 of Wiley series in probability and statistics. Hoboken: Wiley; 2009. [Google Scholar]

- 27.Lebart L. Programme d’agrégation avec contraintes. Les Cahiers de l’Analyse des Données, 1978; 3(3):275–87. http://www.numdam.org/item?id=CAD_1978__3_3_275_0.

- 28.Michel V, Gramfort A, Varoquaux G, Eger E, Keribin C, Thirion B. A supervised clustering approach for fmri-based inference of brain states. Pattern Recogn. 2012;45(6):2041–2049. doi: 10.1016/j.patcog.2011.04.006. [DOI] [Google Scholar]

- 29.Miyamoto S, Abe R, Endo Y, Takeshita J. Ward method of hierarchical clustering for non-Euclidean similarity measures. In Proceedings of the VIIth international conference of soft computing and pattern recognition (SoCPaR 2015); 2015.

- 30.Picard F, Robin S, Lavielle M, Vaisse C, Daudin J-J. A statistical approach for array-CGH data analysis. BMC Bioinform. 2005;6(27):1471–2105. doi: 10.1186/1471-2105-6-27. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Qin J, Lewis DP, Noble WS. Kernel hierarchical gene clustering from microarray expression data. Bioinformatics. 2003;19(16):2097–2104. doi: 10.1093/bioinformatics/btg288. [DOI] [PubMed] [Google Scholar]

- 32.Reuter JA, Spacek DV, Snyder MP. High-throughput sequencing technologies. Mol Cell. 2015;58(4):586–597. doi: 10.1016/j.molcel.2015.05.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Shen Y, Yu F, McCleary DF, Ye Z, Edsall L, Kuan S, Wagner U, Dixon J, Lee L, Lobanenkov VV, Ren B. A map of the cis-regularoty sequence in the mouse genome. Nature. 2012;488:116–120. doi: 10.1038/nature11243. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Strauss T, von Maltitz MJ. Generalising Ward’s method for use with Manhattan distances. PLoS ONE. 2017;12:e0168288. doi: 10.1371/journal.pone.0168288. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Székely GJ, Rizzo ML. Hierarchical clustering via joint between-within distances: extending Ward’s minimum variance method. J Classif. 2005;22(2):151–183. doi: 10.1007/s00357-005-0012-9. [DOI] [Google Scholar]

- 36.Williams JWJ. Algorithm 232-heapsort. Commun ACM. 1964;7(6):347–348. doi: 10.1145/512274.512284. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Additional file 1. Supplementary methods and results.

Data Availability Statement

GWAS data analyzed in this paper are available as described in “Linkage disequilibrium block inference in GWAS” section. Hi-C data analyzed in this paper are available as described in “Data and method” section.