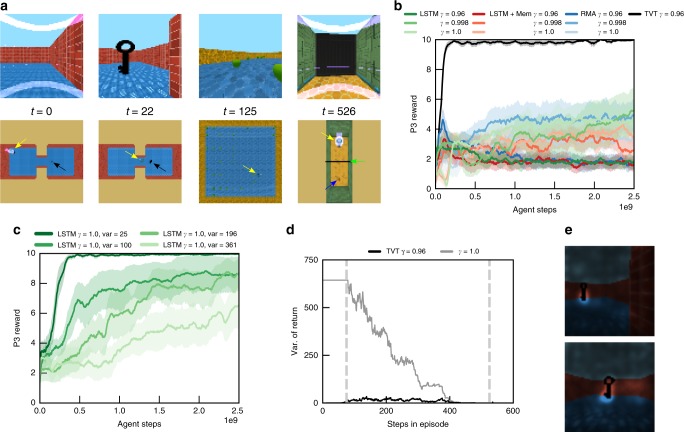

Fig. 4.

Type 2 causation tasks. a First-person (upper row) and top–down (lower row) views of the Key-to-Door task. The agent (indicated by yellow arrow) must pick up a key in P1 (black arrow) and collect apples in P2; if it possesses the key, it can open the door (green arrow) in P3 to acquire the distal reward (blue arrow) (Supplementary Movie 2). b Learning curves for P3 reward (TVT in black). Although this task requires no memory for the policy in P3, computing the value prediction still triggers TVT splice events, which promote key retrieval in P1. c Increasing the variance of the reward available in P2 disrupted the ability of LSTM agents to acquire the distal reward. d On 20 trials produced by a TVT agent, we compared the variance of the TVT bootstrapped return against that of the undiscounted return. The TVT return’s variance was orders of magnitude lower. Vertical lines mark phase boundaries. e Saliency analysis of the pixels in the P1 input image to which the value function gradient is sensitive. The key pops out in P1. In b, c, error bars represent standard errors across five agent training runs.