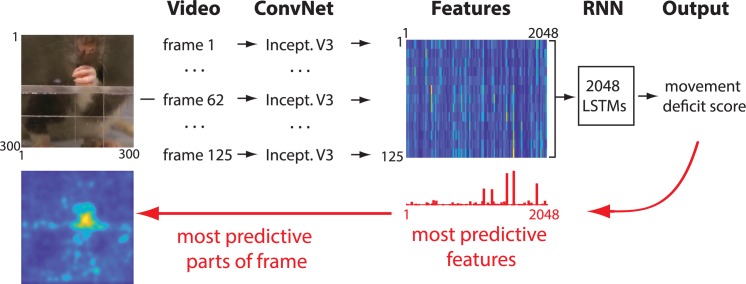

Fig 1. Network architecture.

Each frame is first passed through a ConvNet, Inception V3 (“Incept. V3”), which reduces dimensionality by extracting high-level image features [36]. The features from 125 successive video frames are then fed as input to an RNN composed of LSTM units, which can analyze temporal information across frames. The RNN outputs the movement deficit score for each video. After the network is trained, information is extracted from its weights to identify the image features and the parts of each video frame that were most predictive of the network score (red arrows). Network code is available at github.com/hardeepsryait/behaviour_net, and the weights of the trained model are available at http://people.uleth.ca/~luczak/BehavNet/g04-features.hdf5. See Methods for details. ConvNet, convolutional network; LSTM, long short-term memory; RNN, recurrent neural network.
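The two-stage pipeline in the caption (per-frame ConvNet features, then an LSTM that reads a fixed window of 125 frames and emits one score) can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation, which lives in the repository linked above. Here the Inception V3 stage is replaced by hypothetical precomputed feature vectors, and the class name `TinyLSTM`, the small feature and hidden sizes, the random initialization, and the linear read-out from the final hidden state are all assumptions made for illustration.

```python
import math
import random

SEQ_LEN = 125  # number of successive frames per input window, as in the caption


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    # Dense matrix-vector product on plain Python lists.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class TinyLSTM:
    """Hypothetical single-layer LSTM that maps a sequence of per-frame
    feature vectors (stand-ins for ConvNet features) to one scalar score."""

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.input_dim = input_dim
        self.hidden_dim = hidden_dim
        # One weight matrix per gate (input, forget, output, candidate),
        # each acting on the concatenation [x_t, h_{t-1}].
        self.W = {g: mat(hidden_dim, input_dim + hidden_dim) for g in "ifoc"}
        self.b = {g: [0.0] * hidden_dim for g in "ifoc"}
        # Assumed linear read-out from the last hidden state to the score.
        self.w_out = [rng.uniform(-0.1, 0.1) for _ in range(hidden_dim)]
        self.b_out = 0.0

    def score(self, frames):
        if len(frames) != SEQ_LEN:
            raise ValueError(f"expected {SEQ_LEN} frames, got {len(frames)}")
        h = [0.0] * self.hidden_dim  # hidden state
        c = [0.0] * self.hidden_dim  # cell state carries information across frames
        for x in frames:
            if len(x) != self.input_dim:
                raise ValueError("feature vector has wrong dimension")
            z = list(x) + h
            pre = {g: [a + b for a, b in zip(matvec(self.W[g], z), self.b[g])] for g in "ifoc"}
            i = [sigmoid(v) for v in pre["i"]]
            f = [sigmoid(v) for v in pre["f"]]
            o = [sigmoid(v) for v in pre["o"]]
            cand = [math.tanh(v) for v in pre["c"]]
            c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, cand)]
            h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        # Only the final hidden state, after all 125 frames, produces the score.
        return sum(w * hv for w, hv in zip(self.w_out, h)) + self.b_out
```

The small sizes only keep the pure-Python loop fast; a real model of this kind would consume much larger feature vectors from the pretrained ConvNet and would be built and trained in a deep-learning framework rather than by hand.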