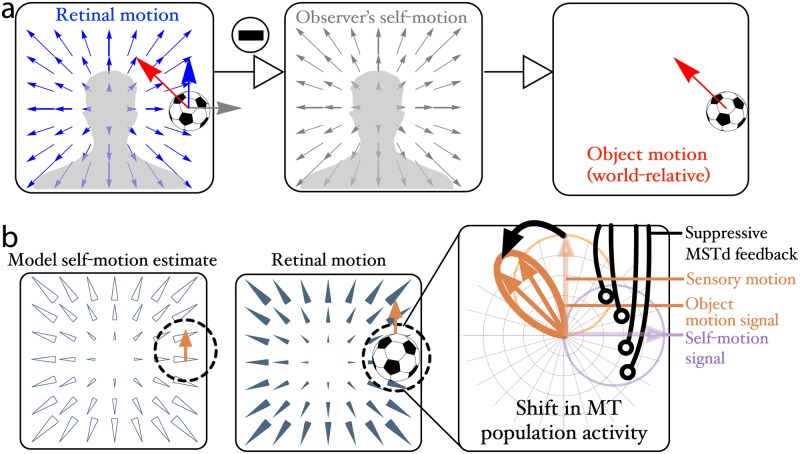

Fig 1.

(a) Optic flow components on the retina of a mobile observer. The retinal motion (blue, left panel) is the sum of the motion created by self-motion relative to the stationary, world-fixed environment (center panel) and the motion created by objects that move independently of the observer (red, right panel). The visual system could recover the world-relative motion of objects (red arrow, left and right panels) by subtracting the self-motion component (center panel) from the retinal pattern (left panel). (b) Neural algorithm proposed by Layton & Fajen [4] to recover world-relative object motion. The call-out on the right is a polar plot showing direction responses to the moving object. MSTd cells that respond to the observer's self-motion send feedback that suppresses MT cells (light blue region, right panel) signaling retinal motion (light orange region, right panel) consistent with the MSTd cells' preferred tuning (open arrows, left panel). This suppression shifts the direction signaled by the MT population toward the world-relative direction (dark orange, right panel).
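The two ideas in the caption can be illustrated with a minimal sketch. Panel (a) corresponds to vector subtraction: retinal flow at the object minus the self-motion flow at that location. Panel (b) corresponds to feedback that suppresses MT cells tuned to the self-motion direction, shifting the population readout. The von Mises tuning curves, the multiplicative suppression gain, the population-vector readout, and every parameter value (`kappa`, `strength`, `kappa_fb`, `n_cells`) are illustrative assumptions. They are not the equations of the Layton & Fajen model.

```python
import numpy as np


def world_relative_motion(retinal, self_motion):
    """Panel (a): recover object motion by subtracting self-motion flow from retinal flow."""
    return np.asarray(retinal, dtype=float) - np.asarray(self_motion, dtype=float)


def von_mises(theta, mu, kappa):
    """Unnormalized circular tuning curve that peaks at 1 when theta == mu (radians)."""
    return np.exp(kappa * (np.cos(theta - mu) - 1.0))


def population_direction(prefs, rates):
    """Population-vector readout: rate-weighted circular mean of preferred directions, in degrees."""
    x = np.sum(rates * np.cos(prefs))
    y = np.sum(rates * np.sin(prefs))
    return np.degrees(np.arctan2(y, x))


def mt_readout(retinal, self_motion, n_cells=360, kappa=2.0, strength=0.8, kappa_fb=2.0):
    """Panel (b), hypothetical sketch: decode MT direction before and after feedback suppression."""
    prefs = np.linspace(0.0, 2.0 * np.pi, n_cells, endpoint=False)
    retinal_dir = np.arctan2(retinal[1], retinal[0])
    self_dir = np.arctan2(self_motion[1], self_motion[0])
    # Assumed: MT responses are tuned to the retinal direction of the object.
    rates = von_mises(prefs, retinal_dir, kappa)
    # Assumed: feedback multiplicatively suppresses MT cells whose preferred
    # direction matches the self-motion flow expected at the object's location.
    gain = 1.0 - strength * von_mises(prefs, self_dir, kappa_fb)
    return population_direction(prefs, rates), population_direction(prefs, rates * gain)


if __name__ == "__main__":
    retinal = (1.0, 1.0)      # hypothetical retinal flow at the object (45 deg)
    self_motion = (1.0, 0.0)  # hypothetical self-motion flow at that location (0 deg)
    print("world-relative:", world_relative_motion(retinal, self_motion))
    before, after = mt_readout(retinal, self_motion)
    print(f"MT direction before feedback: {before:.1f} deg, after: {after:.1f} deg")
```

With these made-up inputs, the subtraction yields the world-relative vector (0, 1), which points at 90°. The suppressed population readout moves from 45° toward 90° without necessarily reaching it. This is consistent with the caption's description of the MT signal shifting *toward* the world-relative direction.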