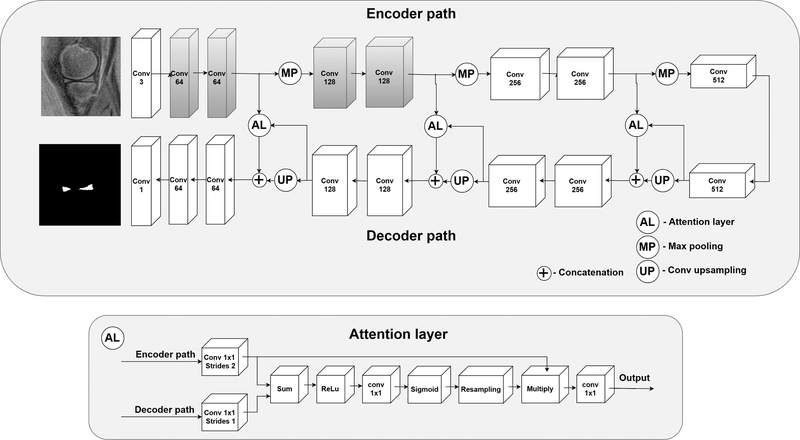

Figure 2.

The proposed 2D attention U-Net CNN for menisci segmentation. Gray indicates the convolutional blocks initialized with weights extracted from the VGG19 network. For each block, the number of filters is indicated below the block type. AL – attention layer, Conv – 2D convolutional block, Max pool – max pooling operator, Up – upsampling with a 2D transposed convolutional block (kernel size of 2×2, stride of 2×2). Each convolutional block, except for the first and the last, used the rectified linear unit (ReLU) as the activation function and 3×3 convolutional filters. The first block used 1D 1×1 convolutional filters and no activation function. The last block used the sigmoid activation function, which is suitable for binary classification. AL layers processed the feature maps propagated through the skip connections, letting the network focus on particular regions of the feature maps instead of analyzing the entire image representations.
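To illustrate how an attention layer can reweight the feature maps carried by a skip connection, the following is a minimal NumPy sketch. The caption does not specify the internal form of the AL blocks, so the additive gating used here (two 1×1 projections, a ReLU, and a sigmoid producing one coefficient per pixel) is an assumption, as are the function name `attention_gate`, the weight names, and all tensor sizes. The gating signal is assumed to have already been brought to the spatial resolution of the skip features.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def attention_gate(skip, gate, w_skip, w_gate, psi):
    """Hypothetical additive attention gate (assumed form, not taken from the figure).

    skip: (H, W, C_skip) feature maps arriving through a skip connection.
    gate: (H, W, C_gate) gating features from the decoder path, same H and W.
    A 1x1 convolution is a per-pixel linear map over channels, so it is
    written here as a matrix product on the last axis.
    """
    # Project both inputs to a shared intermediate channel count, add, apply ReLU.
    f = np.maximum(skip @ w_skip + gate @ w_gate, 0.0)
    # Collapse to one attention coefficient per pixel, squashed into [0, 1].
    alpha = sigmoid(f @ psi)  # shape (H, W, 1)
    # Scale every channel of the skip features by the per-pixel coefficient.
    return skip * alpha, alpha


# Illustrative sizes and random weights only; none of these come from the paper.
rng = np.random.default_rng(0)
H, W, C_SKIP, C_GATE, C_INT = 8, 8, 16, 32, 8
skip = rng.normal(size=(H, W, C_SKIP))
gate = rng.normal(size=(H, W, C_GATE))
w_skip = rng.normal(size=(C_SKIP, C_INT))
w_gate = rng.normal(size=(C_GATE, C_INT))
psi = rng.normal(size=(C_INT, 1))

gated, alpha = attention_gate(skip, gate, w_skip, w_gate, psi)
print(gated.shape, alpha.shape)  # (8, 8, 16) (8, 8, 1)
```

Because each coefficient lies between 0 and 1, the gate can only attenuate skip features, which is one way to suppress regions that are irrelevant to the segmentation target before they reach the decoder.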