Abstract

Background:

Atypical face processing is a prominent feature of autism spectrum disorder (ASD) but is not universal and is subject to individual variability. This heterogeneity could be accounted for by reliable, yet unidentified, subgroups within the diverse population of individuals with ASD. Alexithymia, which is characterized by difficulties in emotion recognition and identification, is a potential grouping factor. Recent research demonstrates that emotion recognition impairments in ASD are predicted by co-occurring alexithymia. The current study assessed the relative influence of autistic versus alexithymic traits on neural indices of face and emotion perception.

Methods:

Capitalizing upon the temporal sensitivity of event-related potentials (ERPs), this study investigated the distinct contributions of alexithymic versus autistic traits at specific stages of emotional face processing in 27 typically developing adults (18 female). ERP components reflecting sequential stages of perceptual processing (P100, N170 and N250) were recorded in response to fearful and neutral faces.

Results:

The results indicated that autistic traits were associated with structural encoding of faces (N170), whereas alexithymic traits were associated with more complex emotion decoding (N250).

Conclusions:

These findings have important implications for deconstructing heterogeneity within ASD.

Keywords: autism, alexithymia, EEG, face perception, N170

1. Introduction

The ability to perceive and interpret emotions in everyday human interactions is an integral part of social development. Specifically, the capacity to discern meaningful affect from faces and use it to guide social communication is key for adaptive reciprocal social behavior (Ekman & Friesen, 1976). Faces provide infants’ earliest exposure to social information (Goren et al., 1975; Johnson et al., 1991; Meltzoff & Moore, 1977, 1989), and the development of face processing offers useful information regarding both typical and atypical social trajectories (Batty et al., 2011; Chawarska et al., 2013; Elsabbagh et al., 2012). There is wide individual variability in face emotion processing, and proficiency in decoding facial expressions influences social performance (Adolphs et al., 2001; Dawson et al., 2002, 2004; García-Villamisar et al., 2010; Grelotti et al., 2002; Miu et al., 2012).

Atypical perception of facial expressions occurs in both clinical populations and the general population. Difficulties perceiving emotional facial expressions are commonly observed in autism spectrum disorder (ASD; American Psychiatric Association, 2013; Capps et al., 1992; Celani et al., 1999; Coucouvanis et al., 2012; Gepner et al., 2001; Lerner et al., 2013; Ozonoff et al., 1990; Rump et al., 2009), though they are neither universal nor required for diagnosis. Aligning with the principles of the National Institute of Mental Health’s Research Domain Criteria initiative (RDoC; Insel et al., 2010), perception of emotion may represent a functional process that contributes to symptomatology in ASD (and other neurodevelopmental disorders) but is not necessary for manifestation of the phenotype. Specific difficulties with emotion recognition have also been described in individuals without ASD or other clinical conditions (Lane et al., 1996; Miu et al., 2012). Alexithymia refers to a reduced capacity to recognize and understand affect (Fitzgerald & Bellgrove, 2006; Fitzgerald & Molyneux, 2004; Sifneos, 1972, 1973) and is characterized by a compromised ability to identify and interpret emotions, including difficulty decoding facial affect (Grynberg et al., 2010; Kano et al., 2003; McDonald & Prkachin, 1990; Prkachin et al., 2009; Sifneos, 1973; Taylor et al., 1997). Alexithymic traits are distributed on a continuous spectrum across the population (Bird et al., 2011), with an individual’s level of alexithymic traits reflecting his or her degree of difficulty in processing emotion (Sifneos, 1973; Taylor et al., 1997). Alexithymia is estimated to affect 10 percent of the typically developing population (Salminen et al., 1999) and 50 percent of individuals with ASD (Hill et al., 2004; Silani et al., 2008).

This high co-occurrence of alexithymia and ASD suggests that alexithymic traits may contribute to the emotion processing deficits observed in ASD, to the detriment of overall social performance (Bird et al., 2011). Behavioral and neuroimaging studies provide evidence that alexithymia predicts emotion deficits in ASD better than do broader autistic traits (Allen et al., 2013; Bird et al., 2010, 2011; Cook et al., 2013; Lane et al., 1996; Szatmari et al., 2008). This line of inquiry suggests distinct contributions of alexithymia versus autistic traits to clinical phenotypes. However, the specific nature and neural basis of these potentially unique contributors to social processing are poorly understood. For example, alexithymia may reflect difficulties in higher levels of information processing rather than in low-level perceptual processes, while autistic traits may influence more basic sensory perception, potentially creating secondary downstream influences on subsequent stages of processing (Cook et al., 2013).

In the current study, we used electrophysiological brain recordings to examine the relative influences of autistic versus alexithymic traits on distinct facets of face emotion processing (e.g., structural encoding of faces, recognition of emotions). The high temporal resolution of brain electrophysiology enables dissociation of brain activity associated with discrete stages of processing. In the perception of facial emotion, electrophysiological studies demonstrate the relevance of three event-related potential (ERP) components marking distinct stages of perceptual processing of faces (Eimer et al., 2003; Eimer & Holmes, 2002). The P100 reflects neural responses to basic sensory inputs of visual percepts (Itier & Taylor, 2004; Liu et al., 2002; Taylor et al., 2001; Taylor, 2002). The N170 is primarily associated with perceptual encoding of facial features (Bentin et al., 1996; Blau et al., 2007), although some evidence suggests that this component may also be related to emotion processing (Batty & Taylor, 2003). The N250 response is hypothesized to index higher-order perceptual decoding of emotional expression (Lerner et al., 2013; Turetsky et al., 2007; Wynn et al., 2008). Electrophysiological research comparing face processing in individuals with ASD and typically developing individuals has found that N170 latency to faces is longer in ASD, with reduced hemispheric lateralization relative to typically developing control groups (Dawson et al., 2005; McPartland et al., 2004, 2011). Evidence also indicates that individuals with ASD show no differential P100 response to inverted versus upright faces, whereas typically developing samples show enhanced P100 amplitude to inverted faces (Hileman et al., 2011; McCleery et al., 2009; O’Connor et al., 2005). No ERP study to date has examined the neural bases of face emotion processing in individuals with alexithymia.

The current study capitalized upon the temporal resolution of ERPs to examine the influence of subclinical alexithymic and autistic traits on these discrete stages of emotion processing in an adult sample without clinical diagnoses. We predicted that, consistent with prior ASD research, autistic traits would be associated with variability in the early stages of face processing, indexed by the P100 and N170. In contrast, we predicted that alexithymic traits would be associated with higher-order emotion decoding, indexed by the N250. By characterizing the neural circuits subserving face emotion processing in typically developing adults, this study aims to clarify the relation between social brain activity and affective function in a circuit germane to both typical development and clinical populations with ASD.

2. Methods

2.1. Participants

Participants included 27 typically developing adults (18 female), between 19 and 28 years of age (M=22.85 years, SD=2.62), recruited from the Yale University community in New Haven, Connecticut. None reported a history of psychiatric, neurological or motor impairments. All participants gave informed consent and were compensated for their participation.

2.2. Procedure

2.2.1. Behavioral Measures

Level of autistic traits was assessed using the Autism-Spectrum Quotient (AQ; Baron-Cohen et al., 2001). The AQ is a 50-item self-report questionnaire consisting of five subscales: Social Skill, Attention Switching, Attention to Detail, Communication, and Imagination. Respondents select one of four responses for each item (Baron-Cohen et al., 2001). AQ total scores range from 0 to 50, with higher scores indicating higher levels of autistic traits (Baron-Cohen et al., 2001). The AQ has been shown to be a reliable and valid tool for determining level of autistic traits in the typically developing population (Armstrong & Iarocci, 2013; Baron-Cohen et al., 2001; Hoekstra et al., 2008; Woodbury-Smith et al., 2005), with scores of 32 or higher suggesting a clinical level of autistic traits (Woodbury-Smith et al., 2005).
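The step from four response options to a 0–50 total can be sketched as follows. This is a minimal illustration assuming the standard AQ convention that each item earns 1 point when the respondent endorses the autistic-like answer, whether "slightly" or "definitely". The `AGREE_KEYED` set is a hypothetical placeholder, not the published scoring key; the real item directions must be taken from Baron-Cohen et al. (2001).

```python
# Minimal AQ scoring sketch. AGREE_KEYED is a HYPOTHETICAL placeholder key,
# not the published AQ key; substitute the item directions from
# Baron-Cohen et al. (2001) before any real use.
AGREE_RESPONSES = {"definitely agree", "slightly agree"}
DISAGREE_RESPONSES = {"slightly disagree", "definitely disagree"}
N_ITEMS = 50
AGREE_KEYED = frozenset(range(1, 26))  # hypothetical: items 1-25 scored on "agree"
CLINICAL_CUTOFF = 32  # threshold cited in the text (Woodbury-Smith et al., 2005)


def score_aq(responses, agree_keyed=AGREE_KEYED):
    """Return the AQ total (0-50) from a list of 50 verbal responses."""
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    total = 0
    for item, response in enumerate(responses, start=1):
        if response in AGREE_RESPONSES:
            agreed = True
        elif response in DISAGREE_RESPONSES:
            agreed = False
        else:
            raise ValueError(f"item {item}: unrecognized response {response!r}")
        # One point when the response falls on the autistic-like side of the
        # item, regardless of whether it was "slightly" or "definitely".
        total += int(agreed == (item in agree_keyed))
    return total


def at_or_above_cutoff(total):
    """True when a total reaches the clinical-level threshold cited above."""
    return total >= CLINICAL_CUTOFF
```

Collapsing the four options to a binary point is what bounds the total at 50; intensity ("slightly" versus "definitely") does not contribute under this convention.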

Level of alexithymic traits was assessed using the Bermond-Vorst Alexithymia Questionnaire (BVAQ; Vorst & Bermond, 2001). The BVAQ is a 20-item self-report questionnaire that includes five subscales: Emotionalizing, Fantasizing, Identifying, Analyzing, and Verbalizing. Respondents select among five response options ranging from “I strongly disagree” to “I strongly agree” (Vorst & Bermond, 2001). Higher scores correspond to a greater degree of alexithymic traits. The BVAQ has demonstrated high internal consistency and good validity as a measure of alexithymic traits (Gori, 2012).

2.2.2. Experimental Task

Experimental stimuli consisted of dynamic, grayscale, computer-generated faces. Stimuli subtended a visual angle of 10.2×6.4° and were presented centrally on a 17-inch computer screen (60Hz, 1,024×768 resolution) using E-Prime 2.0 software (Schneider et al., 2002). The face stimulus set consisted of 70 unique fearful faces and 70 unique neutral faces. Two additional face conditions (featural disarrangement and puffed cheeks) were presented but excluded from ERP analyses, because the present study focused on contrasting emotional with neutral faces. Moving balls were interspersed as targets in a detection task to monitor attention.

In this paradigm (Naples et al., 2014), each face trial began with the face in an initial static pose, followed by a dynamic change of facial expression. The paradigm consisted of five blocks, each containing 93 stimuli: 14 fearful faces, 14 neutral faces, 56 faces from the excluded conditions (featural disarrangement, puffed cheeks), and 9 attentional target stimuli. Each face was preceded by a fixation crosshair whose duration was jittered between 200 and 300ms. Faces in their initial static pose were presented for 500ms, and a blank screen was presented for 1000ms at the end of each trial.
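The block structure above can be sketched as a trial-list generator. This is a hypothetical illustration, not the E-Prime script used in the study. The randomized trial order, the field names, and the assumption that target trials also receive a fixation crosshair are not stated in the text. The duration of the dynamic expression change is not reported and is omitted. The `discard` flag encodes the rule, described under Data Processing, of dropping the two face trials that follow each attentional target.

```python
import random

# Per-block composition reported in the text (93 stimuli per block, 5 blocks).
BLOCK_COMPOSITION = {"fear": 14, "neutral": 14, "excluded_face": 56, "target": 9}
N_BLOCKS = 5


def make_block(rng):
    """Build one block as a list of trial dicts (randomized order is an assumption)."""
    conditions = [c for c, n in BLOCK_COMPOSITION.items() for _ in range(n)]
    rng.shuffle(conditions)
    trials, faces_to_discard = [], 0
    for condition in conditions:
        # Jittered fixation crosshair; randint bounds are inclusive.
        trial = {"condition": condition, "fixation_ms": rng.randint(200, 300)}
        if condition == "target":
            faces_to_discard = 2  # the next two face trials are dropped from ERPs
        else:
            trial["static_ms"] = 500   # initial static pose
            trial["blank_ms"] = 1000   # blank screen ending the trial
            trial["discard"] = faces_to_discard > 0
            faces_to_discard = max(0, faces_to_discard - 1)
        trials.append(trial)
    return trials


def make_session(seed=0):
    """Five independently shuffled blocks from a seeded generator."""
    rng = random.Random(seed)
    return [make_block(rng) for _ in range(N_BLOCKS)]
```

Across the session this yields 70 fearful and 70 neutral faces, matching the stimulus counts in the text before any trials are discarded.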

Participants sat 72cm from the computer screen and were instructed to view the faces passively. To maintain attention to the screen, participants were instructed to press a button every time they saw the grey ball (i.e., on attentional control trials).
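As a worked check on the display geometry, the physical stimulus size implied by a visual angle θ at viewing distance d is s = 2d·tan(θ/2). The sketch below assumes that the 10.2° and 6.4° figures are the two stimulus dimensions (the text does not say which is height and which is width) and that the 72cm distance was measured to the screen center.

```python
import math


def size_from_visual_angle(angle_deg, distance_cm):
    """Physical extent (cm) subtending angle_deg at distance_cm, centered on gaze."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


VIEWING_DISTANCE_CM = 72.0
for label, angle in (("10.2 deg dimension", 10.2), ("6.4 deg dimension", 6.4)):
    size = size_from_visual_angle(angle, VIEWING_DISTANCE_CM)
    print(f"{label}: {size:.2f} cm")
```

Under these assumptions the faces measured roughly 12.9 × 8.1 cm on screen. Converting to pixels would additionally require the monitor's viewable width, which is not reported.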

2.2.3. EEG recording

2.2.3.1. Data Collection:

A 128-channel Geodesic Sensor Net (Electrical Geodesics, Inc.; Tucker, 1993) was used to record continuous EEG activity. The amplifier was automatically calibrated, and impedances were kept below 40kΩ. An online 0.1Hz high-pass filter was applied to attenuate low-frequency noise. The EEG signal was amplified (×1000) using EGI NetAmps 200 and digitized at a sampling rate of 500Hz. All data were referenced to the vertex electrode (Cz) during recording and re-referenced to an average reference after data collection. Participants were instructed to sit as still as possible throughout the task to minimize movement artifacts.

2.2.3.2. Data Processing and Analyses:

Netstation 4.3.1 was used for all data processing and analyses. Raw EEG data were filtered offline using a 30Hz low-pass filter. Data were segmented offline relative to stimulus onset, from 100ms pre-stimulus to 500ms post-stimulus. The two face trials directly following the attentional control stimuli were discarded (a total of 9 stimuli within each block). Artifacts due to bad electrode channels, eye blinks and eye movements were detected using thresholds of 200μV, 140μV and 100μV, respectively. Individual electrode channels were excluded from analyses if they recorded a bad signal on 40% of the trials, and data from excluded channels were replaced by spline interpolation from the surrounding electrodes. Individual trials were excluded if they contained more than 10 bad channels, an eye blink or an eye movement. The remaining data were then re-referenced to an average reference. The data for each experimental condition were averaged across trials for each participant and then baseline corrected using the 100ms pre-stimulus interval. Participants with fewer than 25% good trials in each condition were excluded from analyses. The mean number of included trials was 62.15 (SD=6.21) for the fear condition and 61.48 (SD=9.89) for the neutral condition.

2.2.3.3. ERP components of interest:

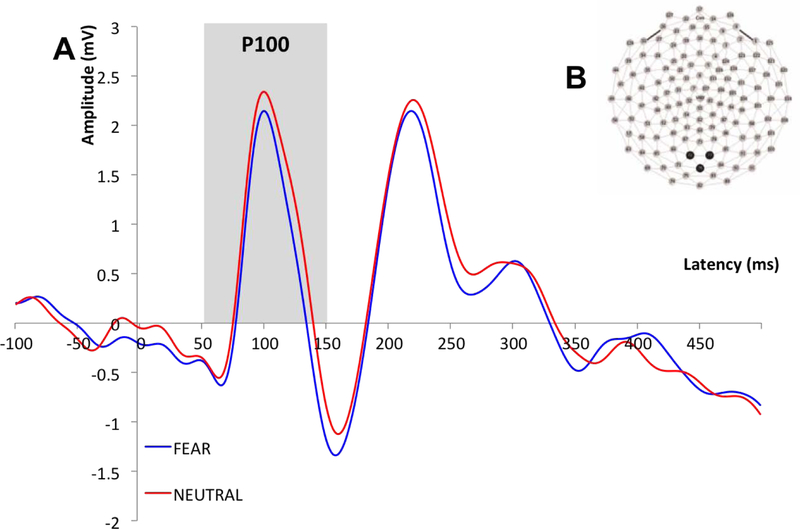

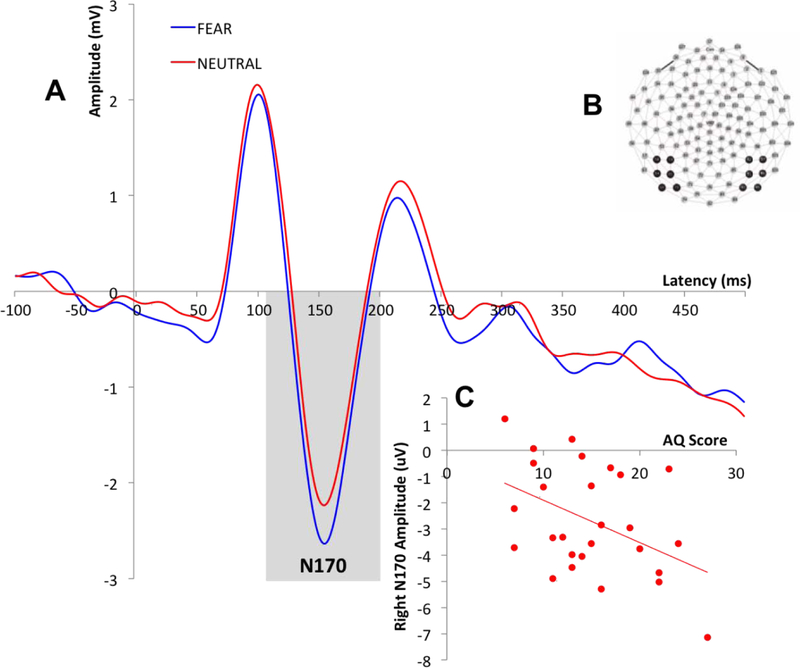

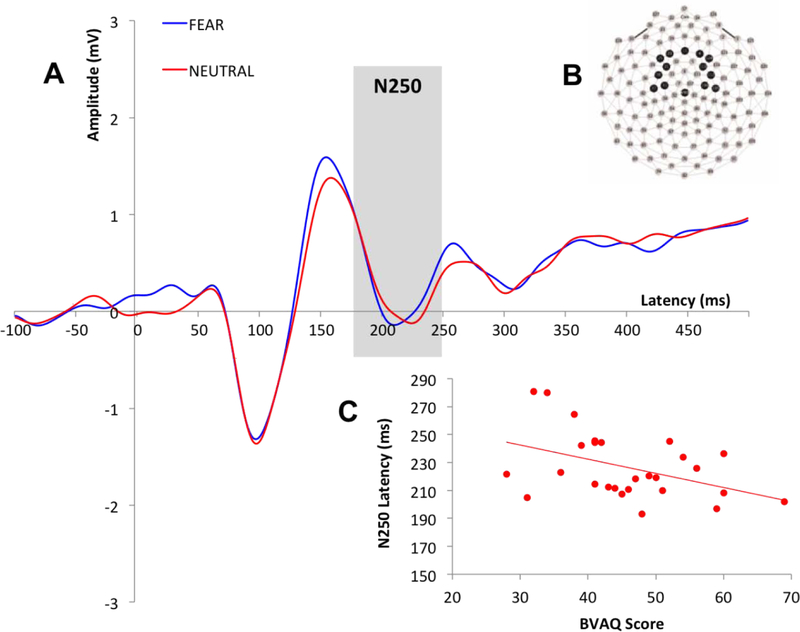

Time windows for the ERP components were selected based on visual inspection of the grand average waveform and then confirmed in individual subject files. The time windows were as follows: 58–152ms (P100), 132–204ms (N170) and 172–318ms (N250). Recording sites and electrodes of interest were selected based on precedent (Eimer, 2000; Lerner et al., 2013; Liu et al., 2002; Luo et al., 2010; McPartland et al., 2004; Wynn et al., 2008). Data for the P100 were averaged across electrodes over the occipital region (Liu et al., 2002) (Figure 1B). For the N170, data were examined separately for each hemisphere over the left and right posterior lateral regions of the scalp (approximating T5 and T6 sites in the 10/20 system; Eimer et al., 1998, 2000; Halit et al., 2000; McPartland et al., 2004) (Figure 2B). Data for the N250 were averaged over fronto-central scalp based on previous literature (Lerner et al., 2013; Luo et al., 2010; Wynn et al., 2008) and visual inspection of N250 magnitude across sites (Figure 3B). For each ERP component, peak amplitude and latency to peak were averaged across electrodes of interest for each participant.
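The peak-picking step can be sketched as follows. The sketch assumes baseline-corrected condition averages sampled at 500Hz over the −100 to 500ms epoch, so that sample i falls at −100 + 2i ms. It uses the windows listed above, treats window boundaries as inclusive, and applies the usual polarity convention: a positive peak for the P100 and negative peaks for the N170 and N250. Following the text, the peak is located separately at each electrode of interest, and amplitudes and latencies are then averaged. The function and dictionary names are illustrative.

```python
import numpy as np

FS_HZ = 500
EPOCH_START_MS = -100
# (start_ms, end_ms, polarity): +1 picks the most positive point, -1 the most negative.
WINDOWS_MS = {
    "P100": (58, 152, +1),
    "N170": (132, 204, -1),
    "N250": (172, 318, -1),
}


def sample_times_ms(n_samples):
    """Time (ms) of each sample relative to stimulus onset."""
    return EPOCH_START_MS + np.arange(n_samples) * 1000.0 / FS_HZ


def peak_measures(erp, component):
    """Mean peak amplitude and peak latency across electrodes of interest.

    erp: array (n_electrodes, n_samples) holding one participant's condition
    average at the electrodes of interest. The peak is found per electrode,
    then amplitudes and latencies are averaged.
    """
    start, end, polarity = WINDOWS_MS[component]
    times = sample_times_ms(erp.shape[-1])
    in_window = (times >= start) & (times <= end)
    window = erp[:, in_window]
    peak_idx = np.argmax(window * polarity, axis=1)
    amplitudes = window[np.arange(window.shape[0]), peak_idx]
    latencies = times[in_window][peak_idx]
    return float(amplitudes.mean()), float(latencies.mean())
```

An alternative design would first average the waveforms across electrodes and then pick a single peak. The two approaches can give different latencies when peaks are not aligned across sites, so the per-electrode version shown here follows the wording in the text.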

Figure 1.

A: Grand average waveforms describing the P100 across all participants for fear and neutral conditions; B: P100 recording sites.

Figure 2.

A: Grand average waveforms describing the right lateralized N170 across all participants for fear and neutral conditions; B: N170 recording sites; C: Correlation between right lateralized N170 amplitude for neutral condition and AQ scores.

Figure 3.

A: Grand average waveforms describing the N250 across all participants for fear and neutral conditions; B: N250 recording sites; C: Correlation between N250 latency for neutral condition and BVAQ scores.

2.3. Statistical analyses

AQ and BVAQ scores were first examined for collinearity to determine whether their independent contributions to ERP components could appropriately be investigated. Repeated-measures analyses of covariance (ANCOVAs) were then conducted to: 1) examine the relation between behavioral measures and the amplitude and latency of ERP components; and 2) examine the effect of emotion on the amplitude and latency of ERP components after adjusting for the relation between behavioral measures and ERP components. The dependent variables were the latencies and amplitudes of each ERP component. Within-subjects factors were emotion condition (fear, neutral) and, for the N170, hemisphere. Alexithymic traits and autistic traits were entered as covariates. Bonferroni adjustment controlled for multiple comparisons, and post-hoc bivariate correlations were conducted to characterize the direction of identified effects and to further examine the relationship between the AQ subscales (Social Skill, Attention Switching, Attention to Detail, Communication and Imagination) and ERP measures where significant findings were identified.
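One way to see why every covariate test in the Results carries F(1,25) is that, with a single covariate and a two-level within-subjects factor, a repeated-measures ANCOVA reduces to two simple regressions. The condition × covariate interaction is the slope test when each participant's difference score (e.g., fear − neutral) is regressed on the covariate. The covariate main effect is the slope test on each participant's mean across conditions. With n=27, each test has 1 and n−2=25 degrees of freedom. This pure-Python sketch rests on the assumption of one covariate per model, which the reported degrees of freedom suggest but the text does not state, and it is not the software the authors used. The same logic covers the N170 emotion × hemisphere × AQ interaction: regress the double difference (fear right − fear left) − (neutral right − neutral left) on AQ.

```python
from statistics import mean


def slope_f_test(y, x):
    """F and df for H0: slope = 0 in the simple regression of y on x."""
    n = len(y)
    if n != len(x) or n < 3:
        raise ValueError("need paired data with at least 3 observations")
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    ss_model = sxy ** 2 / sxx      # variation in y explained by the covariate
    ss_resid = syy - ss_model      # residual sum of squares
    return ss_model / (ss_resid / (n - 2)), (1, n - 2)


def two_level_ancova(cond_a, cond_b, covariate):
    """Covariate tests in a repeated-measures ANCOVA with one two-level factor."""
    diff = [a - b for a, b in zip(cond_a, cond_b)]
    avg = [(a + b) / 2 for a, b in zip(cond_a, cond_b)]
    return {
        # condition x covariate: does the covariate predict the condition effect?
        "condition_x_covariate": slope_f_test(diff, covariate),
        # covariate main effect: does it predict the overall (averaged) response?
        "covariate_main_effect": slope_f_test(avg, covariate),
    }
```

In the same framework, the "main effect of emotion" in such a model tests the intercept of the difference-score regression. Unless the covariate is mean-centered, that intercept is the condition effect at a covariate value of zero.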

3. Results

3.1. Behavioral results

Scores on the AQ ranged from 6 to 27 (M=14.93, SD=5.56), and scores on the BVAQ ranged from 28 to 69 (M=45.78, SD=9.91). A Kolmogorov-Smirnov goodness-of-fit test was conducted to examine the distributions. Scores on both behavioral measures were normally distributed [AQ: D=.09, p>.05; BVAQ: D=.06, p>.05] and without outliers. The AQ and BVAQ were not significantly correlated [r(25)=.14, p=.48], suggesting that the scales measured distinct attributes within the sample.
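The Kolmogorov-Smirnov statistic D is the largest vertical distance between the empirical cumulative distribution of the scores and a reference normal CDF. A minimal standard-library sketch follows, assuming the normal reference is parameterized by the sample mean and SD. When parameters are estimated from the same data, standard KS p-values are conservative, and the Lilliefors correction is the usual remedy. Only D is computed here; the p-values in the text are not reproduced.

```python
import math
from statistics import mean, stdev


def normal_cdf(x, mu, sigma):
    """CDF of a normal distribution via the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def ks_d_normal(scores):
    """One-sample KS D against a normal with the sample's own mean and SD."""
    xs = sorted(scores)
    n = len(xs)
    mu, sigma = mean(xs), stdev(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = normal_cdf(x, mu, sigma)
        # Compare the reference CDF with the empirical step just after (i/n)
        # and just before ((i-1)/n) each observed score.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d
```

Smaller D means closer agreement with normality. Values near .06–.09, like those reported, indicate that the empirical and normal CDFs never diverge by more than a few percentage points.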

3.2. P100

3.2.1. Amplitude

No main effects of emotion on P100 amplitude were detected when either behavioral measure was included in the analysis as a covariate [Range: F(1,25)=0.01–0.36; p=.56–.92], and no emotion × AQ or emotion × BVAQ interactions were detected [Range: F(1,25)=0.53–0.29; p=.60–.82]. There was no statistically significant main effect of either AQ score [F(1,25)=.32, p=.57] or BVAQ score [F(1,25)=.03, p=.87] on P100 amplitude, indicating that neither autistic nor alexithymic traits had a significant effect on early visual processing of faces, as indexed by P100 amplitude.

3.2.2. Latency

There were no statistically significant main effects of emotion on P100 latency when either AQ or BVAQ was included in the analysis as a covariate [Range: F(1,25)=1.15–2.36; p=.14–.29], and there was no interaction of emotion with either AQ [F(1,25)=.28, p=.60] or BVAQ [F(1,25)=1.46, p=.24]. There was no statistically significant main effect of either AQ [F(1,25)=1.84, p=.19] or BVAQ [F(1,25)=.58, p=.45] on P100 latency. Thus, neither autistic nor alexithymic traits had a significant effect on the speed of early visual processing of faces, as indexed by P100 latency.

3.3. N170

3.3.1. Amplitude

Results showed no statistically significant main effects of either emotion [Range: F(1,25)=0.03–2.49; p=.13–.87] or hemisphere [Range: F(1,25)=0.33–1.34; p=.26–.57] on N170 amplitude after including either behavioral measure as a covariate. There was no statistically significant interaction of emotion with AQ [F(1,25)=.44, p=.51] or with BVAQ [F(1,25)=1.76, p=.20], and no statistically significant interaction of hemisphere with AQ [F(1,25)=2.02, p=.17] or with BVAQ [F(1,25)=.23, p=.63]. However, there was a statistically significant three-way interaction of emotion, hemisphere and AQ [F(1,25)=4.47, p=.05], whereas the three-way interaction of emotion, hemisphere and BVAQ was not significant [F(1,25)=.11, p=.74]. There was also a statistically significant main effect of AQ on N170 amplitude [F(1,25)=7.84, p=.01], but no statistically significant main effect of BVAQ on N170 amplitude [F(1,25)=.13, p=.73].

Correlational analyses to clarify the observed three-way interaction between AQ, hemisphere and emotion revealed significant negative associations between AQ scores and right N170 amplitude for fearful [r(25)=−.55, p=.003] and neutral [r(25)=−.44, p=.02] faces (Figure 2C), as well as for left N170 amplitude for the neutral condition [r(25)=−.49, p=.01]. There were no statistically significant relationships between BVAQ scores and N170 amplitude for either emotion condition [p>.05].
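The correlation tests in this section use n−2=25 degrees of freedom. As a worked check, r can be converted to t = r·√(n−2)/√(1−r²) and then to a two-tailed p-value from Student's t distribution. The standard-library sketch below integrates the t density numerically with Simpson's rule. This is a swapped-in numerical method chosen to avoid external dependencies (statistical packages use exact incomplete-beta routines), so its p-values are approximations.

```python
import math


def t_from_r(r, n):
    """Convert a Pearson r from n pairs to t with n - 2 degrees of freedom."""
    df = n - 2
    return r * math.sqrt(df) / math.sqrt(1.0 - r * r), df


def t_pdf(t, df):
    """Student's t density, using log-gamma to avoid overflow for large df."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1.0 + t * t / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=2000):
    """Approximate p = 1 - 2 * (integral of the density from 0 to |t|)."""
    if steps % 2:
        raise ValueError("Simpson's rule needs an even number of steps")
    b = abs(t)
    h = b / steps
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * t_pdf(k * h, df)
    area = total * h / 3.0
    return min(1.0, max(0.0, 1.0 - 2.0 * area))


for r in (-0.55, 0.14):
    t, df = t_from_r(r, 27)
    print(f"r({df})={r:+.2f}: t={t:.2f}, p={two_tailed_p(t, df):.3f}")
```

For example, r(25)=−.55 gives t≈−3.29 and a two-tailed p of roughly .003, in line with the value reported for fearful faces at the right hemisphere.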

To clarify whether a particular subset of autistic traits was driving the negative association between N170 amplitude and AQ total score, additional correlational analyses were conducted between N170 amplitude and individual AQ subscales. These analyses revealed a negative association between the Attention Switching subscale and bilateral N170 amplitude for both fearful [left: r(25)=−.42, p=.03; right: r(25)=−.58, p=.002] and neutral [left: r(25)=−.51, p=.01; right: r(25)=−.50, p=.01] faces. A significant negative relationship was also found between the Social Skill subscale and right N170 amplitude for fearful faces [r(25)=−.41, p=.04]; no other relationships were significant.

3.3.2. Latency

There were no statistically significant main effects of emotion [Range: F(1,25)=.77–2.16; p=.15–.39] or hemisphere [Range: F(1,25)=.92–3.18; p=.09–.35] on N170 latency after including either behavioral measure as a covariate. There were also no statistically significant interactions between emotion and either AQ [F(1,25)=1.20, p=.28] or BVAQ [F(1,25)=1.37, p=.25], or between hemisphere and either AQ [F(1,25)=.27, p=.61] or BVAQ [F(1,25)=2.31, p=.14]. There was no statistically significant three-way interaction [F(1,25)=.17, p=.69]. Finally, there were no statistically significant main effects of either AQ [F(1,25)=1.90, p=.18] or BVAQ [F(1,25)=.15, p=.70] on N170 latency. These results indicate that neither emotion, hemisphere, nor degree of alexithymic or autistic traits influenced the efficiency of structural face encoding, as indexed by N170 latency.

3.4. N250

3.4.1. Amplitude

Results showed no statistically significant main effect of emotion on N250 amplitude when either behavioral measure was included in the analysis as a covariate [Range: F(1,25)=.003–.90; p=.35–.96], and no interaction of emotion with either AQ [F(1,25)=.76, p=.39] or BVAQ [F(1,25)=.001, p=.98]. Thus, N250 amplitude did not differ by emotion, regardless of autistic or alexithymic traits. There were no statistically significant main effects of either AQ [F(1,25)=.03, p=.87] or BVAQ [F(1,25)=1.28, p=.27] on N250 amplitude, indicating that neither autistic nor alexithymic traits influenced N250 amplitude.

3.4.2. Latency

There were no statistically significant main effects of emotion on N250 latency when either behavioral measure was included in the analysis as a covariate [Range: F(1,25)=1.19–1.89; p=.18–.29], and there were no significant interactions of emotion with either AQ [F(1,25)=1.10, p=.31] or BVAQ [F(1,25)=.77, p=.39]. Thus, N250 latency did not differ by emotion, regardless of scores on either behavioral measure. There was no statistically significant main effect of AQ on N250 latency [F(1,25)=.17, p=.68]. However, there was a statistically significant main effect of BVAQ on N250 latency [F(1,25)=4.99, p=.04], suggesting that alexithymic, but not autistic, traits modulated the efficiency of higher-order emotion processing, as indexed by N250 latency.

Correlational analyses showed a negative correlation between BVAQ scores and N250 latency for the neutral condition [r(25)=−.43, p=.03] (Figure 3C), indicating that individuals with higher levels of alexithymic traits had shorter N250 latencies to neutral faces. There were no statistically significant associations between AQ scores and N250 latency for either condition [Range: r(25)=.04–.12; p=.54–.85], and the relationship between BVAQ scores and N250 latency to fearful faces was also non-significant [r(25)=−.37, p=.06].

4. Discussion

This study examined the differential influence of subclinical alexithymic and autistic traits on the neural processing of facial expressions in typically developing adults. The three ERP components of interest were the P100, N170 and N250, which index visual processing, structural encoding, and perceptual decoding of emotions, respectively. Results provide initial evidence that autistic traits and alexithymic traits differentially contribute to specific facets of face processing. Autistic traits were associated with structural encoding of faces, indexed by the N170, whereas alexithymic traits were associated with higher order emotion processing, indexed by the N250.

The P100 reflects early, low-level visual processing of stimuli and is evoked as an endogenous response to faces (Hileman et al., 2011; Itier & Taylor, 2004). The results of this study showed no effect of either autistic or alexithymic traits on P100 amplitude or latency in response to either fearful or neutral faces. Thus, early stages of visual processing appear neither to depend on the emotional content of face stimuli nor to differ as a function of autistic or alexithymic traits in typically developing adults.

The N170 is associated with the subsequent stage of perceptual processing and reflects basic structural encoding of faces (Bentin et al., 1996; Eimer, 2000). In line with our hypotheses and prior studies in clinical populations (McPartland et al., 2011), level of autistic traits influenced the N170 response. Counter to our predictions, however, higher levels of autistic traits were associated with a more negative (i.e., larger) N170 amplitude, whereas prior research in clinical groups has revealed attenuated amplitude in individuals with ASD. Interestingly, post-hoc analyses of AQ subscales showed that the observed negative relationship was largely driven, bilaterally and across both fearful and neutral conditions, by the Attention Switching subscale: a reduced ability to shift attention was associated with larger N170 amplitude. This may suggest that individuals with limited attentional flexibility demonstrate persistent attention to visual information, in this case exemplified by an enhanced response to face stimuli. This account would predict a similar enhancement for non-face stimuli, which were not included in the present study, suggesting an important direction for future research. There was no effect of alexithymic traits on the strength of face processing as indexed by the N170, irrespective of condition or hemisphere, suggesting that autistic but not alexithymic traits affect initial stages of face processing.

In line with our predictions, alexithymic but not autistic traits were associated with emotion decoding processes indexed by the N250 component. Specifically, alexithymic traits had a significant influence on the efficiency of face emotion processing: higher levels of alexithymia were associated with shorter N250 latency to neutral faces. This unpredicted association with neutral expressions suggests that alexithymic traits may confer an advantage in processing efficiency in contexts in which facial information is not conflated with emotional expression. Though the current findings support the notion that alexithymic traits relate to efficiency of affective decoding at a later stage of perceptual processing (Parker et al., 2005; Prkachin et al., 2009), the nature of this relationship requires clarification in future research.

The N250 component has been proposed to reflect higher-order processing of emotion (Luo et al., 2010). However, in this study we found no main effect of emotion (neutral versus fear) on N250 amplitude or latency, despite elicitation of a robust N250 response by face stimuli. This may reflect our experimental design’s reliance on neutral faces, which may not be perceived as purely neutral (e.g., a neutral expression differs from a natural resting expression; Etcoff & Magee, 1992; Young et al., 1997). Rather, neutral faces can be perceived as negatively valenced and can evoke increased responses in the amygdala, which is associated with the processing of emotions such as fear (Birbaumer et al., 1998; Thomas et al., 2001). Our study may therefore not have shown distinct N250 responses to emotional expressions because it contrasted fearful faces with neutral faces, the latter of which may inadvertently convey negative valence. Although fearful faces are well established for studying emotional face processing, particularly in studies of the N250 component (Lerner et al., 2013; Luo et al., 2010; Turetsky et al., 2007; Wynn et al., 2008), future studies examining this ERP component with a broader range of emotional stimuli, specifically in contrast to neutral expressions, will better inform the specific role of the N250 in emotion decoding.

This study targeted a typically developing population, in which subclinical autistic and alexithymic traits lie on a continuum with, but present in attenuated form relative to, the corresponding clinical conditions. One limitation of this study is that it did not include a clinical sample to investigate the relative influence of these traits on social perception. However, the results inform broader understanding of both the clinical overlap between ASD and alexithymia and the degree to which emotion processing relates to clinical features associated with ASD (Bolte et al., 2011; Constantino & Todd, 2003; Miu et al., 2012). Furthermore, the findings regarding the neural circuits subserving face emotion processing allow parallels to be drawn to the ASD population and provide a basis for future studies in clinical populations. Another key caveat is sample size. Though typical for neuroscience studies, our sample of 27 may provide limited statistical power to detect small effects of traits measured by self-report questionnaires. This work requires replication in larger samples.

The few studies that have directly examined the role of co-occurring alexithymia in individuals with ASD showed that alexithymia, rather than ASD symptomatology itself, was the most important contributor to the emotion processing problems underlying social dysfunction in this population (Bird et al., 2010, 2011; Cook et al., 2013). A recent behavioral study exploring this overlap showed that alexithymia, rather than ASD, was associated with impaired ability to decode emotions, whereas alexithymia did not contribute to deficits in earlier stages of sensory information processing (Cook et al., 2013). Our current findings, obtained with electrophysiological methods, corroborate and expand upon these previous findings by demonstrating unique contributions of alexithymia and autistic traits to different stages of face perceptual processing. Aligning with the results of prior behavioral research, our results revealed an association between alexithymia and emotion-specific stages of information processing, whereas autistic traits were more strongly associated with an earlier stage of information processing (i.e., face structural encoding).

These findings have important implications for parsing heterogeneity associated with ASD and support the notion that alexithymia is relevant in explaining behavioral heterogeneity in ASD. In particular, the independent role of alexithymia in emotion processing deficits could be informative in considering possible neurobiologically relevant subgroups within ASD. More specifically, there may be a need to define subgroups of individuals with ASD having more or less alexithymic traits in order to better understand the stages of perceptual information processing that may drive their social difficulties (Georgiades, 2013). This endeavor would enable tailoring of future ASD intervention techniques to more specific core deficits or underlying dysfunction, rather than to the broad endpoint deficit in emotion recognition and understanding (McPartland & Pelphrey, 2012). Therefore, there is a need to account for this overlap with alexithymia when investigating social emotion perception in ASD. Future research in individuals with ASD is necessary to further deconstruct the concept of heterogeneity in emotion processing evidenced in this study as it applies to clinical populations.

Alexithymic and autistic traits uniquely contribute to different stages of emotion processing.

Levels of autistic traits are associated with the N170, which indexes structural encoding of faces.

Levels of alexithymic traits are associated with emotion decoding processes, indexed by the N250 component.

Results have important implications for parsing behavioral heterogeneity within ASD.

Acknowledgements

This work was supported by NIMH K23 MH086785 (JCM), NIMH R21 MH091309 (JCM), NIMH R01 MH100173 (JCM), and NIMH R01 MH107426. This publication was made possible by CTSA Grant Number UL1 TR000142 from the National Center for Advancing Translational Sciences (NCATS), a component of the National Institutes of Health (NIH). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of NIH.

Footnotes

Disclosures

The authors report no biomedical financial interests or potential conflicts of interest.

References

- Adolphs R, Sears L, & Piven J (2001). Abnormal Processing of Social Information from Faces in Autism. J Cogn Neurosci, 13, 232.

- Allen R, Davis R, & Hill E (2013). The Effects of Autism and Alexithymia on Physiological and Verbal Responsiveness to Music. Journal of Autism and Developmental Disorders, 43, 432–444. https://doi.org/10.1007/s10803-012-1587-8

- American Psychiatric Association (2013). Diagnostic and statistical manual of mental disorders (5th ed.). Washington, DC: Author.

- Armstrong K, & Iarocci G (2013). Brief Report: The Autism Spectrum Quotient has Convergent Validity with the Social Responsiveness Scale in a High-Functioning Sample. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-013-1769-z

- Baron-Cohen S, Wheelwright S, Skinner R, Martin J, & Clubley E (2001). The autism-spectrum quotient (AQ): Evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31(1), 5–17.

- Batty M, & Taylor MJ (2003). Early processing of the six basic facial emotional expressions. Brain Res Cogn Brain Res, 17(3), 613–620.

- Batty M, Meaux E, Wittemeyer K, Rogé B, & Taylor MJ (2011). Early processing of emotional faces in children with autism: An event-related potential study. Journal of Experimental Child Psychology, 109(4), 430–444. https://doi.org/10.1016/j.jecp.2011.02.001

- Bentin S, Allison T, Puce A, Perez E, & McCarthy G (1996). Electrophysiological studies of face perception in humans. J Cogn Neurosci, 8(6), 551–565.

- Birbaumer N, Grodd W, Diedrich O, Klose U, Erb M, Lotze M, … Flor H (1998). fMRI reveals amygdala activation to human faces in social phobics. Neuroreport, 9(6), 1223–1226.

- Bird G, Press C, & Richardson DC (2011). The Role of Alexithymia in Reduced Eye-Fixation in Autism Spectrum Conditions. Journal of Autism and Developmental Disorders, 41(11), 1556–1564. https://doi.org/10.1007/s10803-011-1183-3

- Bird G, Silani G, Brindley R, White S, Frith U, & Singer T (2010). Empathic brain responses in insula are modulated by levels of alexithymia but not autism. Brain, 133, 1515–1525. https://doi.org/10.1093/brain/awq060

- Blau V, Maurer U, Tottenham N, & McCandliss B (2007). The face-specific N170 component is modulated by emotional facial expression. Behavioral and Brain Functions, 3(1), 7.

- Bolte S, Westerwald E, Holtmann M, Freitag C, & Poustka F (2011). Autistic traits and autism spectrum disorders: the clinical validity of two measures presuming a continuum of social communication skills. J Autism Dev Disord, 41, 66–72. https://doi.org/10.1007/s10803-010-1024-9

- Capps L, Yirmiya N, & Sigman M (1992). Understanding of Simple and Complex Emotions in Non-retarded Children with Autism. Journal of Child Psychology and Psychiatry, 33(7), 1169–1182.

- Celani G, Battacchi M, & Arcidiacono L (1999). The Understanding of the Emotional Meaning of Facial Expressions in People with Autism. Journal of Autism and Developmental Disorders, 29(1), 57–66. https://doi.org/10.1023/A:1025970600181

- Chawarska K, Macari S, & Shic F (2013). Decreased Spontaneous Attention to Social Scenes in 6-Month-Old Infants Later Diagnosed with Autism Spectrum Disorders. Biological Psychiatry. https://doi.org/10.1016/j.biopsych.2012.11.022

- Constantino JN, & Todd RD (2003). Autistic Traits in the General Population: A Twin Study. Archives of General Psychiatry, 60, 524–530.

- Cook R, Brewer R, Shah P, & Bird G (2013). Alexithymia, Not Autism, Predicts Poor Recognition of Emotional Facial Expressions. Psychological Science, 24(5), 723–732. https://doi.org/10.1177/0956797612463582

- Coucouvanis J, Hallas D, & Farley JN (2012). Autism Spectrum Disorder. In Child and Adolescent Behavioral Health (pp. 238–261). John Wiley & Sons, Ltd. https://doi.org/10.1002/9781118704660.ch13

- Dawson G, Carver L, Meltzoff AN, Panagiotides H, McPartland J, & Webb SJ (2002). Neural correlates of face and object recognition in young children with autism spectrum disorder, developmental delay, and typical development. Child Dev, 73, 700–717.

- Dawson G, Webb SJ, Carver L, Panagiotides H, & McPartland J (2004). Young children with autism show atypical brain responses to fearful versus neutral facial expressions of emotion. Dev Sci, 7, 340–359.

- Dawson G, Webb SJ, & McPartland J (2005). Understanding the nature of face processing impairment in autism: insights from behavioral and electrophysiological studies. Dev Neuropsychol, 27, 403–424. https://doi.org/10.1207/s15326942dn2703_6

- Eimer M (1998). Does the face-specific N170 component reflect the activity of a specialized eye processor? Neuroreport, 9(13), 2945–2948.

- Eimer M (2000). The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport, 11(10), 2319–2324.

- Eimer M, & Holmes A (2002). An ERP study on the time course of emotional face processing. Neuroreport, 13(4), 427–431.

- Eimer M, Holmes A, & McGlone FP (2003). The role of spatial attention in the processing of facial expression: an ERP study of rapid brain responses to six basic emotions. Cogn Affect Behav Neurosci, 3(2), 97–110.

- Ekman P, & Friesen W (1976). Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press.

- Elsabbagh M, Mercure E, Hudry K, Chandler S, Pasco G, Charman T, … Johnson MH (2012). Infant Neural Sensitivity to Dynamic Eye Gaze Is Associated with Later Emerging Autism. Current Biology, 22(4), 338–342. https://doi.org/10.1016/j.cub.2011.12.056

- Etcoff NL, & Magee JJ (1992). Categorical perception of facial expressions. Cognition, 44(3), 227–240.

- Fitzgerald M, & Bellgrove MA (2006). The overlap between alexithymia and Asperger’s syndrome. Journal of Autism and Developmental Disorders, 36, 573–576.

- Fitzgerald M, & Molyneux G (2004). Overlap between Alexithymia and Asperger’s Syndrome. The American Journal of Psychiatry, 161, 2134–2135.

- García-Villamisar D, Rojahn J, Zaja RH, & Jodra M (2010). Facial emotion processing and social adaptation in adults with and without autism spectrum disorder. Research in Autism Spectrum Disorders, 4, 755–762. https://doi.org/10.1016/j.rasd.2010.01.016

- Georgiades S (2013). Importance of studying heterogeneity in autism. Neuropsychiatry, 3(2), 123–125.

- Gepner B, Deruelle C, & Grynfeltt S (2001). Motion and Emotion: A Novel Approach to the Study of Face Processing by Young Autistic Children. Journal of Autism and Developmental Disorders, 31(1), 37–45. https://doi.org/10.1023/A:1005609629218

- Goren CC, Sarty M, & Wu PY (1975). Visual following and pattern discrimination of face-like stimuli by newborn infants. Pediatrics, 56(4), 544–549.

- Gori A (2012). Assessment of Alexithymia: Psychometric Properties of the Psychological Treatment Inventory-Alexithymia Scale (PTI-AS). Psychology, 3(3), 231–236. https://doi.org/10.4236/psych.2012.33032

- Grelotti DJ, Gauthier I, & Schultz RT (2002). Social interest and the development of cortical face specialization: what autism teaches us about face processing. Developmental Psychobiology, 40(3), 213–225.

- Grynberg D, Luminet O, Corneille O, Grèzes J, & Berthoz S (2010). Alexithymia in the interpersonal domain: A general deficit of empathy? Personality and Individual Differences, 49, 845–850.

- Halit H, de Haan M, & Johnson MH (2000). Modulation of event-related potentials by prototypical and atypical faces. Neuroreport, 11(9), 1871–1875.

- Hileman CM, Henderson H, Mundy P, Newell L, & Jaime M (2011). Developmental and Individual Differences on the P1 and N170 ERP Components in Children With and Without Autism. Developmental Neuropsychology, 36(2), 214–236. https://doi.org/10.1080/87565641.2010.549870

- Hill E, Berthoz S, & Frith U (2004). Brief report: Cognitive processing of own emotions in individuals with autistic spectrum disorder and in their relatives. Journal of Autism and Developmental Disorders, 34(2), 229–235.

- Hoekstra RA, Bartels M, Cath DC, & Boomsma DI (2008). Factor Structure, Reliability and Criterion Validity of the Autism-Spectrum Quotient (AQ): A Study in Dutch Population and Patient Groups. Journal of Autism and Developmental Disorders, 38(8), 1555–1566. https://doi.org/10.1007/s10803-008-0538-x

- Insel T, Cuthbert B, Garvey M, Heinssen R, Pine DS, Quinn K, … Wang P (2010). Research domain criteria (RDoC): toward a new classification framework for research on mental disorders. American Journal of Psychiatry. https://doi.org/10.1176/appi.ajp.2010.09091379

- Itier RJ, & Taylor MJ (2004). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb Cortex, 14(2), 132–142.

- Johnson MH, Dziurawiec S, Ellis H, & Morton J (1991). Newborns’ preferential tracking of face-like stimuli and its subsequent decline. Cognition, 40(1–2), 1–19.

- Kano M, Fukudo S, Gyoba J, Kamachi M, Tagawa M, Mochizuki H, … Yanai K (2003). Specific brain processing of facial expressions in people with alexithymia: an H₂¹⁵O-PET study. Brain, 126(6), 1474–1484. https://doi.org/10.1093/brain/awg131

- Lane RD, Sechrest L, Reidel R, Weldon V, Kaszniak A, & Schwartz GE (1996). Impaired verbal and nonverbal emotion recognition in alexithymia. Psychosom Med, 58, 203–210.

- Lerner MD, McPartland JC, & Morris JP (2013). Multimodal emotion processing in autism spectrum disorders: An event-related potential study. Dev Cogn Neurosci, 3, 11–21. https://doi.org/10.1016/j.dcn.2012.08.005

- Liu J, Harris A, & Kanwisher N (2002). Stages of processing in face perception: an MEG study. Nat Neurosci, 5(9), 910–916.

- Luo W, Feng W, He W, Wang NY, & Luo YJ (2010). Three stages of facial expression processing: ERP study with rapid serial visual presentation. Neuroimage, 49, 1857–1867. https://doi.org/10.1016/j.neuroimage.2009.09.018

- McCleery JP, Akshoomoff N, Dobkins KR, & Carver LJ (2009). Atypical Face Versus Object Processing and Hemispheric Asymmetries in 10-Month-Old Infants at Risk for Autism. Biological Psychiatry, 66(10), 950–957. https://doi.org/10.1016/j.biopsych.2009.07.031

- McDonald PW, & Prkachin KM (1990). The expression and perception of facial emotion in alexithymia: a pilot study. Psychosomatic Medicine, 52(2), 199–210.

- McPartland J, Dawson G, Webb SJ, Panagiotides H, & Carver LJ (2004). Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. J Child Psychol Psychiatry, 45, 1235–1245. https://doi.org/10.1111/j.1469-7610.2004.00318.x

- McPartland J, & Pelphrey K (2012). The Implications of Social Neuroscience for Social Disability. Journal of Autism and Developmental Disorders, 42, 1256–1262. https://doi.org/10.1007/s10803-012-1514-z

- McPartland J, Wu J, Bailey CA, Mayes LC, Schultz RT, & Klin A (2011). Atypical neural specialization for social percepts in autism spectrum disorder. Social Neuroscience, 6(5–6), 436–451. https://doi.org/10.1080/17470919.2011.586880

- Meltzoff AN, & Moore MK (1977). Imitation of facial and manual gestures by human neonates. Science, 198(4312), 75–78. https://doi.org/10.1126/science.198.4312.75

- Meltzoff AN, & Moore MK (1989). Imitation in newborn infants: Exploring the range of gestures imitated and the underlying mechanisms. Developmental Psychology, 25(6), 954–962.

- Miu AC, Pană SE, & Avram J (2012). Emotional face processing in neurotypicals with autistic traits: Implications for the broad autism phenotype. Psychiatry Res, 198, 489–494. https://doi.org/10.1016/j.psychres.2012.01.024

- Naples A, Nguyen-Phuc A, Coffman M, Kresse A, Faja S, Bernier R, & McPartland JC (2014). A computer-generated animated face stimulus set for psychophysiological research. Behavior Research Methods, 47(2), 562–570. https://doi.org/10.3758/s13428-014-0491-x

- O’Connor K, Hamm JP, & Kirk IJ (2005). The neurophysiological correlates of face processing in adults and children with Asperger’s syndrome. Brain and Cognition, 59(1), 82–95.

- Ozonoff S, Pennington BF, & Rogers SJ (1990). Are there Emotion Perception Deficits in Young Autistic Children? Journal of Child Psychology and Psychiatry, 31(3), 343–361. https://doi.org/10.1111/j.1469-7610.1990.tb01574.x

- Parker PD, Prkachin KM, & Prkachin GC (2005). Processing of Facial Expressions of Negative Emotion in Alexithymia: The Influence of Temporal Constraint. Journal of Personality, 73(4), 1087–1107. https://doi.org/10.1111/j.1467-6494.2005.00339.x

- Prkachin G, Casey C, & Prkachin KM (2009). Alexithymia and perception of facial expressions of emotion. Personality and Individual Differences, 46, 412–417.

- Rump KM, Giovannelli JL, Minshew NJ, & Strauss MS (2009). The development of emotion recognition in individuals with autism. Child Development, 80(5), 1434–1447. https://doi.org/10.1111/j.1467-8624.2009.01343.x

- Salminen JK, Saarijärvi S, Aärelä E, Toikka T, & Kauhanen J (1999). Prevalence of alexithymia and its association with sociodemographic variables in the general population of Finland. Journal of Psychosomatic Research, 46(1), 75–82.

- Schneider W, Eschman A, & Zuccolotto A (2002). E-Prime User’s Guide. Pittsburgh: Psychology Software Tools, Inc.

- Sifneos PE (1972). Short-term psychotherapy and emotional crisis. Cambridge, MA: Harvard University Press.

- Sifneos PE (1973). The prevalence of ‘alexithymic’ characteristics in psychosomatic patients. Psychotherapy and Psychosomatics, 22, 255–262.

- Silani G, Bird G, Brindley R, Singer T, Frith C, & Frith U (2008). Levels of emotional awareness and autism: An fMRI study. Social Neuroscience, 3, 97–112.

- Szatmari P, Georgiades S, Duku E, Zwaigenbaum L, Goldberg J, & Bennett T (2008). Alexithymia in parents of children with autism spectrum disorder. Journal of Autism and Developmental Disorders, 38, 1859–1865.

- Taylor M (2002). Non-spatial attentional effects on P1. Clinical Neurophysiology, 113(12), 1903–1908.

- Taylor GJ, Bagby RM, & Parker JDA (1997). Disorders of affect regulation: Alexithymia in medical and psychiatric illness. Cambridge: Cambridge University Press.

- Taylor MJ, Edmonds GE, McCarthy G, & Allison T (2001). Eyes first! Eye processing develops before face processing in children. Neuroreport, 12(8), 1671–1676.

- Thomas KM, Drevets WC, Whalen PJ, Eccard CH, Dahl RE, Ryan ND, & Casey BJ (2001). Amygdala response to facial expressions in children and adults. Biological Psychiatry, 49(4), 309–316. https://doi.org/10.1016/S0006-3223(00)01066-0

- Tucker DM (1993). Spatial sampling of head electrical fields: the geodesic sensor net. Electroencephalogr Clin Neurophysiol, 87(3), 154–163.

- Turetsky BI, Kohler CG, Indersmitten T, Bhati MT, Charbonnier D, & Gur RC (2007). Facial emotion recognition in schizophrenia: When and why does it go awry? Schizophrenia Research, 94(1–3), 253–263. https://doi.org/10.1016/j.schres.2007.05.001

- Vorst HCM, & Bermond B (2001). Validity and reliability of the Bermond-Vorst Alexithymia Questionnaire. Personal Individ Differ, 30, 413–434.

- Woodbury-Smith MR, Robinson J, Wheelwright S, & Baron-Cohen S (2005). Screening Adults for Asperger Syndrome Using the AQ: A Preliminary Study of its Diagnostic Validity in Clinical Practice. Journal of Autism and Developmental Disorders, 35(3), 331–335. https://doi.org/10.1007/s10803-005-3300-7

- Wynn JK, Lee J, Horan WP, & Green MF (2008). Using event related potentials to explore stages of facial affect recognition deficits in schizophrenia. Schizophrenia Bulletin, 34, 679–687.

- Young AW, Rowland D, Calder AJ, Etcoff NL, Seth A, & Perrett DI (1997). Facial expression megamix: Tests of dimensional and category accounts of emotion recognition. Cognition, 63(3), 271–313. https://doi.org/10.1016/S0010-0277(97)00003-6