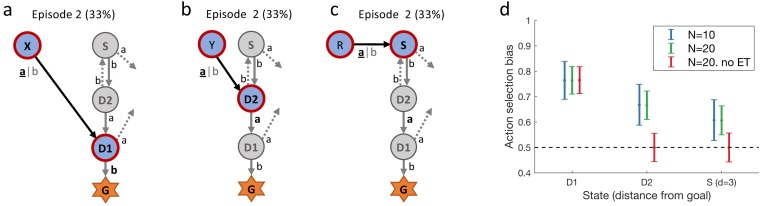

Figure 10. Simulated experiment (Q-λ model).

(a) and (b): Task structure (same as in Figure 2). Simulated agents performed episodes 1 and 2, and we recorded their decisions at states D1 and D2 in episode 2. (c): We also simulated the model’s behavior at state S by extending the structure of the (simulated) experiment with a new state R that leads to S. (d): We calculated the action-selection bias at states D1, D2 and S during episode 2 from the behavior of two sets of simulated agents (blue and green). The effect size (observed during episode 2 and shown in panel (d)) decreases as the delay between taking the action and receiving the reward in episode 1 increases. It is therefore smallest at state S. When the model’s eligibility trace parameter is set to 0 (red, no ET), the effect at state D1 is unaffected (see Equation 1), whereas the behavior at D2 and S is not reinforced. Horizontal dashed line: chance level (50%). Error bars: standard deviation of the simulated effect, obtained by estimating the mean bias 1000 times from the two sets of simulated agents with individually sampled model parameters.
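The qualitative pattern in panel (d) can be illustrated with a minimal sketch of a tabular update with eligibility traces. This is not the simulation code behind the figure. The linear path S → D2 → D1 → rewarded goal, the replacing-trace update rule, the two-action softmax, and all parameter values (`alpha`, `gamma`, `lam`, `beta`) are assumptions chosen for illustration, and the function names are hypothetical.

```python
import math

def simulate_episode1(lam, alpha=0.5, gamma=0.9, reward=1.0):
    """Return Q-values of the actions taken in episode 1 (assumed linear path).

    Assumption: the agent visits S, D2, D1 in this order and is rewarded
    only after the action taken at D1. Parameter values are illustrative.
    """
    path = ["S", "D2", "D1"]
    q = {s: 0.0 for s in path}      # Q-value of the chosen action per state
    trace = {s: 0.0 for s in path}  # eligibility trace per state-action pair

    for i, state in enumerate(path):
        # Decay all traces, then mark the current state-action pair.
        for k in trace:
            trace[k] *= gamma * lam
        trace[state] = 1.0

        is_last = i == len(path) - 1
        r = reward if is_last else 0.0
        next_q = 0.0 if is_last else q[path[i + 1]]
        delta = r + gamma * next_q - q[state]  # temporal-difference error

        # Every pair is updated in proportion to its eligibility trace.
        for k in q:
            q[k] += alpha * delta * trace[k]
    return q

def selection_bias(q_value, beta=3.0):
    """Probability of repeating the episode-1 action in a two-action softmax,
    assuming the alternative action has Q = 0."""
    return 1.0 / (1.0 + math.exp(-beta * q_value))

for label, lam in [("with ET", 0.8), ("no ET", 0.0)]:
    q = simulate_episode1(lam)
    biases = {s: round(selection_bias(v), 3) for s, v in q.items()}
    print(label, biases)
```

Under these assumptions, the bias with a nonzero trace parameter is largest at D1 and shrinks toward S, because the trace has decayed more by the time the reward arrives. With `lam = 0`, only D1 is reinforced and the bias at D2 and S stays at chance level (0.5).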