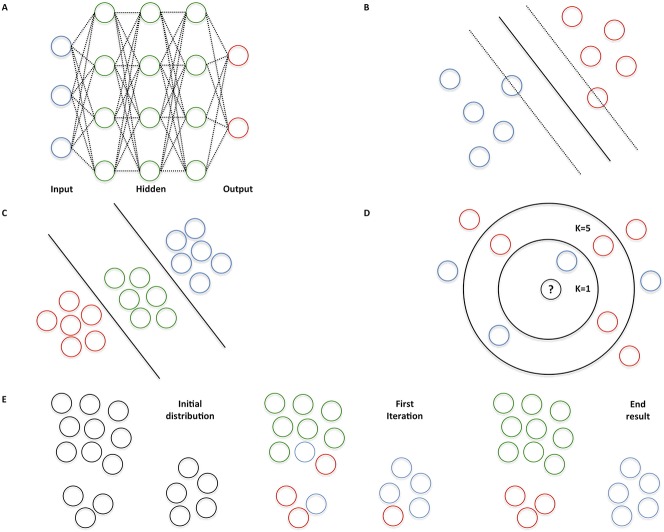

Fig. 1 A-E.

Two common types of AI techniques are supervised and unsupervised learning. Supervised learning refers to the iterative training of an algorithm on a dataset of input features paired with ground truth labels. For example, wrist radiographs are provided as input features labelled “fracture” or “no fracture”. After training, when presented with new wrist radiographs without a label, the algorithm predicts one of the two classes on its own. Unsupervised learning refers to training on data without ground truth labels. During the training phase, the algorithm attempts to find groupings that best organize the data (“clustering”). Generally, unsupervised learning requires more computational power and larger datasets, and its performance is more challenging to evaluate. Therefore, supervised algorithms are often used in medical applications. (A) Neural networks are loosely modeled on interconnected neurons in the human brain. The blue dots represent input features, whereas the red dots are the output of the algorithm. The green dots apply mathematical weights to the input features to predict an output. (B) A support vector machine defines an optimal separating “hyperplane” that maximizes the distance to the closest points of the two classes. (C) Linear discriminant analysis is a linear classification technique that distinguishes among two or more classes. (D) K-nearest neighbors classifies an input by a majority vote of its K closest neighbors. For instance, the unknown dot will be assigned blue if K = 1 (inner circle), whereas the unknown dot will be assigned red if K = 5 (outer circle). (E) K-means groups objects by their characteristics: it iteratively assigns each object to the nearest cluster center (centroid) and then recomputes the centroids, thereby minimizing the distance between each object and the centroid of its cluster. For example, three clusters are formed (K = 3): green, red, and blue dots.
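The majority vote described for panel D can be illustrated with a minimal sketch. The coordinates, the labels, and the function name `knn_predict` below are hypothetical and are not taken from the figure; they are chosen only so that the prediction changes between K = 1 and K = 5, as in the caption.

```python
from collections import Counter
import math


def knn_predict(train, query, k):
    """Classify `query` by majority vote among its k nearest training points."""
    # Sort the labelled points by Euclidean distance to the query and keep the k closest.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    # Count the labels among those neighbors and return the most frequent one.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D training points (assumed for illustration only).
train = [
    ((0.1, 0.0), "blue"),    # closest point to the query
    ((0.5, 0.5), "red"),
    ((-0.6, 0.4), "red"),
    ((0.4, -0.7), "red"),
    ((-0.9, -0.3), "blue"),
    ((3.0, 3.0), "blue"),    # far away, never among the 5 nearest
]
query = (0.0, 0.0)  # the "unknown dot"

print(knn_predict(train, query, k=1))  # single nearest neighbor decides
print(knn_predict(train, query, k=5))  # vote among the five nearest neighbors
```

With these assumed points, the single nearest neighbor is blue, whereas three of the five nearest neighbors are red, so the assigned class depends on K. An odd K is used here to avoid tied votes between two classes.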