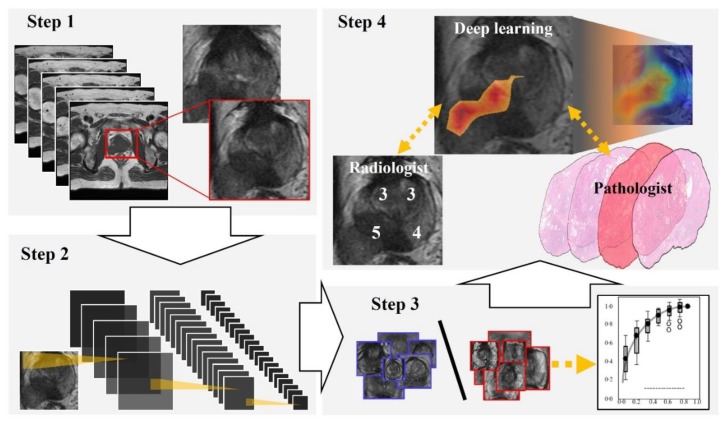

Figure 1.

Flowchart of our study. Step 1: We extracted a rectangular region of the prostate from the magnetic resonance (MR) images and resized it to 256 × 256 pixels. Step 2: To prepare an explainable model, we applied a well-established deep neural network to the MR images for cancer classification. Step 3: To evaluate the classification by the deep neural network, we constructed a receiver operating characteristic (ROC) curve and calculated the corresponding area under the curve (AUC). Step 4: Deep learning-focused regions were compared with both radiologist-identified targets on MR images and pathologist-identified locations through 3D reconstruction of pathological images.
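
Steps 1 and 3 of the flowchart can be illustrated with a minimal sketch. It is not the study's implementation: the function names `crop_and_resize` and `roc_auc`, the nearest-neighbor interpolation, and the list-of-rows image representation are all assumptions chosen to keep the example dependency-free. The AUC is computed through its rank interpretation, namely the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case.

```python
def crop_and_resize(image, top, left, height, width, size=256):
    """Crop a rectangle from a 2D image (a list of rows) and resize it
    to size x size pixels.

    Assumption: nearest-neighbor sampling; the interpolation method
    used in the study is not stated in the caption.
    """
    crop = [row[left:left + width] for row in image[top:top + height]]
    h, w = len(crop), len(crop[0])
    # Map each output pixel back to the nearest source pixel in the crop.
    return [
        [crop[(r * h) // size][(c * w) // size] for c in range(size)]
        for r in range(size)
    ]


def roc_auc(labels, scores):
    """Area under the ROC curve for binary labels (1 = cancer, 0 = non-cancer).

    Counts, over all positive/negative pairs, how often the positive
    case has the higher score; ties contribute one half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical toy data, for illustration only.
toy_image = [[r * 4 + c for c in range(4)] for r in range(4)]
patch = crop_and_resize(toy_image, top=1, left=1, height=2, width=2, size=4)
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

In practice, an image library would handle the resizing and a statistics library would compute the ROC curve and AUC; the pairwise formulation here is quadratic in the number of cases and is meant only to make the definition concrete.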