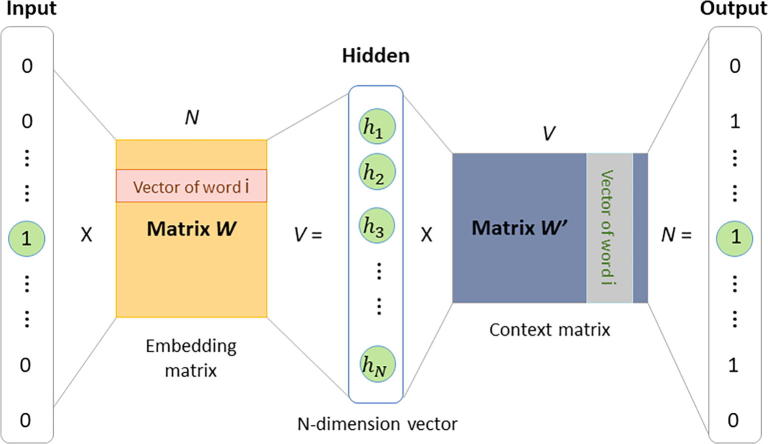

Fig. 2.

The skip-gram word embedding model. The lnc2vec and pro2vec models were trained with this architecture on genome-wide human lncRNA and protein sequences, respectively. Skip-gram is trained to predict the words surrounding a central word; after training, the weight matrix W of the hidden layer is taken as the word vectors, with one row per word.
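The two ideas in the caption can be illustrated with a minimal sketch; it is not the authors' training code. It assumes, purely for illustration, that sequences are split into overlapping k-mers that serve as "words"; the helper names (`kmers`, `skipgram_pairs`, `one_hot_times_W`), the window size, and the toy weights are all hypothetical. The sketch shows how (central word, surrounding word) training pairs are formed and why multiplying a one-hot input by W simply selects that word's row, which is why the rows of W serve as the word vectors.

```python
def kmers(seq, k=3):
    """Split a sequence into overlapping k-mers (assumed tokenization)."""
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


def skipgram_pairs(words, window=2):
    """Build (center, context) pairs: each word is used to predict its neighbours."""
    pairs = []
    for i, center in enumerate(words):
        lo = max(0, i - window)
        hi = min(len(words), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, words[j]))
    return pairs


def one_hot_times_W(index, W):
    """Multiply a one-hot input vector by W; the result equals row `index` of W."""
    one_hot = [1.0 if r == index else 0.0 for r in range(len(W))]
    dim = len(W[0])
    return [sum(one_hot[r] * W[r][c] for r in range(len(W))) for c in range(dim)]


words = kmers("AUGGCU", k=3)              # ['AUG', 'UGG', 'GGC', 'GCU']
pairs = skipgram_pairs(words, window=1)   # training pairs for the prediction task
vocab = {w: i for i, w in enumerate(sorted(set(words)))}

# Toy, untrained weights (vocabulary size x embedding dimension), for illustration only.
W = [[0.1 * (r + 1), -0.1 * (r + 1)] for r in range(len(vocab))]

# The hidden-layer activation for "UGG" is exactly its row of W, i.e. its word vector.
vector = one_hot_times_W(vocab["UGG"], W)
```

In an actual training run, W would be updated by gradient descent so that each central word's vector predicts its context words well; only the lookup step is shown here.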