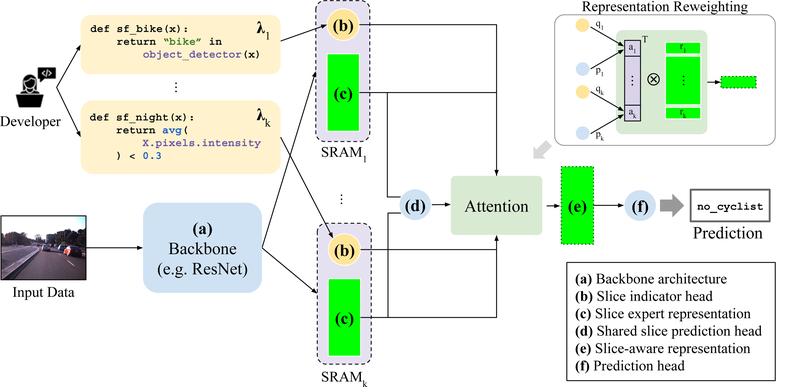

Figure 2: Model architecture.

A developer writes SFs ($\lambda_{i=1,\dots,k}$) over input data and specifies any (a) backbone architecture (e.g. ResNet [15], BERT [12]) as a feature extractor. The extracted features are shared across $k$ slice-residual attention modules; each learns a (b) slice indicator head, which is supervised by the corresponding $\lambda_i$, and a (c) slice expert representation, which is trained only on examples belonging to the slice using a (d) shared slice prediction head. An attention mechanism reweights these representations into a combined (e) slice-aware representation. A final (f) prediction head makes model predictions based on the slice-aware representation.
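The data flow through components (a) to (f) can be sketched as a single forward pass. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the backbone is replaced by one linear layer with a tanh, every head is linear, the task is binary, and the attention logits are taken to be the indicator logit plus the absolute slice prediction logit (the caption does not specify how the attention weights are computed). All names (`init_params`, `forward`, `masked_slice_loss`) and dimensions are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def init_params(d_in, d_feat, d_expert, k):
    # Hypothetical parameter layout; a real model would use ResNet, BERT, etc. for (a).
    scale = 0.1
    return {
        "backbone": rng.normal(size=(d_in, d_feat)) * scale,           # (a) stand-in
        "indicator": rng.normal(size=(k, d_feat)) * scale,             # (b) one head per slice
        "expert": rng.normal(size=(k, d_feat, d_expert)) * scale,      # (c) one expert per slice
        "shared_head": rng.normal(size=(d_expert,)) * scale,           # (d) shared across slices
        "final_head": rng.normal(size=(d_expert,)) * scale,            # (f)
    }


def forward(params, x):
    feats = np.tanh(x @ params["backbone"])                            # (a) shared features, (n, d_feat)
    ind_logits = feats @ params["indicator"].T                         # (b) slice membership logits, (n, k)
    experts = np.einsum("nf,kfe->nke", feats, params["expert"])        # (c) expert representations, (n, k, d_expert)
    slice_logits = experts @ params["shared_head"]                     # (d) per-slice task logits, (n, k)
    # Assumption: attention combines indicator logits with prediction confidence.
    attention = softmax(ind_logits + np.abs(slice_logits), axis=1)     # (n, k)
    slice_aware = np.einsum("nk,nke->ne", attention, experts)          # (e) reweighted combination, (n, d_expert)
    prediction_logits = slice_aware @ params["final_head"]             # (f) final task logits, (n,)
    return {
        "indicator_logits": ind_logits,
        "slice_logits": slice_logits,
        "attention": attention,
        "slice_aware": slice_aware,
        "prediction_logits": prediction_logits,
    }


def masked_slice_loss(slice_logits, y, membership):
    # Binary cross-entropy for the shared slice prediction head (d),
    # counted only on examples that belong to each slice (membership == 1).
    per_example = np.logaddexp(0.0, slice_logits) - y[:, None] * slice_logits
    return (per_example * membership).sum() / max(membership.sum(), 1)


params = init_params(d_in=8, d_feat=16, d_expert=4, k=3)
out = forward(params, rng.normal(size=(5, 8)))
```

The membership mask in `masked_slice_loss` stands in for the SF outputs: it is what restricts training of each expert to examples in its slice, while the indicator heads (b) would be trained against the same SF outputs with a separate loss not shown here.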