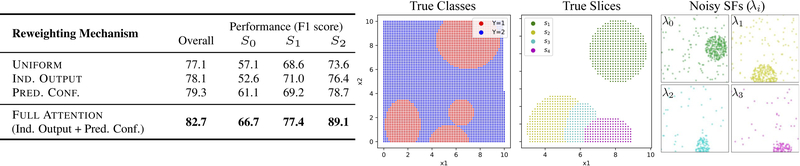

Figure 3: Architecture Ablation.

Using a synthetic two-class dataset (left) with four slices of randomly specified size, shape, and location (middle), we specify corresponding noisy SFs (right) and ablate specific model components by modifying the reweighting mechanism for slice expert representations. We compare overall and per-slice performance for four weighting schemes: uniform, indicator output, prediction confidence, and the proposed attention weighting, which uses all components. Our Full Attention approach performs most consistently on slices without worsening overall performance.
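To make the four ablated weighting schemes concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes each slice expert emits an indicator logit (slice membership) and a prediction logit whose magnitude serves as confidence, and that the full attention variant applies a softmax to their sum. The names `slice_weights` and `combine`, the mode strings, and the exact combination rule are hypothetical and chosen only for illustration.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    z = x - np.max(x)
    e = np.exp(z)
    return e / e.sum()


def slice_weights(indicator_logits, prediction_logits, mode):
    """Return one weight per slice expert under the chosen scheme.

    Assumed (hypothetical) definitions of the four ablations:
      uniform    -> every expert weighted equally
      indicator  -> softmax over slice-membership logits only
      confidence -> softmax over |prediction logit| only
      attention  -> softmax over the sum of both signals
    """
    q = np.asarray(indicator_logits, dtype=float)
    p = np.asarray(prediction_logits, dtype=float)
    if mode == "uniform":
        return np.full(q.shape, 1.0 / q.size)
    if mode == "indicator":
        return softmax(q)
    if mode == "confidence":
        return softmax(np.abs(p))
    if mode == "attention":
        return softmax(q + np.abs(p))
    raise ValueError(f"unknown mode: {mode}")


def combine(expert_reprs, weights):
    """Weighted sum of expert representations with shape (k, d) -> (d,)."""
    return np.asarray(weights) @ np.asarray(expert_reprs, dtype=float)


# Four slice experts, each with a 3-dimensional representation (toy values).
reprs = np.arange(12, dtype=float).reshape(4, 3)
q = [2.0, -1.0, 0.5, -3.0]   # indicator logits (toy values)
p = [1.5, -0.2, 0.1, 4.0]    # prediction logits (toy values)

for mode in ("uniform", "indicator", "confidence", "attention"):
    w = slice_weights(q, p, mode)
    print(mode, np.round(w, 3), np.round(combine(reprs, w), 3))
```

In this sketch the fourth expert is highly confident but its indicator says the example lies outside its slice, so the confidence-only scheme favors it while the indicator-only scheme suppresses it. The combined scheme balances the two signals, which is the kind of trade-off the ablation is probing.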