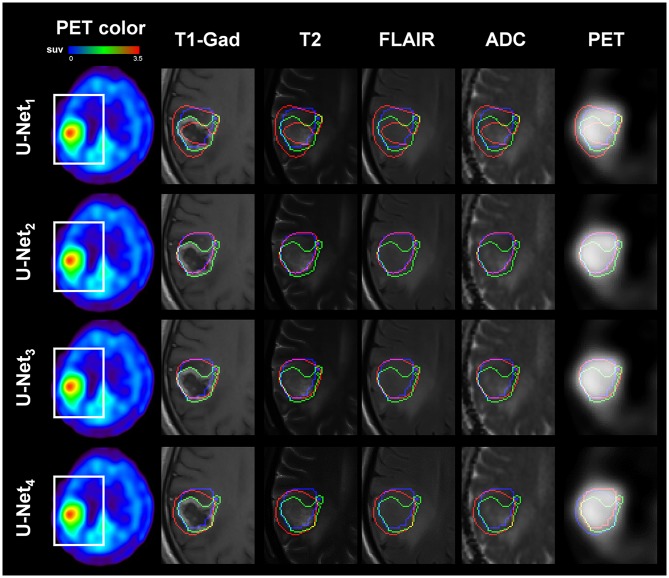

Figure 2.

Representative example of the AMT-PET-learned, MRI-based tumor volume PM(x) (voxels inside the red contour). Multi-modal MRI data of Patient No. 13 (images acquired with the Philips protocol) were analyzed separately by U-Net1(Siemens) (1st row), U-Net2(Philips) (2nd row), U-Net3 (3rd row), and U-Net4 (4th row). U-Net2(Philips) and U-Net3 learned the multi-modal MRI of Patient No. 13 and outperformed the other two U-Net systems in spatially matching PM(x) to the target, the AMT-PET tumor volume P(x) (voxels inside the blue contour): sensitivity/specificity/PPV/NPV = 0.65/1.00/0.40/1.00, 0.95/1.00/0.79/1.00, 0.94/1.00/0.75/1.00, and 0.91/1.00/0.68/1.00 for U-Net1(Siemens), U-Net2(Philips), U-Net3, and U-Net4, respectively. For comparison, the T1-Gad tumor volume M(x) (voxels inside the green contour) is superimposed. The white box indicates the region of interest from which the T1-Gad, T2, FLAIR, ADC map, and AMT-PET slices were captured to show the contours.
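The four overlap metrics quoted in the caption can be illustrated with a minimal sketch. It assumes that PM(x) and P(x) are available as binary voxel masks of identical shape and that the metrics are computed voxel-wise from the resulting confusion matrix; the caption does not state the exact evaluation procedure, so the function name `overlap_metrics` and the toy masks are hypothetical and serve only as an illustration.

```python
import numpy as np


def overlap_metrics(pred_mask, target_mask):
    """Voxel-wise sensitivity, specificity, PPV, and NPV of a predicted
    binary mask (assumed here to play the role of PM(x)) against a
    target binary mask (assumed here to play the role of P(x))."""
    pred = np.asarray(pred_mask, dtype=bool)
    target = np.asarray(target_mask, dtype=bool)
    if pred.shape != target.shape:
        raise ValueError("masks must have the same shape")

    # Confusion-matrix counts over all voxels.
    tp = np.count_nonzero(pred & target)    # predicted tumor, truly tumor
    fp = np.count_nonzero(pred & ~target)   # predicted tumor, truly background
    fn = np.count_nonzero(~pred & target)   # missed tumor voxels
    tn = np.count_nonzero(~pred & ~target)  # correctly rejected background

    def ratio(num, den):
        # Return NaN instead of dividing by zero when a class is empty.
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


# Hypothetical 2 x 4 toy masks, not data from the study.
toy_pred = np.array([[1, 1, 0, 0],
                     [0, 0, 0, 0]])
toy_target = np.array([[1, 0, 0, 0],
                       [1, 0, 0, 0]])
toy_metrics = overlap_metrics(toy_pred, toy_target)
```

Under this voxel-wise definition, specificity and NPV approach 1.00 whenever background voxels greatly outnumber tumor voxels, so sensitivity and PPV tend to be the more discriminating of the four values when comparing the U-Net systems.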