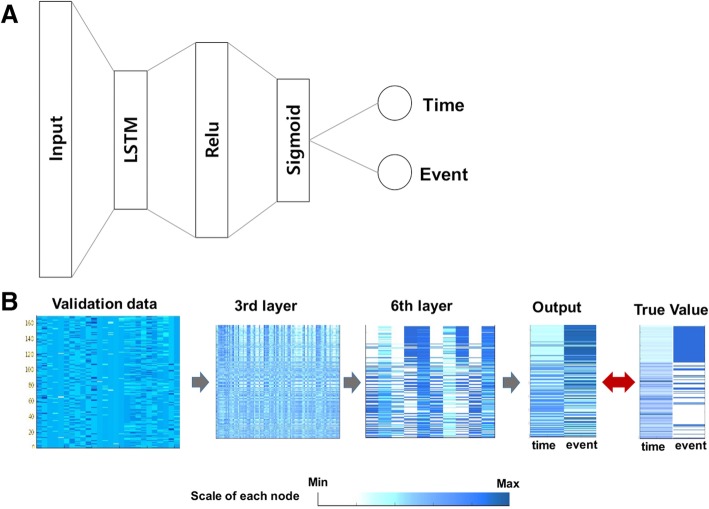

Fig. 2.

The architecture of the basic learning unit of the RED_SNN model. (a) The basic unit comprised 8 layers, including two long short-term memory (LSTM) layers. The input layer comprised 28 nodes representing 26 input features and 2 latent survival features. The output layer comprised 2 nodes with a linear activation function, representing time and event. Because the two target nodes have different characteristics, we did not use the softmax function. The other layers were composed of fully connected nodes with a rectified linear unit activation function. (b) The validation data (n = 169) were fed into the pre-trained network. The number of nodes was gradually reduced across the hidden layers. The output time and event were compared with the true target values.
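
The layer layout described in the caption can be written down as a small specification. The sketch below is only illustrative and rests on several assumptions that the caption does not state: that the count of 8 layers includes the input and output layers, that the two LSTM layers come first among the hidden layers, and that the hidden widths are the hypothetical values `(24, 20, 16, 12, 8, 4)`. The names `LayerSpec` and `build_unit_spec` are likewise invented for this example. Only the 28 input nodes, the 2 linear output nodes, the two LSTM layers, and the rectified linear unit activation of the remaining layers come from the caption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerSpec:
    kind: str        # "input", "lstm", "dense", or "output"
    units: int       # number of nodes in the layer
    activation: str  # activation named in the caption, where one is given


def build_unit_spec(hidden_units=(24, 20, 16, 12, 8, 4)):
    """Return a layer-by-layer description of one basic learning unit.

    The hidden widths are hypothetical; the caption only says that the
    number of nodes is gradually reduced across the hidden layers.
    """
    if len(hidden_units) != 6:
        raise ValueError("expected 6 hidden layers (2 LSTM + 4 dense)")

    # 26 input features + 2 latent survival features = 28 input nodes.
    layers = [LayerSpec("input", 26 + 2, "n/a")]

    for i, units in enumerate(hidden_units):
        if i < 2:
            # Assumption: the two LSTM layers are the first hidden layers.
            layers.append(LayerSpec("lstm", units, "internal gates"))
        else:
            # Remaining hidden layers: fully connected with ReLU.
            layers.append(LayerSpec("dense", units, "relu"))

    # Two linear output nodes (time and event); no softmax is applied.
    layers.append(LayerSpec("output", 2, "linear"))
    return layers


spec = build_unit_spec()
for layer in spec:
    print(f"{layer.kind:<6} units={layer.units:<3} activation={layer.activation}")
```

Writing the layout as data rather than as a framework-specific model keeps the sketch independent of any particular deep learning library; the same list could be translated into layers of whichever framework is used.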